AI Art Generation Handbook/ControlNet/Pose

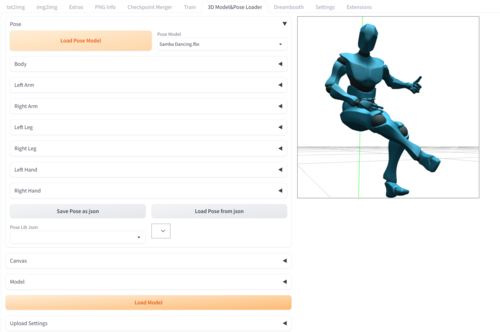


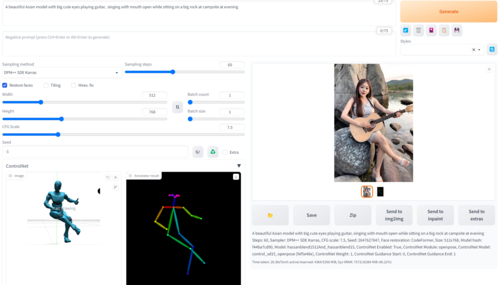

OpenPose conditioning in ControlNet was introduced in the paper "Adding Conditional Control to Text-to-Image Diffusion Models" by Stanford researchers Lvmin Zhang and Maneesh Agrawala, published in 2023.

It uses human pose estimation, which detects key points on the human body such as joints and facial landmarks, as a conditioning input for image generation models. Users control the pose and position of human figures in the generated image by supplying a skeleton-like representation of the desired pose.
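To make the "skeleton-like representation" concrete, the sketch below rasterises a set of 2D keypoints into a skeleton map. It is a simplified illustration, not ControlNet's own preprocessor: it assumes the 18-keypoint COCO-style ordering used by OpenPose body models, and the helper names `render_pose_map` and `draw_line` are hypothetical. The skeleton maps actually fed to ControlNet are colour-coded RGB images; here the output is reduced to a small integer grid (0 = background, 1 = limb, 2 = joint).

```python
# Minimal sketch (hypothetical helpers, not ControlNet's preprocessor):
# turn 2D body keypoints into a skeleton map. Assumes the 18-keypoint
# COCO-style layout: 0 nose, 1 neck, 2-4 right arm, 5-7 left arm,
# 8-10 right leg, 11-13 left leg, 14-17 eyes and ears.
from typing import Dict, List, Tuple

Point = Tuple[int, int]  # (x, y) in pixel coordinates

# Pairs of keypoint indices joined by a limb segment.
LIMBS: List[Tuple[int, int]] = [
    (1, 2), (2, 3), (3, 4),        # neck -> right shoulder -> elbow -> wrist
    (1, 5), (5, 6), (6, 7),        # neck -> left shoulder -> elbow -> wrist
    (1, 8), (8, 9), (9, 10),       # neck -> right hip -> knee -> ankle
    (1, 11), (11, 12), (12, 13),   # neck -> left hip -> knee -> ankle
    (1, 0), (0, 14), (14, 16), (0, 15), (15, 17),  # head: nose, eyes, ears
]


def draw_line(canvas: List[List[int]], p: Point, q: Point, value: int) -> None:
    """Bresenham line from p to q, writing `value` into canvas[y][x]."""
    (x0, y0), (x1, y1) = p, q
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        # Only write pixels that fall inside the canvas.
        if 0 <= y0 < len(canvas) and 0 <= x0 < len(canvas[0]):
            canvas[y0][x0] = value
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def render_pose_map(keypoints: Dict[int, Point], width: int, height: int) -> List[List[int]]:
    """Return a height x width grid: 0 = background, 1 = limb, 2 = joint.

    Keypoints the pose estimator did not detect are simply absent from the
    dict; any limb touching a missing keypoint is skipped.
    """
    canvas = [[0] * width for _ in range(height)]
    for a, b in LIMBS:
        if a in keypoints and b in keypoints:
            draw_line(canvas, keypoints[a], keypoints[b], 1)
    # Draw joints last so they sit on top of the limb segments.
    for x, y in keypoints.values():
        if 0 <= y < height and 0 <= x < width:
            canvas[y][x] = 2
    return canvas


if __name__ == "__main__":
    # Neck at (5, 1) and right hip at (5, 8): a single vertical torso segment.
    grid = render_pose_map({1: (5, 1), 8: (5, 8)}, width=10, height=10)
    for row in grid:
        print("".join(".#o"[v] for v in row))
```

Skipping limbs whose endpoints are missing mirrors how a partially visible figure (for example, one cropped at the waist) still yields a usable, incomplete skeleton rather than an error.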

The concept is not entirely new: it builds on earlier work by researchers at Carnegie Mellon University, described in the paper "OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields".