Abstract Algebra/Printable version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Abstract_Algebra

Sets


In the so-called naive set theory, which is sufficient for the purpose of studying abstract algebra, the notion of a set is not rigorously defined. We describe a set as a well-defined aggregation of objects, which are referred to as members or elements of the set. If a certain object is an element of a set, it is said to be contained in that set. The elements of a set can be anything at all, but in the study of abstract algebra, elements are most frequently numbers or mathematical structures. The elements of a set completely determine the set, and so sets with the same elements as each other are equal. Conversely, sets which are equal contain the same elements.

For an element $x$ and a set $X$, we can say either $x \in X$, that is, $x$ is contained in $X$, or $x \notin X$, that is, $x$ is not contained in $X$. To state that multiple elements $x_1, x_2, \ldots, x_n$ are contained in $X$, we write $x_1, x_2, \ldots, x_n \in X$.

The axiom of extensionality

Using this notation and the symbol $\Rightarrow$, which represents logical implication, we can restate the definition of equality for two sets $A$ and $B$ as follows:

- $A = B$ if and only if $x \in A \Rightarrow x \in B$ and $x \in B \Rightarrow x \in A$.

This is known as the axiom of extensionality.
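Python's built-in `set` type can illustrate the axiom, though only on finite sets of hashable objects. The following is a minimal sketch. The helper name `equal_by_extensionality` is hypothetical, and the helper tests the two implications literally.

```python
def equal_by_extensionality(a: set, b: set) -> bool:
    """A = B iff (x in A => x in B) and (x in B => x in A)."""
    return all(x in b for x in a) and all(x in a for x in b)


A = {1, 2, 3}
B = {3, 2, 1, 1}  # listing order and repetition do not change the elements

print(equal_by_extensionality(A, B))  # True
print(A == B)                          # True: built-in set equality agrees
```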

Comprehension notation

If it is not possible to list the elements of a set, it can be defined by giving a property that only its elements possess. The set of all objects $x$ with some property $P(x)$ can be denoted by $\{x : P(x)\}$. Similarly, the set of all elements $x$ of a set $X$ with some property $P(x)$ can be denoted by $\{x \in X : P(x)\}$. The colon : here is read as "such that". The vertical bar | is synonymous with the colon in similar contexts, as in $\{x \in X \mid P(x)\}$. This notation will appear quite often in the rest of this book, so it is important for readers to familiarize themselves with it now.

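As an example of this notation, the set of integers can be written as $\mathbb{Z} = \{x : x \in \mathbb{N}_0 \text{ or } -x \in \mathbb{N}_0\}$, and the set of even integers can be written as $\{x \in \mathbb{Z} : x = 2k \text{ for some } k \in \mathbb{Z}\}$.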
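Set-builder notation corresponds closely to Python's set comprehensions. A program cannot hold all of $\mathbb{Z}$, so this sketch assumes a finite window of integers as a stand-in. The name `Z_window` is illustrative only.

```python
# Hypothetical finite stand-in for Z: the integers from -10 to 10.
Z_window = range(-10, 11)

# {x in Z : x = 2k for some k in Z}, restricted to the window.
# Python's % returns a result with the sign of the divisor, so x % 2 == 0
# also detects negative even numbers correctly.
evens = {x for x in Z_window if x % 2 == 0}
print(sorted(evens))  # [-10, -8, -6, -4, -2, 0, 2, 4, 6, 8, 10]
```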

Set inclusion

For two sets $A$ and $B$, we define set inclusion as follows: $A$ is included in, or a subset of, $B$ if and only if every member of $A$ is a member of $B$. In other words,

$A \subseteq B \iff (x \in A \Rightarrow x \in B)$,

where the symbol $\subseteq$ denotes "is a subset of", and $\iff$ denotes "if and only if".

By the aforementioned axiom of extensionality, one finds that $A = B \iff (A \subseteq B \land B \subseteq A)$.

The empty set

One can define an empty set, written $\emptyset$, such that $\forall x : x \notin \emptyset$, where $\forall$ denotes universal quantification (read as "for all" or "for every"). In other words, the empty set is defined as the set which contains no elements. The empty set can be shown to be unique.

Since the empty set contains no elements, it can be shown to be a subset of every set. Similarly, no set but the empty set is a subset of the empty set.

Proper set inclusion

For two sets $A$ and $B$, we can define proper set inclusion as follows: $A$ is a proper subset of $B$ if and only if $A$ is a subset of $B$ and $A$ does not equal $B$. In other words, there is at least one member of $B$ not contained in $A$:

- $A \subsetneq B \iff (A \subseteq B) \land (A \neq B)$,

where the symbol $\subsetneq$ denotes "is a proper subset of" and the symbol $\land$ denotes logical and.
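The two kinds of inclusion translate directly into code. This is a minimal sketch on finite Python sets. The helper names `is_subset` and `is_proper_subset` are hypothetical, and Python's own `<=` and `<` operators are shown for comparison.

```python
def is_subset(a: set, b: set) -> bool:
    """A ⊆ B: every member of A is a member of B."""
    return all(x in b for x in a)


def is_proper_subset(a: set, b: set) -> bool:
    """A ⊊ B: A ⊆ B and A ≠ B."""
    return is_subset(a, b) and a != b


A, B = {1, 2}, {1, 2, 3}
print(is_subset(A, B), is_proper_subset(A, B))  # True True
print(is_subset(B, B), is_proper_subset(B, B))  # True False
print(is_subset(set(), A))                      # True: ∅ is a subset of every set
# Python's built-in operators agree: <= is ⊆ and < is ⊊.
print(A <= B, A < B, B < B)                     # True True False
```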

Cardinality of sets

The cardinality of a set $A$, denoted by $|A|$, can be said informally to be a measure of the number of elements in $A$. However, this description is only rigorously accurate for finite sets. To find the cardinality of infinite sets, more sophisticated tools are needed.

Set intersection

For sets $A$ and $B$, we define the intersection of $A$ and $B$ as the set which contains all elements which are common to both $A$ and $B$. Symbolically, this can be stated as follows:

- $A \cap B = \{x : x \in A \land x \in B\}$.

Because every element of $A \cap B$ is an element of $A$ and an element of $B$, $A \cap B$ is, by the definition of set inclusion, a subset of both $A$ and $B$.

If the sets $A$ and $B$ have no elements in common, they are said to be disjoint sets. Equivalently, $A$ and $B$ are disjoint if $A \cap B = \emptyset$.

Set intersection is an associative and commutative operation; that is, for any sets $A$, $B$, and $C$, $(A \cap B) \cap C = A \cap (B \cap C)$ and $A \cap B = B \cap A$.

By the definition of intersection, one can find that $A \cap A = A$ and $A \cap \emptyset = \emptyset$. Furthermore, $A \subseteq B \iff A \cap B = A$.

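One can take the intersection of more than two sets at once; since set intersection is associative and commutative, the order in which these intersections are evaluated is irrelevant. If $A_i$ are sets for every $i \in I$, we can denote the intersection of all $A_i$ by

$\bigcap_{i \in I} A_i = \{x : x \in A_i \text{ for all } i \in I\}$.

In cases like this, $I$ is called an index set, and the $A_i$ are said to be indexed by $I$.

In the case of $I = \{1, 2, \ldots, n\}$ one can either write $A_1 \cap A_2 \cap \cdots \cap A_n$ or

- $\bigcap_{i=1}^{n} A_i$.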
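An indexed family can be modeled as a dictionary from indices to sets, so that the intersection becomes a fold of the binary operation over the family. This is a minimal sketch, assuming a finite and nonempty index set, because an intersection over an empty index set would have to be the whole universe. The names `indexed_intersection` and `family` are hypothetical.

```python
from functools import reduce


def indexed_intersection(family: dict) -> set:
    """Intersection of the sets family[i] over a nonempty index set I = family.keys()."""
    if not family:
        raise ValueError("this sketch only handles nonempty index sets")
    sets = list(family.values())
    # Fold the binary intersection over the family; by associativity and
    # commutativity the order of the fold does not affect the result.
    return reduce(lambda acc, s: acc & s, sets[1:], set(sets[0]))


# Hypothetical family: A_i = multiples of i between 1 and 60, for i in I = {2, 3, 5}.
family = {i: {n for n in range(1, 61) if n % i == 0} for i in (2, 3, 5)}
print(sorted(indexed_intersection(family)))  # [30, 60]
```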

Set union

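For sets $A$ and $B$, we define the union of $A$ and $B$ as the set which contains all elements which are in either $A$ or $B$ or both. Symbolically,

- $A \cup B = \{x : x \in A \lor x \in B\}$.

Since the union of sets $A$ and $B$ contains the elements of $A$ and $B$, $A \subseteq A \cup B$ and $B \subseteq A \cup B$.

Like set intersection, set union is an associative and commutative operation; for any sets $A$, $B$, and $C$, $(A \cup B) \cup C = A \cup (B \cup C)$ and $A \cup B = B \cup A$.

By the definition of union, one can find that $A \cup A = A \cup \emptyset = A$. Furthermore, $A \subseteq B \iff A \cup B = B$.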

Just as with set intersection, one can take the union of more than two sets at once; since set union is associative and commutative, the order in which these unions are evaluated is irrelevant. Let $A_i$ be sets for all $i \in I$. Then the union of all the $A_i$ is denoted by

$\bigcup_{i \in I} A_i = \{x : \exists i \in I,\ x \in A_i\}$.

(Where $\exists$ may be read as "there exists".)

For the union of a finite number of sets $A_1, \ldots, A_n$, that is, $I = \{1, \ldots, n\}$, one can either write $A_1 \cup A_2 \cup \cdots \cup A_n$ or abbreviate this as

- $\bigcup_{i=1}^{n} A_i$.
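The indexed union can be accumulated in the same dictionary-of-sets model. In this minimal sketch the empty family gives $\emptyset$, because no element lies in some $A_i$ when there are no $A_i$. The names `indexed_union` and `family` are hypothetical.

```python
def indexed_union(family: dict) -> set:
    """Union of the sets family[i] over I = family.keys(); the empty family gives ∅."""
    result = set()
    for s in family.values():
        result |= s  # x ends up in the result iff x ∈ A_i for some i
    return result


# Hypothetical family A_i = {i, 10 i} for i in I = {1, 2, 3}.
family = {i: {i, 10 * i} for i in range(1, 4)}
print(sorted(indexed_union(family)))  # [1, 2, 3, 10, 20, 30]
```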

Distributive laws

Set union and set intersection are distributive with respect to each other. That is,

- $A \cap (B \cup C) = (A \cap B) \cup (A \cap C)$ and

- $A \cup (B \cap C) = (A \cup B) \cap (A \cup C)$.
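On a small finite universe, both laws can be checked exhaustively for every triple of subsets. Such a check is evidence rather than a proof, which must argue element by element as above. The helper names `all_subsets` and `distributive_laws_hold` are hypothetical.

```python
from itertools import combinations, product


def all_subsets(universe: set) -> list:
    """Every subset of a finite universe (its power set), as a list of sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def distributive_laws_hold(universe: set) -> bool:
    """Check both distributive laws for every triple of subsets of the universe."""
    subsets = all_subsets(universe)
    for A, B, C in product(subsets, repeat=3):
        if A & (B | C) != (A & B) | (A & C):
            return False
        if A | (B & C) != (A | B) & (A | C):
            return False
    return True


print(len(all_subsets({1, 2, 3})))         # 8
print(distributive_laws_hold({1, 2, 3}))   # True
```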

Cartesian product

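The Cartesian product of sets $A$ and $B$, denoted by $A \times B$, is the set of all ordered pairs which can be formed with the first object in the ordered pair being an element of $A$ and the second being an element of $B$. This can be expressed symbolically as

- $A \times B = \{(a, b) : a \in A \land b \in B\}$.

Since different ordered pairs result when one exchanges the objects in the pair, the Cartesian product is not commutative. The Cartesian product is also not associative, since $((a, b), c)$ and $(a, (b, c))$ are different objects. The following identities hold for the Cartesian product for any sets $A$, $B$, $C$:

- $A \times (B \cap C) = (A \times B) \cap (A \times C)$,

- $A \times (B \cup C) = (A \times B) \cup (A \times C)$,

- $(A \cap B) \times C = (A \times C) \cap (B \times C)$,

- $(A \cup B) \times C = (A \times C) \cup (B \times C)$.

The Cartesian product of any set and the empty set yields the empty set; symbolically, for any set $A$, $A \times \emptyset = \emptyset \times A = \emptyset$.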

The Cartesian product can easily be generalized to the n-ary Cartesian product, which is also denoted by $\times$. The n-ary Cartesian product forms ordered n-tuples from the elements of $n$ sets. Specifically, for sets $A_1, A_2, \ldots, A_n$,

- $A_1 \times A_2 \times \cdots \times A_n = \{(a_1, a_2, \ldots, a_n) : a_i \in A_i \text{ for } i = 1, \ldots, n\}$.

This can be abbreviated as

- $\prod_{i=1}^{n} A_i$.

In the n-ary Cartesian product, each $a_i$ is referred to as the $i$-th coordinate of $(a_1, a_2, \ldots, a_n)$.

In the special case where all the factors are the same set $A$, we can generalize even further. Let $A^I$ be the set of all functions $f: I \to A$. Then, in analogy with the above, $A^I$ is effectively the set of "$I$-tuples" of elements in $A$, and for each such function $f$ and each $i \in I$, we call $f(i)$ the $i$-th coordinate of $f$. As one might expect, in the simple case when $I = \{1, 2, \ldots, n\}$ for an integer $n$, this construction is equivalent to $A \times A \times \cdots \times A$ ($n$ factors), which we can abbreviate further as $A^n$. We also have the important case of $I = \mathbb{N}$, giving rise to the set of all infinite sequences of elements of $A$, which we can denote by $A^{\mathbb{N}}$. We will need this construction later, in particular when dealing with polynomial rings.
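For finite sets, Python's `itertools.product` enumerates exactly the ordered tuples of an n-ary Cartesian product. This minimal sketch also encodes $A^I$ as a list of dictionaries from $I$ to $A$. That encoding, and the names `cartesian` and `A_to_I`, are illustrative assumptions.

```python
from itertools import product


def cartesian(*sets) -> set:
    """A_1 × ... × A_n as a set of n-tuples."""
    return set(product(*sets))


A, B = {1, 2}, {"x"}
print(sorted(cartesian(A, B)))               # [(1, 'x'), (2, 'x')]
print(cartesian(A, B) == cartesian(B, A))    # False: (1, 'x') is not ('x', 1)
print(cartesian(A, set()))                   # set(): A × ∅ = ∅
print(len(cartesian(A, A, A)))               # 8 ordered triples in A^3

# A^I as the functions I -> A, each stored as a dict (a hypothetical encoding).
I = ["p", "q"]
A_to_I = [dict(zip(I, values)) for values in product(sorted(A), repeat=len(I))]
print(len(A_to_I))                           # 4 = |A| ** |I|
```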

Disjoint union

Let $A$ and $B$ be any two sets. We then define their disjoint union, denoted $A \sqcup B$, as follows: first create copies $A'$ and $B'$ of $A$ and $B$ such that $A' \cap B' = \emptyset$. Then define $A \sqcup B = A' \cup B'$. Notice that, unlike the other operations defined so far, this definition is not explicit. The definition does not output a single set, but rather a family of sets. However, these are all "the same" in a sense which will be defined soon: there exist bijective functions between them.

Luckily, if a disjoint union is needed for explicit computation, one can easily be constructed, for example $A \sqcup B = (A \times \{0\}) \cup (B \times \{1\})$.
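The explicit construction $(A \times \{0\}) \cup (B \times \{1\})$ amounts to tagging each element with the set it came from. In this minimal sketch the hypothetical helper `disjoint_union` implements that tagging. Elements shared by $A$ and $B$ survive once per copy.

```python
def disjoint_union(a: set, b: set) -> set:
    """A ⊔ B built explicitly as (A × {0}) ∪ (B × {1})."""
    return {(x, 0) for x in a} | {(y, 1) for y in b}


A, B = {1, 2}, {2, 3}
U = disjoint_union(A, B)
print(len(A | B), len(U))  # 3 4: the shared element 2 appears once per copy
print(sorted(U))           # [(1, 0), (2, 0), (2, 1), (3, 1)]
```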

Set difference

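The set difference, or relative set complement, of sets $A$ and $B$, denoted by $A \setminus B$, is the set of elements contained in $A$ which are not contained in $B$. Symbolically,

- $A \setminus B = \{x : x \in A \land x \notin B\}$.

By the definition of set difference, $A \setminus B \subseteq A$.

The following identities hold for any sets $A$, $B$, $C$:

- $C \setminus (A \cap B) = (C \setminus A) \cup (C \setminus B)$,

- $C \setminus (A \cup B) = (C \setminus A) \cap (C \setminus B)$,

- $C \setminus (B \setminus A) = (A \cap C) \cup (C \setminus B)$,

- $(B \setminus A) \cap C = (B \cap C) \setminus A = B \cap (C \setminus A)$,

- $(B \setminus A) \cup C = (B \cup C) \setminus (A \setminus C)$,

- $A \setminus B = A \setminus (A \cap B)$,

- $A \setminus A = \emptyset$,

- $\emptyset \setminus A = \emptyset$,

- $A \setminus \emptyset = A$.

The set difference of two Cartesian products can be found as $(A \times B) \setminus (C \times D) = \big((A \setminus C) \times B\big) \cup \big(A \times (B \setminus D)\big)$.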
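The identity for the difference of two Cartesian products can be spot-checked on small hypothetical sets. Python's `-` operator on sets is the set difference. This is a single example, not a proof.

```python
from itertools import product


def cartesian(x: set, y: set) -> set:
    return set(product(x, y))


# Hypothetical example sets.
A, B = {1, 2, 3}, {"a", "b"}
C, D = {2}, {"b", "c"}

lhs = cartesian(A, B) - cartesian(C, D)
rhs = cartesian(A - C, B) | cartesian(A, B - D)
print(lhs == rhs)  # True
print(len(lhs))    # 5: only (2, 'b') is removed from the 6 pairs of A × B
```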

The universal set and set complements

We define some set $U$, of which every set under consideration is a subset, as the universal set, or universe. The complement of any set is then defined to be the set difference of the universal set and that set. That is, for any set $A$, the complement of $A$ is given by $A^c = U \setminus A$. The following identities involving set complements hold true for any sets $A$ and $B$:

- De Morgan's laws for sets:

- $(A \cup B)^c = A^c \cap B^c$,

- $(A \cap B)^c = A^c \cup B^c$,

- Double complement law:

- $(A^c)^c = A$,

- Complement properties:

- $A \cup A^c = U$,

- $A \cap A^c = \emptyset$,

- $\emptyset^c = U$,

- $U^c = \emptyset$,

- $A \subseteq B \iff B^c \subseteq A^c$.

The set complement can be related to the set difference with the identities $A \setminus B = A \cap B^c$ and $(A \setminus B)^c = A^c \cup B$.
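With an explicitly chosen universe, the complement is an ordinary set difference, and the laws above can be spot-checked. This sketch assumes the hypothetical universe $U = \{0, \ldots, 9\}$ and a hypothetical `complement` helper. One example does not prove the identities.

```python
U = set(range(10))  # hypothetical universe for this example


def complement(a: set) -> set:
    """A^c = U minus A, relative to the universe U above."""
    return U - a


A, B = {1, 2, 3}, {3, 4}

# De Morgan's laws
assert complement(A | B) == complement(A) & complement(B)
assert complement(A & B) == complement(A) | complement(B)
# Double complement and complement properties
assert complement(complement(A)) == A
assert A | complement(A) == U and A & complement(A) == set()
# Relating complement and set difference
assert A - B == A & complement(B)
assert complement(A - B) == complement(A) | B
print("all checks passed")
```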

Symmetric difference

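For sets $A$ and $B$, the symmetric set difference of $A$ and $B$, denoted by $A \triangle B$ or by $A \ominus B$, is the set of elements which are contained either in $A$ or in $B$ but not in both of them. Symbolically, it can be defined as

$A \triangle B = \{x : (x \in A \land x \notin B) \lor (x \notin A \land x \in B)\}$.

More commonly, it is represented as

- $A \triangle B = (A \setminus B) \cup (B \setminus A)$ or as

- $A \triangle B = (A \cup B) \setminus (A \cap B)$.

The symmetric difference is commutative and associative, so that $A \triangle B = B \triangle A$ and $(A \triangle B) \triangle C = A \triangle (B \triangle C)$. Every set is its own symmetric-difference inverse, and the empty set functions as an identity element for the symmetric difference, that is, $A \triangle A = \emptyset$ and $A \triangle \emptyset = A$. Furthermore, $A \triangle B = \emptyset$ if and only if $A = B$.

Set intersection is distributive over the symmetric difference operation. In other words, $A \cap (B \triangle C) = (A \cap B) \triangle (A \cap C)$.

The symmetric difference of two set complements is the same as the symmetric difference of the two sets: $A^c \triangle B^c = A \triangle B$.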
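Python's `^` operator on sets computes the symmetric difference. The properties above can therefore be spot-checked on hypothetical example sets, as in this sketch (an illustration, not a proof).

```python
A, B, C = {1, 2, 3}, {3, 4}, {1, 4, 5}

print(sorted(A ^ B))  # [1, 2, 4]

# The two common representations agree with ^
assert A ^ B == (A - B) | (B - A) == (A | B) - (A & B)
# Commutative, associative, self-inverse, with ∅ as identity
assert A ^ B == B ^ A
assert (A ^ B) ^ C == A ^ (B ^ C)
assert A ^ A == set() and A ^ set() == A
# Intersection distributes over symmetric difference
assert A & (B ^ C) == (A & B) ^ (A & C)
```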

Notation for specific sets

Commonly used sets of numbers in mathematics are often denoted by special symbols. The conventional notations used in this book are given below.

Natural numbers with 0: $\mathbb{N}_0$ or $\mathbb{Z}_{\geq 0}$

Natural numbers without 0: $\mathbb{N}$

Integers: $\mathbb{Z}$

Rational numbers: $\mathbb{Q}$

Real numbers: $\mathbb{R}$

Complex numbers: $\mathbb{C}$

Equivalence relations and congruence classes

We often wish to describe how two mathematical entities within a set are related. For example, if we were to look at the set of all people on Earth, we could define "is a child of" as a relationship. Similarly, the operator $<$ defines a relation on the set of integers. A binary relation, hereafter referred to simply as a relation, is a binary proposition defined on any selection of the elements of two sets.

Formally, a relation is any subset of the Cartesian product of two sets $A$ and $B$, so that, for a relation $R$, $R \subseteq A \times B$. In this case, $A$ is referred to as the domain of the relation and $B$ is referred to as its codomain. If an ordered pair $(a, b)$ is an element of $R$ (where, by the definition of $R$, $a \in A$ and $b \in B$), then we say that $a$ is related to $b$ by $R$. We will use $R(a)$ to denote the set

- $R(a) = \{b \in B : (a, b) \in R\}$.

In other words, $R(a)$ is used to denote the set of all elements in the codomain of $R$ to which a given element $a$ in the domain is related.

Equivalence relations

To denote that two elements $a$ and $b$ are related for a relation $R$ which is a subset of some Cartesian product $A \times B$, we will use an infix operator. We write $a R b$ for $(a, b) \in R$, where $a \in A$ and $b \in B$.

There are very many types of relations. Indeed, further inspection of our earlier examples reveals that the two relations are quite different. In the case of the "is a child of" relationship, we observe that there are some people A, B where neither A is a child of B, nor B is a child of A. In the case of the $<$ operator, we know that for any two distinct integers $a$ and $b$, exactly one of $a < b$ or $b < a$ is true. In order to learn about relations, we must look at a smaller class of relations.

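In particular, we care about the following properties of a relation $R$ on a set $A$, that is, a relation $R \subseteq A \times A$:

- Reflexivity: A relation $R$ is reflexive if $a R a$ for all $a \in A$.

- Symmetry: A relation $R$ is symmetric if $a R b \Rightarrow b R a$ for all $a, b \in A$.

- Transitivity: A relation $R$ is transitive if $(a R b \land b R c) \Rightarrow a R c$ for all $a, b, c \in A$.

One should note that in all three of these properties, we quantify across all elements of the set $A$.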

Any relation $R$ on $A$ which exhibits the properties of reflexivity, symmetry and transitivity is called an equivalence relation on $A$. Two elements related by an equivalence relation are called equivalent under the equivalence relation. We write $a \sim_R b$ to denote that $a$ and $b$ are equivalent under $R$. If only one equivalence relation is under consideration, we can instead write simply $a \sim b$. As a notational convenience, we can simply say that $\sim$ is an equivalence relation on a set $A$ and let the rest be implied.

Example: For a fixed integer $p$, we define a relation $\sim$ on the set of integers such that $a \sim b$ if and only if $a = b + kp$ for some $k \in \mathbb{Z}$. Prove that this defines an equivalence relation on the set of integers.

Proof:

- Reflexivity: For any $a \in \mathbb{Z}$, it follows immediately that $a = a + 0 \cdot p$, and thus $a \sim a$ for all $a \in \mathbb{Z}$.

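- Symmetry: For any $a, b \in \mathbb{Z}$, assume that $a \sim b$. It must then be the case that $a = b + kp$ for some integer $k$, and therefore $b = a + (-k)p$. Since $k$ is an integer, $-k$ must also be an integer. Thus, $a \sim b \Rightarrow b \sim a$ for all $a, b \in \mathbb{Z}$.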

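- Transitivity: For any $a, b, c \in \mathbb{Z}$, assume that $a \sim b$ and $b \sim c$. Then $a = b + kp$ and $b = c + lp$ for some integers $k, l$. By adding these two equalities together and subtracting $b$ from both sides, we get $a = c + (k + l)p$. Since $k + l$ is an integer, $a \sim c$.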

Q.E.D.

Remark. In elementary number theory we denote this relation by $a \equiv b \pmod{p}$ and say $a$ is congruent to $b$ modulo $p$.
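On a finite window of integers, the three properties can be checked by brute force. The check complements the proof above but does not replace it, since $\mathbb{Z}$ is infinite. The helpers `congruent` and `is_equivalence` are hypothetical names. The case $p = 0$ is handled separately because then only $k \cdot 0 = 0$ is available, so $a \sim b$ means $a = b$.

```python
def congruent(a: int, b: int, p: int) -> bool:
    """a ~ b iff a = b + k*p for some integer k."""
    if p == 0:
        return a == b          # only k*0 = 0 is available
    return (a - b) % p == 0    # p divides a - b


def is_equivalence(elements, rel) -> bool:
    """Check reflexivity, symmetry and transitivity on a finite set."""
    elements = list(elements)
    reflexive = all(rel(a, a) for a in elements)
    symmetric = all(rel(b, a) for a in elements for b in elements if rel(a, b))
    transitive = all(rel(a, c)
                     for a in elements for b in elements for c in elements
                     if rel(a, b) and rel(b, c))
    return reflexive and symmetric and transitive


window = range(-6, 7)  # finite stand-in for Z
print(is_equivalence(window, lambda a, b: congruent(a, b, 3)))  # True
print(is_equivalence(window, lambda a, b: a < b))               # False: < is not reflexive
```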

Equivalence classes

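Let $\sim$ be an equivalence relation on $A$. Then, for any element $a \in A$ we define the equivalence class of $a$ as the subset $[a] \subseteq A$ given by

$[a] = \{b \in A : b \sim a\}$.

Theorem: For $a, b \in A$, $[a] = [b]$ if and only if $a \sim b$.

Proof: Assume $a \sim b$. Then, by symmetry, also $b \sim a$.

- We first prove that $[a] \subseteq [b]$. Let $c$ be an arbitrary element of $[a]$. Then $c \sim a$ by definition of the equivalence class, and $c \sim b$ by transitivity of equivalence relations. Thus, $c \in [b]$ and $[a] \subseteq [b]$.

- We now prove that $[b] \subseteq [a]$. Let $c$ be an arbitrary element of $[b]$. Then, by definition, $c \sim b$. By transitivity, since $b \sim a$, we have $c \sim a$, so $c \in [a]$. Thus, $[b] \subseteq [a]$.

As $[a] \subseteq [b]$ and $[b] \subseteq [a]$, we have $[a] = [b]$. Conversely, if $[a] = [b]$, then $a \in [a]$ by reflexivity, so $a \in [b]$, that is, $a \sim b$.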

Q.E.D.
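Equivalence classes on a finite set can be computed directly from the definition $[a] = \{b \in A : b \sim a\}$. This sketch uses congruence modulo 3 on the hypothetical set $\{0, \ldots, 8\}$ and a hypothetical helper `equivalence_class`. The classes come back as `frozenset`s so that they can be compared and stored in other sets.

```python
def equivalence_class(a, elements, rel) -> frozenset:
    """[a] = {b in elements : b ~ a}."""
    return frozenset(b for b in elements if rel(b, a))


def same_mod_3(x: int, y: int) -> bool:
    return (x - y) % 3 == 0


elements = range(9)  # hypothetical finite set A = {0, ..., 8}
print(sorted(equivalence_class(1, elements, same_mod_3)))  # [1, 4, 7]

# 1 ~ 4, so the theorem predicts [1] = [4]; 1 and 2 are not related, so [1] != [2].
assert equivalence_class(1, elements, same_mod_3) == equivalence_class(4, elements, same_mod_3)
assert equivalence_class(1, elements, same_mod_3) != equivalence_class(2, elements, same_mod_3)
```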

Partitions of a set

A partition of a set $A$ is a family of nonempty, pairwise disjoint sets $A_i$, $i \in I$, such that $\bigcup_{i \in I} A_i = A$.

Theorem: An equivalence relation on $A$ induces a unique partition of $A$, and likewise, a partition induces a unique equivalence relation on $A$, such that these are equivalent.

Proof: (Equivalence relation induces partition): Let $P = \{[a] : a \in A\}$ be the set of equivalence classes of $\sim$. Each class is nonempty, and since $a \in [a]$ for each $a \in A$, $\bigcup_{a \in A} [a] = A$. Furthermore, by the above theorem, this union is disjoint: if $c \in [a] \cap [b]$, then $c \sim a$ and $c \sim b$, so $a \sim b$ by symmetry and transitivity, and hence $[a] = [b]$. Thus the set of equivalence classes of $\sim$ is a partition of $A$.

(Partition induces equivalence relation): Let $\{A_i\}_{i \in I}$ be a partition of $A$. Then, define $\sim$ on $A$ such that $a \sim b$ if and only if both $a$ and $b$ are elements of the same $A_i$ for some $i \in I$. Reflexivity and symmetry of $\sim$ are immediate. For transitivity, if $a, b \in A_i$ and $b, c \in A_j$, then $b \in A_i \cap A_j$, so by disjointness we necessarily have $A_i = A_j$, and transitivity follows. Thus, $\sim$ is an equivalence relation with the $A_i$ as the equivalence classes.

Lastly, obtaining a partition $P$ from $\sim$ on $A$ and then obtaining an equivalence relation from $P$ obviously returns $\sim$ again, so $\sim$ and $P$ are equivalent structures.

Q.E.D.
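Both directions of the theorem can be sketched on a finite set. In the first direction, the set of equivalence classes forms the partition. In the second, "lying in the same block" defines the relation. The helper names are hypothetical, and the round trip is checked on one example only.

```python
def partition_from_relation(elements, rel) -> set:
    """The set of equivalence classes {[a] : a in elements}."""
    return {frozenset(b for b in elements if rel(b, a)) for a in elements}


def relation_from_partition(blocks):
    """a ~ b iff a and b lie in the same block."""
    def rel(a, b) -> bool:
        return any(a in block and b in block for block in blocks)
    return rel


def same_parity(x: int, y: int) -> bool:
    return (x - y) % 2 == 0


elements = range(6)
blocks = partition_from_relation(elements, same_parity)
print(sorted(sorted(block) for block in blocks))  # [[0, 2, 4], [1, 3, 5]]

# Relation -> partition -> relation gives back the same relation on these elements.
rel = relation_from_partition(blocks)
assert all(rel(a, b) == same_parity(a, b) for a in elements for b in elements)
```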

Quotients

Let $\sim$ be an equivalence relation on a set $A$. Then, define the set $A/{\sim}$ as the set of all equivalence classes of $\sim$. In order to say anything interesting about this construction we need more theory yet to be developed. However, this is one of the most important constructions we have, and one that will be given much attention throughout the book.

Functions

Definition

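A function $f$ is a triple $(A, B, G)$ such that:

- $A$ is a set, called the domain of $f$

- $B$ is a set, called the codomain of $f$

- $G$ is a subset of $A \times B$, called the graph of $f$

In addition the following two properties hold:

- $\forall a \in A\ \exists b \in B : (a, b) \in G$.

- $\forall a \in A\ \forall b_1, b_2 \in B : \big((a, b_1) \in G \land (a, b_2) \in G\big) \Rightarrow b_1 = b_2$.

We write $f(a)$ for the unique $b \in B$ such that $(a, b) \in G$.

We say that $f$ is a function from $A$ to $B$, which we write: $f: A \to B$.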

Example

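Let's consider the function from the reals to the reals which squares its argument. We could define it like this:

$f = \big(\mathbb{R}, \mathbb{R}, \{(x, x^2) : x \in \mathbb{R}\}\big)$, which we also write as $f: \mathbb{R} \to \mathbb{R},\ x \mapsto x^2$.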

Remark

As you see in the definition of a function above, the domain and codomain are an integral part of the definition. In other words, even if the values of $f$ don't change, changing the domain or codomain changes the function.

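Let's look at the following four functions.

The function:

$f_1: \mathbb{R} \to \mathbb{R},\ x \mapsto x^2$

is neither injective nor surjective (these terms will be defined later).

The function:

$f_2: \mathbb{R} \to \mathbb{R}_{\geq 0},\ x \mapsto x^2$

is not injective but surjective.

The function:

$f_3: \mathbb{R}_{\geq 0} \to \mathbb{R},\ x \mapsto x^2$

is injective but not surjective.

The function:

$f_4: \mathbb{R}_{\geq 0} \to \mathbb{R}_{\geq 0},\ x \mapsto x^2$

is injective and surjective.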

As you see, all four functions have the same mapping but all four are different. That's why just giving the mapping is insufficient; a function is only defined if its domain and codomain are known.
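The triple definition, and the point that domain and codomain matter, can be mirrored in code for finite sets. This is a minimal sketch with a hypothetical `Function` class. Because $\mathbb{R}$ cannot be enumerated, it assumes finite stand-ins: $\{-2, \ldots, 2\}$ for the domain $\mathbb{R}$, $\{0, 1, 2\}$ for the domain $\mathbb{R}_{\geq 0}$, $\{-4, \ldots, 4\}$ for the codomain $\mathbb{R}$, and $\{0, 1, 4\}$ for the codomain $\mathbb{R}_{\geq 0}$. The last set is chosen as the exact set of squares so that the finite analogues behave like $f_1, \ldots, f_4$.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Function:
    """A function as a triple (domain, codomain, graph), as in the definition above."""
    domain: frozenset
    codomain: frozenset
    graph: frozenset  # a set of pairs (a, b) with a in domain, b in codomain

    def __post_init__(self):
        if not all(a in self.domain and b in self.codomain for a, b in self.graph):
            raise ValueError("the graph must be a subset of domain × codomain")
        for a in self.domain:
            if sum(1 for x, _ in self.graph if x == a) != 1:
                raise ValueError(f"{a!r} must have exactly one image")

    def __call__(self, a):
        return next(b for x, b in self.graph if x == a)

    def is_injective(self) -> bool:
        return len({self(a) for a in self.domain}) == len(self.domain)

    def is_surjective(self) -> bool:
        return {self(a) for a in self.domain} == self.codomain


def square(domain, codomain) -> Function:
    return Function(frozenset(domain), frozenset(codomain),
                    frozenset((x, x * x) for x in domain))


# Finite stand-ins (an assumption), see the paragraph above.
R, R_plus = range(-2, 3), range(0, 3)
big, squares = range(-4, 5), {0, 1, 4}

for name, f in [("f1", square(R, big)), ("f2", square(R, squares)),
                ("f3", square(R_plus, big)), ("f4", square(R_plus, squares))]:
    print(name, f.is_injective(), f.is_surjective())
```
The same mapping $x \mapsto x^2$ produces four different `Function` objects, which matches the remark that a function is only determined once its domain and codomain are known.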

Image and preimage

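For a set $A$, we write $\mathcal{P}(A)$ for the set of subsets of $A$ (the power set of $A$).

Let $f: A \to B$. We will now define two related functions.

The image function:

$f: \mathcal{P}(A) \to \mathcal{P}(B),\ S \mapsto f(S) = \{f(a) : a \in S\}$

The preimage function:

$f^{-1}: \mathcal{P}(B) \to \mathcal{P}(A),\ T \mapsto f^{-1}(T) = \{a \in A : f(a) \in T\}$

Note that the image and preimage are written respectively like $f$ and its inverse (if it exists). There is however no ambiguity because the domains are different. Note also that the image and preimage are not necessarily inverses of one another. (See the section on bijective functions below.)

We define $\operatorname{im}(f) = f(A)$, which we call the image of $f$.

For any $b \in B$, we call $f^{-1}(\{b\})$ the support of $b$.

Proposition: Let $f: A \to B$, $S \subseteq A$ and $T \subseteq B$. Then $S \subseteq f^{-1}(f(S))$ and $f(f^{-1}(T)) \subseteq T$.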
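For a finite function stored as a Python dictionary, the image and preimage functions are short comprehensions, and the proposition can be observed on an example. This sketch assumes a hypothetical finite version of the squaring map on $\{-2, \ldots, 2\}$. The helper names `image` and `preimage` are illustrative. Both inclusions turn out to be strict here.

```python
def image(f: dict, S: set) -> set:
    """f(S) = {f(a) : a in S}."""
    return {f[a] for a in S}


def preimage(f: dict, T: set) -> set:
    """f^{-1}(T) = {a in A : f(a) in T}, with A = f.keys()."""
    return {a for a in f if f[a] in T}


# Hypothetical finite example: f(x) = x^2 on A = {-2, ..., 2}.
f = {x: x * x for x in range(-2, 3)}
S, T = {1}, {1, 3}

print(S <= preimage(f, image(f, S)), sorted(preimage(f, image(f, S))))  # True [-1, 1]
print(image(f, preimage(f, T)) <= T, image(f, preimage(f, T)))          # True {1}
```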

Example

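Let's take again the function:

$f: \mathbb{R} \to \mathbb{R},\ x \mapsto x^2$

Let's consider the following examples:

- $f(\{-1, 1\}) = \{1\}$ and $f^{-1}(\{1\}) = \{-1, 1\}$,

- $f^{-1}(\{-1\}) = \emptyset$,

- $f([-1, 2]) = [0, 4]$ and $f^{-1}([0, 4]) = [-2, 2]$, so $[-1, 2] \subsetneq f^{-1}(f([-1, 2]))$.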

Further definitions

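Let $f: A \to B$ and $g: B \to C$. We define $g \circ f: A \to C$ by $(g \circ f)(a) = g(f(a))$, which we call the composition of $f$ and $g$.

Let $A$ be a set. We define the identity function on $A$ as $\mathrm{id}_A: A \to A,\ a \mapsto a$.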

Properties

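Definition: A function $f: A \to B$ is injective if $\forall a_1, a_2 \in A : f(a_1) = f(a_2) \Rightarrow a_1 = a_2$.

Lemma: Consider a function $f: A \to B$ and suppose $A \neq \emptyset$. Then $f$ is injective if and only if there exists a function $g: B \to A$ with $g \circ f = \mathrm{id}_A$.

Proof:

$\Rightarrow$:

Suppose $f$ is injective. As $A \neq \emptyset$, let's define $a_0$ as an arbitrary element of $A$. We can then define a suitable function $g: B \to A$ as follows:

$g(b) = a$ if $b = f(a)$ for some $a \in A$ (such an $a$ is unique because $f$ is injective), and $g(b) = a_0$ if $b \notin f(A)$.

It is now easy to verify that $g \circ f = \mathrm{id}_A$.

$\Leftarrow$:

Suppose there is a function $g: B \to A$ with $g \circ f = \mathrm{id}_A$. Then $f(a_1) = f(a_2) \Rightarrow a_1 = g(f(a_1)) = g(f(a_2)) = a_2$. $f$ is thus injective.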

Q.E.D.

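Definition: A function $f: A \to B$ is surjective if $\forall b \in B\ \exists a \in A : f(a) = b$.

Lemma: Consider a function $f: A \to B$. Then $f$ is surjective if and only if there exists a function $g: B \to A$ with $f \circ g = \mathrm{id}_B$.

Proof:

$\Rightarrow$:

Suppose $f$ is surjective. We can define a suitable function $g: B \to A$ as follows:

$g(b) =$ some chosen element of $f^{-1}(\{b\})$, which is nonempty because $f$ is surjective. (For infinite $B$, making all these choices at once in general requires the axiom of choice.)

It is now easy to verify that $f \circ g = \mathrm{id}_B$.

$\Leftarrow$:

Suppose there is a function $g: B \to A$ with $f \circ g = \mathrm{id}_B$. Then $f(g(b)) = b$ for every $b \in B$. Thus every $b \in B$ equals $f(a)$ for $a = g(b) \in A$. $f$ is thus surjective.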

Q.E.D.

Definition: A function $f: A \to B$ is bijective if it is both injective and surjective.

Lemma: A function $f: A \to B$ is bijective if and only if there exists a function $g: B \to A$ with $g \circ f = \mathrm{id}_A$ and $f \circ g = \mathrm{id}_B$. Furthermore it can be shown that such a $g$ is unique. We write it $f^{-1}$ and call it the inverse of $f$.

Proof:

Left as an exercise.

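Proposition: Consider a function $f: A \to B$, with image function $f: \mathcal{P}(A) \to \mathcal{P}(B)$ and preimage function $f^{-1}: \mathcal{P}(B) \to \mathcal{P}(A)$. Then

- $f$ is injective iff $f^{-1} \circ f = \mathrm{id}_{\mathcal{P}(A)}$

- $f$ is surjective iff $f \circ f^{-1} = \mathrm{id}_{\mathcal{P}(B)}$

- $f$ is bijective iff the image and preimage of $f$ are inverses of each other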

Example: If $A$ and $B$ are sets such that $A \subseteq B$, there exists an obviously injective function $i: A \to B$, called the inclusion of $A$ into $B$, such that $i(a) = a$ for all $a \in A$.

Example: If $\sim$ is an equivalence relation on a set $A$, there is an obviously surjective function $\pi: A \to A/{\sim}$, called the canonical projection onto $A/{\sim}$, such that $\pi(a) = [a]$ for all $a \in A$.

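Theorem: Let $f: A \to B$ be any function, and define the equivalence relation $\sim$ on $A$ such that $a \sim b$ if and only if $f(a) = f(b)$. Then $f$ decomposes into the composition

$f = i \circ \bar{f} \circ \pi : A \to A/{\sim} \to f(A) \to B$,

where $\pi: A \to A/{\sim}$ is the canonical projection, $i: f(A) \to B$ is the inclusion of $f(A)$ into $B$, and $\bar{f}: A/{\sim} \to f(A)$ is the bijection $\bar{f}([a]) = f(a)$ for all $a \in A$.

Proof: The definition of $\bar{f}$ immediately implies that $f = i \circ \bar{f} \circ \pi$, so we only have to prove that $\bar{f}$ is well defined and a bijection. Let $[a] = [b]$. Then $a \sim b$, so $f(a) = f(b)$. This shows that the value of $\bar{f}([a])$ is independent of the representative chosen from $[a]$, and so it is well defined.

For injectivity, we have $\bar{f}([a]) = \bar{f}([b]) \Rightarrow f(a) = f(b) \Rightarrow a \sim b \Rightarrow [a] = [b]$, so $\bar{f}$ is injective.

For surjectivity, let $b \in f(A)$. Then there exists an $a \in A$ such that $f(a) = b$, and so $\bar{f}([a]) = f(a) = b$ by definition of $\bar{f}$. Since $b$ is arbitrary in $f(A)$, this proves that $\bar{f}$ is surjective.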

Q.E.D.
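For a finite function stored as a dictionary, the three factors $\pi$, $\bar{f}$ and $i$ can be built explicitly and the decomposition checked. This is a minimal sketch with a hypothetical helper `decompose` and a hypothetical squaring example. In `f_bar`, `next(iter(cls))` picks an arbitrary representative of each class, which is harmless precisely because $\bar{f}$ is well defined.

```python
def decompose(f: dict):
    """Split f: A -> B as i ∘ f_bar ∘ pi, where a ~ b iff f(a) == f(b).

    Returns the three maps as dicts: pi: A -> A/~, f_bar: A/~ -> f(A),
    and the inclusion i: f(A) -> B.
    """
    A = list(f)
    pi = {a: frozenset(b for b in A if f[b] == f[a]) for a in A}  # a |-> [a]
    # Any representative gives the same value, so f_bar is well defined.
    f_bar = {cls: f[next(iter(cls))] for cls in set(pi.values())}
    inclusion = {b: b for b in set(f.values())}
    return pi, f_bar, inclusion


f = {x: x * x for x in range(-2, 3)}  # hypothetical example: x |-> x^2 on {-2, ..., 2}
pi, f_bar, inclusion = decompose(f)

assert all(inclusion[f_bar[pi[a]]] == f[a] for a in f)    # f = i ∘ f_bar ∘ pi
assert len(set(f_bar.values())) == len(f_bar)             # f_bar is injective
assert set(f_bar.values()) == set(f.values())             # f_bar is onto f(A)
print(len(f_bar))  # 3: the classes {0}, {-1, 1}, {-2, 2}
```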

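Definition: Given a function $f: A \to B$, $f$ is a

(i) Monomorphism if, for any set $C$ and any two functions $g, h: C \to A$ such that $f \circ g = f \circ h$, we have $g = h$.

(ii) Epimorphism if, for any set $C$ and any two functions $g, h: B \to C$ such that $g \circ f = h \circ f$, we have $g = h$.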

Theorem: A function between sets is

(i) a monomorphism if and only if it is injective.

(ii) an epimorphism if and only if it is surjective.

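Proof: (i) Let $f$ be a monomorphism, and let $a_1, a_2 \in A$ with $f(a_1) = f(a_2)$. Take a one-element set $C = \{c\}$ and the two functions $g, h: C \to A$ with $g(c) = a_1$ and $h(c) = a_2$. Then $f \circ g = f \circ h$, so $g = h$ and hence $a_1 = a_2$. This is the definition of injectivity. For the converse, suppose $f$ is injective. If $A = \emptyset$, any two functions $g, h: C \to A$ force $C = \emptyset$ and are therefore equal, so there is nothing to prove. Otherwise $f$ has a left inverse $r: B \to A$ with $r \circ f = \mathrm{id}_A$. Thus, if $f \circ g = f \circ h$, compose with $r$ on the left side to obtain $g = r \circ f \circ g = r \circ f \circ h = h$, so that $f$ is a monomorphism.

(ii) Let $f$ be an epimorphism. Then, for any two functions $g, h: B \to C$, $g(f(a)) = h(f(a))$ for all $a \in A$ implies $g(b) = h(b)$ for all $b \in B$. Assume $f(A) \neq B$, that is, that $f$ is not surjective. Then there exists at least one $b_0 \in B$ not in $f(A)$. For this $b_0$ choose two functions $g, h: B \to \{0, 1\}$ which coincide on $f(A)$ but disagree on $b_0$. However, we still have $g(f(a)) = h(f(a))$ for all $a \in A$. This violates our assumption that $f$ is an epimorphism. Consequently, $f$ is surjective. For the converse, assume $f$ is surjective, and let $g \circ f = h \circ f$. Every $b \in B$ is of the form $f(a)$, so $g(b) = g(f(a)) = h(f(a)) = h(b)$, and the epimorphism property follows.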

Q.E.D.

Remark: The equivalence between monomorphism and injectivity, and between epimorphism and surjectivity, is a special property of functions between sets. This is not the case in general, and we will see examples of this when discussing structure-preserving functions between groups or rings in later sections.

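Example: Given any two sets $A$ and $B$, we have the canonical projections $\pi_A: A \times B \to A$ sending $(a, b)$ to $a$, and $\pi_B: A \times B \to B$ sending $(a, b)$ to $b$. These maps are obviously surjective when $A$ and $B$ are both nonempty.

In addition, we have the natural inclusions $\iota_A: A \to A \sqcup B$ and $\iota_B: B \to A \sqcup B$ which are obviously injective as stated above.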

Universal properties

The projections and inclusions described above are special, in that they satisfy what are called universal properties. We will give the theorem below. The proof is left to the reader.

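Theorem: Let $A$, $B$, $X$ be any sets.

(i) Let $f: X \to A$ and $g: X \to B$. Then there exists a unique function $h: X \to A \times B$ such that $\pi_A \circ h = f$ and $\pi_B \circ h = g$ are simultaneously satisfied. $h$ is sometimes denoted $(f, g)$.

(ii) Let $f: A \to X$ and $g: B \to X$. Then there exists a unique function $h: A \sqcup B \to X$ such that $h \circ \iota_A = f$ and $h \circ \iota_B = g$ are simultaneously satisfied.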
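The maps whose existence the theorem asserts can be written down explicitly. In this minimal sketch, $h = (f, g)$ pairs the two outputs. For the disjoint union it assumes the tagged encoding $(A \times \{0\}) \cup (B \times \{1\})$, so $h$ dispatches on the tag. All helper names (`pairing`, `copairing`, `pi_A`, `iota_A`, and so on) are hypothetical.

```python
def pairing(f, g):
    """Given f: X -> A and g: X -> B, the map h = (f, g): X -> A × B."""
    return lambda x: (f(x), g(x))


def copairing(f, g):
    """Given f: A -> X and g: B -> X, the map h: A ⊔ B -> X,
    with A ⊔ B encoded as (A × {0}) ∪ (B × {1})."""
    def h(tagged):
        value, tag = tagged
        return f(value) if tag == 0 else g(value)
    return h


def pi_A(pair):
    return pair[0]


def pi_B(pair):
    return pair[1]


def iota_A(a):
    return (a, 0)


def iota_B(b):
    return (b, 1)


# Hypothetical example maps.
h = pairing(lambda x: x % 3, str)
assert all(pi_A(h(x)) == x % 3 and pi_B(h(x)) == str(x) for x in range(10))

k = copairing(len, abs)  # len: str -> int and abs: int -> int
assert k(iota_A("abc")) == 3 and k(iota_B(-5)) == 5
print(h(7), k(iota_A("ab")))  # (1, '7') 2
```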

The canonical projections onto quotients also satisfy a universal property.

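Theorem: Let $\sim$ be an equivalence relation on $A$, and let $f: A \to B$ be any function such that $a \sim b \Rightarrow f(a) = f(b)$ for all $a, b \in A$. Then there exists a unique function $\bar{f}: A/{\sim} \to B$ such that $f = \bar{f} \circ \pi$, where $\pi: A \to A/{\sim}$ is the canonical projection.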

Binary Operations

A binary operation $*$ on a set $A$ is a function $*: A \times A \to A$. For $a, b \in A$, we usually write $*(a, b)$ as $a * b$.

Properties

The property that $a * b \in A$ for all $a, b \in A$ is called closure under $*$.

Example: Addition of two integers produces an integer result. Therefore addition is a binary operation on the integers. By contrast, division is not a binary operation on the integers: $1 / 2$ is not an integer, so the integers are not closed under division.

To indicate that a set $A$ has a binary operation $*$ defined on it, we can compactly write $(A, *)$. Such a pair of a set and a binary operation on that set is collectively called a binary structure. A binary structure may have several interesting properties. The main ones we will be interested in are outlined below.

Definition: A binary operation $*$ on $A$ is associative if for all $a, b, c \in A$, $(a * b) * c = a * (b * c)$.

Example: Addition of integers is associative, for instance $(1 + 2) + 3 = 1 + (2 + 3) = 6$. Notice, however, that subtraction is not associative. Indeed, $(1 - 2) - 3 = -4$, while $1 - (2 - 3) = 2$.

Definition: A binary operation $*$ on $A$ is commutative if for all $a, b \in A$, $a * b = b * a$.

Example: Multiplication of rational numbers is commutative: $\frac{a}{b} \cdot \frac{c}{d} = \frac{ac}{bd} = \frac{ca}{db} = \frac{c}{d} \cdot \frac{a}{b}$. Notice that division is not commutative: $2 \div 3 = \frac{2}{3}$ while $3 \div 2 = \frac{3}{2}$. Notice also that commutativity of multiplication of rationals depends on the fact that multiplication of integers is commutative as well.

Exercise

- Of the four arithmetic operations, addition, subtraction, multiplication, and division, which are associative? Which are commutative?

Answer

| Operation | Associative | Commutative |
|---|---|---|
| Addition | Yes | Yes |
| Multiplication | Yes | Yes |
| Subtraction | No | No |
| Division | No | No |
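The table can be cross-checked by testing the two laws on a sample of rationals. Python's `fractions.Fraction` keeps division exact. The sample is hypothetical and uses only nonzero values, so that division is always defined. A "True" here only means that no counterexample was found in the sample, whereas a "False" is a genuine counterexample.

```python
import operator
from fractions import Fraction
from itertools import product

OPERATIONS = {
    "Addition": operator.add,
    "Multiplication": operator.mul,
    "Subtraction": operator.sub,
    "Division": operator.truediv,
}

# Hypothetical sample of nonzero rationals, so that division is always defined.
SAMPLE = [Fraction(n) for n in (1, 2, 3, 5)]


def check(op):
    """Return (associative, commutative) on SAMPLE."""
    assoc = all(op(op(a, b), c) == op(a, op(b, c)) for a, b, c in product(SAMPLE, repeat=3))
    comm = all(op(a, b) == op(b, a) for a, b in product(SAMPLE, repeat=2))
    return assoc, comm


results = {name: check(op) for name, op in OPERATIONS.items()}
for name, (assoc, comm) in results.items():
    print(f"{name:15} associative={assoc} commutative={comm}")
```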

Algebraic structures

Binary operations are the working parts of algebraic structures:

Augustus De Morgan expressed the venture into the categories of algebraic structures as follows: It is "inventing a distinct system of unit-symbols, and investigating or assigning relations which define their mode of action on each other." De Morgan 1844, quoted by A.N. Whitehead, A Treatise on Universal Algebra, 1898, page 131.

One binary operation


A closed binary operation o on a set A is called a magma (A, o ).

If the binary operation respects the associative law a o (b o c) = (a o b) o c, then the magma (A, o ) is a semigroup.

If a magma has an element e satisfying e o x = x = x o e for every x in it, then it is a unital magma. The element e is called the identity with respect to o. If a unital magma has elements x and y such that x o y = e = y o x, then x and y are inverses with respect to each other.

A magma for which every equation a o x = b has a unique solution x, and every equation y o c = d has a unique solution y, is a quasigroup. A unital quasigroup is a loop.

A unital semigroup is called a monoid. A monoid for which every element has an inverse is a group. A group for which x o y = y o x for all its elements x and y is called a commutative group. Alternatively, it is called an abelian group.

Two binary operations

A pair of structures, each with one operation, can be used to build those with two: Take (A, o ) as a commutative group with identity e, and suppose (A, * ) is a monoid whose binary operation * distributes over o from both sides:

- a * (b o c) = (a * b) o (a * c) and (b o c) * a = (b * a) o (c * a). Then (A, o, * ) is a ring.

Let A_ denote A with e removed. In this construction of rings, when (A_ , * ) is a group, then (A, o, * ) is a division ring or skew field. And when (A_ , * ) is a commutative group, then (A, o, * ) is a field.

The two operations sup (v) and inf (^) are presumed commutative and associative. In addition, the absorption property requires: a ^ (a v b) = a, and a v (a ^ b) = a. Then (A, v, ^ ) is called a lattice.

In a lattice, the modular identity is (a ^ b) v (x ^ b) = ((a ^ b) v x ) ^ b. A lattice satisfying the modular identity is a modular lattice.

Three binary operations

A module is a combination of a ring and a commutative group (A, B), together with a binary function A x B → B. When A is a field, then the module is a vector space. In that case A consists of scalars and B of vectors. The binary operation on B is termed addition.

Four binary operations

Suppose (A, B) is a vector space, and that B has a second operation called multiplication. Then the structure is an algebra over the field A.

Linear Algebra

The reader is expected to have some familiarity with linear algebra. For example, statements such as

- Given vector spaces $V$ and $W$ with bases $B_V$ and $B_W$ and dimensions $n$ and $m$, respectively, a linear map $f: V \to W$ corresponds to a unique $m \times n$ matrix, dependent on the particular choice of basis.

should be familiar. It is impossible to give a summary of the relevant topics of linear algebra in one section, so the reader is advised to take a look at the linear algebra book.

In any case, the core of linear algebra is the study of linear functions, that is, functions $f$ with the property $f(\alpha x + \beta y) = \alpha f(x) + \beta f(y)$, where Greek letters are scalars and Roman letters are vectors.

The core of the theory of finitely generated vector spaces is the following:

Every finite-dimensional vector space $V$ over a field $F$ is isomorphic to $F^n$ for some $n \in \mathbb{N}_0$, called the dimension of $V$. Specifying such an isomorphism is equivalent to choosing a basis for $V$. Thus, any linear map $f: V \to W$ between vector spaces with dimensions $n$ and $m$ and given bases $\phi: V \to F^n$ and $\psi: W \to F^m$ induces a unique linear map $\psi \circ f \circ \phi^{-1}: F^n \to F^m$. These maps are precisely the $m \times n$ matrices, and the matrix in question is called the matrix representation of $f$ relative to the bases $\phi, \psi$.
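For maps $F^n \to F^m$ with the standard bases, the matrix representation can be computed by applying the map to each basis vector $e_j$. This sketch uses the common convention that column $j$ holds $f(e_j)$. The linear map `f`, the list-of-lists matrix encoding and the helpers `matrix_of` and `apply` are illustrative assumptions. Integer entries stand in for a general field.

```python
def matrix_of(f, n: int) -> list:
    """m × n matrix of a linear map f: F^n -> F^m relative to the standard bases.

    Column j holds the coordinates of f(e_j); vectors are Python lists.
    """
    columns = [f([1 if i == j else 0 for i in range(n)]) for j in range(n)]
    m = len(columns[0])
    return [[columns[j][i] for j in range(n)] for i in range(m)]


def apply(matrix: list, x: list) -> list:
    """Matrix-vector product."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in matrix]


# Hypothetical linear map F^2 -> F^3.
def f(v):
    x, y = v
    return [2 * x + y, 3 * y, x - y]


M = matrix_of(f, 2)
print(M)                    # [[2, 1], [0, 3], [1, -1]]
print(apply(M, [4, -1]))    # [7, -3, 5], the same as f([4, -1])
```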

Remark: The idea of identifying a basis of a vector space with an isomorphism to $F^n$ may be new to the reader, but the basic principle is the same. An alternative term for vector space is coordinate space, since any point in the space may be expressed, in some particular basis, as a sequence of field elements. (All bases are equivalent under some non-singular linear transformation.) The name "vector", associated with pointy things like arrows, spears, or daggers, is distasteful to peace-loving people who don't imagine taking up such a weapon. The orientation or direction associated with a point in coordinate space is implicit in the positive orientation of the real line (if that is the field) or in an orientation instituted in a polar expression of the multiplicative group of the field.

A coordinate space $V$ with basis $\{e_1, \ldots, e_n\}$ has vectors $x = \sum_{i=1}^{n} x_i e_i$, where $e_i$ is all zeros except 1 at index $i$.

As an algebraic structure, $V$ is an amalgam of an abelian group (addition and subtraction of vectors), a scalar field $F$ (the source of the $x_i$'s), its multiplicative group $F^*$, and a group action $F^* \times V \to V$, given by

$(a, x) \mapsto a x = \sum_{i=1}^{n} (a x_i) e_i$.

- The group action is scalar-vector multiplication.

Linear transformations are mappings from one coordinate space $V$ to another $W$ corresponding to a matrix $(a_{ij})$. Suppose $W$ has basis $\{w_1, \ldots, w_m\}$, and write the image of $x$ as $y = \sum_{j=1}^{m} y_j w_j$.

Then the elements of the matrix $(a_{ij})$ are given by the rate of change of $y_j$ depending on $x_i$:

- $a_{ij} = \dfrac{\partial y_j}{\partial x_i}$, a constant.

A common case involves V = W and n is a low number, such as n = 2. When F = {real numbers} = R, the set of matrices is denoted M(2,R). As an algebraic structure, M(2,R) has two binary operations that make it a ring: component-wise addition and matrix multiplication. See the chapter on 2x2 real matrices for a deconstruction of M(2,R) into a pencil of planar algebras.

More generally, when $\dim V = \dim W = n$, $(a_{ij})$ is a square matrix, an element of $M(n, F)$, which is a ring with the $+$ and $\times$ binary operations. These benchmarks in algebra serve as representations. In particular, when the rows or columns of such a matrix are linearly independent, then there is a matrix $(b_{ij})$ acting as a multiplicative inverse with respect to the identity matrix. The subset of invertible matrices is called the general linear group, $GL(n, F)$. This group and its subgroups carry the burden of demonstrating physical symmetries associated with them.

The pioneers in this field included Sophus Lie, who viewed the continuous groups as evolving out of 1 in all directions according to an "algebra" now named after him. Hermann Weyl, spurred on by Eduard Study, explored and named GL(n, F) and its subgroups, calling them the classical groups.

Number Theory

As numbers of various number systems form basic units with which one must work when studying abstract algebra, we will now define the natural numbers and the rational integers as well as the basic operations of addition and multiplication. Using these definitions, we will also derive important properties of these number sets and operations. Following this, we will discuss important concepts in number theory; this will lead us to discussion of the properties of the integers modulo n.

The Peano postulates and the natural numbers

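Definition: Using the undefined notions "1" and "successor" (the successor of $n$ is denoted by $n'$), we define the set of natural numbers without zero $\mathbb{N}$, hereafter referred to simply as the natural numbers, with the following axioms, which we call the Peano postulates:

- Axiom 1. $1 \in \mathbb{N}$.

- Axiom 2. If $n \in \mathbb{N}$, then $n' \in \mathbb{N}$.

- Axiom 3. $n' \neq 1$ for every $n \in \mathbb{N}$.

- Axiom 4. If $m, n \in \mathbb{N}$ and $m' = n'$, then $m = n$.

- Axiom 5. If $S \subseteq \mathbb{N}$, $1 \in S$, and $n \in S \Rightarrow n' \in S$ for every $n \in \mathbb{N}$, then $S = \mathbb{N}$.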

We can prove theorems for natural numbers using mathematical induction as a consequence of the fifth Peano Postulate.

Addition

Definition: We recursively define addition for the natural numbers as a binary operation using two more axioms; the other properties of addition can subsequently be derived from these axioms. We denote addition with the infix operator +.

- Axiom 6. $a + 1 = a'$.

- Axiom 7. $a + b' = (a + b)'$.

Axiom 6 above relies on the first Peano postulate (for the existence of 1) as well as the second (for the existence of a successor for every number).

Henceforth, we will assume that proven theorems hold for all $a, b, c$ in $\mathbb{N}$.

Multiplication

Definition: We similarly define multiplication for the natural numbers recursively, again using two axioms (we write $ab$ or $a \cdot b$ for the product):

- Axiom 8. $a \cdot 1 = a$.

- Axiom 9. $a \cdot b' = a \cdot b + a$.
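Axioms 6 to 9 are recursive definitions, so they can be transcribed almost literally into recursive functions on a unary encoding of $\mathbb{N}$. In this sketch, the hypothetical `Nat` class represents 1 as `Nat()` and the successor $n'$ as `Nat(n)`. The conversion helpers `from_int` and `to_int` exist only for display.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Nat:
    """A natural number without zero: 1, or the successor pred' of another Nat."""
    pred: Optional["Nat"] = None  # None encodes the number 1


ONE = Nat()


def succ(n: Nat) -> Nat:
    """The successor n'."""
    return Nat(n)


def add(a: Nat, b: Nat) -> Nat:
    if b.pred is None:               # Axiom 6: a + 1 = a'
        return succ(a)
    return succ(add(a, b.pred))      # Axiom 7: a + b' = (a + b)'


def mul(a: Nat, b: Nat) -> Nat:
    if b.pred is None:               # Axiom 8: a * 1 = a
        return a
    return add(mul(a, b.pred), a)    # Axiom 9: a * b' = a * b + a


def from_int(k: int) -> Nat:
    if k < 1:
        raise ValueError("natural numbers here start at 1")
    n = ONE
    for _ in range(k - 1):
        n = succ(n)
    return n


def to_int(n: Nat) -> int:
    k = 1
    while n.pred is not None:
        n, k = n.pred, k + 1
    return k


two, three = from_int(2), from_int(3)
print(to_int(add(two, three)), to_int(mul(two, three)))  # 5 6
```
The frozen dataclass compares structurally, so `add(two, three) == add(three, two)` is an equality of successor chains. Such equalities give concrete instances of the commutativity theorems proved below.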

Properties of addition

We start by proving that addition is associative.

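Theorem 1: Associativity of addition: $(a + b) + c = a + (b + c)$

Proof: Base case: By axioms 6 and 7, $(a + b) + 1 = (a + b)' = a + b'$.

- By axiom 6, $a + b' = a + (b + 1)$.

- Inductive hypothesis: Suppose that, for $c$, $(a + b) + c = a + (b + c)$.

- Inductive step: By axiom 7, $(a + b) + c' = ((a + b) + c)'$.

- By the inductive hypothesis, $((a + b) + c)' = (a + (b + c))'$.

- By axiom 7, $(a + (b + c))' = a + (b + c)'$.

- By axiom 7, $a + (b + c)' = a + (b + c')$.

- By induction, $(a + b) + c = a + (b + c)$ for all $c \in \mathbb{N}$. QED.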

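Lemma 1: $1 + a = a + 1$

Proof: Base case: $1 + 1 = 1 + 1$.

- Inductive hypothesis: Suppose that, for $a$, $1 + a = a + 1$.

- Inductive step: By axiom 6, $a' + 1 = (a + 1) + 1$.

- By the inductive hypothesis, $(a + 1) + 1 = (1 + a) + 1$.

- By theorem 1, $(1 + a) + 1 = 1 + (a + 1)$.

- By axiom 6, $1 + (a + 1) = 1 + a'$.

- By induction, $1 + a = a + 1$ for all $a \in \mathbb{N}$. QED.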

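Theorem 2: Commutativity of addition: $a + b = b + a$

Proof: Base case: By lemma 1, $a + 1 = 1 + a$.

- Inductive hypothesis: Suppose that, for $b$, $a + b = b + a$.

- Inductive step: By axiom 6, $a + b' = a + (b + 1)$.

- By theorem 1, $a + (b + 1) = (a + b) + 1$.

- By the inductive hypothesis, $(a + b) + 1 = (b + a) + 1$.

- By theorem 1, $(b + a) + 1 = b + (a + 1)$.

- By lemma 1, $b + (a + 1) = b + (1 + a)$.

- By theorem 1, $b + (1 + a) = (b + 1) + a$.

- By axiom 6, $(b + 1) + a = b' + a$.

- By induction, $a + b = b + a$ for all $b \in \mathbb{N}$. QED.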

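Theorem 3: $a + b = a + c \Rightarrow b = c$.

Proof: Base case: Suppose $1 + b = 1 + c$.

- By theorem 2, $b + 1 = c + 1$.

- By axiom 6, $b' = c'$.

- By axiom 4, $b = c$.

- Inductive hypothesis: Suppose that, for $a$, $a + b = a + c \Rightarrow b = c$ for all $b, c \in \mathbb{N}$.

- Inductive step: Suppose $a' + b = a' + c$.

- By axiom 6, $(a + 1) + b = (a + 1) + c$.

- By theorem 2, $(1 + a) + b = (1 + a) + c$.

- By theorem 1, $1 + (a + b) = 1 + (a + c)$.

- By the base case, applied to $a + b$ and $a + c$, we get $a + b = a + c$.

- By the inductive hypothesis, $b = c$.

- By induction, $a + b = a + c \Rightarrow b = c$ for all $a \in \mathbb{N}$. QED.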

Properties of multiplication

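Theorem 4: Left-distributivity of multiplication over addition: $a(b + c) = ab + ac$.

Proof: Base case: By axioms 6 and 9, $a(b + 1) = ab' = ab + a$.

- By axiom 8, $ab + a = ab + a \cdot 1$.

- Inductive hypothesis: Suppose that, for $c \in \mathbb{N}$, $a(b + c) = ab + ac$.

- Inductive step: By axiom 7, $a(b + c') = a(b + c)'$.

- By axiom 9, $a(b + c)' = a(b + c) + a$.

- By the inductive hypothesis, $a(b + c) + a = (ab + ac) + a$.

- By theorem 1, $(ab + ac) + a = ab + (ac + a)$.

- By axiom 9, $ab + (ac + a) = ab + ac'$.

- By induction, $a(b + c) = ab + ac$ for all $c \in \mathbb{N}$. QED.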

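Theorem 5: $1 \cdot a = a$.

Proof: Base case: By axiom 8, $1 \cdot 1 = 1$.

- Inductive hypothesis: Suppose that, for $a \in \mathbb{N}$, $1 \cdot a = a$.

- Inductive step: By axiom 6, $1 \cdot a' = 1(a + 1)$.

- By theorem 4, $1(a + 1) = 1 \cdot a + 1 \cdot 1$.

- By the base case, $1 \cdot a + 1 \cdot 1 = 1 \cdot a + 1$.

- By the inductive hypothesis, $1 \cdot a + 1 = a + 1$.

- By axiom 6, $a + 1 = a'$.

- By induction, $1 \cdot a = a$ for all $a \in \mathbb{N}$. QED.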

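Theorem 6: $a'b = ab + b$.

Proof: Base case: By axiom 8, $a' \cdot 1 = a'$.

- By axiom 6, $a' = a + 1$.

- By axiom 8, $a + 1 = a \cdot 1 + 1$.

- Inductive hypothesis: Suppose that, for $b \in \mathbb{N}$, $a'b = ab + b$.

- Inductive step: By axiom 9, $a'b' = a'b + a'$.

- By the inductive hypothesis, $a'b + a' = (ab + b) + a'$.

- By axiom 6, $(ab + b) + a' = (ab + b) + (a + 1)$.

- By theorem 1, $(ab + b) + (a + 1) = (ab + (b + a)) + 1$.

- By theorem 2, $(ab + (b + a)) + 1 = (ab + (a + b)) + 1$.

- By theorem 1, $(ab + (a + b)) + 1 = ((ab + a) + b) + 1$.

- By axiom 9, $((ab + a) + b) + 1 = (ab' + b) + 1$.

- By theorem 1, $(ab' + b) + 1 = ab' + (b + 1)$.

- By axiom 6, $ab' + (b + 1) = ab' + b'$.

- By induction, $a'b = ab + b$ for all $b \in \mathbb{N}$. QED.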

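Theorem 7: Associativity of multiplication: $(ab)c = a(bc)$.

Proof: Base case: By axiom 8, $(ab) \cdot 1 = ab = a(b \cdot 1)$.

- Inductive hypothesis: Suppose that, for $c \in \mathbb{N}$, $(ab)c = a(bc)$.

- Inductive step: By axiom 9, $(ab)c' = (ab)c + ab$.

- By the inductive hypothesis, $(ab)c + ab = a(bc) + ab$.

- By theorem 4, $a(bc) + ab = a(bc + b)$.

- By axiom 9, $a(bc + b) = a(bc')$.

- By induction, $(ab)c = a(bc)$ for all $c \in \mathbb{N}$. QED.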

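Theorem 8: Commutativity of multiplication: $ab = ba$.

Proof: Base case: By axiom 8 and theorem 5, $a \cdot 1 = a = 1 \cdot a$.

- Inductive hypothesis: Suppose that, for $b \in \mathbb{N}$, $ab = ba$.

- Inductive step: By axiom 9, $ab' = ab + a$.

- By the inductive hypothesis, $ab + a = ba + a$.

- By theorem 6, $ba + a = b'a$.

- By induction, $ab = ba$ for all $b \in \mathbb{N}$. QED.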

Theorem 9: Right-distributivity of multiplication over addition: $(a + b)c = ac + bc$.

Proof: By theorems 8 and 4, $(a + b)c = c(a + b) = ca + cb$.

- By theorem 8, $ca + cb = ac + bc$. QED.

The integers

The set of integers can be constructed from ordered pairs $(a, b)$ of natural numbers. We define an equivalence relation $\sim$ on the set of all such ordered pairs such that

$(a, b) \sim (c, d) \iff a + d = b + c.$

Then the set of rational integers $\mathbb{Z}$ is the set of all equivalence classes of such ordered pairs. We will denote the equivalence class of which some pair $(a, b)$ is a member with the notation $[(a, b)]$. Then, for any natural numbers $a$ and $b$, $[(a, b)]$ represents a rational integer (intuitively, the integer $a - b$).

Integer addition

Definition: We define addition for the integers as follows: $[(a, b)] + [(c, d)] = [(a + c, b + d)]$.

Using this definition and the properties of the natural numbers, one can prove that integer addition is well defined (independent of the chosen representatives), associative, and commutative.

Integer multiplication

Definition: Multiplication for the integers, like addition, can be defined with a single axiom: $[(a, b)] \cdot [(c, d)] = [(ac + bd, ad + bc)]$.

Again, using this definition and the previously-proven properties of natural numbers, it can be shown that integer multiplication is commutative and associative, and furthermore that it is both left- and right-distributive with respect to integer addition.
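The pair construction can be tried out concretely. The sketch below is illustrative only. Python's positive ints stand in for $\mathbb{N}$, and the helper names (`equivalent`, `int_add`, `int_mul`, `canonical`) are our own. The functions follow the defining formulas above, and `canonical` picks a standard representative of a class.

```python
# Integers as classes [(a, b)] of pairs of positive naturals; Python ints
# stand in for the natural numbers here (an assumption for illustration).


def equivalent(p, q):
    """(a, b) ~ (c, d) iff a + d = b + c."""
    (a, b), (c, d) = p, q
    return a + d == b + c


def int_add(p, q):
    """[(a, b)] + [(c, d)] = [(a + c, b + d)]."""
    (a, b), (c, d) = p, q
    return (a + c, b + d)


def int_mul(p, q):
    """[(a, b)] * [(c, d)] = [(ac + bd, ad + bc)]."""
    (a, b), (c, d) = p, q
    return (a * c + b * d, a * d + b * c)


def canonical(p):
    """A standard representative of [(a, b)]: shift both entries until one equals 1."""
    a, b = p
    m = min(a, b) - 1
    return (a - m, b - m)


# (3, 5) stands for -2 and (4, 1) for 3; their product stands for -6.
print(canonical(int_mul((3, 5), (4, 1))))  # (1, 7)
```

Replacing $(3, 5)$ with the equivalent pair $(1, 3)$ yields an equivalent product. This is an instance of the well-definedness that must be proven in general.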

Group Theory/Group

In this section we will begin to make use of the definitions we made in the section about binary operations. In the next few sections, we will study a specific type of binary structure called a group. First, however, we need some preliminary work involving a less restrictive type of binary structure.

Monoids

Definition 1: A monoid is a binary structure $\langle M, * \rangle$ satisfying the following properties:

- (i) $(a * b) * c = a * (b * c)$ for all $a, b, c \in M$. This property is called associativity.

- (ii) There exists an identity element $e \in M$ such that $e * a = a * e = a$ for all $a \in M$.

Now that we have our axioms in place, we are faced with a pressing question: what is our first theorem going to be? Since the first few theorems do not depend on one another, we simply have to make an arbitrary choice. We choose the following:

Theorem 2: The identity element of $M$ is unique.

Proof: Assume $e$ and $e'$ are both identity elements of $M$. Then both satisfy condition (ii) in the definition above. In particular, $e = e * e' = e'$, proving the theorem. ∎

This theorem will turn out to be of fundamental importance later when we define groups.

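Theorem 3: If $a_1, a_2, \ldots, a_n$ are elements of $M$ for some $n \in \mathbb{N}$, then the product $a_1 * a_2 * \cdots * a_n$ is unambiguous.

Proof: We can prove this by induction. The cases $n = 1$ and $n = 2$ are trivially true. Assume that the statement is true for all products of fewer than $n$ factors. Any way of inserting parentheses into the product $a_1 * \cdots * a_n$ has an outermost multiplication, so it "partitions" the product as $(a_1 * \cdots * a_k) * (a_{k+1} * \cdots * a_n)$ for some $1 \le k < n$. Both parts of the product have fewer than $n$ factors and are thus unambiguous. The same is true if we consider a different "partition", $(a_1 * \cdots * a_l) * (a_{l+1} * \cdots * a_n)$, where $k < l < n$. Thus, we can unambiguously compute the products $A = a_1 * \cdots * a_k$, $B = a_{k+1} * \cdots * a_l$, and $C = a_{l+1} * \cdots * a_n$, and rewrite the two "partitions" as $A * (B * C)$ and $(A * B) * C$. These are equal by the associativity axiom (i) in the definition of a monoid. ∎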

This is about as far as we are going to take the idea of a monoid. We now proceed to groups.

Groups

Definition 4: A group is a monoid $\langle G, * \rangle$ that also satisfies the property

- (iii) For each $a \in G$, there exists an element $a' \in G$ such that $a * a' = a' * a = e$.

Such an element $a'$ is called an inverse of $a$. When the operation on the group is understood, we will conveniently refer to $\langle G, * \rangle$ as $G$. In addition, we will gradually stop using the symbol $*$ for multiplication when we are dealing with only one group, or when it is understood which operation is meant, instead writing products by juxtaposition, $ab = a * b$.

Remark 5: Notice how this definition depends on Theorem 2 to be well defined. Therefore, we could not state this definition before at least proving uniqueness of the identity element. Alternatively, we could have included the existence of a distinguished identity element in the definition. In the end, the two approaches are logically equivalent.

Also note that to show that a monoid is a group, it is sufficient to show that every element has a right-inverse (or, alternatively, that every element has a left-inverse). Let $a \in G$, let $b$ be a right-inverse of $a$, and let $c$ be a right-inverse of $b$, so that $ab = e$ and $bc = e$. Then, $ba = (ba)e = (ba)(bc) = b(ab)c = bec = bc = e$. Thus, any right-inverse is also a left-inverse, or $ab = ba = e$. A similar argument can be made for left-inverses.

Theorem 6: The inverse of any element is unique.

Proof: Let $a \in G$ and let $b$ and $c$ be inverses of $a$. Then, $b = be = b(ac) = (ba)c = ec = c$. ∎

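Thus, we can speak of the inverse of an element, and we will denote the inverse of $a$ by $a^{-1}$. We also observe this nice property:

Corollary 7: $(a^{-1})^{-1} = a$.

Proof: This follows immediately since $aa^{-1} = a^{-1}a = e$, so $a$ is an inverse of $a^{-1}$, and inverses are unique by Theorem 6. ∎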

The next couple of theorems may appear obvious, but in the interest of keeping matters fairly rigorous, we allow ourselves to state and prove seemingly trivial statements.

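Theorem 8: Let $G$ be a group and $a, b \in G$. Then $(ab)^{-1} = b^{-1}a^{-1}$.

Proof: The result follows by direct computation: $(ab)(b^{-1}a^{-1}) = a(bb^{-1})a^{-1} = aea^{-1} = aa^{-1} = e$, and similarly $(b^{-1}a^{-1})(ab) = e$. ∎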

Theorem 9: Let $a, b, c \in G$. Then, $ab = ac$ if and only if $b = c$. Also, $ba = ca$ if and only if $b = c$.

Proof: We will prove the first assertion; the proof of the second is identical. Assume $ab = ac$. Then, multiply on the left by $a^{-1}$ to obtain $b = c$. Conversely, assume $b = c$. Then, multiply on the left by $a$ to obtain $ab = ac$. ∎

Theorem 10: The equation $ax = b$ has a unique solution $x$ in $G$ for any $a, b \in G$.

Proof: We must show existence and uniqueness. For existence, observe that $x = a^{-1}b$ is a solution in $G$, since $a(a^{-1}b) = b$. For uniqueness, multiply both sides of the equation $ax = b$ on the left by $a^{-1}$ to obtain $x = a^{-1}b$, so this is the only solution. ∎

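Notation: Let $G$ be a group and $a \in G$. We will often encounter a product $aa \cdots a$ of copies of $a$. For these situations, we introduce the shorthand notation $a^n = aa \cdots a$ ($n$ factors) if $n$ is positive, and $a^n = (a^{-1})^{-n}$ if $n$ is negative; we also set $a^0 = e$. Under these rules, it is straightforward to show that $a^m a^n = a^{m+n}$ and $(a^m)^n = a^{mn}$ for all $m, n \in \mathbb{Z}$.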

Definition 11: (i) The order of a group $G$, denoted $|G|$ or $\operatorname{ord}(G)$, is the number of elements of $G$ if $G$ is finite. Otherwise $G$ is said to have infinite order.

(ii) The order of an element $a$ of $G$, similarly denoted $|a|$ or $\operatorname{ord}(a)$, is defined as the lowest positive integer $n$ such that $a^n = e$, if such an integer exists. Otherwise $a$ is said to have infinite order.

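Theorem 12: Let $G$ be a group and $a, b \in G$. Then $|ab| = |ba|$.

Proof: Let the order of $ab$ be $n$. Then $(ab)^n = e$, $n$ being the smallest positive integer such that this is true. Now, multiply by $b$ on the left and by $b^{-1}$ on the right to obtain $b(ab)^n b^{-1} = beb^{-1} = e$. Since $b(ab)^n b^{-1} = (ba)^n bb^{-1} = (ba)^n$, this implies $(ba)^n = e$. Thus, we have shown that $|ba| \le |ab|$. A similar argument in the other direction shows that $|ab| \le |ba|$. Thus, we must have $|ab| = |ba|$, proving the theorem. (The same argument shows that if one of $ab$ and $ba$ has finite order, so does the other; hence either both orders are infinite or both are finite.) ∎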
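Theorem 12 can be checked by brute force in a small non-abelian group. The sketch below assumes an encoding of our own choosing. Permutations of $\{0, 1, 2\}$ are stored as tuples, and the hypothetical helper `compose(s, t)` applies `t` first and then `s`. `order` computes the order of an element by repeated multiplication.

```python
from itertools import permutations


def compose(s, t):
    """Product st of two permutations of {0, ..., n-1}: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


def order(g, identity, op):
    """Smallest k >= 1 with g^k = identity (loops forever if g has infinite order)."""
    k, power = 1, g
    while power != identity:
        power = op(power, g)
        k += 1
    return k


S3 = list(permutations(range(3)))   # all six permutations of {0, 1, 2}
e = (0, 1, 2)

a, b = (1, 0, 2), (0, 2, 1)         # two transpositions that do not commute
print(compose(a, b) != compose(b, a))                                      # True
print(order(compose(a, b), e, compose), order(compose(b, a), e, compose))  # 3 3
```

Here $ab \neq ba$, yet both products have order 3, as the theorem predicts.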

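Corollary 13: Let $G$ be a group with $a_1, a_2, \ldots, a_n \in G$, and let $2 \le k \le n$. Then $|a_1 a_2 \cdots a_n| = |a_k \cdots a_n a_1 \cdots a_{k-1}|$.

Proof: By Theorem 12, applied to $a = a_1 \cdots a_{k-1}$ and $b = a_k \cdots a_n$, we have that $|(a_1 \cdots a_{k-1})(a_k \cdots a_n)| = |(a_k \cdots a_n)(a_1 \cdots a_{k-1})|$. ∎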

Theorem 14: An element of a group not equal to the identity has order 2 if and only if it is its own inverse.

Proof: Let $a$ have order 2 in the group $G$. Then, $aa = a^2 = e$, so by definition, $a = a^{-1}$. Now, assume $a = a^{-1}$ and $a \neq e$. Then $a^2 = aa^{-1} = e$. Since $a^1 = a \neq e$, 2 is the smallest positive integer satisfying this property, so $a$ has order 2. ∎

Definition 15: Let $G$ be a group such that for all $a, b \in G$, $ab = ba$. Then, $G$ is said to be commutative or abelian.

When we are dealing with an abelian group, we will sometimes use so-called additive notation, writing $+$ for our binary operation, $0$ for the identity and $-a$ for the inverse of $a$, and replacing $a^n$ with $na$. In such cases, we only need to keep track of the fact that $n$ is an integer while $a$ is a group element. We will also talk about the sum of elements rather than their product.

Abelian groups are in many ways nicer objects than general groups. They also admit additional structure that general groups lack. We will see more about this later when we talk about structure-preserving maps between groups.

Definition 16: Let $G$ be a group. A subset $S \subseteq G$ is called a generating set for $G$ if every element in $G$ can be written as a finite product of elements of $S$ and their inverses. We write $G = \langle S \rangle$.

Now that we have our definitions in place and have a small arsenal of theorems, let us look at three (really, two and a half) important families of groups.

Multiplication tables

We will now show a convenient way of representing a group structure, or more precisely, the multiplication rule on a set. This notion is not limited to groups, but can be used for any structure with any number of operations. As an example, we give the group multiplication table for the Klein 4-group $V = \{e, a, b, c\}$. The multiplication table is structured such that $xy$ is represented by the element in the "$(x, y)$-position", that is, in the intersection of the $x$-row and the $y$-column.

| · | e | a | b | c |
|---|---|---|---|---|
| e | e | a | b | c |
| a | a | e | c | b |
| b | b | c | e | a |
| c | c | b | a | e |

This next table is for the group of integers under addition modulo 4, called $\mathbb{Z}_4$. We will learn more about this group later.

| + | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 0 | 1 | 2 | 3 |
| 1 | 1 | 2 | 3 | 0 |
| 2 | 2 | 3 | 0 | 1 |
| 3 | 3 | 0 | 1 | 2 |

We can clearly see that $V$ and $\mathbb{Z}_4$ are "different" groups. There is no way to relabel the elements such that the multiplication tables coincide: every element $x$ of $V$ satisfies $x^2 = e$, whereas $1 + 1 = 2 \neq 0$ in $\mathbb{Z}_4$. There is a notion of "equality" of groups that we have not yet made precise. We will get back to this in the section about group homomorphisms.
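Relabeling elements never changes the order of an element, so comparing element orders is one mechanical way to confirm that the two tables cannot be made to coincide. In this sketch we model $V$ (our own choice) as pairs of bits under componentwise addition modulo 2. The helper names are hypothetical.

```python
# The Klein 4-group modelled as pairs of bits under componentwise addition
# mod 2 (our choice of model), and Z_4 as {0, 1, 2, 3} under addition mod 4.
klein = [(0, 0), (1, 0), (0, 1), (1, 1)]
z4 = [0, 1, 2, 3]


def klein_op(x, y):
    return ((x[0] + y[0]) % 2, (x[1] + y[1]) % 2)


def z4_op(x, y):
    return (x + y) % 4


def table(elements, op):
    """Row x, column y holds the product xy."""
    return [[op(x, y) for y in elements] for x in elements]


def element_orders(elements, op, identity):
    """Sorted list of the orders of all elements; unchanged by any relabeling."""
    orders = []
    for g in elements:
        k, power = 1, g
        while power != identity:
            power, k = op(power, g), k + 1
        orders.append(k)
    return sorted(orders)


print(element_orders(klein, klein_op, (0, 0)))  # [1, 2, 2, 2]
print(element_orders(z4, z4_op, 0))             # [1, 2, 4, 4]
```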

The reader might have noticed that each row in the group table features each element of the group exactly once. Indeed, assume that an element appeared twice in the row of $a$ in the multiplication table for $G$. Then there would exist $b \neq c$ in $G$ such that $ab = ac$, implying $b = c$ by Theorem 9 and contradicting the assumption that the element appears twice. We state this as a theorem:

Theorem 17: Let $G$ be a group and $a \in G$. Then $aG = \{ag : g \in G\} = G$.

Using this, the reader can use a multiplication table to find all groups of order 3. They will find that there is only one possibility, up to relabeling of the elements.
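The search suggested above can be automated. The sketch makes assumptions that are ours, not the text's. Elements are labelled $0, \ldots, n-1$, with $0$ as the identity. By Theorem 17, each row is a rearrangement of the elements. Each candidate table is then checked for associativity and right inverses, which suffices by the remark following Definition 4. The function names are hypothetical.

```python
from itertools import permutations, product


def is_group(tab):
    """Associativity plus right inverses, for a table on {0, ..., n-1} whose identity is 0."""
    n = len(tab)
    associative = all(tab[tab[a][b]][c] == tab[a][tab[b][c]]
                      for a in range(n) for b in range(n) for c in range(n))
    right_inverses = all(0 in tab[a] for a in range(n))
    return associative and right_inverses


def candidate_rows(i, n):
    """Rows allowed by Theorem 17: a rearrangement of 0..n-1 starting with i (as i * 0 = i)."""
    others = [x for x in range(n) if x != i]
    return [[i] + list(p) for p in permutations(others)]


def groups_of_order(n):
    """All group tables on {0, ..., n-1} in which 0 is the identity (brute force)."""
    first_row = list(range(n))                  # 0 * j = j
    found = []
    for rows in product(*(candidate_rows(i, n) for i in range(1, n))):
        tab = [first_row] + [list(r) for r in rows]
        if is_group(tab):
            found.append(tab)
    return found


print(groups_of_order(3))   # [[[0, 1, 2], [1, 2, 0], [2, 0, 1]]]
```

For order 3 exactly one table survives. For order 4 the same search finds four labelled tables: three are relabelings of $\mathbb{Z}_4$ and one is the Klein 4-group.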

Problems

Problem 1: Show that $M_{m \times n}(\mathbb{R})$, the set of $m \times n$ matrices with real entries, forms a group under the operation of matrix addition.

Problem 2: Let $V, W$ be vector spaces and $L(V, W)$ be the set of linear maps from $V$ to $W$. Show that $L(V, W)$ forms an abelian group by defining $(f + g)(v) = f(v) + g(v)$ for $f, g \in L(V, W)$ and $v \in V$.

Problem 3: Let $Q$ be generated by the elements $e, m, i, j, k$ such that $e$ is the identity, $m^2 = e$, and $i^2 = j^2 = k^2 = ijk = m$. Show that $Q$ forms a group. Are any of the conditions above redundant? When the identity $e$ is written $1$ and $m = -1$, then $Q$ is called the quaternion group. The elements $i, j, k$ are imaginary units: using $1$ and one of these as a basis for a number plane results in the complex number plane.

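Problem 4: Let $X$ be any nonempty set, let $G$ be a group, and consider the set $G^X$. Show that $G^X$ has a natural group structure.

$G^X$ is the set of functions $f: X \to G$. Let $f, g \in G^X$ and define the binary operation $(fg)(x) = f(x)g(x)$ for all $x \in X$. Associativity holds pointwise because it holds in $G$. Then $G^X$ is a group with identity $e_{G^X}$ such that $e_{G^X}(x) = e$ for all $x \in X$, and inverses $f^{-1}$ given by $f^{-1}(x) = (f(x))^{-1}$ for all $x \in X$. ∎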

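Problem 5: Let $G$ be a group with two distinct elements $a$ and $b$, both of order 2. Show that $G$ has a third element of order 2.

We consider first the case where $ab = ba$. Then $(ab)^2 = abab = a^2b^2 = e$, and $ab \neq e$ because $b \neq a = a^{-1}$, so $ab$ has order 2. Moreover, $ab$ is distinct from $a$ and $b$, since $ab = a$ would force $b = e$ and $ab = b$ would force $a = e$. If $ab \neq ba$, then $(aba)^2 = ab(aa)ba = abba = aa = e$, and $aba \neq e$ (otherwise $b = a^{-1}a^{-1} = e$), so $aba$ has order 2. Moreover, $aba$ is distinct from $a$ (otherwise $ba = e$, so $b = a^{-1} = a$) and from $b$ (otherwise $ab = ba^{-1} = ba$). ∎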

Problem 6: Let $G$ be a finite abelian group with one and only one element $a$ of order 2. Show that $\prod_{g \in G} g = a$.

For a general group, the product of two elements depends on the order in which we multiply them, so the stated product need not be well defined. In the quaternion group of Problem 3, which has exactly one element of order 2, different orderings of the eight factors give different products. Since $G$ is abelian, however, the product does not depend on the order of the factors.

Since every element of $G$ appears once in the product, for every element $g \in G$, the inverse $g^{-1}$ also appears somewhere in the product. By Theorem 14, $g \neq g^{-1}$ unless $g = e$ or $g = a$. Rearranging the factors, we may therefore pair each $g \notin \{e, a\}$ with its inverse, and each such pair contributes $gg^{-1} = e$. The only remaining factors are $e$ and $a$, so the product equals $ea = a$. ∎

Group Theory/Subgroup

Subgroups

We are about to witness a universal aspect of mathematics. That is, whenever we have any sort of structure, we ask ourselves: does it admit substructures? In the case of groups, the answer is yes, as we will immediately see.

Definition 1: Let $G$ be a group. Then, if $H$ is a subset of $G$ which is a group in its own right under the same operation as $G$, we call $H$ a subgroup of $G$ and write $H \le G$.

Example 2: Any nontrivial group $G$ has at least two subgroups: $G$ itself and the trivial group $\{e\}$. These are called the improper and trivial subgroups of $G$, respectively.

Naturally, we would like to have a method of determining whether a given subset of a group is a subgroup. The following two theorems provide this. Since $H$ automatically inherits associativity from $G$, we only need to check closure under the operation, the presence of the identity, and closure under taking inverses.

Theorem 3: A nonempty subset $H$ of a group $G$ is a subgroup if and only if

- (i) $H$ is closed under the operation on $G$. That is, if $a, b \in H$, then $ab \in H$,

- (ii) $e \in H$,

- (iii) $H$ is closed under the taking of inverses. That is, if $a \in H$, then $a^{-1} \in H$.

Proof: The left implication follows directly from the group axioms and the definition of subgroup. For the right implication, we have to verify each group axiom for $H$. Firstly, since $H$ is closed, it is a binary structure, as required, and as mentioned, $H$ inherits associativity from $G$. In addition, $H$ has the identity element and inverses, so $H$ is a group, and we are done. ∎

There is, however, a more efficient method: the three criteria listed above can be condensed into a single one.

Theorem 4: Let $G$ be a group. Then a nonempty subset $H \subseteq G$ is a subgroup if and only if $ab^{-1} \in H$ for all $a, b \in H$.

Proof: Again, the left implication is immediate. For the right implication, we have to verify (i)-(iii) in the previous theorem. First, since $H$ is nonempty, there is some $a \in H$. Then, letting $b = a$, we obtain $aa^{-1} = e \in H$, taking care of (ii). Now, since $e, a \in H$, we have $ea^{-1} = a^{-1} \in H$, so $H$ is closed under taking of inverses, satisfying (iii). Lastly, assume $a, b \in H$. Then, since $b^{-1} \in H$, we obtain $a(b^{-1})^{-1} = ab \in H$, so $H$ is closed under the operation of $G$, satisfying (i), and we are done. ∎
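The one-step test is easy to run on a small abelian group. In this sketch we work in $\mathbb{Z}_n$ with additive notation, where $ab^{-1}$ becomes $a - b$. The function names are hypothetical, and the exhaustive search is only practical for small $n$.

```python
from itertools import combinations


def is_subgroup(subset, n):
    """One-step test of Theorem 4 in Z_n, written additively: ab^{-1} becomes a - b."""
    s = set(subset)
    return bool(s) and all((a - b) % n in s for a in s for b in s)


def all_subgroups(n):
    """Brute force over all nonempty subsets of Z_n (fine for small n only)."""
    return [set(c) for r in range(1, n + 1)
            for c in combinations(range(n), r) if is_subgroup(c, n)]


print(is_subgroup({0, 4, 8}, 12))   # True
print(is_subgroup({0, 3, 6}, 12))   # False: 3 - 6 = 9 is missing
print(len(all_subgroups(12)))       # 6
```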

All right, so now we know how to recognize a subgroup when we are presented with one. Let's take a look at how to find subgroups of a given group. The next theorem essentially solves this problem.

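Theorem 5: Let $G$ be a group and $a \in G$. Then the subset $\{a^n : n \in \mathbb{Z}\}$ is a subgroup of $G$, denoted $\langle a \rangle$ and called the subgroup generated by $a$. In addition, this is the smallest subgroup containing $a$ in the sense that if $H$ is a subgroup and $a \in H$, then $\langle a \rangle \le H$.

Proof: First we prove that $\langle a \rangle$ is a subgroup. It is nonempty, since $e = a^0 \in \langle a \rangle$. Note that if $x, y \in \langle a \rangle$, then there exist integers $m, n$ such that $x = a^m$ and $y = a^n$. Then, we observe that $xy^{-1} = a^m a^{-n} = a^{m-n} \in \langle a \rangle$, so $\langle a \rangle$ is a subgroup of $G$ by Theorem 4, as claimed. To show that it is the smallest subgroup containing $a$, observe that if $H$ is a subgroup containing $a$, then by closure under products and inverses, $a^n \in H$ for all $n \in \mathbb{Z}$. In other words, $\langle a \rangle \subseteq H$. Then $\langle a \rangle \le H$ automatically since $\langle a \rangle$ is a subgroup of $G$. ∎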

Theorem 6: Let $H$ and $K$ be subgroups of a group $G$. Then $H \cap K$ is also a subgroup of $G$.

Proof: Since both $H$ and $K$ contain the identity element, their intersection is nonempty. Let $a, b \in H \cap K$. Then $a, b \in H$ and $a, b \in K$. Since both $H$ and $K$ are subgroups, we have $ab^{-1} \in H$ and $ab^{-1} \in K$. But then $ab^{-1} \in H \cap K$. Thus $H \cap K$ is a subgroup of $G$ by Theorem 4. ∎

Theorem 6 can easily be generalized to apply to any arbitrary intersection $\bigcap_{i \in I} H_i$, where $H_i$ is a subgroup for every $i$ in an arbitrary index set $I$. The reasoning is identical, and the proof of this generalization is left to the reader to formalize.

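Definition 7: Let $G$ be a group, $H$ be a subgroup of $G$, and $g \in G$. Then $gH = \{gh : h \in H\}$ is called a left coset of $H$. The set of all left cosets of $H$ in $G$ is denoted $G/H$. Likewise, $Hg = \{hg : h \in H\}$ is called a right coset, and the set of all right cosets of $H$ in $G$ is denoted $H \backslash G$.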

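Lemma 8: Let $G$ be a group and $H$ be a subgroup of $G$. Then every left coset $gH$ has the same number of elements as $H$.

Proof: Let $g \in G$ and define the function $f: H \to gH$ by $f(h) = gh$. We show that $f$ is a bijection. Firstly, if $f(h_1) = f(h_2)$, then $gh_1 = gh_2$, so $h_1 = h_2$ by left cancellation; hence $f$ is injective. Secondly, let $x \in gH$. Then $x = gh$ for some $h \in H$, and $f(h) = x$, so $f$ is surjective and a bijection. It follows that $|gH| = |H|$, as was to be shown. ∎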

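Lemma 9: The relation $\sim$ on $G$ defined by $a \sim b \iff a^{-1}b \in H$ is an equivalence relation.

Proof: Reflexivity and symmetry are immediate: $a^{-1}a = e \in H$, and if $a^{-1}b \in H$, then $(a^{-1}b)^{-1} = b^{-1}a \in H$. For transitivity, let $a \sim b$ and $b \sim c$. Then $(a^{-1}b)(b^{-1}c) = a^{-1}c \in H$, so $a \sim c$ and we are done. ∎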

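Lemma 10: Let $G$ be a group and $H$ be a subgroup of $G$. Then the left cosets of $H$ partition $G$.

Proof: Note that $a \sim b$ if and only if $a^{-1}b = h$ for some $h \in H$, that is, if and only if $b = ah \in aH$. Thus the equivalence class of $a$ is exactly the left coset $aH$. Since $\sim$ is an equivalence relation and its equivalence classes are the left cosets of $H$, these automatically partition $G$. ∎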

Theorem 11 (Lagrange's theorem): Let $G$ be a finite group and $H$ be a subgroup of $G$. Then $|G| = |G/H| \cdot |H|$; in particular, $|H|$ divides $|G|$.

Proof: By the previous lemmas, each left coset has the same number of elements $|H|$, and every $g \in G$ is included in a unique left coset $gH$. In other words, $G$ is partitioned by the $|G/H|$ left cosets, each contributing an equal number of elements $|H|$. The theorem follows. ∎

Note 12: Each of the previous results has an analogous version for right cosets, the proof of which uses identical reasoning. Stating these results and writing out their proofs are left as an exercise to the reader.

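Corollary 13: Let $G$ be a group and $H$ be a subgroup of $G$. Then right and left cosets of $H$ have the same number of elements.

Proof: Since $H = eH = He$ is both a left and a right coset, Lemma 8 and its right coset counterpart immediately give $|gH| = |H| = |Hg|$ for all $g \in G$. ∎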

Corollary 14: Let $G$ be a finite group and $H$ be a subgroup of $G$. Then the number of left cosets of $H$ in $G$ and the number of right cosets of $H$ in $G$ are equal.

Proof: By Lagrange's theorem and its right coset counterpart, we have $|G/H| \cdot |H| = |G| = |H \backslash G| \cdot |H|$. We immediately obtain $|G/H| = |H \backslash G|$, as was to be shown. ∎
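A small experiment makes Lagrange's theorem and Corollary 14 concrete. It also shows that the left cosets can differ from the right cosets even though there are equally many. We assume an encoding of our own: $S_3$ acts on $\{0, 1, 2\}$ as tuples, $H$ is generated by one transposition, and `compose` is a hypothetical helper that applies its second argument first.

```python
from itertools import permutations


def compose(s, t):
    """Product st: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


G = list(permutations(range(3)))      # S_3 acting on {0, 1, 2}
H = [(0, 1, 2), (1, 0, 2)]            # the identity and the transposition of 0 and 1

left_cosets = {frozenset(compose(g, h) for h in H) for g in G}    # the cosets gH
right_cosets = {frozenset(compose(h, g) for h in H) for g in G}   # the cosets Hg

print(len(G) == len(left_cosets) * len(H))   # True: 6 = 3 * 2
print(left_cosets == right_cosets)           # False
```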

Now that we have developed a reasonable body of theory, let us look at our first important family of groups, namely the cyclic groups.

Problems

Problem 1 (Matrix groups): Show that:

- i) The set $GL_n(\mathbb{R})$ of invertible $n \times n$ real matrices forms a group under matrix multiplication. This group is called the general linear group of degree $n$.

- ii) The set $O(n)$ of orthogonal $n \times n$ matrices is a subgroup of $GL_n(\mathbb{R})$. This group is called the orthogonal group of degree $n$.

- iii) The set $SO(n) = \{A \in O(n) : \det A = 1\}$ is a subgroup of $O(n)$. This group is called the special orthogonal group of degree $n$.

- iv) The set $U(n)$ of unitary $n \times n$ matrices is a subgroup of $GL_n(\mathbb{C})$. This is called the unitary group of degree $n$.

- v) The set $SU(n) = \{A \in U(n) : \det A = 1\}$ is a subgroup of $U(n)$. This is called the special unitary group of degree $n$.

Problem 2: Show that if $H, K$ are subgroups of $G$, then $H \cup K$ is a subgroup of $G$ if and only if $H \subseteq K$ or $K \subseteq H$.

Group Theory/Cyclic groups

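- A cyclic group generated by $g$ is $\langle g \rangle = \{g^n : n \in \mathbb{Z}\}$,

- where $g^0 = e$, $g^{n+1} = g^n g$ and $g^{-n} = (g^{-1})^n$ for $n \ge 0$.

- Induction shows: $g^m g^n = g^{m+n}$ for all $m, n \in \mathbb{Z}$.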

A cyclic group of order n is isomorphic to the integers modulo n with addition

Theorem

Let $C_m$ be a cyclic group of order $m$ generated by $g$, with $g^m = e$.

Let $\mathbb{Z}_m$ be the group of integers modulo $m$ with addition.

- $C_m$ is isomorphic to $\mathbb{Z}_m$.

Lemma

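Let $n$ be the minimal positive integer such that $g^n = e$. Then $g^i = g^j$ if and only if $i \equiv j \pmod{n}$.

- Let $i > j$. Let $i - j = sn + r$ where $0 \le r < n$ and $s, r, n$ are all integers.

1. $g^i = g^j \iff g^{i-j} = e$.
2. $g^{i-j} = (g^n)^s g^r = g^r$, as $i - j = sn + r$ and $g^n = e$.
3. Hence $g^i = g^j \iff g^r = e$.
4. $g^r = e \iff r = 0$, as $n$ is the minimal positive integer such that $g^n = e$, and $0 \le r < n$.
5. $r = 0 \iff n \mid (i - j) \iff i \equiv j \pmod{n}$.

The case $i < j$ follows by exchanging $i$ and $j$, and the case $i = j$ is trivial.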

Proof

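- Define $f: \mathbb{Z}_m \to C_m$ by $f([k]) = g^k$.

- The lemma (with $n = m$, since $C_m$ has order $m$) shows $f$ is well defined (only has one output for each input): if $[k] = [l]$, then $k \equiv l \pmod{m}$, so $g^k = g^l$.

- $f$ is a homomorphism: $f([a] + [b]) = f([a + b]) = g^{a+b} = g^a g^b = f([a]) f([b])$.

- $f$ is injective by the lemma: if $g^k = g^l$, then $k \equiv l \pmod{m}$, so $[k] = [l]$.

- $f$ is surjective, as both $\mathbb{Z}_m$ and $C_m$ have $m$ elements and $f$ is injective.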
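For a concrete instance of this proof, we choose as $C_m$ the rotations of $\{0, \ldots, m-1\}$ generated by $i \mapsto i + 1 \pmod m$. This is our own model. The sketch checks that $f([k]) = g^k$ is a bijective homomorphism for $m = 6$. `compose` and `power` are hypothetical helpers.

```python
def compose(s, t):
    """Product st of permutations: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


def power(g, k):
    """g^k for k >= 0, computed as the product of k copies of g."""
    result = tuple(range(len(g)))
    for _ in range(k):
        result = compose(result, g)
    return result


m = 6
g = tuple((i + 1) % m for i in range(m))   # generator of C_m: i -> i + 1 (mod m)
f = {k: power(g, k) for k in range(m)}     # f([k]) = g^k on representatives 0..m-1

is_hom = all(f[(a + b) % m] == compose(f[a], f[b]) for a in range(m) for b in range(m))
print(is_hom, len(set(f.values())) == m)   # True True
```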

Cyclic groups

In the previous section about subgroups we saw that if $G$ is a group with $a \in G$, then the set of powers of $a$, $\langle a \rangle = \{a^n : n \in \mathbb{Z}\}$, constitutes a subgroup of $G$, called the cyclic subgroup generated by $a$. In this section, we will generalize this concept, and in the process, obtain an important family of groups which is very rich in structure.

Definition 1: Let $G$ be a group with an element $a \in G$ such that $G = \langle a \rangle$. Then $G$ is called a cyclic group, and $a$ is called a generator of $G$. Alternatively, $a$ is said to generate $G$. If there exists a positive integer $n$ such that $a^n = e$, and $n$ is the smallest positive such integer, $G$ is denoted $C_n$, the cyclic group of order $n$. If no such integer exists, $G$ is denoted $C_\infty$, the infinite cyclic group.

The infinite cyclic group can also be denoted $F_1$, the free group with one generator. This is foreshadowing for a future section and can be ignored for now.

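Theorem 2: Any cyclic group is abelian.

Proof: Let $G$ be a cyclic group with generator $a$. If $x, y \in G$, then $x = a^m$ and $y = a^n$ for some $m, n \in \mathbb{Z}$. To show commutativity, observe that $xy = a^m a^n = a^{m+n} = a^{n+m} = a^n a^m = yx$, and we are done. ∎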

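Theorem 3: Any subgroup of a cyclic group is cyclic.

Proof: Let $G$ be a cyclic group with generator $a$, and let $H \le G$. If $H = \{e\}$, then $H = \langle e \rangle$ is cyclic, so assume $H \neq \{e\}$. Since $H \subseteq G$, every element of $H$ equals $a^n$ for some $n \in \mathbb{Z}$, and since $H$ is closed under inverses, it contains $a^n$ for some positive $n$. We claim that if $m$ is the lowest positive integer such that $a^m \in H$, then $H = \langle a^m \rangle$. To see this, let $a^n \in H$. Then $n = qm + r$ for unique $q, r \in \mathbb{Z}$ with $0 \le r < m$. Since $H$ is a subgroup and $a^m \in H$, we must have $a^r = a^n (a^m)^{-q} \in H$. Now, assume that $r > 0$. Then $a^r \in H$ with $0 < r < m$ contradicts our assumption that $m$ is the least positive integer such that $a^m \in H$. Therefore, $r = 0$. Consequently, $a^n = (a^m)^q$, so $H = \langle a^m \rangle$ and $H$ is cyclic, as was to be shown. ∎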

As the alert reader will have noticed, the preceding proof invoked the notion of division with remainder which should be familiar from number theory. Our treatment of cyclic groups will have close ties with notions from number theory. This is no coincidence, as the next few statements will show. Indeed, an alternative title for this section could have been "Modular arithmetic and integer ideals". The notion of an ideal may not yet be familiar to the reader, who is asked to wait patiently until the chapter about rings.

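Theorem 4: Let $\mathbb{Z}_n = \{0, 1, \ldots, n - 1\}$ with addition defined modulo $n$. That is, $a +_n b = r$, where $a + b = qn + r$ with $q \in \mathbb{Z}$ and $0 \le r < n$. We denote this operation by $+_n$. Then $\langle \mathbb{Z}_n, +_n \rangle$ is a cyclic group.

Proof: We must first show that $\mathbb{Z}_n$ is a group, then find a generator. We verify the group axioms. Associativity is inherited from the integers. The element $0$ is an identity element with respect to $+_n$. An inverse of $a \in \mathbb{Z}_n$ is an element $b$ such that $a +_n b = 0$. Thus the inverse of $0$ is $0$, and for $a \neq 0$ we may take $b = n - a$. Then $0 < n - a < n$, so $n - a \in \mathbb{Z}_n$, and $\mathbb{Z}_n$ is a group. Now, since every $k \in \mathbb{Z}_n$ with $k \neq 0$ equals $1 +_n 1 +_n \cdots +_n 1$ ($k$ terms), and $0$ is the sum of $n$ such terms, $1$ generates $\mathbb{Z}_n$ and so $\mathbb{Z}_n$ is cyclic. ∎

Unless we explicitly state otherwise, by $\mathbb{Z}_n$ we will always refer to the cyclic group $\langle \mathbb{Z}_n, +_n \rangle$. Since the argument for the generator of $\mathbb{Z}_n$ can be made valid for any integer (using the inverse $-1$ for negative integers), this shows that $\mathbb{Z}$ also is cyclic with the generator $1$.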

Theorem 5: An element $k \in \mathbb{Z}_n$ is a generator if and only if $\gcd(k, n) = 1$.

Proof: We will need the following theorem from number theory: If $a, b$ are integers, then there exist integers $x, y$ such that $ax + by = 1$ if and only if $\gcd(a, b) = 1$. We will not prove this here. A proof can be found in the number theory section.

For the right implication, assume that $\langle k \rangle = \mathbb{Z}_n$. Then for all $m \in \mathbb{Z}_n$, $m \equiv xk \pmod{n}$ for some integer $x$. In particular, there exists an integer $x$ such that $xk \equiv 1 \pmod{n}$. This implies that there exists another integer $y$ such that $xk + yn = 1$. By the above-mentioned theorem from number theory, we then have $\gcd(k, n) = 1$. For the left implication, assume $\gcd(k, n) = 1$. Then there exist integers $x, y$ such that $xk + yn = 1$, implying that $xk \equiv 1 \pmod{n}$, that is, $1 \in \langle k \rangle$. Since $1$ generates $\mathbb{Z}_n$, it must be true that $k$ is also a generator, proving the theorem. ∎
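Theorem 5 can be compared against a direct search. The sketch below is illustrative. It computes $\langle k \rangle$ in $\mathbb{Z}_n$ as the set of multiples of $k$ reduced mod $n$ and keeps the $k$ for which this set is all of $\mathbb{Z}_n$. The function names are our own.

```python
from math import gcd


def generated_by(k, n):
    """The cyclic subgroup <k> of Z_n: all multiples of k reduced mod n."""
    return {(j * k) % n for j in range(n)}


def generators(n):
    """Elements k of Z_n with <k> = Z_n, found by brute force."""
    return [k for k in range(n) if len(generated_by(k, n)) == n]


print(generators(12))                                  # [1, 5, 7, 11]
print([k for k in range(12) if gcd(k, 12) == 1])       # [1, 5, 7, 11]
```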

We can generalize Theorem 5 a bit by looking at the orders of the elements in cyclic groups.

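Theorem 6: Let $k \in \mathbb{Z}_n$. Then $|k| = n / \gcd(k, n)$.

Proof: Recall that the order of $k$ is defined as the lowest positive integer $m$ such that $mk = 0$ in $\mathbb{Z}_n$, that is, such that $n$ divides $mk$. If $k = 0$, then $|k| = 1 = n/\gcd(0, n)$. Otherwise, $mk$ is then the smallest positive integer that is a multiple of both $k$ and $n$. This is the definition of the least common multiple; $mk = \operatorname{lcm}(k, n)$. Recall from number theory that $\operatorname{lcm}(k, n) = kn/\gcd(k, n)$. Thus, $m = n/\gcd(k, n)$, as was to be proven. ∎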
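Theorem 6 generalizes Theorem 5, and the formula can be checked against a direct search for the order. In this sketch, `additive_order` finds the smallest $m \ge 1$ with $mk \equiv 0 \pmod n$, and `predicted_order` evaluates the theorem's formula. Both names are hypothetical.

```python
from math import gcd


def additive_order(k, n):
    """Smallest m >= 1 with m * k = 0 in Z_n, by direct search."""
    m = 1
    while (m * k) % n != 0:
        m += 1
    return m


def predicted_order(k, n):
    """The formula of Theorem 6."""
    return n // gcd(k, n)


print(additive_order(8, 12), predicted_order(8, 12))   # 3 3
```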

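Theorem 7: Every subgroup of $\mathbb{Z}$ is of the form $n\mathbb{Z} = \{nk : k \in \mathbb{Z}\}$ for some integer $n$.

Proof: The fact that any subgroup of $\mathbb{Z}$ is cyclic follows from Theorem 3. Therefore, let $n$ generate the subgroup $H \le \mathbb{Z}$. Then we see immediately that $H = \langle n \rangle = n\mathbb{Z}$. ∎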

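Theorem 8: Let $m, n \in \mathbb{Z}$ be fixed, not both zero, and let $H = \{am + bn : a, b \in \mathbb{Z}\}$. Then $H$ is a subgroup of $\mathbb{Z}$ generated by $\gcd(m, n)$.

Proof: We must first show that $H$ is a subgroup. This is immediate since $(am + bn) - (a'm + b'n) = (a - a')m + (b - b')n \in H$. From the proof of Theorem 3, we see that any nontrivial subgroup of $\mathbb{Z}$ is generated by its lowest positive element. It is a theorem of number theory that the lowest positive integer of the form $am + bn$, for fixed integers $m$ and $n$, equals the greatest common divisor of $m$ and $n$, $\gcd(m, n)$. Thus $\gcd(m, n)$ generates $H$. ∎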

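Theorem 9: Let $m\mathbb{Z}$ and $n\mathbb{Z}$ be subgroups of $\mathbb{Z}$ with $m, n \neq 0$. Then $m\mathbb{Z} \cap n\mathbb{Z}$ is the subgroup generated by $\operatorname{lcm}(m, n)$.

Proof: The fact that $m\mathbb{Z} \cap n\mathbb{Z}$ is a subgroup is obvious since $m\mathbb{Z}$ and $n\mathbb{Z}$ are subgroups and the intersection of two subgroups is a subgroup. To find a generator of $m\mathbb{Z} \cap n\mathbb{Z}$, we must find its lowest positive element, that is, the lowest positive integer that is both a multiple of $m$ and of $n$. This is the definition of the least common multiple of $m$ and $n$, $\operatorname{lcm}(m, n)$, and the result follows. ∎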
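Theorems 8 and 9 both identify a subgroup through its lowest positive element. This sketch locates those elements by search for small positive $m$ and $n$. The coefficient window `bound=20` is our own assumption, chosen to be large enough for inputs of this size. The function names are hypothetical.

```python
from math import gcd


def smallest_positive_combination(m, n, bound=20):
    """Least positive a*m + b*n with |a|, |b| <= bound (the window is an assumption)."""
    values = (a * m + b * n
              for a in range(-bound, bound + 1)
              for b in range(-bound, bound + 1))
    return min(v for v in values if v > 0)


def smallest_positive_common_multiple(m, n):
    """Least positive element of mZ ∩ nZ, for positive m and n."""
    k = 1
    while k % m or k % n:
        k += 1
    return k


print(smallest_positive_combination(12, 18), gcd(12, 18))                # 6 6
print(smallest_positive_common_multiple(12, 18), 12 * 18 // gcd(12, 18))  # 36 36
```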

It should be obvious by now that $C_n$ and $\mathbb{Z}_n$, and $C_\infty$ and $\mathbb{Z}$, are the same groups. This will be made precise in a later section but can be visualized by denoting any generator of $C_n$ or $C_\infty$ by $1$.

We will have more to say about cyclic groups later, when we have more tools at our disposal.

Group Theory/Permutation groups

Permutation Groups

For any finite nonempty set $S$, the set $A(S)$ of all one-to-one mappings of $S$ onto itself forms a group, called the permutation group of $S$, and any element of $A(S)$, i.e., a bijection from $S$ onto itself, is called a permutation.

Symmetric groups

Theorem 1: Let $S$ be any set. Then, the set of bijections from $S$ to itself, $\operatorname{Sym}(S)$, forms a group under composition of functions.

Proof: We have to verify the group axioms. The composition of two bijections is again a bijection, so composition is a binary operation on $\operatorname{Sym}(S)$. Associativity is fulfilled since composition of functions is always associative: $(f \circ g) \circ h = f \circ (g \circ h)$ where the composition is defined. The identity element is the identity function $\mathrm{id}_S$ given by $\mathrm{id}_S(s) = s$ for all $s \in S$. Finally, the inverse of a function $f$ is the function $f^{-1}$ taking $f(s)$ to $s$ for all $s \in S$. This function exists and is unique since $f$ is a bijection. Thus $\operatorname{Sym}(S)$ is a group, as stated. ∎

$\operatorname{Sym}(S)$ is called the symmetric group on $S$. When $S = \{1, 2, \ldots, n\}$, we write its symmetric group as $S_n$, and we call this group the symmetric group on $n$ letters. It is also called the group of permutations on $n$ letters. As we will see shortly, this is an appropriate name.

Instead of $\mathrm{id}_S$, we will use a different symbol, namely $e$, for the identity function in $S_n$.

When $\sigma \in S_n$, we can specify $\sigma$ by specifying where it sends each element. There are many ways to encode this information mathematically. One obvious way is to identify $\sigma$ with the unique $n \times n$ matrix with value $1$ in the entries $(\sigma(i), i)$ and $0$ elsewhere. Composition of functions then corresponds to multiplication of matrices. Indeed, for $\tau \in S_n$, the matrix corresponding to $\tau$ has value $1$ in the entries $(\tau(j), j)$, which are the same as the entries $(\tau(\sigma(i)), \sigma(i))$, so the product of the matrix of $\tau$ with the matrix of $\sigma$ has value $1$ in the entries $(\tau(\sigma(i)), i)$. This is exactly the matrix of $\tau \circ \sigma$. This notation may seem cumbersome. Luckily, there exists a more convenient notation, which we will make use of.

We can represent any $\sigma \in S_n$ by a $2 \times n$ matrix $\begin{pmatrix} 1 & 2 & \cdots & n \\ \sigma(1) & \sigma(2) & \cdots & \sigma(n) \end{pmatrix}$. We obviously lose the correspondence between function composition and matrix multiplication, but we gain a more readable notation. For the time being, we will use this.

Remark 2: Let $\sigma, \tau \in S_n$. Then the product $\sigma\tau$ is the function obtained by first acting with $\tau$, and then by $\sigma$. That is, $(\sigma\tau)(i) = \sigma(\tau(i))$. This point is important to keep in mind when computing products in $S_n$. Some textbooks try to remedy the frequent confusion by writing functions like $(i)\sigma$, that is, writing arguments on the left of functions. We will not do this, as it is not standard. The reader should use the next example and theorem to get a feeling for products in $S_n$.

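Example 3: We will show the multiplication table for $S_3$. We introduce the special notation for $S_3$: $\rho_0 = e$, $\rho_1 = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 3 & 1 \end{pmatrix}$, $\rho_2 = \begin{pmatrix} 1 & 2 & 3 \\ 3 & 1 & 2 \end{pmatrix}$, $\mu_1 = \begin{pmatrix} 1 & 2 & 3 \\ 1 & 3 & 2 \end{pmatrix}$, $\mu_2 = \begin{pmatrix} 1 & 2 & 3 \\ 3 & 2 & 1 \end{pmatrix}$, and $\mu_3 = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 1 & 3 \end{pmatrix}$. The multiplication table for $S_3$ is then (the entry in the $\sigma$-row and $\tau$-column is $\sigma\tau$):

| · | $\rho_0$ | $\rho_1$ | $\rho_2$ | $\mu_1$ | $\mu_2$ | $\mu_3$ |
|---|---|---|---|---|---|---|
| $\rho_0$ | $\rho_0$ | $\rho_1$ | $\rho_2$ | $\mu_1$ | $\mu_2$ | $\mu_3$ |
| $\rho_1$ | $\rho_1$ | $\rho_2$ | $\rho_0$ | $\mu_3$ | $\mu_1$ | $\mu_2$ |
| $\rho_2$ | $\rho_2$ | $\rho_0$ | $\rho_1$ | $\mu_2$ | $\mu_3$ | $\mu_1$ |
| $\mu_1$ | $\mu_1$ | $\mu_2$ | $\mu_3$ | $\rho_0$ | $\rho_1$ | $\rho_2$ |
| $\mu_2$ | $\mu_2$ | $\mu_3$ | $\mu_1$ | $\rho_2$ | $\rho_0$ | $\rho_1$ |
| $\mu_3$ | $\mu_3$ | $\mu_1$ | $\mu_2$ | $\rho_1$ | $\rho_2$ | $\rho_0$ |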

Theorem 4: $S_n$ has order $n!$.

Proof: This follows from a counting argument. We can specify a unique element in $S_n$ by specifying where each $i \in \{1, \ldots, n\}$ is sent. Also, any permutation can be specified this way. Let $\sigma \in S_n$. In choosing $\sigma(1)$ we are completely free and have $n$ choices. Then, when choosing $\sigma(2)$ we must choose from $\{1, \ldots, n\} \setminus \{\sigma(1)\}$, giving a total of $n(n-1)$ choices. Continuing in this fashion, we see that for $\sigma(k)$ we must choose from $\{1, \ldots, n\} \setminus \{\sigma(1), \ldots, \sigma(k-1)\}$, giving a total of $n(n-1)\cdots(n-k+1)$ choices. The total number of ways in which we can specify an element, and thus the number of elements in $S_n$, is then $n(n-1)\cdots 1 = n!$, as was to be shown. ∎
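The counting argument is also a construction. The sketch below builds every permutation by choosing $\sigma(0), \sigma(1), \ldots$ in turn from the values not yet used, so its output can be counted and compared with $n!$. We use $\{0, \ldots, n-1\}$ rather than $\{1, \ldots, n\}$, and `build_permutations` is a hypothetical name.

```python
from itertools import permutations
from math import factorial


def build_permutations(n):
    """Build S_n as in the proof: pick sigma(0), then sigma(1) from what remains, and so on."""
    results = []

    def extend(prefix):
        if len(prefix) == n:
            results.append(tuple(prefix))
            return
        for choice in range(n):
            if choice not in prefix:          # sigma(k) must avoid sigma(0), ..., sigma(k-1)
                extend(prefix + [choice])

    extend([])
    return results


print([len(build_permutations(n)) for n in range(1, 6)])    # [1, 2, 6, 24, 120]
print([factorial(n) for n in range(1, 6)])                  # [1, 2, 6, 24, 120]
print(set(build_permutations(4)) == set(permutations(range(4))))  # True
```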

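Theorem 5: $S_n$ is non-abelian for all $n \ge 3$.

Proof: Let $\sigma$ be the function only interchanging 1 and 2, and $\tau$ be the function only interchanging 2 and 3. Then $(\sigma\tau)(1) = \sigma(\tau(1)) = \sigma(1) = 2$ and $(\tau\sigma)(1) = \tau(\sigma(1)) = \tau(2) = 3$. Since $\sigma\tau \neq \tau\sigma$, $S_n$ is not abelian. ∎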

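Definition 6: Let $\sigma \in S_n$, and suppose there exist an element $a \in \{1, \ldots, n\}$ and a positive integer $k$ such that $\sigma^k(a) = a$ and $\sigma$ fixes every element outside $\{a, \sigma(a), \ldots, \sigma^{k-1}(a)\}$. Then $\sigma$ is called a $k$-cycle, where $k$ is the smallest positive such integer. Let $M_\sigma$ be the set of integers $i$ such that $\sigma(i) \neq i$. Two cycles $\sigma, \tau$ are called disjoint if $M_\sigma \cap M_\tau = \emptyset$. Also, a 2-cycle is called a transposition.

Remark 3: It's important to realize that if $i \in M_\sigma$, then so is $\sigma(i)$. Indeed, if $\sigma(i) \neq i$ but $\sigma(\sigma(i)) = \sigma(i)$, then $\sigma$ maps both $i$ and $\sigma(i)$ to $\sigma(i)$, so $\sigma$ is not 1–1.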

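Theorem 7: Let $\sigma, \tau \in S_n$. If $M_\sigma \cap M_\tau = \emptyset$, then $\sigma\tau = \tau\sigma$.

Proof: For any integer $i$ such that $i \in M_\sigma$ but $i \notin M_\tau$, we have $\sigma\tau(i) = \sigma(i) = \tau\sigma(i)$, since $\sigma(i) \in M_\sigma$ by Remark 3 and hence $\sigma(i) \notin M_\tau$. A similar argument holds for $i \in M_\tau$ but $i \notin M_\sigma$. If $i \notin M_\sigma \cup M_\tau$, we must have $\sigma\tau(i) = i = \tau\sigma(i)$. Since $M_\sigma \cap M_\tau = \emptyset$, we have now exhausted every $i$, and we are done. ∎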

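Theorem 8: Any permutation can be represented as a composition of disjoint cycles.

Proof: Let $\sigma \in S_n$. Choose an element $a \in M_\sigma$ and compute $a, \sigma(a), \sigma^2(a), \ldots$. Since $\{1, \ldots, n\}$ is finite of order $n$ and $\sigma$ is injective, we know that the smallest positive $k$ with $\sigma^k(a) = a$ exists and $k \le n$. We have now found a $k$-cycle $\gamma$, including $a$, that agrees with $\sigma$ on $a, \sigma(a), \ldots, \sigma^{k-1}(a)$. Since $\sigma$ maps the remaining elements among themselves, this cycle may be factored out from $\sigma$ to obtain $\sigma = \gamma\sigma'$, where $\sigma'$ fixes every element of $M_\gamma$. Repeat this process with $\sigma'$. The process terminates since $\{1, \ldots, n\}$ is finite, and we have constructed a composition of disjoint cycles that equals $\sigma$. ∎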
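The procedure in this proof is an algorithm: start at an element that is moved, follow it around until it returns, record the cycle, and repeat with an element not yet visited. The Python sketch below follows that procedure on tuples over $\{0, \ldots, n-1\}$ (our encoding) and omits fixed points. `from_cycles` rebuilds the permutation so that the decomposition can be checked. Both names are hypothetical.

```python
from itertools import permutations


def cycle_decomposition(sigma):
    """Disjoint cycles of sigma (a tuple on {0, ..., n-1}); fixed points are omitted."""
    seen, cycles = set(), []
    for start in range(len(sigma)):
        if start in seen or sigma[start] == start:
            continue
        cycle, x = [], start
        while x not in seen:          # follow start, sigma(start), sigma^2(start), ...
            seen.add(x)
            cycle.append(x)
            x = sigma[x]
        cycles.append(tuple(cycle))
    return cycles


def from_cycles(cycles, n):
    """Rebuild the permutation of {0, ..., n-1} from a list of disjoint cycles."""
    sigma = list(range(n))
    for cycle in cycles:
        for i, x in enumerate(cycle):
            sigma[x] = cycle[(i + 1) % len(cycle)]
    return tuple(sigma)


print(cycle_decomposition((1, 2, 0, 4, 3, 5)))   # [(0, 1, 2), (3, 4)]
print(all(from_cycles(cycle_decomposition(s), 4) == s for s in permutations(range(4))))  # True
```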

Now that we have shown that all permutations are just compositions of disjoint cycles, we can introduce the ultimate shorthand notation for permutations. For a $k$-cycle $\sigma$, we can show its action by choosing any element $a \in M_\sigma$ and writing $\sigma = (a\ \sigma(a)\ \sigma^2(a)\ \cdots\ \sigma^{k-1}(a))$.

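Theorem 9: Any $k$-cycle can be represented as a composition of transpositions.

Proof: Let $\sigma = (a_1\ a_2\ \cdots\ a_k)$. Then, $\sigma = (a_1\ a_k)(a_1\ a_2\ \cdots\ a_{k-1})$ (check this!), omitting the composition sign $\circ$. Iterate this process to obtain $\sigma = (a_1\ a_k)(a_1\ a_{k-1})\cdots(a_1\ a_2)$. ∎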
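The identity $(a_1\ \cdots\ a_k) = (a_1\ a_k)(a_1\ a_{k-1})\cdots(a_1\ a_2)$ can be checked mechanically, which also answers the "check this!" above for particular cycles. The sketch multiplies the factors as written, so the rightmost factor acts first, in line with Remark 2. Elements are $0, \ldots, n-1$, and the helper names are our own.

```python
def compose(s, t):
    """Product st: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


def cycle_perm(seq, n):
    """The cycle (seq[0] seq[1] ... seq[-1]) as a tuple on {0, ..., n-1}."""
    sigma = list(range(n))
    for i, x in enumerate(seq):
        sigma[x] = seq[(i + 1) % len(seq)]
    return tuple(sigma)


def as_transpositions(seq):
    """(a1 a2 ... ak) = (a1 ak)(a1 a(k-1)) ... (a1 a2), listed left to right."""
    a1 = seq[0]
    return [(a1, x) for x in reversed(seq[1:])]


def product_of(transpositions, n):
    """Multiply the factors as written; the rightmost one acts first."""
    result = tuple(range(n))
    for a, b in transpositions:
        result = compose(result, cycle_perm((a, b), n))
    return result


factors = as_transpositions((0, 1, 2, 3))
print(factors)                                                # [(0, 3), (0, 2), (0, 1)]
print(product_of(factors, 4) == cycle_perm((0, 1, 2, 3), 4))  # True
```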

Note 10: This way of representing as a product of transpositions is not unique. However, as we will see now, the "parity" of such a representation is well defined.

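Definition 11: The parity of a permutation is even if it can be expressed as a product of an even number of transpositions. Otherwise, it is odd. We define the function $\operatorname{sgn}: S_n \to \{1, -1\}$ by $\operatorname{sgn}(\sigma) = 1$ if $\sigma$ is even and $\operatorname{sgn}(\sigma) = -1$ if $\sigma$ is odd.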

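Lemma 12: The identity has even parity.

Proof: Observe first that $e \neq (a\ b)$ for $a \neq b$, so $e$ is not a single transposition. The shortest nonempty representation of $e$ therefore uses two transpositions: $e = (a\ b)(a\ b)$. Now, assume that any representation of $e$ using fewer than $k$ transpositions uses an even number of them, and let $e = \tau_1\tau_2\cdots\tau_k$. Let $a$ be an element moved by $\tau_1$. Since in particular $e(a) = a$, we must have that $\tau_j$ moves $a$ for some $j > 1$. Disjoint transpositions commute, and $(x\ y)(a\ y) = (a\ x)(x\ y)$ for $x, y \neq a$. Hence it is always possible to configure the transpositions, without changing their number, such that the first two transpositions are either $(a\ b)(a\ b) = e$, reducing the number of transpositions by two, or $(a\ b)(a\ c)$ with $b \neq c$. In the latter case we rewrite $(a\ b)(a\ c) = (a\ c)(b\ c)$, which reduces the number of transpositions involving $a$ by 1. We then restart the same process with the new representation. The number of transpositions moving $a$ is finite, and it can never drop to exactly one, since the product would then move $a$. So we will eventually be able to cancel two transpositions and be left with $k - 2$ transpositions in the product. Then, by the induction hypothesis, $k - 2$ must be even and so $k$ is even as well, proving the lemma. ∎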

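Theorem 13: The parity of a permutation, and thus the $\operatorname{sgn}$ function, is well-defined.

Proof: Let $\sigma \in S_n$ and write $\sigma$ as a product of transpositions in two different ways: $\sigma = \tau_1 \cdots \tau_r = \tau'_1 \cdots \tau'_s$. Then, since each transposition is its own inverse, $e = \sigma\sigma^{-1} = \tau_1 \cdots \tau_r \tau'_s \cdots \tau'_1$. Since $e$ has even parity by Lemma 12, $r + s$ is even. Thus $r$ and $s$ are both even or both odd, so $\sigma$ has a uniquely defined parity, and consequently $\operatorname{sgn}$ is well-defined. ∎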

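Theorem 14: Let $\sigma, \tau \in S_n$. Then, $\operatorname{sgn}(\sigma\tau) = \operatorname{sgn}(\sigma)\operatorname{sgn}(\tau)$.

Proof: Decompose $\sigma$ and $\tau$ into transpositions: $\sigma = \alpha_1 \cdots \alpha_r$, $\tau = \beta_1 \cdots \beta_s$. Then $\sigma\tau = \alpha_1 \cdots \alpha_r \beta_1 \cdots \beta_s$ has parity given by $r + s$. If $r$ and $s$ are both even or both odd, $\sigma\tau$ is even and indeed $\operatorname{sgn}(\sigma\tau) = 1 = \operatorname{sgn}(\sigma)\operatorname{sgn}(\tau)$. If one is odd and one is even, $\sigma\tau$ is odd and again $\operatorname{sgn}(\sigma\tau) = -1 = \operatorname{sgn}(\sigma)\operatorname{sgn}(\tau)$, proving the theorem. ∎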
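Theorems 9 and 13 give a practical way to compute $\operatorname{sgn}$. By Theorem 9, a $k$-cycle is a product of $k - 1$ transpositions, so the sign follows from the cycle lengths. The sketch uses this method (our choice of method, with hypothetical helper names) and checks Theorem 14 exhaustively on $S_4$.

```python
from itertools import permutations


def compose(s, t):
    """Product st: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


def sign(sigma):
    """sgn(sigma): each k-cycle contributes k - 1 transpositions (Theorem 9)."""
    seen, transpositions = set(), 0
    for start in range(len(sigma)):
        length, x = 0, start
        while x not in seen:          # walk the cycle through start, if not yet visited
            seen.add(x)
            x = sigma[x]
            length += 1
        if length:
            transpositions += length - 1
    return 1 if transpositions % 2 == 0 else -1


S4 = list(permutations(range(4)))
print(all(sign(compose(s, t)) == sign(s) * sign(t) for s in S4 for t in S4))  # True
print(sum(1 for s in S4 if sign(s) == 1))                                     # 12
```

The second line also illustrates Lemma 15 below: exactly half of the 24 permutations are even.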

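Lemma 15: For $n \ge 2$, the number of even permutations in $S_n$ equals the number of odd permutations.

Proof: Let $\sigma$ be any even permutation and $\tau$ a transposition. Then $\tau\sigma$ has odd parity by Theorem 14. Let $A$ be the set of even permutations and $B$ the set of odd permutations. Then the function $f: A \to B$ given by $f(\sigma) = \tau\sigma$, for any $\sigma \in A$ and a fixed transposition $\tau$, is a bijection: the map $B \to A$ given by $\sigma \mapsto \tau\sigma$ is its inverse, since $\tau\tau = e$. Thus $A$ and $B$ have the same number of elements, as stated. ∎

Definition 16: Let the set of all even permutations in $S_n$ be denoted by $A_n$. $A_n$ is called the alternating group on $n$ letters.

Theorem 17: $A_n$ is a group, and is a subgroup of $S_n$; for $n \ge 2$ it has order $n!/2$.

Proof: We first show that $A_n$ is a group under composition. Then it is automatically a subgroup of $S_n$. That $A_n$ is closed under composition follows from Theorem 14, and associativity is inherited from $S_n$. Also, the identity permutation is even, so $e \in A_n$. If $\sigma \in A_n$, then $\operatorname{sgn}(\sigma^{-1}) = \operatorname{sgn}(\sigma)\operatorname{sgn}(\sigma^{-1}) = \operatorname{sgn}(e) = 1$ by Theorem 14, so $\sigma^{-1} \in A_n$. Thus $A_n$ is a group and a subgroup of $S_n$. Since the numbers of even and odd permutations are equal by Lemma 15, we then have that $|A_n| = |S_n|/2 = n!/2$, proving the theorem. ∎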

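Theorem 18: Let $n \ge 3$. Then $A_n$ is generated by the 3-cycles in $S_n$.

Proof: Each 3-cycle is even, since $(a\ b\ c) = (a\ c)(a\ b)$ by Theorem 9, so the 3-cycles lie in $A_n$. We must show that any even permutation can be decomposed into 3-cycles. Since an even permutation is a product of an even number of transpositions, it is sufficient to show that this is the case for products of two transpositions. Let $a, b, c, d$ be distinct. Then, by some casework,

- i) $(a\ b)(a\ b) = e$,

- ii) $(a\ b)(a\ c) = (a\ c\ b)$, and

- iii) $(a\ b)(c\ d) = (a\ b\ c)(b\ c\ d)$,

proving the theorem. ∎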
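The casework and the conclusion of Theorem 18 can both be checked by machine for small $n$. In the sketch, `generated_subgroup` closes a set of generators under composition, which in a finite group yields the generated subgroup. `is_even` counts inversions. This parity test is a standard equivalent criterion, not the definition used in the text. All helper names are our own, and elements are $0, \ldots, n-1$.

```python
from itertools import permutations


def compose(s, t):
    """Product st: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


def cycle_perm(seq, n):
    """The cycle (seq[0] ... seq[-1]) as a tuple on {0, ..., n-1}."""
    sigma = list(range(n))
    for i, x in enumerate(seq):
        sigma[x] = seq[(i + 1) % len(seq)]
    return tuple(sigma)


def is_even(sigma):
    """Parity via the number of inversions (a standard equivalent criterion)."""
    n = len(sigma)
    return sum(sigma[i] > sigma[j] for i in range(n) for j in range(i + 1, n)) % 2 == 0


def generated_subgroup(gens, n):
    """Closure of gens under composition; in a finite group this is <gens>."""
    identity = tuple(range(n))
    elements, frontier = {identity}, [identity]
    while frontier:
        x = frontier.pop()
        for g in gens:
            y = compose(x, g)
            if y not in elements:
                elements.add(y)
                frontier.append(y)
    return elements


def three_cycles(n):
    """The permutations moving exactly three points are exactly the 3-cycles."""
    return [p for p in permutations(range(n)) if sum(p[i] != i for i in range(n)) == 3]


# Cases ii) and iii) with a, b, c, d = 0, 1, 2, 3:
print(compose(cycle_perm((0, 1), 4), cycle_perm((0, 2), 4)) == cycle_perm((0, 2, 1), 4))  # True
print(compose(cycle_perm((0, 1), 4), cycle_perm((2, 3), 4))
      == compose(cycle_perm((0, 1, 2), 4), cycle_perm((1, 2, 3), 4)))                     # True
A4 = {p for p in permutations(range(4)) if is_even(p)}
print(generated_subgroup(three_cycles(4), 4) == A4)                                       # True
```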

In a previous section we proved Lagrange's Theorem: The order of any subgroup divides the order of the parent group. However, the converse statement, that a group has a subgroup for every divisor of its order, is false! The smallest group providing a counterexample is the alternating group $A_4$, which has order 12 but no subgroup of order 6. It has subgroups of orders 3 and 4, corresponding respectively to the cyclic group of order 3 and the Klein 4-group $V$. However, if we add any other element of $A_4$ to the subgroup corresponding to $V$, it generates the whole group $A_4$. We leave it to the reader to show this.
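Since $A_4$ has only 12 elements, its subgroups can simply be enumerated by brute force. The sketch checks every subset that contains the identity and keeps the subsets closed under composition. A finite nonempty subset closed under the operation is a subgroup. The encoding and helper names are our own.

```python
from itertools import combinations, permutations


def compose(s, t):
    """Product st: apply t first, then s."""
    return tuple(s[t[i]] for i in range(len(t)))


def is_even(sigma):
    """Parity via the number of inversions (a standard equivalent criterion)."""
    n = len(sigma)
    return sum(sigma[i] > sigma[j] for i in range(n) for j in range(i + 1, n)) % 2 == 0


A4 = [p for p in permutations(range(4)) if is_even(p)]
IDENTITY = (0, 1, 2, 3)


def is_closed(subset):
    """A finite nonempty subset closed under composition is a subgroup."""
    s = set(subset)
    return all(compose(a, b) in s for a in s for b in s)


def subgroup_orders(group):
    """Orders of all subgroups, by brute force over subsets containing the identity."""
    others = [g for g in group if g != IDENTITY]
    orders = set()
    for r in range(len(others) + 1):
        for combo in combinations(others, r):
            if is_closed(combo + (IDENTITY,)):
                orders.add(r + 1)
    return orders


print(sorted(subgroup_orders(A4)))   # [1, 2, 3, 4, 12]
```

The divisor 6 of 12 is missing from the list, confirming the counterexample.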

Dihedral Groups


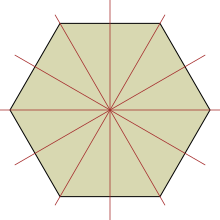

The dihedral groups are the symmetry groups of regular polygons. Since each symmetry permutes the vertices of the polygon, the dihedral group of a regular $n$-gon can be viewed as a subgroup of the symmetric group $S_n$. In general, a regular $n$-gon has $n$ rotational symmetries (including the identity) and $n$ reflection symmetries, and the dihedral group consists of exactly these rotations and reflections.

Definition 19: The dihedral group of order $2n$ ($n\geq 3$), denoted $D_{2n}$, is the group of rotations and reflections of a regular $n$-gon.

Theorem 20: The order of $D_{2n}$ is precisely $2n$.

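Proof: Let $r$ be a rotation that generates a subgroup of order $n$ in $D_{2n}$, for instance the rotation by $2\pi/n$. Then $\langle r\rangle = \{e, r, \dots, r^{n-1}\}$ captures all the pure rotations of a regular $n$-gon. Now let $s$ be any reflection in $D_{2n}$. The rest of the elements can then be found by composing each element in $\langle r\rangle$ with $s$. We get a list of $2n$ elements $e, r, \dots, r^{n-1}, s, rs, \dots, r^{n-1}s$. These are distinct, since each $r^k$ preserves the orientation of the polygon while each $r^ks$ reverses it, and there are no others, since a symmetry is determined by the image of one vertex together with whether it preserves or reverses orientation. Thus, the order of $D_{2n}$ is $2n$, justifying its notation and proving the theorem. ∎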

Remark 21: From this proof we can also see that $\{r, s\}$ is a generating set for $D_{2n}$, and all elements can be obtained by writing arbitrary products of $r$ and $s$ and simplifying the expression according to the rules $r^n = e$, $s^2 = e$ and $srs = r^{-1}$. Indeed, as can be seen from the figure, a rotation composed with a reflection is a new reflection.
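To see Theorem 20 and the relations among $r$ and $s$ concretely, the sketch below labels the vertices of a regular $n$-gon $0,\dots,n-1$ and realises $r$ as $i\mapsto i+1 \bmod n$ and $s$ as $i\mapsto -i \bmod n$, a reflection fixing vertex 0. These particular choices, like the helper names, are assumptions of the sketch. It lists $e, r, \dots, r^{n-1}, s, rs, \dots, r^{n-1}s$ as in the proof and checks, for $n = 6$, that these $2n$ permutations are distinct, closed under composition, and satisfy $s^2 = e$ and $srs = r^{-1}$.

```python
def compose(p, q):
    """p∘q: apply q first, then p (permutations of vertex labels 0..n-1)."""
    return tuple(p[q[i]] for i in range(len(q)))


def dihedral(n):
    """Return r, s and the list e, r, ..., r^(n-1), s, rs, ..., r^(n-1)s."""
    r = tuple((i + 1) % n for i in range(n))  # rotation taking vertex i to i+1
    s = tuple((-i) % n for i in range(n))  # reflection fixing vertex 0
    rotations = [tuple(range(n))]
    for _ in range(n - 1):
        rotations.append(compose(r, rotations[-1]))  # r^(k+1) = r ∘ r^k
    return r, s, rotations + [compose(rk, s) for rk in rotations]


n = 6
r, s, D = dihedral(n)
e = tuple(range(n))
assert len(set(D)) == 2 * n  # 2n distinct symmetries
assert all(compose(a, b) in D for a in D for b in D)  # closed under composition
assert compose(s, s) == e  # s^2 = e
assert compose(compose(s, r), s) == D[n - 1]  # s r s = r^(n-1) = r^(-1)
```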

Group Theory/Homomorphism

We are finally making our way into the meat of the theory. In this section we will study structure-preserving maps between groups. This study will open new doors and provide us with a multitude of new theorems.

Up until now we have studied groups at the "element level". Since we are now about to take a step back and study groups at the "homomorphism level", readers should expect a sudden increase in abstraction starting from this section. We will try to ease the reader into this increase by keeping one foot at the "element level" throughout this section.

From here on out the notation $e_G$ will denote the identity element in the group $G$ unless otherwise specified.

Group homomorphisms

Definition 1: Let $G$ and $H$ be groups. A homomorphism from $G$ to $H$ is a function $\varphi: G\to H$ such that for all $g_1, g_2\in G$,

- $\varphi(g_1\cdot_G g_2) = \varphi(g_1)\cdot_H\varphi(g_2)$.

Thus, a homomorphism preserves the group structure. We have included the multiplication symbols here to make explicit that multiplication on the left side occurs in $G$, and multiplication on the right side occurs in $H$.
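For small finite groups the defining identity can be checked exhaustively. In the sketch below a group is given by a collection of elements and a binary operation; the helper names and the two examples, reduction $\mathbb{Z}_6\to\mathbb{Z}_3$ and $\mathbb{Z}_6\to\mathbb{Z}_4$, are our own illustrations. The first map is a homomorphism because 3 divides 6; the second fails, for instance at $3 + 3$, where $\varphi(0) = 0$ but $\varphi(3) + \varphi(3) = 2$ in $\mathbb{Z}_4$.

```python
def is_homomorphism(G, op_G, op_H, phi):
    """True if phi(a *_G b) == phi(a) *_H phi(b) for all a, b in the finite group G."""
    return all(phi(op_G(a, b)) == op_H(phi(a), phi(b)) for a in G for b in G)


def add_mod(n):
    """The group operation of Z_n: addition modulo n."""
    return lambda a, b: (a + b) % n


Z6 = range(6)
print(is_homomorphism(Z6, add_mod(6), add_mod(3), lambda x: x % 3))  # True
print(is_homomorphism(Z6, add_mod(6), add_mod(4), lambda x: x % 4))  # False
```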

Already we see that this section is different from the previous ones. Up until now we have, excluding subgroups, only dealt with one group at a time. No more! Let us start by deriving some elementary and immediate consequences of the definition.

Theorem 2: Let $G, H$ be groups and $\varphi: G\to H$ a homomorphism. Then $\varphi(e_G) = e_H$. In other words, the identity is mapped to the identity.

Proof: Let . Then, , implying that is the identity in , proving the theorem. ∎

Theorem 3: Let $G, H$ be groups and $\varphi: G\to H$ a homomorphism. Then for any $g\in G$, $\varphi(g^{-1}) = \varphi(g)^{-1}$. In other words, inverses are mapped to inverses.

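Proof: Let $g\in G$. Then $\varphi(g)\varphi(g^{-1}) = \varphi(gg^{-1}) = \varphi(e_G) = e_H$ by Theorem 2, implying that $\varphi(g^{-1}) = \varphi(g)^{-1}$, as was to be shown. ∎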

Theorem 4: Let $G, H$ be groups, $\varphi: G\to H$ a homomorphism and let $K$ be a subgroup of $G$. Then $\varphi(K)$ is a subgroup of $H$.

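Proof: $\varphi(K)$ is nonempty since it contains $\varphi(e_G) = e_H$. Let $h_1, h_2\in\varphi(K)$. Then $h_1 = \varphi(k_1)$ and $h_2 = \varphi(k_2)$ for some $k_1, k_2\in K$. Since $k_1k_2^{-1}\in K$, we get $h_1h_2^{-1} = \varphi(k_1)\varphi(k_2)^{-1} = \varphi(k_1k_2^{-1})\in\varphi(K)$ by Theorem 3, and so $\varphi(K)$ is a subgroup of $H$. ∎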

Theorem 5: Let $G, H$ be groups, $\varphi: G\to H$ a homomorphism and let $L$ be a subgroup of $H$. Then $\varphi^{-1}(L) = \{g\in G : \varphi(g)\in L\}$ is a subgroup of $G$.

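Proof: $\varphi^{-1}(L)$ is nonempty since $\varphi(e_G) = e_H\in L$. Let $g_1, g_2\in\varphi^{-1}(L)$. Then $\varphi(g_1), \varphi(g_2)\in L$, and since $L$ is a subgroup, $\varphi(g_1)\varphi(g_2)^{-1}\in L$. But then $\varphi(g_1g_2^{-1}) = \varphi(g_1)\varphi(g_2)^{-1}\in L$, so $g_1g_2^{-1}\in\varphi^{-1}(L)$, and so $\varphi^{-1}(L)$ is a subgroup of $G$. ∎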

From Theorem 4 and Theorem 5 we see that homomorphisms preserve subgroups, both under images and under preimages. Thus we can expect to learn a lot about the subgroup structure of a group $G$ by finding suitable homomorphisms into $G$.

In particular, every homomorphism has associated with it two important subgroups, its kernel and its image, which are introduced in Definition 10 below.

Definition 6: A homomorphism $\varphi: G\to H$ is called an isomorphism if it is bijective and its inverse is a homomorphism. Two groups are called isomorphic if there exists an isomorphism between them, and we write $G\cong H$ to denote "$G$ is isomorphic to $H$".

Theorem 7: A bijective homomorphism is an isomorphism.

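Proof: Let $G, H$ be groups and let $\varphi: G\to H$ be a bijective homomorphism. We must show that the inverse $\varphi^{-1}$ is a homomorphism. Let $h_1, h_2\in H$. Then there exist unique $g_1, g_2\in G$ such that $\varphi(g_1) = h_1$ and $\varphi(g_2) = h_2$. Then we have $h_1h_2 = \varphi(g_1)\varphi(g_2) = \varphi(g_1g_2)$ since $\varphi$ is a homomorphism. Now apply $\varphi^{-1}$ to all equations. We obtain $\varphi^{-1}(h_1) = g_1$, $\varphi^{-1}(h_2) = g_2$ and $\varphi^{-1}(h_1h_2) = g_1g_2 = \varphi^{-1}(h_1)\varphi^{-1}(h_2)$, so $\varphi^{-1}$ is a homomorphism and thus $\varphi$ is an isomorphism. ∎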

Definition 8: Let $G, H$ be groups. A homomorphism $G\to H$ that maps every element in $G$ to $e_H$ is called a trivial homomorphism (or zero homomorphism), and is denoted by $0$.

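Definition 9: Let $H$ be a subgroup of a group $G$. Then the homomorphism $\iota: H\to G$ given by $\iota(h) = h$ is called the inclusion of $H$ into $G$. Let $K$ be a group isomorphic to a subgroup $H$ of a group $G$. Then the isomorphism $\psi: K\to H$ induces an injective homomorphism $\iota\circ\psi: K\to G$ given by $k\mapsto\psi(k)$, called an imbedding of $K$ into $G$. Obviously, $(\iota\circ\psi)(K) = H$.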

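Definition 10: Let $G, H$ be groups and $\varphi: G\to H$ a homomorphism. Then we define the following subgroups (they are subgroups by Theorem 5 applied to $\{e_H\}$ and Theorem 4 applied to $G$, respectively):

- i) $\ker\varphi = \{g\in G : \varphi(g) = e_H\}$, called the kernel of $\varphi$, and

- ii) $\operatorname{im}\varphi = \{\varphi(g) : g\in G\}$, called the image of $\varphi$.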
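As an illustration, the sketch below computes the kernel and image of the map $x\mapsto 3x$ on $\mathbb{Z}_{12}$ (our own example, written additively, so the identity is $0$) and checks that both are subgroups. For a finite nonempty subset, closure under the group operation is enough, since every element has finite order.

```python
n = 12


def phi(x):
    """x -> 3x on Z_12; a homomorphism since 3(x + y) = 3x + 3y (mod 12)."""
    return (3 * x) % n


kernel = sorted(x for x in range(n) if phi(x) == 0)
image = sorted({phi(x) for x in range(n)})


def is_subgroup(S):
    """Finite-subset test: nonempty and closed under addition mod n."""
    return bool(S) and all((a + b) % n in S for a in S for b in S)


print(kernel, image)  # [0, 4, 8] [0, 3, 6, 9]
assert is_subgroup(set(kernel)) and is_subgroup(set(image))
```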

Theorem 11: The composition of homomorphisms is a homomorphism.

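Proof: Let $G, H, K$ be groups and $\varphi: G\to H$ and $\psi: H\to K$ homomorphisms. Then $\psi\circ\varphi: G\to K$ is a function. We must show it is a homomorphism. Let $g_1, g_2\in G$. Then $(\psi\circ\varphi)(g_1g_2) = \psi(\varphi(g_1)\varphi(g_2)) = \psi(\varphi(g_1))\psi(\varphi(g_2)) = (\psi\circ\varphi)(g_1)(\psi\circ\varphi)(g_2)$, so $\psi\circ\varphi$ is indeed a homomorphism. ∎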

Theorem 12: Composition of homomorphisms is associative.

Proof: This is evident since homomorphisms are functions, and composition of functions is associative. ∎

Corollary 13: The composition of isomorphisms is an isomorphism.

Proof: This is evident from Theorem 11 and Theorem 7, since the composition of bijections is a bijection. ∎

Theorem 14: Let $G, H$ be groups and $\varphi: G\to H$ a homomorphism. Then $\varphi$ is injective if and only if $\ker\varphi = \{e_G\}$.

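Proof: Assume $\ker\varphi = \{e_G\}$ and $\varphi(g_1) = \varphi(g_2)$. Then $\varphi(g_1g_2^{-1}) = \varphi(g_1)\varphi(g_2)^{-1} = e_H$, implying that $g_1g_2^{-1}\in\ker\varphi$. But by assumption then $g_1g_2^{-1} = e_G$, that is, $g_1 = g_2$, so $\varphi$ is injective. Assume now that $\ker\varphi\neq\{e_G\}$. Since $e_G\in\ker\varphi$ by Theorem 2, there exists another element $g\in\ker\varphi$ such that $g\neq e_G$. But then $\varphi(g) = e_H = \varphi(e_G)$. Since both $g$ and $e_G$ map to $e_H$, $\varphi$ is not injective, proving the theorem. ∎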
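Theorem 14 can be checked exhaustively on a small example. Each map $x\mapsto ax \bmod 12$ is a homomorphism $\mathbb{Z}_{12}\to\mathbb{Z}_{12}$; the sketch below (our own illustration) computes the kernel and tests injectivity for every $a$, and asserts that the two conditions agree.

```python
n = 12
injective_as = []
for a in range(n):
    values = [(a * x) % n for x in range(n)]  # phi_a(x) = a x mod 12
    kernel = {x for x, v in enumerate(values) if v == 0}
    injective = len(set(values)) == n
    assert injective == (kernel == {0})  # Theorem 14
    if injective:
        injective_as.append(a)
print(injective_as)  # [1, 5, 7, 11]
```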

Corollary 15: Inclusions are injective.

Proof: The result is immediate from Theorem 14. Since $\iota(h) = h$ for all $h\in H$, we have $\ker\iota = \{e_H\}$. ∎

The kernel can be seen to satisfy a universal property. The following theorem explains this, but it is unusually abstract for an elementary treatment of groups, and the reader should not worry if he/she cannot understand it immediately.

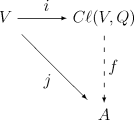

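Theorem 16: Let $G, H$ be groups and $\varphi: G\to H$ a group homomorphism. Also let $K$ be a group and $\psi: K\to G$ a homomorphism such that $\varphi\circ\psi = 0$, and let $\iota: \ker\varphi\to G$ be the inclusion of $\ker\varphi$ into $G$. Then there exists a unique homomorphism $\psi': K\to\ker\varphi$ such that $\iota\circ\psi' = \psi$.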

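Proof: Since $\varphi\circ\psi = 0$, by definition we must have $\psi(K)\subseteq\ker\varphi$, so the map $\psi': K\to\ker\varphi$ given by $\psi'(k) = \psi(k)$ exists, and it is a homomorphism because $\psi$ is. The commutativity $\iota\circ\psi' = \psi$ then forces $\psi'(k) = \psi(k)$ for all $k\in K$, so $\psi'$ is unique. ∎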

Definition 17: A commutative diagram is a pictorial presentation of a network of functions. Commutativity means that whenever several routes of function composition lead from one object to the same destination, the resulting compositions are equal as functions. As an example, the commutative diagram on the right describes the situation in Theorem 16. In the commutative diagrams shown in this chapter on groups (or diagrams for short; we will not show diagrams that do not commute), all functions are implicitly assumed to be group homomorphisms. Monomorphisms in diagrams are often emphasized by hooked arrows, and epimorphisms by double-headed arrows. That an inclusion is a monomorphism will be proven shortly.

Remark 18: From the commutative diagram on the right, the kernel can be defined completely without reference to elements. Indeed, Theorem 16 would become the definition, and our Definition 10 i) would become a theorem. We will not entertain this line of thought in this book, but the advanced reader is welcome to work it out for him/herself.

Automorphism Groups

In this subsection we will take a look at the homomorphisms from a group to itself.

Definition 19: A homomorphism from a group $G$ to itself is called an endomorphism of $G$. An endomorphism which is also an isomorphism is called an automorphism. The set of all endomorphisms of $G$ is denoted $\operatorname{End}(G)$, while the set of all automorphisms of $G$ is denoted $\operatorname{Aut}(G)$.

Theorem 20: $\operatorname{End}(G)$ is a monoid under composition of homomorphisms. Also, $\operatorname{Aut}(G)$ is a submonoid which is also a group.

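Proof: Since composition is associative by Theorem 12, we only have to confirm that $\operatorname{End}(G)$ is closed under composition and has an identity. Closure follows from Theorem 11, and the identity map $\mathrm{id}_G$ is an endomorphism. For $\operatorname{Aut}(G)$, the identity homomorphism is an isomorphism and the composition of isomorphisms is an isomorphism by Corollary 13. Thus $\operatorname{Aut}(G)$ is a submonoid. To show it is a group, note that the inverse of an automorphism is an automorphism by Definition 6, so $\operatorname{Aut}(G)$ is indeed a group. ∎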
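For a cyclic group $\mathbb{Z}_n$, written additively, an endomorphism is determined by the image $a$ of $1$ and is then $x\mapsto ax \bmod n$; composing $x\mapsto ax$ with $x\mapsto bx$ gives $x\mapsto abx$. Using this encoding (an illustrative choice, with $n = 10$), the sketch below picks out the bijective endomorphisms and checks the monoid and group properties of Theorem 20 for them.

```python
n = 10


def compose(a, b):
    """(x -> a x) ∘ (x -> b x) is x -> (a b) x, so composition is multiplication mod n."""
    return (a * b) % n


End = range(n)  # a stands for the endomorphism x -> a x (mod n)
Aut = [a for a in End if len({(a * x) % n for x in range(n)}) == n]  # the bijective ones

assert all(compose(a, b) in End for a in End for b in End)  # End is closed
assert 1 in Aut  # the identity x -> x is an automorphism
assert all(compose(a, b) in Aut for a in Aut for b in Aut)  # Aut is closed
assert all(any(compose(a, b) == 1 for b in Aut) for a in Aut)  # inverses exist in Aut
print(Aut)  # [1, 3, 7, 9]
```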

Groups with Operators

An endomorphism of a group can be thought of as a unary operator on that group. This motivates the following definition:

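Definition 21: Let $G$ be a group and $\Omega\subseteq\operatorname{End}(G)$. Then the pair $(G, \Omega)$ is called a group with operators. $\Omega$ is called the operator domain and its elements are called the homotheties of $G$. For any $\omega\in\Omega$, we introduce the shorthand $g^{\omega} = \omega(g)$ for all $g\in G$. Thus the fact that the homotheties of $G$ are endomorphisms can be expressed as follows: for all $g, h\in G$ and $\omega\in\Omega$, $(gh)^{\omega} = g^{\omega}h^{\omega}$.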

Example 22: For any group $G$, the pair $(G, \operatorname{End}(G))$ is trivially a group with operators.

Lemma 23: Let $(G, \Omega)$ be a group with operators. Then $\Omega$ can be extended to a submonoid $\Omega'$ of $\operatorname{End}(G)$ such that the structure of $(G, \Omega')$ is identical to that of $(G, \Omega)$.

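Proof: Let $\Omega'$ be the set of all finite compositions of elements of $\Omega$, including the empty composition, which is the identity endomorphism. Then $\Omega'$ is closed under composition and contains the identity, so it is a submonoid of $\operatorname{End}(G)$ with generating set $\Omega$. Since any element of $\Omega'$ is expressible as a (possibly empty) composition of elements in $\Omega$, the structures are identical. ∎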

In the following, we assume that the operator domain $\Omega$ is always a monoid. If it is not, we can extend it to one by Lemma 23.

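Definition 24: Let $(G, \Omega)$ and $(H, \Omega)$ be groups with operators with the same operator domain $\Omega$. Then a homomorphism $\varphi: (G, \Omega)\to(H, \Omega)$ is a group homomorphism $\varphi: G\to H$ such that for all $g\in G$ and $\omega\in\Omega$, we have $\varphi(g^{\omega}) = \varphi(g)^{\omega}$.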

Definition 25: Let $(G, \Omega)$ be a group with operators and $H$ a subgroup of $G$. Then $H$ is called a stable subgroup (or an $\Omega$-invariant subgroup) if for all $h\in H$ and $\omega\in\Omega$, $h^{\omega}\in H$. We say that $H$ respects the homotheties of $G$. In this case $(H, \Omega)$ is a sub-group with operators.

Example 26: Let $V$ be a vector space over the field $F$. If we denote by $V^+$ the underlying abelian group under addition, then $(V^+, F)$ is a group with operators, where for any $\lambda\in F$ and $v\in V$, we define $v^{\lambda} = \lambda v$. Then the stable subgroups are precisely the linear subspaces of $V$ (show this).

Problems

Problem 1: Show that there is no nontrivial homomorphism from to .

Group Theory/Normal subgroups and Quotient groups

In the preliminary chapter we discussed equivalence classes on sets. If the reader has not yet mastered this notion, he/she is advised to do so before starting this section.

Normal Subgroups

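Recall the definition of the kernel in the previous section. We will exhibit an interesting feature it possesses. Namely, let $\varphi: G\to H$ be a homomorphism, let $g\in G$ and consider the coset $g\ker\varphi$. Then there exists an $h\in H$, namely $h = \varphi(g)$, such that $\varphi(x) = h$ for all $x\in g\ker\varphi$. This is easy to see because $\varphi(gk) = \varphi(g)\varphi(k) = \varphi(g)$ for every $k\in\ker\varphi$. In fact, a coset of the kernel consists of all elements in $G$ that are mapped to a particular element, so $g\ker\varphi = \varphi^{-1}(\{\varphi(g)\}) = (\ker\varphi)g$, and the left and right cosets of the kernel coincide. The kernel inspires us to look for what are called normal subgroups.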

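Definition 1: A subgroup $N$ of a group $G$ is called normal if $gNg^{-1}\subseteq N$ for all $g\in G$, where $gNg^{-1} = \{gng^{-1} : n\in N\}$. We may sometimes write $N\trianglelefteq G$ to emphasize that $N$ is normal in $G$.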

Theorem 2: A subgroup $N$ of $G$ is normal if and only if $gN = Ng$ for all $g\in G$.

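Proof: By the definition, a subgroup $N$ is normal if and only if $gNg^{-1} = N$ for all $g\in G$: if $N$ is normal, then applying the definition to $g^{-1}$ gives $g^{-1}Ng\subseteq N$, that is, $N\subseteq gNg^{-1}$. The theorem follows by multiplying $gNg^{-1} = N$ on the right by $g$. ∎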
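The criterion of Theorem 2 translates directly into a finite test. The sketch below (assumed tuple encoding of permutations with right-to-left composition; `is_normal` is our own helper) compares the left coset $gN$ with the right coset $Ng$ for every $g$ in $S_3$. The subgroup of even permutations passes, while the subgroup generated by the transposition $(0\ 1)$ fails.

```python
from itertools import permutations


def compose(p, q):
    """p∘q: apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(q)))


def is_normal(N, G):
    """Theorem 2 test: N is normal in G iff gN == Ng for every g in G."""
    return all({compose(g, n) for n in N} == {compose(n, g) for n in N} for g in G)


S3 = list(permutations(range(3)))
A3 = {(0, 1, 2), (1, 2, 0), (2, 0, 1)}  # identity and the two 3-cycles
T = {(0, 1, 2), (1, 0, 2)}  # subgroup generated by the transposition (0 1)
print(is_normal(A3, S3), is_normal(T, S3))  # True False
```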

We suggested in the introduction that the kernel is a normal subgroup, so we had better prove it!

Theorem 3: Let $\varphi: G\to H$ be any homomorphism. Then $\ker\varphi$ is normal in $G$.

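Proof: Let $k\in\ker\varphi$ and $g\in G$. Then $\varphi(gkg^{-1}) = \varphi(g)\varphi(k)\varphi(g)^{-1} = \varphi(g)e_H\varphi(g)^{-1} = e_H$, so $gkg^{-1}\in\ker\varphi$, proving the theorem. ∎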

Theorem 4: Let $G, H$ be groups and $\varphi: G\to H$ a group homomorphism. If $N$ is a normal subgroup of $H$, then $\varphi^{-1}(N)$ is normal in $G$.

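Proof: Let $x\in\varphi^{-1}(N)$ and $g\in G$. Then $\varphi(gxg^{-1}) = \varphi(g)\varphi(x)\varphi(g)^{-1}\in N$ since $N$ is normal in $H$, and so $gxg^{-1}\in\varphi^{-1}(N)$, proving the theorem. ∎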

Theorem 5: Let $G, H$ be groups and $\varphi: G\to H$ a group homomorphism. If $N$ is a normal subgroup of $G$, then $\varphi(N)$ is normal in $\varphi(G)$.

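Proof: Let $h\in\varphi(G)$ and $y\in\varphi(N)$. If $g\in G$ and $n\in N$ are such that $\varphi(g) = h$ and $\varphi(n) = y$, we have $hyh^{-1} = \varphi(g)\varphi(n)\varphi(g)^{-1} = \varphi(gng^{-1}) = \varphi(n')$ for some $n'\in N$ since $N$ is normal. Thus $hyh^{-1}\in\varphi(N)$ for all $h\in\varphi(G)$ and $y\in\varphi(N)$, and so $\varphi(N)$ is normal in $\varphi(G)$. ∎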

Corollary 6: Let $G, H$ be groups and $\varphi: G\to H$ a surjective group homomorphism. If $N$ is a normal subgroup of $G$, then $\varphi(N)$ is normal in $H$.

Proof: Since $\varphi$ is surjective, $\varphi(G) = H$, so we may replace $\varphi(G)$ with $H$ in the proof of Theorem 5. ∎

Remark 7: If $N$ is a normal subgroup of $M$ and $M$ is a normal subgroup of $G$, it does not necessarily follow that $N$ is a normal subgroup of $G$. The reader is invited to find a counterexample.

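Theorem 8: Let $G$ be a group and $H, K$ be subgroups of $G$, and write $HK = \{hk : h\in H, k\in K\}$. Then

- i) If $K$ is normal, then $HK$ is a subgroup of $G$.

- ii) If both $H$ and $K$ are normal, then $HK$ is a normal subgroup of $G$.

- iii) If $H$ and $K$ are normal, then $H\cap K$ is a normal subgroup of $G$.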

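Proof: i) Let $K$ be normal. First, $HK = KH$: for each $h\in H$ and $k\in K$ there exists $k' = hkh^{-1}\in K$ such that $hk = k'h$, so $HK\subseteq KH$, and the reverse inclusion follows in the same way from $kh = h(h^{-1}kh)$. To show $HK$ is a subgroup, note that $e = ee\in HK$ and let $h_1k_1, h_2k_2\in HK$. Then $(h_1k_1)(h_2k_2)^{-1} = h_1k_1k_2^{-1}h_2^{-1} = h_1h_2^{-1}k'$ with $k' = h_2(k_1k_2^{-1})h_2^{-1}\in K$ since $K$ is normal, and so $HK$ is a subgroup.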

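ii) Let $hk\in HK$ and $g\in G$. Then since both $H$ and $K$ are normal, there exist $h' = ghg^{-1}\in H$ and $k' = gkg^{-1}\in K$ such that $ghkg^{-1} = (ghg^{-1})(gkg^{-1}) = h'k'$. It follows that $g(HK)g^{-1}\subseteq HK$, and since $HK$ is a subgroup by i), $HK$ is normal.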