The two most important prerequisites for the theory of the Fourier transform are differential calculus and integral calculus. Readers need to learn both before studying the theory of the Fourier transform, so we review them on this page.

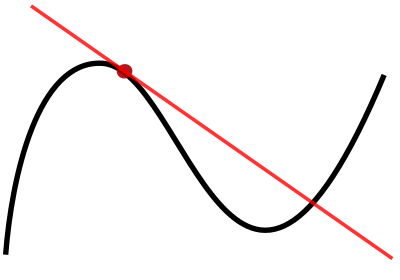

The graph of a function, drawn in black, and a tangent line to that function, drawn in red. The slope of the tangent line is equal to the derivative of the function at the marked point.

Differentiation is the process of finding the derivative of a function $f(x)$ with respect to the variable $x$. The derivative of $f(x)$ is denoted as $f'(x)$ or $\frac{d}{dx}f(x)$.

Differentiation is manipulated as follows:

$$\frac{d}{dx}(x^{3}+1) = 3x^{3-1} = 3x^{2}$$

As you can see, when a term is differentiated, the exponent of the variable becomes a multiplying coefficient and the exponent itself is reduced by one. A term that does not contain the variable $x$ is removed.

$$\frac{d}{dx}(x^{5}+x^{2}+28) = 5x^{5-1}+2x^{2-1} \quad (28 \text{ does not contain } x \text{, so 28 is removed})$$

$$= 5x^{4}+2x^{1} = 5x^{4}+2x$$

$$\frac{d}{dx}(x^{7}+x^{4}+x+7) = 7x^{7-1}+4x^{4-1}+1x^{1-1} \quad (7 \text{ does not contain } x \text{, so 7 is removed})$$

$$= 7x^{6}+4x^{3}+1x^{0} = 7x^{6}+4x^{3}+1$$
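The power rule used in the examples above can be sketched in a few lines of Python. This is only an illustration: the function name `differentiate` and the choice to store a polynomial as a dictionary mapping exponent to coefficient are assumptions made for this sketch, not part of the original text.

```python
def differentiate(coeffs):
    """Differentiate a polynomial given as {exponent: coefficient}."""
    result = {}
    for power, coeff in coeffs.items():
        if power == 0:
            continue  # a term without x is removed
        # the exponent becomes a factor, and the exponent drops by one
        result[power - 1] = result.get(power - 1, 0) + coeff * power
    return result


# x^7 + x^4 + x + 7  ->  7x^6 + 4x^3 + 1
print(differentiate({7: 1, 4: 1, 1: 1, 0: 7}))  # {6: 7, 3: 4, 0: 1}
```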

(1) $\frac{d}{dx}(x^{4}+15) =$

(2) $\frac{d}{dx}(x^{5}+x^{3}+x) =$

If you differentiate $f(x)=x^{3}$ and $f(x)=x^{3}-2$, you get $f'(x)=3x^{2}$ in both cases, because the constant term is removed. To go in the reverse direction, from a derivative back to the original function, integral calculus is used. The integration of $f(x)$ is denoted as $\int f(x)\,dx$.

Integration is carried out as follows:

$$\int 3x^{2}\,dx = \frac{3x^{2+1}}{2+1}+C = x^{3}+C$$

where $C$ is the constant of integration.

More generally, the integral of $x^{n}$ (for $n \neq -1$) is:

$$\int x^{n}\,dx = \frac{x^{n+1}}{n+1}+C$$

The definite integral is defined as follows:

$$\int_{a}^{b}f(x)\,dx = [F(x)]_{a}^{b} = F(b)-F(a)$$

where $F(x)=\int f(x)\,dx$.

(1) $\int 4x\,dx = \frac{4x^{1+1}}{1+1}+C = 2x^{2}+C$

(2) $\int_{1}^{2}(2x+1)\,dx = [x^{2}+x]_{1}^{2} = (4+2)-(1+1) = 4$
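The two worked integrals above follow the same mechanical recipe, which can be sketched in Python. The helper names (`antiderivative`, `evaluate`, `definite_integral`) and the dictionary representation of a polynomial are assumptions of this sketch; exact fractions are used so that the division by $n+1$ introduces no rounding.

```python
from fractions import Fraction


def antiderivative(coeffs):
    """Antiderivative of {exponent: coefficient}, constant C omitted."""
    return {p + 1: Fraction(c, p + 1) for p, c in coeffs.items()}


def evaluate(coeffs, x):
    """Evaluate the polynomial at x."""
    return sum(c * x ** p for p, c in coeffs.items())


def definite_integral(coeffs, a, b):
    """Compute F(b) - F(a), where F is the antiderivative."""
    F = antiderivative(coeffs)
    return evaluate(F, b) - evaluate(F, a)


# integral of (2x + 1) from 1 to 2
print(definite_integral({1: 2, 0: 1}, 1, 2))  # 4
```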

(1) $\int 6x\,dx =$

(2) $\int_{0}^{2}(3x^{2}+3)\,dx =$

Euler's number $e$ has the following properties:

$$\int e^{x}\,dx = e^{x}+C$$

$$\frac{d}{dx}e^{x} = e^{x}$$

By the way, in mathematics, $\exp(x)$ denotes $e^{x}$.

Fourier Series

For the function $f(x)$,

$$f(x)=f(a)+\frac{f'(a)}{1!}(x-a)+\frac{f''(a)}{2!}(x-a)^{2}+\cdots+\frac{f^{(n)}(a)}{n!}(x-a)^{n}+\cdots$$

This is the Taylor expansion of $f(x)$ about the point $x=a$.

For the function $f(x)$ with period $2\pi$, consider the series

$$\frac{a_{0}}{2}+\sum_{n=1}^{\infty}(a_{n}\cos nx+b_{n}\sin nx) \qquad (1)$$

This series is referred to as the Fourier series of $f(x)$. The coefficients $a_{n}$ and $b_{n}$ are given by

$$a_{n}=\frac{1}{\pi}\int_{-\pi}^{\pi}f(x)\cos(nx)\,dx$$

$$b_{n}=\frac{1}{\pi}\int_{-\pi}^{\pi}f(x)\sin(nx)\,dx$$

where $n = 0, 1, 2, \ldots$ for $a_{n}$ and $n = 1, 2, \ldots$ for $b_{n}$. When the series converges to $f(x)$, we write

$$f(x)=\frac{a_{0}}{2}+\sum_{n=1}^{\infty}(a_{n}\cos nx+b_{n}\sin nx)$$
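To make the coefficient formulas concrete, the sketch below approximates $a_{n}$ and $b_{n}$ numerically with a midpoint rule. The function name `fourier_coefficients`, the sample count, and the test function $f(x)=x$ on $(-\pi,\pi)$ are all choices made for this illustration. For that particular function the exact values are $a_{n}=0$ and $b_{n}=2(-1)^{n+1}/n$, which gives something to compare against.

```python
import math


def fourier_coefficients(f, n, samples=20000):
    """Approximate a_n and b_n of f on [-pi, pi] with a midpoint rule."""
    h = 2 * math.pi / samples
    a = 0.0
    b = 0.0
    for k in range(samples):
        x = -math.pi + (k + 0.5) * h  # midpoint of the k-th subinterval
        a += f(x) * math.cos(n * x) * h
        b += f(x) * math.sin(n * x) * h
    return a / math.pi, b / math.pi


for n in range(1, 4):
    a_n, b_n = fourier_coefficients(lambda x: x, n)
    exact_b = 2 * (-1) ** (n + 1) / n
    print(n, abs(a_n) < 1e-6, abs(b_n - exact_b) < 1e-6)
```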

The Fourier transform relates the function's time domain, shown in red, to the function's frequency domain, shown in blue. The component frequencies, spread across the frequency spectrum, are represented as peaks in the frequency domain.

The Fourier transform converts a function $f(t)$ of a variable such as time or a spatial coordinate into a function $\hat{f}(\xi)$ of frequency:

$$\hat{f}(\xi)=\int_{-\infty}^{\infty}f(t)\,e^{-i2\pi t\xi}\,dt$$

The integral above is referred to as the Fourier integral, while $\hat{f}(\xi)$ is referred to as the Fourier transform of $f(t)$. Here $t$ denotes time and $\xi$ denotes frequency.

On the other hand, the inverse Fourier transform is defined as follows:

$$f(t)=\int_{-\infty}^{\infty}\hat{f}(\xi)\,e^{i2\pi t\xi}\,d\xi$$
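As a numerical sanity check of the forward transform in the ordinary-frequency convention, the sketch below integrates a Gaussian. It relies on the standard fact that $f(t)=e^{-\pi t^{2}}$ is its own Fourier transform under this convention. The function name `fourier_transform`, the truncation of the integral to $[-10, 10]$, and the sample count are assumptions of this sketch.

```python
import cmath
import math


def fourier_transform(f, xi, t_max=10.0, samples=20000):
    """Midpoint-rule approximation of the integral of f(t) e^{-i 2 pi t xi} dt."""
    h = 2 * t_max / samples
    total = 0j
    for k in range(samples):
        t = -t_max + (k + 0.5) * h
        total += f(t) * cmath.exp(-2j * math.pi * t * xi) * h
    return total


def gauss(t):
    return math.exp(-math.pi * t * t)


value = fourier_transform(gauss, 0.5)
# the transform of the Gaussian evaluated at xi = 0.5 should match gauss(0.5)
print(abs(value - gauss(0.5)) < 1e-8)
```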

In university textbooks, the Fourier transform is usually introduced with the angular frequency $\omega$, that is, with the substitution

$$\xi \rightarrow \omega = 2\pi\xi$$

Two normalizations are common.

1.

$$\hat{f}(\omega)=\int_{-\infty}^{\infty}f(t)\,e^{-i\omega t}\,dt$$

$$f(t)=\frac{1}{2\pi}\int_{-\infty}^{\infty}\hat{f}(\omega)\,e^{i\omega t}\,d\omega$$

2.

$$\hat{f}(\omega)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}f(t)\,e^{-i\omega t}\,dt$$

$$f(t)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}\hat{f}(\omega)\,e^{i\omega t}\,d\omega$$

Fourier Sine Series

The series below is called the Fourier sine series.

$$f(x)=\sum_{n=1}^{\infty}b_{n}\sin\frac{n\pi x}{L}$$

where

$$b_{n}=\frac{2}{L}\int_{0}^{L}f(x)\sin\frac{n\pi x}{L}\,dx$$

Fourier Cosine Series

The series below is called the Fourier cosine series.

$$f(x)=\frac{a_{0}}{2}+\sum_{n=1}^{\infty}a_{n}\cos\frac{n\pi x}{L}$$

where

$$a_{n}=\frac{2}{L}\int_{0}^{L}f(x)\cos\frac{n\pi x}{L}\,dx$$

Let $f(t)$ be defined on $(-\infty,\infty)$ and suppose

$$\int_{-\infty}^{\infty}|f(t)|\,dt \leq M$$

for some finite constant $M$. Then we have the functions below.

$$f(t)=\frac{1}{2\pi}\int_{-\infty}^{\infty}\hat{f}(\omega)\,e^{i\omega t}\,d\omega$$

This representation of $f(t)$ is called the Fourier integral.

$$\hat{f}(\omega)=\int_{-\infty}^{\infty}f(t)\,e^{-i\omega t}\,dt$$

This function $\hat{f}(\omega)$ is called the Fourier transform of $f(t)$.

$$\int_{-\infty}^{\infty}|x(t)|^{2}\,dt=\int_{-\infty}^{\infty}|X(f)|^{2}\,df$$

where $X(f)=\mathcal{F}\{x(t)\}$ is the Fourier transform of $x(t)$ and $f$ represents the frequency component of $x$. The identity above is called Parseval's theorem.

Let $\bar{X}(f)$ be the complex conjugate of $X(f)$. Assume that $x(t)$ is real-valued and take the normalization $X(f)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}x(t)\,e^{-ift}\,dt$. Then

$$X(-f)=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}x(-t)\,e^{-ift}\,dt=\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}x(t)\,e^{ift}\,dt=\bar{X}(f)$$

Now consider

$$\int_{-\infty}^{\infty}|X(f)|^{2}\,df$$

Here, we know that $X(f)$ is the Fourier transform of $x(t)$ and that $|X(f)|^{2}=\bar{X}(f)X(f)$. Therefore

$$\int_{-\infty}^{\infty}\bar{X}(f)X(f)\,df$$

$$=\int_{-\infty}^{\infty}\left(\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}x(t)\,e^{ift}\,dt\right)\left(\frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty}x(t')\,e^{-ift'}\,dt'\right)df$$

$$=\int_{-\infty}^{\infty}\int_{-\infty}^{\infty}x(t)\,x(t')\left(\frac{1}{2\pi}\int_{-\infty}^{\infty}e^{if(t-t')}\,df\right)dt\,dt'$$

$$=\int_{-\infty}^{\infty}\int_{-\infty}^{\infty}x(t)\,x(t')\,\delta(t-t')\,dt\,dt'$$

$$=\int_{-\infty}^{\infty}|x(t)|^{2}\,dt$$

Hence

$$\int_{-\infty}^{\infty}|x(t)|^{2}\,dt=\int_{-\infty}^{\infty}|X(f)|^{2}\,df$$
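Parseval's theorem has a discrete analogue that is easy to check numerically. The sketch below is not the continuous statement proved above: it assumes the unnormalized discrete Fourier transform $X_{k}=\sum_{n}x_{n}e^{-2\pi i kn/N}$, for which the identity reads $\sum_{n}|x_{n}|^{2}=\frac{1}{N}\sum_{k}|X_{k}|^{2}$. The function name `dft` and the sample values are made up for the illustration.

```python
import cmath


def dft(x):
    """Naive O(N^2) discrete Fourier transform (unnormalized)."""
    N = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
        for k in range(N)
    ]


x = [1.0, 2.0, 0.5, -1.0, 0.0, 3.0]  # arbitrary sample signal
X = dft(x)

time_energy = sum(abs(v) ** 2 for v in x)
freq_energy = sum(abs(v) ** 2 for v in X) / len(x)

# the two energies agree up to floating-point rounding
print(abs(time_energy - freq_energy) < 1e-9)
```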

Bessel Functions

The equation below is called Bessel's differential equation.

$$x^{2}\frac{d^{2}y}{dx^{2}}+x\frac{dy}{dx}+(x^{2}-n^{2})y=0$$

The general solution of Bessel's differential equation can be written in either of two forms: (1) a linear combination of the Bessel function (also known as the Bessel function of the first kind) and the Neumann function (also known as the Bessel function of the second kind), or (2) a linear combination of the Hankel function of the first kind and the Hankel function of the second kind.

The Bessel function (of the first kind) is denoted as $J_{n}(x)$ and is defined as follows:

$$J_{n}(x)=\frac{x^{n}}{2^{n}\,\Gamma(n+1)}\left(1-\frac{x^{2}}{2(2n+2)}+\frac{x^{4}}{2\cdot 4\,(2n+2)(2n+4)}-\cdots\right)$$

$$=\sum_{m=0}^{\infty}\frac{(-1)^{m}}{m!\,\Gamma(m+n+1)}\left(\frac{x}{2}\right)^{2m+n}$$
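The series form lends itself to direct evaluation. The sketch below sums the first terms of the series with Python's `math.gamma`; the function name `bessel_j` and the fixed cutoff of 30 terms are assumptions of this illustration, and the truncated sum is only reliable for moderate $x$ and non-negative order $n$.

```python
import math


def bessel_j(n, x, terms=30):
    """Partial sum of the power series of J_n(x), for n >= 0."""
    return sum(
        (-1) ** m
        / (math.factorial(m) * math.gamma(m + n + 1))
        * (x / 2) ** (2 * m + n)
        for m in range(terms)
    )


print(bessel_j(0, 0.0))                 # 1.0
# 2.404825557695773 is the first positive zero of J_0
print(abs(bessel_j(0, 2.404825557695773)) < 1e-9)
```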

The Hankel functions of the first and second kind and the Neumann function are defined as follows:

$$H_{n}^{(1)}(x)=J_{n}(x)+iN_{n}(x)$$

$$H_{n}^{(2)}(x)=J_{n}(x)-iN_{n}(x)$$

$$N_{n}(x)=\frac{J_{n}(x)\cos(n\pi)-J_{-n}(x)}{\sin(n\pi)}$$

Here $\Gamma(z)$ is the gamma function, $i$ is the imaginary unit, $N_{n}(x)$ is the Neumann function, and $H_{n}(x)$ denotes a Hankel function.

The Laplace transform is an integral transform which is widely used in physics and engineering.

Laplace Transforms involve a technique to change an expression into another form that is easier to work with using an improper integral. We usually introduce Laplace Transforms in the context of differential equations, since we use them a lot to solve some differential equations that can't be solved using other standard techniques. However, Laplace Transforms require only improper integration techniques to use. So you may run across them in first year calculus.

Notation: The Laplace transform is denoted as $\mathcal{L}\{f(t)\}$.

The Laplace transform is named after mathematician and astronomer Pierre-Simon Laplace.

Topics You Need to Understand For This Page

Improper Integrals

For a function $f(t)$, using Euler's number $e$ and a complex parameter $s$, the function $F(s)$ is defined by

$$F(s)=\mathcal{L}\{f(t)\}(s)=\int_{0}^{\infty}e^{-st}f(t)\,dt$$

The parameter $s$ is a complex number,

$$s=\sigma+i\omega,$$

with real numbers $\sigma$ and $\omega$. This $F(s)$ is called the Laplace transform of $f(t)$.

Here is what is going on.

Examples of Laplace transform

| $f(t)$ | $F(s)=\mathcal{L}\{f(t)\}$ |
|---|---|
| $C$ | $\frac{C}{s}$ |
| $t$ | $\frac{1}{s^{2}}$ |
| $t^{n}$ | $\frac{n!}{s^{n+1}}$ |
| $\frac{t^{n-1}}{(n-1)!}$ | $\frac{1}{s^{n}}$ |
| $e^{at}$ | $\frac{1}{s-a}$ |
| $e^{-at}$ | $\frac{1}{s+a}$ |
| $\cos\omega t$ | $\frac{s}{s^{2}+\omega^{2}}$ |
| $\sin\omega t$ | $\frac{\omega}{s^{2}+\omega^{2}}$ |
| $\frac{t^{n-1}}{\Gamma(n)}$ | $\frac{1}{s^{n}}$ |
| $\delta(t-a)$ | $e^{-as}$ |
| $H(t-a)$ | $\frac{e^{-as}}{s}$ |

In the above table, $C$ and $a$ are constants, $n$ is a natural number, $\delta(t-a)$ is the Dirac delta function, and $H(t-a)$ is the Heaviside step function.

| ID | Function | Time domain $x(t)=\mathcal{L}^{-1}\{X(s)\}$ | Laplace domain $X(s)=\mathcal{L}\{x(t)\}$ | Region of convergence for causal systems |
|---|---|---|---|---|
| 1 | Ideal delay | $\delta(t-\tau)$ | $e^{-\tau s}$ | |
| 1a | Unit impulse | $\delta(t)$ | $1$ | all $s$ |
| 2 | Delayed $n$th power with frequency shift | $\frac{(t-\tau)^{n}}{n!}e^{-\alpha(t-\tau)}\cdot u(t-\tau)$ | $\frac{e^{-\tau s}}{(s+\alpha)^{n+1}}$ | $s>-\alpha$ |
| 2a | $n$th power | $\frac{t^{n}}{n!}\cdot u(t)$ | $\frac{1}{s^{n+1}}$ | $s>0$ |
| 2a.1 | $q$th power | $\frac{t^{q}}{\Gamma(q+1)}\cdot u(t)$ | $\frac{1}{s^{q+1}}$ | $s>0$ |
| 2a.2 | Unit step | $u(t)$ | $\frac{1}{s}$ | $s>0$ |
| 2b | Delayed unit step | $u(t-\tau)$ | $\frac{e^{-\tau s}}{s}$ | $s>0$ |
| 2c | Ramp | $t\cdot u(t)$ | $\frac{1}{s^{2}}$ | $s>0$ |
| 2d | $n$th power with frequency shift | $\frac{t^{n}}{n!}e^{-\alpha t}\cdot u(t)$ | $\frac{1}{(s+\alpha)^{n+1}}$ | $s>-\alpha$ |
| 2d.1 | Exponential decay | $e^{-\alpha t}\cdot u(t)$ | $\frac{1}{s+\alpha}$ | $s>-\alpha$ |
| 3 | Exponential approach | $(1-e^{-\alpha t})\cdot u(t)$ | $\frac{\alpha}{s(s+\alpha)}$ | $s>0$ |
| 4 | Sine | $\sin(\omega t)\cdot u(t)$ | $\frac{\omega}{s^{2}+\omega^{2}}$ | $s>0$ |
| 5 | Cosine | $\cos(\omega t)\cdot u(t)$ | $\frac{s}{s^{2}+\omega^{2}}$ | $s>0$ |
| 6 | Hyperbolic sine | $\sinh(\alpha t)\cdot u(t)$ | $\frac{\alpha}{s^{2}-\alpha^{2}}$ | $s>\lvert\alpha\rvert$ |
| 7 | Hyperbolic cosine | $\cosh(\alpha t)\cdot u(t)$ | $\frac{s}{s^{2}-\alpha^{2}}$ | $s>\lvert\alpha\rvert$ |
| 8 | Exponentially decaying sine | $e^{-\alpha t}\sin(\omega t)\cdot u(t)$ | $\frac{\omega}{(s+\alpha)^{2}+\omega^{2}}$ | $s>-\alpha$ |
| 9 | Exponentially decaying cosine | $e^{-\alpha t}\cos(\omega t)\cdot u(t)$ | $\frac{s+\alpha}{(s+\alpha)^{2}+\omega^{2}}$ | $s>-\alpha$ |
| 10 | $n$th root | $\sqrt[n]{t}\cdot u(t)$ | $s^{-(n+1)/n}\cdot\Gamma\left(1+\frac{1}{n}\right)$ | $s>0$ |
| 11 | Natural logarithm | $\ln\left(\frac{t}{t_{0}}\right)\cdot u(t)$ | $-\frac{1}{s}\left[\ln(t_{0}s)+\gamma\right]$ | $s>0$ |
| 12 | Bessel function of order $n$ | $J_{n}(\omega t)\cdot u(t)$ | $\frac{\omega^{n}\left(s+\sqrt{s^{2}+\omega^{2}}\right)^{-n}}{\sqrt{s^{2}+\omega^{2}}}$ | $s>0$ $(n>-1)$ |
| 13 | Modified Bessel function of order $n$ | $I_{n}(\omega t)\cdot u(t)$ | $\frac{\omega^{n}\left(s+\sqrt{s^{2}-\omega^{2}}\right)^{-n}}{\sqrt{s^{2}-\omega^{2}}}$ | $s>\lvert\omega\rvert$ |
| 14 | Bessel function | $Y_{0}(\alpha t)\cdot u(t)$ | | |
| 15 | Modified Bessel function | $K_{0}(\alpha t)\cdot u(t)$ | | |
| 16 | Error function | $\mathrm{erf}(t)\cdot u(t)$ | $\frac{e^{s^{2}/4}\operatorname{erfc}(s/2)}{s}$ | $s>0$ |
| 17 | Constant | $C$ | $\frac{C}{s}$ | |

Explanatory notes:

- $u(t)$ represents the Heaviside step function.
- $\delta(t)$ represents the Dirac delta function.
- $\Gamma(z)$ represents the gamma function.
- $\gamma$ is the Euler–Mascheroni constant.
- $t$, a real number, typically represents time, although it can represent any independent dimension.
- $s$ is the complex angular frequency.
- $\alpha$, $\beta$, $\tau$, and $\omega$ are real numbers.
- $C$ is a constant.
- $n$ is an integer.

A causal system is a system where the impulse response h (t ) is zero for all time t prior to t = 0. In general, the ROC for causal systems is not the same as the ROC for anticausal systems. See also causality.

1. Calculate $\mathcal{L}\{C\}$, where $C$ is a constant.

$$\begin{aligned}
\int_{0}^{\infty}e^{-st}C\,dt &= C\int_{0}^{\infty}e^{-st}\,dt \\
&= C\lim_{b\to\infty}\int_{0}^{b}e^{-st}\,dt \\
&= C\lim_{b\to\infty}\left[\frac{e^{-st}}{-s}\right]_{t=0}^{t=b} \\
&= -\frac{C}{s}\left[\lim_{b\to\infty}e^{-bs}-e^{0}\right] \\
&= -\frac{C}{s}[0-1] \\
&= \frac{C}{s}
\end{aligned}$$

$$\therefore\ \mathcal{L}\{C\}=\frac{C}{s}$$

2. Calculate $\mathcal{L}\{e^{-at}\}$.

$$\begin{aligned}
\int_{0}^{\infty}e^{-st}\cdot e^{-at}\,dt &= \int_{0}^{\infty}e^{-(s+a)t}\,dt \\
&= \lim_{b\to\infty}\int_{0}^{b}e^{-(s+a)t}\,dt \\
&= \lim_{b\to\infty}\left[\frac{-e^{-(s+a)t}}{s+a}\right]_{t=0}^{t=b} \\
&= \lim_{b\to\infty}\left[\frac{-e^{-(s+a)b}}{s+a}-\frac{-e^{-(s+a)0}}{s+a}\right] \\
&= \frac{1}{s+a}
\end{aligned}$$

$$\therefore\ \mathcal{L}\{e^{-at}\}=\frac{1}{s+a}$$
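Both results can be checked numerically by truncating the improper integral at a large upper limit. The sketch below is only an approximation under stated assumptions: real $s$ with $s>0$ and $s+a>0$, a cutoff at $t=50$, and a midpoint rule. The function name `laplace_transform` and all parameter values are chosen for this illustration.

```python
import math


def laplace_transform(f, s, t_max=50.0, samples=200000):
    """Midpoint-rule approximation of the integral of e^{-st} f(t) on [0, t_max]."""
    h = t_max / samples
    total = 0.0
    for k in range(samples):
        t = (k + 0.5) * h
        total += math.exp(-s * t) * f(t) * h
    return total


a, s = 2.0, 1.0
approx = laplace_transform(lambda t: math.exp(-a * t), s)
print(abs(approx - 1 / (s + a)) < 1e-6)  # compares against 1/(s+a)

C = 5.0
approx_const = laplace_transform(lambda t: C, 2.0)
print(abs(approx_const - C / 2.0) < 1e-6)  # compares against C/s
```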

Complex Integration

Suppose $f(z)$ is continuous on the piecewise smooth curve $C: z=z(t)$ $(a\leq t\leq b)$. Then we obtain the equation below.

$$\int_{C}f(z)\,dz=\int_{a}^{b}f\{z(t)\}\,\frac{dz(t)}{dt}\,dt$$

where $f(z)$ is a complex function and $z$ is a complex variable.

Let $f(z)=u(x,y)+iv(x,y)$ and $dz=dx+i\,dy$. Then

$$\int_{C}f(z)\,dz=\int_{C}(u+iv)(dx+i\,dy)$$

$$=\left(\int_{C}u\,dx-\int_{C}v\,dy\right)+i\left(\int_{C}v\,dx+\int_{C}u\,dy\right)$$

The right side of the equation consists of real line integrals, so according to calculus, the relationship below can be applied.

$$\int_{x_{1}}^{x_{2}}f(x)\,dx=\int_{t_{1}}^{t_{2}}f(x)\frac{dx}{dt}\,dt$$

Hence

$$\int_{C}f(z)\,dz=\left(\int_{a}^{b}u\frac{dx}{dt}\,dt-\int_{a}^{b}v\frac{dy}{dt}\,dt\right)+i\left(\int_{a}^{b}v\frac{dx}{dt}\,dt+\int_{a}^{b}u\frac{dy}{dt}\,dt\right)$$

$$=\left(\int_{a}^{b}u\,x'(t)\,dt-\int_{a}^{b}v\,y'(t)\,dt\right)+i\left(\int_{a}^{b}v\,x'(t)\,dt+\int_{a}^{b}u\,y'(t)\,dt\right)$$

$$=\int_{a}^{b}(u+iv)\left(x'(t)+iy'(t)\right)dt$$

$$=\int_{a}^{b}f\{z(t)\}\,z'(t)\,dt$$

This completes the proof.
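The parametrized formula can be tried on a standard example. The sketch below integrates $f(z)=1/z$ once around the unit circle $z(t)=e^{it}$, $0\leq t\leq 2\pi$, for which the well-known value is $2\pi i$. The function name `contour_integral`, the midpoint rule, and the choice of example are assumptions of this illustration.

```python
import cmath
import math


def contour_integral(f, z, dz, a, b, samples=10000):
    """Approximate the integral of f(z(t)) z'(t) dt over [a, b] with a midpoint rule."""
    h = (b - a) / samples
    total = 0j
    for k in range(samples):
        t = a + (k + 0.5) * h
        total += f(z(t)) * dz(t) * h
    return total


result = contour_integral(
    lambda w: 1 / w,                    # f(z) = 1/z
    lambda t: cmath.exp(1j * t),        # z(t) = e^{it}
    lambda t: 1j * cmath.exp(1j * t),   # z'(t) = i e^{it}
    0.0,
    2 * math.pi,
)
print(abs(result - 2j * math.pi) < 1e-9)
```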

The Basics

$$\mathbf{A}=\begin{bmatrix}a_{11}&a_{12}&a_{13}&a_{14}\\a_{21}&a_{22}&a_{23}&a_{24}\\a_{31}&a_{32}&a_{33}&a_{34}\end{bmatrix}.$$

A matrix is composed of a rectangular array of numbers arranged in rows and columns. The horizontal lines are called rows and the vertical lines are called columns. The individual items in a matrix are called elements. The element in the $i$-th row and the $j$-th column of a matrix is referred to as the $i,j$, $(i,j)$, or $(i,j)$th element of the matrix. To specify the size of a matrix, a matrix with $m$ rows and $n$ columns is called an $m$-by-$n$ matrix, and $m$ and $n$ are called its dimensions.

(1) $\begin{bmatrix}5&7&3\\1&2&9\end{bmatrix}+\begin{bmatrix}4&0&5\\8&3&0\end{bmatrix}=$

(2) $4\begin{bmatrix}-1&0&-5\\7&9&-6\end{bmatrix}=$

(3) $\begin{bmatrix}-2&5&7\\0&0&9\end{bmatrix}^{\mathrm{T}}=$

Multiplication of two matrices is defined only if the number of columns of the left matrix is the same as the number of rows of the right matrix. If $\mathbf{A}$ is an $m$-by-$n$ matrix and $\mathbf{B}$ is an $n$-by-$p$ matrix, then their matrix product $\mathbf{AB}$ is the $m$-by-$p$ matrix whose entries are given by the dot product of the corresponding row of $\mathbf{A}$ and the corresponding column of $\mathbf{B}$:

$$[\mathbf{AB}]_{i,j}=A_{i,1}B_{1,j}+A_{i,2}B_{2,j}+\cdots+A_{i,n}B_{n,j}=\sum_{r=1}^{n}A_{i,r}B_{r,j}$$

Schematic depiction of the matrix product $\mathbf{AB}$ of two matrices $\mathbf{A}$ and $\mathbf{B}$.

$$\begin{bmatrix}-2&0\\3&2\end{bmatrix}\begin{bmatrix}1&2\\3&-1\end{bmatrix}=\begin{bmatrix}-2+0&-4+0\\3+6&6+(-2)\end{bmatrix}=\begin{bmatrix}-2&-4\\9&4\end{bmatrix}$$
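The entry formula for the product translates directly into code. The sketch below stores matrices as lists of rows; the function name `matmul` is chosen for this illustration, and the shape check mirrors the rule that the number of columns of the left matrix must equal the number of rows of the right matrix.

```python
def matmul(A, B):
    """Multiply an m-by-n matrix A by an n-by-p matrix B (lists of rows)."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("columns of A must match rows of B")
    p = len(B[0])
    # entry (i, j) is the dot product of row i of A and column j of B
    return [
        [sum(A[i][r] * B[r][j] for r in range(n)) for j in range(p)]
        for i in range(len(A))
    ]


print(matmul([[-2, 0], [3, 2]], [[1, 2], [3, -1]]))  # [[-2, -4], [9, 4]]
```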

(1) $\begin{bmatrix}1&0\\2&2\end{bmatrix}\begin{bmatrix}4\\2\end{bmatrix}=$

(2) $\begin{bmatrix}1&2\\2&3\end{bmatrix}\begin{bmatrix}2&3\\1&4\end{bmatrix}=$

A row vector is a $1\times m$ matrix, while a column vector is an $m\times 1$ matrix.

Suppose $\mathbf{A}$ is a row vector and $\mathbf{B}$ is a column vector. Then the dot product is defined as follows:

$$\mathbf{A}\cdot\mathbf{B}=|\mathbf{A}||\mathbf{B}|\cos\theta$$

where $\theta$ is the angle between the two vectors, or

$$\mathbf{A}\cdot\mathbf{B}=\begin{pmatrix}a_{1}&a_{2}&\cdots&a_{n}\end{pmatrix}\begin{pmatrix}b_{1}\\b_{2}\\\vdots\\b_{n}\end{pmatrix}=a_{1}b_{1}+a_{2}b_{2}+\cdots+a_{n}b_{n}=\sum_{i=1}^{n}a_{i}b_{i}$$

$$\mathbf{A}=\begin{pmatrix}a_{1}&a_{2}&a_{3}\end{pmatrix},\qquad\mathbf{B}=\begin{pmatrix}b_{1}\\b_{2}\\b_{3}\end{pmatrix}$$

$$\mathbf{A}\cdot\mathbf{B}=\begin{pmatrix}a_{1}&a_{2}&a_{3}\end{pmatrix}\begin{pmatrix}b_{1}\\b_{2}\\b_{3}\end{pmatrix}=a_{1}b_{1}+a_{2}b_{2}+a_{3}b_{3}$$

Suppose $\mathbf{A}=\begin{pmatrix}2&1&3\end{pmatrix}$ and $\mathbf{B}=\begin{pmatrix}7\\5\\4\end{pmatrix}$. Then

$$\mathbf{A}\cdot\mathbf{B}=\begin{pmatrix}2&1&3\end{pmatrix}\begin{pmatrix}7\\5\\4\end{pmatrix}=2\cdot 7+1\cdot 5+3\cdot 4=14+5+12=31$$
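The component form of the dot product is a one-line sum in code. In the sketch below, vectors are plain Python lists and the function name `dot` is chosen for this illustration; the row/column distinction is dropped because only the components matter for the sum.

```python
def dot(a, b):
    """Dot product of two vectors given as equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


print(dot([2, 1, 3], [7, 5, 4]))  # 31
```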

(1) $\mathbf{A}=\begin{pmatrix}3\\2\\5\end{pmatrix}$, $\mathbf{B}=\begin{pmatrix}1\\4\\3\end{pmatrix}$, $\mathbf{A}\cdot\mathbf{B}=$

(2) $\mathbf{A}=\begin{pmatrix}1\\0\\3\end{pmatrix}$, $\mathbf{B}=\begin{pmatrix}6\\9\\2\end{pmatrix}$, $\mathbf{A}\cdot\mathbf{B}=$

The magnitude of the cross product is defined as follows:

$$|\mathbf{A}\times\mathbf{B}|=|\mathbf{A}||\mathbf{B}|\sin\theta$$

Or, using the determinant,

$$\mathbf{A}\times\mathbf{B}=\begin{vmatrix}e_{x}&e_{y}&e_{z}\\a_{x}&a_{y}&a_{z}\\b_{x}&b_{y}&b_{z}\end{vmatrix}=(a_{y}b_{z}-a_{z}b_{y},\ a_{z}b_{x}-a_{x}b_{z},\ a_{x}b_{y}-a_{y}b_{x})$$

where $e_{x}$, $e_{y}$, and $e_{z}$ are the unit vectors along the coordinate axes.
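The component formula obtained from the determinant can be written out directly. The sketch below uses 3-tuples for vectors; the function name `cross` is chosen for this illustration.

```python
def cross(a, b):
    """Cross product of two 3-component vectors."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


# the x unit vector crossed with the y unit vector gives the z unit vector
print(cross((1, 0, 0), (0, 1, 0)))  # (0, 0, 1)
```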

Lagrange Equations

$$\frac{d}{dt}\left(\frac{\partial L}{\partial\dot{x}}\right)-\frac{\partial L}{\partial x}=0$$

where $\dot{x}=\frac{dx}{dt}$. The equation above is called the Lagrange equation.

Let the kinetic energy of the point mass be $T$ and the potential energy be $U$. The quantity $T-U$ is called the Lagrangian. Then the kinetic energy is expressed by

$$T=\frac{1}{2}m\dot{x}^{2}+\frac{1}{2}m\dot{y}^{2}=\frac{m}{2}(\dot{x}^{2}+\dot{y}^{2})$$

Thus $T=T(\dot{x},\dot{y})$ and $U=U(x,y)$. Hence the Lagrangian $L$ is

$$L=T-U=T(\dot{x},\dot{y})-U(x,y)=\frac{m}{2}(\dot{x}^{2}+\dot{y}^{2})-U(x,y)$$

Therefore, since $T$ depends only on $\dot{x}$ and $\dot{y}$, and $U$ depends only on $x$ and $y$, we have

$$\frac{\partial L}{\partial\dot{x}}=\frac{\partial T}{\partial\dot{x}}=m\dot{x}$$

$$\frac{\partial L}{\partial\dot{y}}=\frac{\partial T}{\partial\dot{y}}=m\dot{y}$$

In the same way, we have

$$\frac{\partial L}{\partial x}=-\frac{\partial U}{\partial x}$$

$$\frac{\partial L}{\partial y}=-\frac{\partial U}{\partial y}$$
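As a worked step, these partial derivatives can be substituted into the Lagrange equation for each coordinate. Assuming the mass $m$ is constant, and assuming the Lagrange equation holds for $y$ in the same form as for $x$, this recovers Newton's equation of motion:

$$\frac{d}{dt}\left(m\dot{x}\right)+\frac{\partial U}{\partial x}=0\quad\Longrightarrow\quad m\ddot{x}=-\frac{\partial U}{\partial x}$$

$$\frac{d}{dt}\left(m\dot{y}\right)+\frac{\partial U}{\partial y}=0\quad\Longrightarrow\quad m\ddot{y}=-\frac{\partial U}{\partial y}$$

The right-hand sides are the components of the force derived from the potential $U$.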

The State Equation

As an empirical rule for gases, the equation below has been found.

$$PV=nRT \qquad (1)$$

where $P$ is the pressure, $V$ is the volume, $n$ is the amount of substance in moles, $T$ is the absolute temperature, and $R$ is the gas constant:

$$R\simeq 8.31\ \mathrm{J\,(K\,mol)^{-1}}$$

A gas that strictly follows law (1) is called an ideal gas, and (1) is called the ideal gas law. The ideal gas law is the state equation of a gas.

In high school, the ideal gas constant below might be used:

$$R=0.082\ \mathrm{L\,atm\,K^{-1}\,mol^{-1}}$$

But, in university, the gas constant below is often used:

$$R=8.31\ \mathrm{J\,K^{-1}\,mol^{-1}}$$
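A short calculation shows how the ideal gas law is used with the SI value of the gas constant. The sketch below solves $PV=nRT$ for the volume; the function name `ideal_gas_volume` and the example conditions (one mole at 273.15 K and 101325 Pa) are chosen for this illustration.

```python
R = 8.31  # gas constant in J K^-1 mol^-1, as given in the text


def ideal_gas_volume(n_mol, temperature_k, pressure_pa):
    """Volume in cubic metres from PV = nRT."""
    return n_mol * R * temperature_k / pressure_pa


volume = ideal_gas_volume(1.0, 273.15, 101325.0)
print(round(volume * 1000, 1), "litres")  # roughly 22.4 litres
```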
