Cg Programming/Unity/Nonlinear Deformations

This tutorial introduces vertex blending as an example of a nonlinear deformation. Its main application is the rendering of skinned meshes.

While this tutorial is not based on any other specific tutorial, a good understanding of Section “Vertex Transformations” is very useful.

Blending between Two Model Transformations

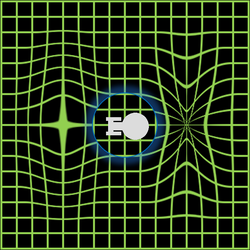

Most deformations of meshes cannot be modeled by the affine transformations with 4×4 matrices that are discussed in Section “Vertex Transformations”. The deformation of space by fictional warp fields is just one example. A more important example in computer graphics is the deformation of meshes when joints are bent, e.g., at elbows or knees.

This tutorial introduces vertex blending to implement some of these deformations. The basic idea is to apply multiple model transformations in the vertex shader (in this tutorial, we use only two model transformations) and then blend the transformed vertices, i.e., compute a weighted average of them with weights that have to be specified for each vertex.

For example, the deformation of the skin near a joint of a skeleton is mainly influenced by the position and orientation of the two (rigid) bones meeting at the joint. Thus, the positions and orientations of the two bones define two affine transformations. Different points on the skin are influenced differently by the two bones: points at the joint might be influenced equally by both bones, while points farther from the joint along one bone are more strongly influenced by that bone than by the other. These different strengths of influence can be implemented by using different weights in the weighted average of the two transformed vertices.
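Written as a formula, with $\mathbf{v}$ the vertex position in object coordinates, $\mathrm{M}_0$ and $\mathrm{M}_1$ the two model matrices, and $w_0(\mathbf{v})$ the per-vertex weight (this notation is chosen here for illustration), vertex blending computes:

$$
\mathbf{v}' = w_0(\mathbf{v})\,\mathrm{M}_0\,\mathbf{v} + \bigl(1 - w_0(\mathbf{v})\bigr)\,\mathrm{M}_1\,\mathbf{v}
= \Bigl(w_0(\mathbf{v})\,\mathrm{M}_0 + \bigl(1 - w_0(\mathbf{v})\bigr)\,\mathrm{M}_1\Bigr)\,\mathbf{v}
$$

Since the weight depends on the vertex, the blended matrix in parentheses changes from vertex to vertex, which is what makes the deformation nonlinear. For instance, with the weight $w_0(\mathbf{v}) = v_z + \tfrac{1}{2}$ used later in this tutorial, $\mathbf{v}'$ contains terms that are quadratic in the coordinates whenever the upper-left 3×3 parts of $\mathrm{M}_0$ and $\mathrm{M}_1$ differ. If the two matrices differ only in their translations, the result is still affine.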

For the purpose of this tutorial, we use two uniform transformations, float4x4 _Trafo0 and float4x4 _Trafo1, which are specified by the user. To this end, a small script in UnityScript (Unity's JavaScript dialect, which recent Unity versions no longer support) is attached to the mesh that should be deformed (e.g., the default sphere). It lets us specify two other game objects and copies their model transformations to the uniforms of the shader:

```
@script ExecuteInEditMode()

public var bone0 : GameObject;
public var bone1 : GameObject;

function Update ()
{
   // copy the model transformations of the two bones to the shader uniforms
   if (null != bone0)
   {
      GetComponent(Renderer).sharedMaterial.SetMatrix("_Trafo0",
         bone0.GetComponent(Renderer).localToWorldMatrix);
   }
   if (null != bone1)
   {
      GetComponent(Renderer).sharedMaterial.SetMatrix("_Trafo1",
         bone1.GetComponent(Renderer).localToWorldMatrix);
   }
   // the shader ignores this object's own transform; placing the object
   // between the bones keeps its bounding box (used, e.g., for culling)
   // near the deformed vertices
   if (null != bone0 && null != bone1)
   {
      transform.position = 0.5 * (bone0.transform.position
         + bone1.transform.position);
      transform.rotation = bone0.transform.rotation;
   }
}
```

In C#, the same script (saved as MyClass.cs, because Unity requires the file name to match the class name) looks like this:

```csharp
using UnityEngine;
using System.Collections;

[ExecuteInEditMode]
public class MyClass : MonoBehaviour
{
   public GameObject bone0;
   public GameObject bone1;

   void Update ()
   {
      // copy the model transformations of the two bones to the shader uniforms
      if (null != bone0)
      {
         GetComponent<Renderer>().sharedMaterial.SetMatrix("_Trafo0",
            bone0.GetComponent<Renderer>().localToWorldMatrix);
      }
      if (null != bone1)
      {
         GetComponent<Renderer>().sharedMaterial.SetMatrix("_Trafo1",
            bone1.GetComponent<Renderer>().localToWorldMatrix);
      }
      // the shader ignores this object's own transform; placing the object
      // between the bones keeps its bounding box (used, e.g., for culling)
      // near the deformed vertices
      if (null != bone0 && null != bone1)
      {
         transform.position = 0.5f * (bone0.transform.position
            + bone1.transform.position);
         transform.rotation = bone0.transform.rotation;
      }
   }
}
```

The two other game objects could be anything. I like cubes with one of the built-in semitransparent shaders, such that their positions and orientations are visible but they don't occlude the deformed mesh.

In this tutorial, the weight for the blending with the transformation _Trafo0 is set to input.vertex.z + 0.5:

```
float weight0 = input.vertex.z + 0.5;
```

and the other weight is 1.0 - weight0. For Unity's default sphere, whose object coordinates range from -0.5 to 0.5, weight0 therefore lies between 0 and 1. Thus, the part with positive input.vertex.z coordinates is influenced more by _Trafo0, and the other part is influenced more by _Trafo1. In general, the weights are application-dependent, and the user should be able to specify the weights for each vertex.

The application of the two transformations and the weighted average can be written this way:

```
float4 blendedVertex =
   weight0 * mul(_Trafo0, input.vertex)
   + (1.0 - weight0) * mul(_Trafo1, input.vertex);
```

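Since _Trafo0 and _Trafo1 transform from object coordinates to world coordinates, the blended vertex is in world coordinates. It still has to be multiplied with the view matrix and the projection matrix; the product of these two matrices is available as UNITY_MATRIX_VP: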

```
output.pos = mul(UNITY_MATRIX_VP, blendedVertex);
```

In order to illustrate the different weights, we visualize weight0 by the red component and 1.0 - weight0 by the green component of a color, which is computed in the vertex shader, interpolated, and returned by the fragment shader:

```
output.col = float4(weight0, 1.0 - weight0, 0.0, 1.0);
```

For an actual application, we could also transform the normal vector by the transposed inverses of the two model transformations, blend the results with the same weights, normalize the blended normal, and perform per-pixel lighting in the fragment shader.

Complete Shader Code

All in all, the shader code looks like this:

```
Shader "Cg shader for vertex blending" {
   SubShader {
      Pass {
         CGPROGRAM

         #pragma vertex vert
         #pragma fragment frag

         #include "UnityCG.cginc"

         // Uniforms set by a script
         uniform float4x4 _Trafo0; // model transformation of bone0
         uniform float4x4 _Trafo1; // model transformation of bone1

         struct vertexInput {
            float4 vertex : POSITION;
         };
         struct vertexOutput {
            float4 pos : SV_POSITION;
            float4 col : COLOR;
         };

         vertexOutput vert(vertexInput input)
         {
            vertexOutput output;

            float weight0 = input.vertex.z + 0.5;
               // depends on the mesh
            float4 blendedVertex =
               weight0 * mul(_Trafo0, input.vertex)
               + (1.0 - weight0) * mul(_Trafo1, input.vertex);

            output.pos = mul(UNITY_MATRIX_VP, blendedVertex);

            output.col = float4(weight0, 1.0 - weight0, 0.0, 1.0);
               // visualize weight0 as red and weight1 as green
            return output;
         }

         float4 frag(vertexOutput input) : COLOR
         {
            return input.col;
         }

         ENDCG
      }
   }
}
```

This is, of course, only an illustration of the concept, but it can already be used for some interesting nonlinear deformations, such as twists around the z axis (the axis along which the weights vary).

For skinned meshes in skeletal animation, many more bones (i.e., model transformations) are necessary, and each vertex has to specify which bones (using, for example, indices) contribute with which weights to the weighted average. However, Unity computes the blending of vertices for its skinned meshes itself (traditionally in software on the CPU; newer versions also offer GPU skinning); thus, this topic is less relevant for Unity programmers.

Summary

Congratulations, you have reached the end of another tutorial. We have seen:

- How to blend vertices that are transformed by two model matrices.

- How this technique can be used for nonlinear deformations and skinned meshes.

Further reading

If you still want to learn more

- about the model transformation, the view transformation, and the projection, you should read the description in Section “Vertex Transformations”.

- about vertex skinning, you could read the section about vertex skinning in Chapter 8 of the “OpenGL ES 2.0 Programming Guide” by Aaftab Munshi, Dan Ginsburg, and Dave Shreiner, published 2009 by Addison-Wesley.