Computer Systems Engineering/Markov models

Markov Models

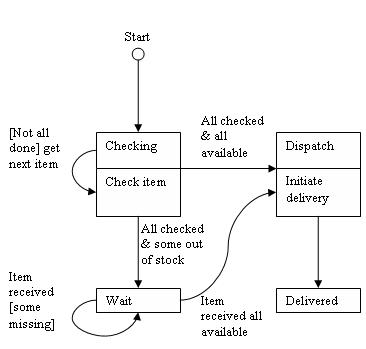

[edit | edit source]State diagrams describe the behavior of a system. They describe all possible states of a system (object in UML) and how the state changes as a result of events that reach the system (object).

For example,

It should be noted that Markov Models treat state transitions as a stochastic process.

Let each state be defined by a random variable. The set of random variables {xn} forms a Markov chain if the probability that the next value (state) is xn+1 depends only on the present value (state) xn and not on any previous values. The effect of the entire previous history on future behavior is summed up in the current state. For “discrete time” Markov chains, transitions can only occur at the integers 1,2,3… For “continuous time” Markov chains, transitions can occur at any time. This is important since the past history is specified entirely by the present state, and we cannot also specify how long a process has been in the present state.

Analytically: P(x(tn+1) = xn+1 | x(tn) = xn, x(tn-1) = xn-1, … x(t1) = x1) = P(x(tn+1) = xn+1 | x(tn) = xn) where t1 < t2 < … < tn < tn+1 and x1, x2, …, xn are in some discrete state space.

Consider the discrete time Markov chain:

Observe: the sum of probabilities of leaving each city is 1.

P(xn = j | x1 = i1, x2 = i2, …, xn-1 = in-1) = P(xn = j | xn-1 = in-1).

P(xn = j | xn-1 = in-1) is a one-step transition probability. The probability goes from one state E=i at step n-1 to state E=j at step n.

Let Pij(n) = probability that a system goes from state Ei to state Ej in n-1 steps. Then, is the probability that the system goes from state Ei to state Ek in one step and then goes from state Ek to state Ej in n-1 steps. This is called the Chapman-Kolmogorov equation.

Let P(n) be a matrix. Each entry is Pij(n). If this is a matrix of n-step transition probabilities, then

(called homogenous if this is independent of n)

Define Pij = P(xn = j | xn-1 = i) as the probability that a system goes to the next state Ej given in state Ei. This is called the set of one-step transition probabilities.

Example: P00 = 0, P01 = ¾ , P02 = ¼ , P12 = ¾

In matrix form:

Let πj = P(xn = j) be the probability that the system is in state j at step n. In our example,

The probability in state j at step n+1 is the probability in state i at step n and goes to state j in one step.

In vector form:

In particular,

For example: Let Π(0) = [0,1,0], starting in Columbia.

After a long time, let

Therefore,

Example:

Observe:

The sum of (1) and (2) is negative (3), so therefore there is a linear dependence between the equations, and thus, there is no unique solution. We also know that π0 + π1 + π2 = 1 (i.e. the system must be in some state). This is called a normalizing condition.

Now solve:

Observe:

This is like flipping a coin, where r = probability of heads and 1-r = probability of tails. So, P(sequence of exactly m heads) = rm (1-r).

How long does a process remain in a state i?

[edit | edit source]Suppose the process enters state Ei. Then the probability of remaining in state Ei at the next step is Pii. The probability that the process leaves Ei at the next step is 1-Pii. Each time step is independent, so P(system remains in state Ei for exactly m additional time steps given that it has just entered state Ei) = (1-Pii) Piim. In other words, the process stays for m time steps with a probability of m*( Pii Pii Pii …Pii) and leaves the state on the m+1 step.

Geometric distribution

[edit | edit source]The average time in state Ei is E(time in state E):

Now, , therefore:

Therefore,

In short, the average time a process stays in a state before leaving is the inverse of the probability that it leaves.

Assume a watch state i for k steps and also that the system does not change state. What is the probability that the process does not leave the state on the next step? Let y = the steps to the first departure.

Thus the process is memory less – as we knew.

Continuous Time Markov Chains

[edit | edit source]P(x(tn+1) = j | x(tn) = in … x(t0) = i0) = P(x(tn+1) = j | x(tn) = in)

Recall that Markov chains are memoryless, so a transition does not depend on how long a process is in a given state.

Let τi = the amount of time in a state i. P(τi > s+t | τi > s) = h(t), which is only a function of t (not s). The function shows how long a process is in a state.

Thus, exponential is the only continuous distribution that satisfies this. Again, if we set s,t = 0, then P(τi >0) = 1, and

Defining the time-dependent transition probability

[edit | edit source]Now, consider the times s ≤ u ≤ t. To get from state Ei at time s to Ej at time t, a process must pass through some state at time u. Therefore,

This is the Chapman-Kolmogorov equation, and is exactly analogous to the discrete case, but is difficult to use directly.

Recall that

In the Chapman-Kolmogorov equation, substitute t+h for t:

Divide by h, take the limit as h approaches 0, u approaches t:

This is called Kolmogorov’s forward equation.

Also,

is Kolmogorov’s backward equation.

If time homogeneous transition probabilities do not depend on initial time t but only on interval h, then qij(t) = qij and qj(t) = qj and = Pij(h), then

State transition probabilities and initial distribution determine all properties of a Markov chain. Observe:

Divide by h, take the limit as h approaches 0, v approaches t:

.

If time homogeneous:

State classifications

[edit | edit source]Recall that

A state is transient if there is a positive probability that a system will not return to that state. Let Xji = number of visits to state i starting at state j. The expected value of Xji, E(Xji) is

A state i is transient if E(Xji) is finite. This implies that Pji(n) approaches 0 as n approaches infinity.

A state i is recurrent if and only if starting from state j, a process returns to state i with a probability of 1. Also, is infinite.

Consider the time it takes to reach a state i from state j for a recurrent chain. Let fij(n) = probability of the first visit to j from i in n steps. Let fii(n) = probability of the first return from i to i in n steps. Then, the probability of ever visiting j starting from state i is:

State i is recurrent if fii = 1, and transient if fii < 1. If fii = 1, then the mean recurrence time is A state is called recurrent non-null if is finite, and recurrent null if is infinite.

For a recurrent state i, The period of state i, , is the greatest common divisor of a set of positive integers n such that A system is aperiodic if = 1, and periodic if > 1.

A system is absorbing if and only if Pii = 1.

A Markov chain is irreducible if every state can be reached from every other state in a finite number of steps; All states of an irreducible Markov chain are of the same type. In an aperiodic Markov chain, all states are aperiodic. If one state is periodic with period d, then all are periodic with the same period d.

Continuous Time Markov Chains

[edit | edit source]Let = random variable = time in state i. A Markov property means the probability of a transition cannot depend on how long the system has been in a state.

Now for s=0, and we have

s = t = 0 again requires

Now,

Therefore, the time in state is exponentially distributed with parameter which may depend on the state.

We have seen that = time in state i and that Let t be a very small increment of time h:

This last equation is the probability that a system leaves a state in the next increment of time h. This can be expressed in a Taylor series:

Number the states 0,1… Let = probability of transition to a higher numbered state (birth). Let = probability of transition to a lower numbered state (death). Only allow transitions to the next higher or lower numbered state (or stay in the same state). This is called a birth-death process.

Let P(n=k,t) = probability of being in state k at time t. Consider how a process can get into state n=k at time t+h.

For births: must have been in state n=k-1 and a birth occurred

For deaths: must have been in state n=k+1 and a death occurred

If no births and no deaths: must have been in state n=k at time t

Balance Equations

[edit | edit source]Equilibrium Balance Equations

[edit | edit source]Flow Balance

[edit | edit source]The equilibrium balance equations can be intuitively constructed by inspection from the state transition rate diagram below.

This is similar to the state transition diagram we have already seen. In equilibrium, the rate of flow of probability into a state must equal the rate of flow of probability out of that state. Hence

Solving the Balance Equations

[edit | edit source]The quantity is called the “traffic intensity” and is usually denoted by

Recall that the queue will be unstable if jobs arrive faster than they can be served. Thus

As we will see determines most of the properties of interest in the queue. Note that it is determined by the ratio of the arrival and service rates and not their absolute values. Queues with equal traffic intensities can be expected to have similar properties even though their respective arrival or service rates may be quite different.

The constant P(0) is determined using the normalizing requirement, as shown below:

Note the similarity of the last equation to a geometric distribution:

This is the probability that there are k jobs in the queue and is shown below:

The probability that there is at least 1 job in the queue is This is the utilization of the server, the probability that it is busy.

Number in the Queue

[edit | edit source]The average number of jobs in the queue is

The sum is evaluated by using the following trick: note that

Now since the sum of a derivative is the derivative of the sum,

This is shown below as increases from 0 to 1:

The probability that there are more than some number r of jobs in the queue is

This is shown below for several values of

Note that P(n≥r) decreases geometrically in . Recall also that is the utilization of the server. Thus for lightly utilized servers (small ) the likelihood that there are many jobs in the queue is small. For heavily utilized servers ( near 1) the likelihood that there are a lot of jobs waiting to be served is much higher.

Let T = the average waiting time, Tn = the waiting time for arrival n with n-1 customers in the system, xi = the service requirement of the ith customer (i = 1,2,…,n), xo = the remaining service for the customer in service, and xn = the service requirement of n+1 customers.