Computer Systems Engineering/Probability

PROBABILITY REVIEW

[edit | edit source]1.0 BASICS

[edit | edit source]An experiment, real or imagined, can be characterized by three properties. These are:

- 1. A set of outcomes

- 2. A grouping of these outcomes into classes

- 3. The relative frequency with which these classes occur.

For example, coin tossing is an experiment, the outcome of which is either heads or tails. One grouping of outcomes would be as heads (H) or tails (T). The relative frequency of heads would then be the number of heads in a large number of tosses:

(1)

If pairs of tosses are taken as the outcome of the experiment the set of outcomes would be HH, HT, TH and TT. These could be grouped into the three classes: 2 heads, 2 tails and 1 head and 1 tail. Assuming that the coin is fair the relative frequency of the class 1 head and 1 tail would be twice the other two.

In probability theory the “sample space”, denoted by S, corresponds to the set of outcomes, with each possible outcome being an element of S. An “event” corresponds to a class of outcomes and the “probability” is a measure of the relative frequency of the event. This can be interpreted as the fractional number of times the event occurs if the experiment is repeated many times:

(2)

Formally, the probability measure satisfies the properties:

*1. For any event A, 0≤P(A)≤1 *2. P(S) = 1 *3. If A and B are mutually exclusive events then P(A+B)=P(A)+P(B). (3)

A + B is the set of elements in the sample space that are included in either the event A or B or both. An event A can be described as

A = {ω: ω satisfies membership property for A} (4)

where ω is a member of the sample space, ωєS. This is read: “A is the set ω such that ω satisfies membership property for A.” Similarly, introducing the set theoretic notation for the complement, union and intersection of events, let

AC = {ω: ω not in A}

= complement of A

A + B = {ω: ω in A or B or both}

= union of A and B* (5)

A,B = {ω: ω in both A and B}

= intersection of A and B*

Ø = SC = null event = no sample points since all sample points are in S

- In set theoretic notation both of the symbols “+” and “U” are used to denote the union of 2 sets. Similarly, the intersection is denoted by “,”, “∩”, “∙” or, sometimes by writing the symbols adjacent to each other. The symbols “+” and “∙” are suggestive of the arithmetic opera¬tions of addition and multiplication with which the set operations of union and intersection are analogous. However, they should not be confused. One applies to sets, the other to numbers.

These are illustrated using the Venn diagrams below. The points in the square represent the sample space, S and those in an enclosed loop an event.

In I note that

S = A + AC (6)

and that points in AC and A are mutually exclusive. Hence using (3)

P(S)= P(AC + A) (7)

= P(AC)+P(A)

But P(S) = 1, hence

P(AC) = 1 - P(A) (8)

In IV points in A and B are not mutually exclusive. In this case

(9)

The last term in (9) is necessary since both of the first 2 terms include the points in A,B but we only want to count these points once in A+B,

Conditional Probabilities

If the event B happens the “conditional probability” that A also happens, P(A|B), (read: “probabiljty of A given B”) is defined as

(10)

This gives the fraction of the points in B that are also in A. In effect, with knowledge of B we can create a new sample space containing only the points in B and then look for the relative frequency of the points in A that are in B,

A and B are said to be independent if

P(A,B) = P(A) P(B) (11)

Note that if A and B are independent then

(12)

implying that knowledge of B tells nothing about how often A occurs. As we noted in (5) and (6), A and AC cover S and are mutually exclusive:

A + AC = S (13) A, AC = Ø

Thus

B = B,A + B, AC (14)

which simply states that every point in B is also in either A or AC. Then by (3)

P(B) = P(B,A + B, AC) (15) = P(B,A) + P(B, AC)

By (10)

P(B,A) = P(B|A) P(A) (16)

and

P(B,AC) = P(B|AC) P(AC) (17)

Hence

P(B) = P(B|A) P(A) + P(B|AC) P(AC) (18)

If the events Ai, i = 1,2…n are mutually exclusive and exhaustive, i.e.

A1 + A2 +… An = S (19) Ai,Aj = Ø for all i ≠ j

then (15) and (18) can be written more generally as

(20)

and

(21)

This is the “law of total probability.” Another useful relation which follows from (10) is “Baye’s Law”:

(22)

However, by (18)

P(B) = P(B|A)P(A) + P(B|AC)P(AC) (23)

and thus

(24)

Thus if we know the probability of event A, P(A), and the effect of A on the probability of B, P(B|A), the effect of B on the probability of A, P(A|B), can be calculated.

An Example

Baye’s Law can have some nonintuitive consequences in component testing.

Suppose that we have a way of testing memory chips. If the chip is bad (B) the test indicates bad (T) 95% of the time:

P(T|B) = .95 (25)

If the chips is good (BC) the test indicates good (TC) 95% of the time

P(TC|BC) = .95 (26)

Also assume that 1% of the chips are bad:

P(B) = .01 (27)

What percent of the chips which test bad really are bad? i.e., What is P(B|T)? Applying (24) we have

(28)

By (8)

P(BC) = 1 – P(B) = .99 P(T| BC) = 1 – P(TC|BC) = .05 (29)

Thus

(30)

Also the probability that a chip which tests good, is good is

(31)

and by (8)

P(TC|B) = 1 - P(T|B) = .05 (32)

Thus

(33)

Thus almost all of the chips that test good, are good, but only 16% of the chips that test bad are really defective. The test is not as discriminating as we might wish.

Combinations and Permutations

N distinguishable objects can be ordered (permuted) in N! different ways. This is easily shown by observing that any of the N objects can be put in the first position; any the remaining n-1 objects in the second position; and so on until only 1 object can be put in the last position.

More generally, a set of r objects can be selected from the set of N objects in N(N—1) (N—2) ... (N—r+1) = N! / (N—r)! different ways. This is sampling without replacement. If r =N, there are N! sets and each set is simply an ordering of the N objects.

Once selected, the r objects in the set can be ordered in r! different ways without changing the composition of the set. Each ordering corresponds to another of the N! / (N—r)! samplings. Thus, if only the objects selected and not their order is important, there are

different ways to select r objects from the set of N objects. This is called the combination of N objects taken r at a time and given the special notation

Note that for r = N,

and for r = 1,

The combination can also be thought of as the number of ways objects can be partitioned into groups of r objects and (N—r) objects respectively. This naturally leads to the extension that N objects can be Partitioned into k groups with ni objects in group i, i = 1,2,…k and N = Σni in

different ways.

2.0 RANDOM VARIABLES

[edit | edit source]A random variable is a variable whose value depends upon the outcome of the experiment. Thus a random variable is, in effect, a function assigning a numeric value to each event in the sample space, S. In practice the values assigned by the random variable to the events are chosen to have some physical meaning. For example, if we bet on the coin tossing experiment and win $l.00 for a head and lose $.50 for a tail the random variable X could be assigned the values

(34)

where ω is the outcome of the experiment.

Usually we are interested in the probability with which a random variable takes on a particular value:

P(X = x) (35)

This is the sum of the relative frequency with which the members, ω, of the sample space for which X(ω) = x occurs. That is

P(X = x) = relative frequency for which X(ω) = x. (36)

If the coin is fair (i.e. heads and tails are equally likely) in (34) then

P(X = 1) = 1/2 P(X = -.5) = 1/2 (37)

Another useful representation of a probability is the 'probability distribution function,'

(38)

This is also called the 'cumulative distribution function.' It is shown below for (34)

Note that since each point in S is mapped by the random variable onto the real line

P ( X ≤ -∞ ) = 0 P ( X ≤ ∞ ) = 1 P ( X ≤ x ) ≥ 0, for all x on the real line (39)

Also for two points a and b on the real line

P ( X ≤ b) – P (X ≤ a ) = P ( a < X ≤ b ), for a < b (40)

and

P ( X ≤ b) ≥ P (X ≤ a ), for a ≤ b (41)

The random variable used to describe the coin tossing experiment can take on only a finite number of values ( 2 in this case: +1 and -0.5 ). This is called a discrete random variable. The random variable used to describe other experiments (e.g. the time between when two things happen) may, more appropriately, have a continuous range of values. This ‘continuous random variable’ has a continuous distribution function which we denote by

F ( x ) = P ( X ≤ x ) (42)

As in the discrete case, F(x) is a non-decreasing function, and

0 ≤ F(x) ≤ 1 , for all x. (43)

A commonly encountered example of such a random variable is the exponentially distributed random variable

(44)

where λ < 0. This is shown below

For purposes of calculation, another function, the ‘probability density function,’ is often more convenient than the distribution function. Assuming that the derivative exists, this is defined as the derivative of the distribution function.

(45)

Since F(x) is non-decreasing, f(x) ≥ 0. Also by (45)

(46)

and

1 = F(∞) = (47)

and

P ( a ≤ x ≤ b ) = F ( b ) – F ( a ) = (48)

Thus f ( x ) is a function which, when integrated over an interval, gives the probability that the random variable X lies in that interval.

Note that by (47) the area under the curve defined by f ( x ) is 1. Thus letting Δx be a small interval

f ( x ) Δx

is a rectangle of height f ( x ) and width Δx. Then f ( x ) Δx is approximately the amount of area under the curve of f ( x ) in the range of x to x + Δx

In the limit as Δx goes to zero ( denoted by dx: lim Δx 0 Δx = dx ) this approximation becomes exact. Thus it is often useful to think of f ( x ) dx as being the probability that X ‘equals’ x:

f(x)dx = P(X ‘=’ x) (49)

Strictly speaking it is the probability that X lies in a very small interval about the point x.

In modeling computer systems we are often interested in things such as the number of jobs in the system, waiting times and service requirements which can have only non-negative values. The random variables used to describe these quantities can have only non-negative values:

F(x) = P(X ≤ x) = 0, for x < 0 (50)

and this often makes the mathematics for dealing with them slightly simpler. In what follows we will assume that the random variables are non-negative but this can be easily extended to the more general case.

The exponentially distributed random variable (44) is a non-negative random variable. Its derivative is defined everywhere for x ≥ 0 as

= λe-λx (51)

Expected Value

Although the probability distribution function describes the composite behavior of a large number of experiments it is generally too complex to be useful for comparing the results of alternative experiments. What is needed is a single number, a ‘figure of merit,’ which represents the outcome of the experiment. This is provided by the ‘expected value,’ ‘average value,’ or ‘mean’ (the three terms are synonymous) of the random variable. If X is a random variable then the notations E(X) and

are both used to represent its expected value. It is defined as the sum of the products of the value of the random variable and how often the experiment gives this result. For the discrete case this is

(52)

and in the continuous case

(53)

In the important case where the random variable is integer valued, (52) becomes

(54)

Note that this can be rewritten as

(55)

The sum of the terms in each column is kP(N = k), where k = 1, 2, … is the column. But the first row of (55) is simply the probability that N > 0, the second row that N > 1, and so on. Thus we can also write

(56)

Putting this in terms of the distribution function (38) we have

P ( N > k ) = 1 – P ( N ≤ k ) (57)

giving

(58)

Since the distribution function P ( N ≤ k ) can often be measured or estimated more easily than the density P ( N = k ), (58) gives a convenient way of calculating the average value of a random variable.

A similar result holds in the continuous case

(59)

This is easily shown by integrating (59) by parts:

(60)

Recalling that F ( ∞ ) = 1 and that

(61)

this reduces to

(62)

Since every random variable is associated with a number they can be operated on numerically (added, multiplied, etc.) and have arbitrary functions defined on them just like ordinary numbers. Let g(X) be a function defined on the random variable X. The definition of expected value (52, 53) can be extended to give the expected value of g(X): E(g(X))

E(g(X)) = (63)

if X is discrete or

E(g(X)) = (64)

in the continuous case.

The expected value of the sum of two random variables X and Y is

E ( X + Y ) = (65)

Using (16) we note that

P ( X=x, Y=y ) = P ( X=x / Y=y ) P ( Y=y ) (66)

and also

P (X=x, Y=y ) = P ( Y=y, X=x ) = P (Y=y / X=x ) P ( X=x ) (67)

Hence

E ( X + Y ) = (68)

Now

(69a)

and

(69b)

hence

E ( X+Y ) = E ( X ) + E ( Y ) (70)

Also, if X and Y are independent

E( X,Y ) = (71)

since X and Y are independent. Thus

E ( X,Y ) = = E ( X ) E ( Y ) (72)

The results, ( 70 and 72 ), also apply when X and Y are continuous random variables.

Recall from (21) that

P (X=xi) = (73)

and by definition, (52)

E(X) = (74)

Hence

E (X) = (75)

By analogy with (52) the second sum in (75) is defined as the conditional expected value of X given Y=yj. This is usually written as E(X/Y):

E(X/Y) = E(X/ Y=yj) = (76)

Thus (75) is

E(X) = (77)

but this is just the expected value of E(X/Y).

Hence

E(X) = E( E(X/Y) ) (78)

Again, this is also true if X is a continuous random variable.

Variance

As we noted earlier, the area under the density function is 1. If this “area” were cut out and placed on a pivot the expected value is the point at which it would balance.

Because of this relation with mechanics the expected value is often called the first moment of the random variable.

A second figure of merit which is also useful in describing a random variable is its “second moment” defined as

E (X2) = (79)

for the discrete case and

E (X2) = (80)

for the continuous case. Note that it is the expected value of the square of the random variable.

Instead of using the second moment directly, we usually are more interested in finding the “second moment about the mean” or “second central moment.” For the discrete case this is

(81)

Recalling that the first sum is the second moment, (79), the second is the mean, (52), and the third by definition, (3), must be 1 this becomes

(82)

Similarly, for the continuous case

(83)

The second central moment provides a measure of how closely the values of the random variable are grouped about the mean. For example, if the random variable can have only one value, α then

P(X=x) = 1 for x= α (84) 0 for x≠ α

and

(85)

and

(86)

From which we see that, by (82),

(87)

For the exponentially distributed random variable mentioned earlier, (44, 51)

(88)

and

(89)

Hence

(90)

The second central moment in effect tells how much variation there is in the random variable. Appropriately enough, it is usually called the “variance” of the random variable, Var(X), and given by the symbol σ2:

(91)

Note that since σ2 is the expected value of the square of a random variable it is always positive.

Also, the standard deviation of the random variable, represented by the symbol σ, is defined as the square root of the variance:

(92)

Note that this has the same units of measure as both the random variable and the mean.

Although the variance and standard deviation provide a measure of the amount of variation to expect in the values of a random variable, a given amount of variation is usually less important if the random variable has a large mean than if it is small. The “coefficient of variation,” C, gives a way of expressing the amount of variation in relation to the mean value of the random variable. It is defined as

(93)

Note that for the deterministic random variable (84, 87) σ = σ2 = 0 and thus

C = 0 (94)

For the exponential random variable (44, 90)

(95)

and hence using (88) and (89) in (93)

(96)

The Third Central Moment

The third central moment, M3, is defined as

and

for the discrete and continuous cases respectively. This gives a measure of the symmetry of the distribution.

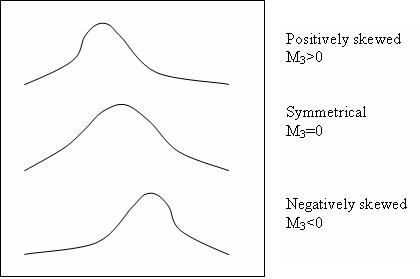

For M3 = 0 the distribution is symmetrical. For M3 > 0 it is positively skewed, and for M3 < 0 it is negatively skewed. This is illustrated below.

The Fourth Central Moment

The fourth central moment, M4, defined as

and

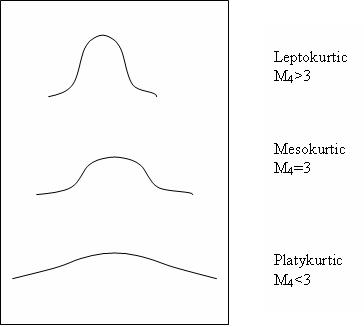

for the discrete and continuous case respectively, measures the flatness or peakedness of the distribution. It is a somewhat more sensitive measure of the spread of the distribution than the Variance.

The figure below shows distributions having the same Variance, σ2 = 1, and M4 > 3, M4 = 3, and M4 < 3. For M4 > 3 the distribution is “tall and thin;” for M4 < 3 it is “flat” and for M4 = 3 it is “medium.”

REFERENCE

- 1. Kleinrock, Leonard, Queueing Systems Volume 1: Theory. John Wiley, NY, 1975.