Computer Systems Engineering/Queueing system models

Queues

[edit | edit source]A queue is formally defined as consisting of a server and a waiting line for customers needing service at the server. Each customer joins the queue at the end of the waiting line. As the customers at the front of the line are served those behind gradually move to the head of the line. A customer leaves as soon as it has received its service requirement from the server and the server begins to serve the next customer in line. This is illustrated below.

A number of natural systems can be described in this way. For example, shoppers in a grocery store line up to wait for the cashier to total their groceries. Here the shoppers are the customers, the cashier is the server and the time it takes to total each shopper’s groceries is that shopper’s service requirement. Similarly, in a bank customers wait in line to reach a teller where they complete their transaction. In this case a single wait¬ing line may be used by all the tellers. In a computer system a customer is a job submitted for processing, a server is a device such as the CPU or a disk controller and the service requirement is the time the job will spend at that device. For example, if the server is the CPU the service requirement is the amount of processing the job will get; if the server is a disk con¬troller the service requirement is the time to do a disk read or write opera¬tion.

The time between when jobs join the waiting line and enter the queue and the amount of service each job requires are both given by random variables. The arrival rate is the average number of jobs that arrive per second. This is denoted by and hence is the average time between arrivals.

and are both used to represent the average service requirement. In a large interval T the expected number of arrivals is

expected number arrivals in T =

and these jobs have an average service requirement of . Thus the expected amount of service given by the server is

The fraction of time that the server is busy is its utilization. This is

The quantity is called the “traffic intensity” for the queue and denoted by the special symbol :

Note that if is greater than 1, more jobs arrive than can be served during T. In this case the number of jobs in the waiting line will grow in¬definitely and the queue is unstable. For only the queue having a deterministic arrival process and deterministic service requirement is stable. Due to fluctuations in the job arrivals and/or service requirements, non-deterministic queues must have for stability. For , is the probability (i.e. fraction of time) that the server is busy. Hence is the probability that it is idle.

In studying queues, the most important questions to ask are:

• How long must a job wait before receiving service? • How long will it stay in the queue before leaving (waiting time plus service time)? • How many jobs are in the queue? • What is the utilization of the server? (i.e. What fraction of the time is the server busy?)

Finite Population Model

[edit | edit source]In a finite population model there is a fixed number N of jobs. Jobs arrive at the queue from some source and after completing their service requirement they return to this source. This is shown below

If there are k jobs in the queue there are N-k jobs in the source. We will assume that jobs wait in the source an exponentially distributed amount of time with average before returning to the queue and that they are independent of each other. If there are (N-k) jobs in the source then they create a Poisson input process to the queue with rate

The state transition diagram for the finite population M/M/1 queue is shown below.

Note that when there are N jobs in the queue there are no jobs in the source and . Thus the probability of a queue state n > N is zero.

The normalizing equation becomes

Thus

or

Little’s Formula

[edit | edit source]It Describe a relationship between the Average Queuing length ,Average Arrival Rate and Average waiting time in Queue.The Amazing part of this theorem For any Queuing System is that has Steady State Condition.

Justification

[edit | edit source]A customer spends time w in system, and during that time customers arrive in a steady state. This must be the average number of customers in the system (otherwise the number either grows or shrinks).

Formally,

Little’s Formula (formal proof)

[edit | edit source]In a system with different types of customers, Little’s Formula applies to each type separately. For example, in a priority system,

Server utilization

[edit | edit source]Consider the system consisting of only the server:

Comten Network Processor packet: 2048B = 16,384 bits line: 56Kbps

292.5ms = .2924 sec ≈ .3sec

Say we want to send 7 packets per second aggregate:

However, recall that there is a delay at 100% utilization,

so we may actually want to use 4 lines to further reduce the utilization rate.

Response Time

[edit | edit source]The average time that a job spends in the queue is found by Little’s formula:

This is called the “response time” for the queue and is denoted by T. Thus using we have

or

This is shown below as increases from 0 to 1.

Note that the response time (and number in the system) remains fairly flat for less than 50% server utilization (i.e., ) and increases sharply as the server nears 100% utilization. This phenomenon is characteristic of nearly all queuing systems. When we attempt to run the server near its capacity (i.e. ) the response time and number in the queue get very large. For the system becomes unstable and the number in the queue and the response time grow indefinitely.

Stop and Wait Protocol

[edit | edit source]The stop-and-wait protocol ties up the transmission line, because the time on the line is used to send a packet, and then delays until an acknowledgement is received.

Model Communications Line

[edit | edit source]line = server waiting line = messages to be sent at station service time = transmission time

Or, with stop-and-wait, service time = time for cycle.

In order to increase the line speed, the service time must be reduced.

m-Server Loss

[edit | edit source]m-servers reject arrival if more than m are busy.

Notice that there is no closed-form expression in this m-server loss system. Values can be calculated from the tables. This system is often used to size telephone channels, based on calling rate and call duration

Generalized Birth-Death Processes

[edit | edit source]Our discussion of the M/M/1 queue can be generalized by allowing the arrival and service rates to be dependent on the state of the queue. That is, the arrival process is Poisson with parameter and the ser¬vice requirement is exponentially distributed with parameter . The subscript n refers to the number of jobs in the queue. If the queue state is n=k the probability of an arrival is

and the probability of a departure (assuming the queue is not empty) is

The probability of an arrival or departure depends on the queue state only through the parameters and .

Because of the memoryless property of the Poisson process the probability that the queue is in state n=k at time t and that there is an arrival or a departure during an interval h given that the queue is in state k at the start of the interval are independent. Thus the state transition diagram below describes a Markov chain.

The equilibrium balance equations, found by inspection, requiring that the “rate of flow of probability” out of a state equal the flow in are

and again the normalizing equation is

These equations could have been found more formally by considering the probabilities of an arrival, a departure and no change in an interval h as was done for the simple M/M/1 queue.

and for k=l,

Substituting for P(1) gives

and in general,

The constant P(0) is found using the normalizing equation. Sub¬stituting for P(k) gives

or

Combining gives the general solution

Kleinrock discusses several variations of birth-death queues in equilibrium. Two cases are especially useful in modeling computer systems. These are the “Infinite Server Queue” and the “Finite Population Model.”

Infinite Server Queue

[edit | edit source]In the infinite server queue there is always a free server when a job arrives at the queue.

If there are 2 jobs In the queue the service rate is (we can think of this as the Superposition of 2 Poisson service processes each having rate ), and if the queue is in state n=k the service rate is . Note that this implies that the average time between service completions is

when the queue state is n=k. It is apparent that this queuing system can also be interpreted as having a single server which lin¬early increases its service rate when more jobs are waiting for service.

For this queue,

and the state transition diagram is

The solution reduces to

and

Now

Thus

and combining gives

The average number in the queue is

or letting r = k-1

The average response time, T, is found by applying Little’s formula, , to give:

or

This is expected since no job must wait before starting to receive service.

The infinite server queue is often used in modeling a computer system to represent a bank of user terminals or to represent a random delay which involves no queuing or conflicts between jobs.

Queuing Networks

[edit | edit source]Introduction

[edit | edit source]A queuing network is constructed by connecting the output of one queue to the input of another. This is illustrated below.

Note that a single queue can be fed from the output of several others and that the output of a queue can be split to form input streams to several other queues. Each queue forms a “node” of the network and these can be numbered 1,2…M, where M is the number of nodes in the network. The “routing probability” is the probability that a departure from node i of the network goes immediately to node j.

Networks of queues can be used to model systems of several interacting devices, each of which can be modeled as a queue. For example, a simple com¬puter system consisting of a CPU and disk can be represented by a 2-queue network.

Types of Networks

[edit | edit source]A queuing network can be either “open” or “closed.” In an open network, jobs arrive at some of the nodes from an external source and can leave the network (to some absorbing destination or “sink”) from some nodes. The number of jobs in the network can vary and the source has an infinite supply. In a closed network a fixed number of jobs circulate among the nodes of the network; no new jobs enter the network and none leave.

Closed networks have been particularly successful in modeling computer systems. Two of the most widely used are the Central Server Model and the Machine Repairman Model.

Central Server Model

[edit | edit source]A central server queuing network is shown below.

Here node 1 represents the CPU and nodes 2 through M represent the system Input/Output (I/O) devices—usually disk controllers. Jobs are visualized as receiving service at the CPU and then being routed with probability to I/O device i. After receiving service at the I/O device the job returns to the CPU. In this model the processing of a job by the computer system is represented by several visits of the job to the CPU and I/O devices. The path from the CPU back to itself (with probability ) represents the completion of one job in the system and the simultaneous start of another.

The central server network is especially useful for modeling the inner resources (CPU and disks) of multiprogrammed computer systems. In these systems the level of multiprogramming (number of jobs in memory simultaneously) is often limited to a small number of jobs in the interest of system efficiency. Under moderate to heavy loads the system multiprogramming level is usually at or near this limit. Thus the assumption that the number of jobs in the network is constant is a good approximation to the real system.

Machine Repairman Model

[edit | edit source]The machine repairman model, originally developed in operations research, is often used to represent interactive computer systems. This network, as shown below

has one multi-server node which represents the user terminals. The other (system) nodes represent the other system resources. These may be arbitrarily connected although a variant of the central server network is often used. Jobs begin at the terminal node and then enter the system where they circulate among the system nodes before returning to the terminal node. The time from when the job leaves the terminal node until it returns is the system response tine, and the time it spends at the terminal node is called the user think time. The number of jobs in the network is the same as the number of servers at the terminal node. Hence there is no waiting for a job to begin to receive service at this queue and its service time is the same as the think time.

Open Networks

[edit | edit source]Open networks are most often used to model computer systems having a variable number of jobs or a constant job arrival rate. Batch processing systems and communications networks are often of this type.

Terminal Driven Systems

[edit | edit source]One particularly useful model for many interactive computer systems is the terminal driven system shown below.

In this model the number of jobs is equal to the number of terminals (i.e., there is one job per terminal). A job is visualized as leaving the “Terminals” when the user hits a “carriage return”, circulating among the various devices in the system as it is processed, and then returning to the terminal node. The time from the job’s departure from the terminal node until its return is the system response time. The time that the job waits at the terminals (i.e., the time from a response until the next input to the system) is the user “think time”.

Let: denote the rate with which jobs enter the system (system throughput); R denote the system response time; Z denote the user think time; N denote the number of terminals (or jobs) in the System.

We now apply Little’s Formula to the SYSTEM (all red capitals) consisting of both the terminals and the System (capital S) shown below.

For SYSTEM each job spends an average amount of time Z at the terminals and an average amount of time R in System giving an average amount of time Z + R in SYSTEM. It then leaves and immediately returns. The average job throughput rate is and the number of jobs in the system is fixed at N.

Hence, by Little’s Formula:

or

We now seek to find bounds on the response time R, and the throughput .

Let the various devices in the System be numbered 1,2,...M, and let be the average total amount of service a job receives from device i. Note that while a job is in the System it might actually visit device i more than once, in which case is the sum of the amounts of service it receives on each visit. Let be the average amount of service a job receives on each visit to device i. If is independent of then

is the average number of visits a job makes to device i.

Bounds on the System Response Time

[edit | edit source]If there is only one terminal and hence only one job in the system, R is simply the sum of the amount of service the job requires from each device. This is the minimum possible response time. Hence:

Since the utilization of each device cannot exceed 100% the throughput at device i, , is at most . Thus,

and since a job visits device i an average of times on each pass through the System,

Thus,

Substituting this equation into the expression for the response time yields the lower bound:

To find an upper bound on R we note that the most time that a job would spend at any device i would occur if at each visit it had to wait for the other N-l jobs to get their service before it received its service. Thus,

These bounds on the response time versus the number of terminals in the system are shown below. The dotted line shows a typical system response time function.

The region between is often called the feasible response time region for the system. In practice, as the number of terminals is increased, the response time becomes asymptotic to the line

This is the leftmost of the lines , and the one for which is the largest. The device corresponding to this line is called the system bottleneck and the other lines for the other devices are the potential bottlenecks due to those devices. The bottleneck and potential bottlenecks above are easily located. Observe that each is a straight line with an intercept on the horizontal (terminal) axis of and a slope of . Since gets smaller as the lines progress from left to right they have the appearance of “falling down”.

Bounds on the System Throughput

[edit | edit source]From , for all i, we note that

where is the total service time at the device for which is largest. This is the maximum possible system throughput.

Rearranging gives the expression for :

We noted that with N jobs in the system . Thus,

Similarly, since , we have,

The following figure shows these bounds on the system throughput versus the number of terminals.

Saturation Point

[edit | edit source]The point

shown in the previous two figures is called the saturation point for the system. It is interpreted as follows.

With one terminal connected to the system a job is never delayed waiting for other jobs to receive their service at a device. Thus, it spends an amount of time in the System and the throughput is . If, as the number of terminals is increased, each job that enters the system is not delayed waiting for other jobs to be served at a device, each job will spend only an amount of time in the system. The response time will be and the throughput will increase along the line .

However, when the number of terminals, N, becomes equal to N*, the system throughput will have reached and the bottleneck device will have reached 100% utilization. At that point it is “saturated”. If an additional job then enters the system, jobs can do no better than to arrive at the bottleneck device just as another job is beginning to be served. Thus, each job will have to wait a time before beginning its own service. Consequently, for each terminal added beyond N* the minimum possible response time increases by an amount and the minimum response time will increase along the line .

Effects of System Changes

[edit | edit source]The bounds in the previous two figures provide insight into the behavior of a system when we start to modify its configuration.

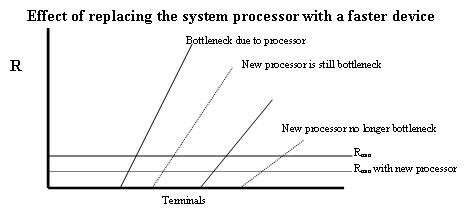

Consider, for example, a computer system in which the processor handles the terminal I/O traffic and is the system bottleneck. Let be the average processing time a job receives at the processor. If the processor is replaced with a faster device, is reduced. Thus the delay, , is reduced and the “potential bottleneck” due to the processor is moved to the right. If it moves far enough, so that another device becomes the leftmost potential bottleneck, then that device becomes the new bottleneck. If it does not move to the right of one of the other potential bottlenecks, then it remains the bottleneck but a lower response time and higher throughput can be obtained. The following figure shows the effects of these changes on the response time asymptotes.

More interesting possibilities arise if the terminal I/O processing is unloaded onto a Front-End Processor (FEP). This also reduces and moves the processor bottleneck to the right, but it adds a new device and corresponding new potential bottleneck to the system.

Let be the amount of time the processor spent doing I/O. Then is reduced by the amount . If the FEP is slower than the processor then will be greater than and will be increased by the difference - . If the FEP is faster, will be decreased by the amount .

If the system is heavily loaded so that the processor is the active bottleneck controlling the response time and limiting the throughput; as long as the system throughput will increase even if the FEP is much slower than the processor. What device becomes the new system bottleneck when the processor bottleneck moves to the right depends on the values and the other devices in the system.

Example

[edit | edit source]Saturate with:

Questions

Can we get 1 sec response time? – No. Can we get 2 sec response time with 30 terminals? – Yes. Can we get 2 sec response time with 35 terminals? – No.

The CPU is the bottleneck. Use a faster CPU to get better performance.

A faster CPU results in shorter processing time (say .75 sec).

Results in a big performance improvement.

A faster disk…

… is a little better, but not much.

A slightly faster CPU, vcxc = .6 …

… and the CPU is still the bottleneck.

However, if vcxc = .4 …

…then the disk is now the bottleneck, and further improvements in the CPU don’t help much.

Adding a second CPU…

The bounds model is independent of internal system structure. The model depends on total service required for a job at each device. Therefore, the bounds model can be represented as a serial system.

Summary

[edit | edit source]total service = vixi = Ti is easy to measure Ui is easy to measure is easy to measure vi is usually hard to measure xi is usually hard to measure