Computer network technologies and services/Print version

The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Computer_network_technologies_and_services

WAN

Strictly speaking, a Wide Area Network (WAN) is a network that extends over a broad area, spanning regions, countries or, in the case of the Internet, even the world. More generally, any computer networking technology used to transmit data over long distances can be called a WAN.

A WAN technology should meet some requirements in terms of service duration, bit rate and delay constraints according to the application (telemetry, telephony, data transfer, etc.) it is designed for.

Asynchronous Transfer Mode (ATM) represents the convergence of a wide variety of technologies that the telecom and IT worlds introduced in parallel in the past to transmit data over long distances:

- in the telecom world, the telephony turned from analog to digital, then ISDN and B-ISDN started to carry data along with the voice;

- in the IT world, Frame Relay superseded analog and digital leased lines by taking advantage of packet switching, and X.25 by moving the complexity from core to edge nodes.

Nowadays ATM is being abandoned in favour of IP thanks to the latter's lower complexity.

ISDN

Integrated Services Digital Network (ISDN) allows data to be carried along with voice: a variety of digital devices can be connected to a bus and can transmit over the available ISDN channels:

- Basic Rate Access (BRA) or Basic Rate Interface (BRI): it offers 2 data B-channels at 64 kbps and 1 signaling D-channel at 16 kbps → total: 144 kbps (good for single users or small offices);

- Primary Rate Access (PRA) or Primary Rate Interface (PRI): it offers 30 data B-channels at 64 kbps and 1 signaling D-channel at 64 kbps → total: 2 Mbps (good for companies).

The transmission is based on Time Division Multiplexing (TDM); all channels go to a Network Termination and enter the network over a digital wire called the 'local loop'. The channels inherit the circuit-oriented logic of telecom operators: they stay active even when no data is exchanged.

PDH

Plesiochronous Digital Hierarchy (PDH) is an old standard designed to transfer digital voice channels at 64 kbps (PCM) over TDM-based digital telephone networks. The system is called 'plesiochronous' ('almost synchronous') because a tight synchronization between transmitter and receiver is required, even though each device has its own clock.

Data flows are organized in a hierarchical way: channels are aggregated into flows from the lowest layer to the highest one (grooming), and the higher the hierarchical layer, the higher is the bit rate. For example, at layer T1 24 T0-layer channels are put into a single frame one next to another: as the frame has to last 125 µs for all layers, at layer T1 the bit rate will be 24 times higher than the one at layer T0.[1]
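The bit-rate arithmetic above can be checked with a minimal sketch. It assumes 8 bits per PCM sample (which follows from 64 kbps at one sample every 125 µs) and, like the text, ignores signaling bits; the function name `layer_bit_rate` is hypothetical.

```python
# Frame duration shared by every PDH layer, in seconds (125 µs).
FRAME_DURATION_S = 125e-6
# Assumption: one 8-bit PCM sample per channel in every frame.
BITS_PER_SAMPLE = 8


def layer_bit_rate(channels: int) -> int:
    """Payload bit rate in bit/s of a frame that carries `channels` voice channels."""
    frames_per_second = round(1 / FRAME_DURATION_S)  # 8000 frames per second
    return channels * BITS_PER_SAMPLE * frames_per_second


t0_rate = layer_bit_rate(1)    # a single 64 kbps channel
t1_rate = layer_bit_rate(24)   # 24 channels in the same 125 µs frame
print(t0_rate, t1_rate, t1_rate // t0_rate)
```

Because the frame duration is fixed, the rate scales linearly with the number of channels packed into the frame.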

SDH

Synchronous Digital Hierarchy (SDH), the international counterpart of the North American standard SONET, differs from PDH in its higher speeds:

- a single clock exists for the whole system → a synchronization network is required for a tighter synchronization;

- copper cables need to be replaced with optical fibers;

- the flow multiplexing is more complex than PDH, because it is designed to optimize the hardware processing.

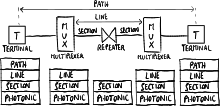

The protocol architecture is organized as a layer stack, and each node in the physical network architecture implements the layers according to its functionality:

- path layer: end-to-end interconnection between two terminals;

- line layer: a path is split into lines by multiplexers;

- section layer: a line is split into sections by repeaters (for long distances);

- photonic layer: the lowest layer for optical fibers.

Each time frame lasts 125 µs and its header includes synchronization information used to combine and separate channels, and OAM (Operations, Administration and Maintenance) information used to detect failures and recover from them.

SDH and PDH represent the transport layer which ATM and Frame Relay operate on.

Frame Relay

Frame Relay is a layer 2 connection-oriented standard to set up permanent virtual circuits over packet-switching networks. Each permanent circuit is identified by a Data Link Connection Identifier (DLCI).

The standard is very flexible: in fact it does not specify the technology at upper layer (ATM, X.25...) used internally in the network.

CIR

The maximum supported bit rate is not enough to describe the performance of a Frame Relay network, because a user may send bits consecutively at the maximum bit rate (wire speed) for a long period of time, causing congestion in the network. Therefore the network provider also specifies the Committed Information Rate (CIR), which bounds the number of bits the user can transmit within a certain interval of time $T_C$ so that the service is guaranteed:

$$\mathrm{CIR} = \frac{B_C}{T_C}$$

where $B_C$ is called committed burst size:

- low burstiness: the user rarely sends packets → the service is always guaranteed;

- high burstiness: the user keeps sending packets consecutively at wire speed → when the user exceeds the committed burst size the service will not be guaranteed anymore.

The user's Data Terminal Equipment (DTE) can stop the transmission when the maximum burstiness is reached.
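As a rough illustration, the check a DTE could perform can be sketched as follows. It assumes the usual relation between the CIR, the committed burst size and the measurement interval (committed burst size = CIR × interval); the function names and the sample figures are hypothetical.

```python
def committed_burst_size(cir_bps: int, interval_s: int) -> int:
    """Bits that may be sent in one measurement interval with the service guaranteed."""
    return cir_bps * interval_s


def is_guaranteed(bits_sent: int, cir_bps: int, interval_s: int) -> bool:
    """True if the traffic sent in the interval stays within the committed burst size."""
    return bits_sent <= committed_burst_size(cir_bps, interval_s)


# Hypothetical contract: CIR of 64 kbps measured over 1-second intervals.
print(is_guaranteed(50_000, cir_bps=64_000, interval_s=1))   # low burstiness
print(is_guaranteed(128_000, cir_bps=64_000, interval_s=1))  # sustained wire-speed burst
```

A DTE that tracks `bits_sent` per interval can pause transmission as soon as the second condition would become false.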

ATM

Asynchronous Transfer Mode (ATM) is a connection-oriented standard to set up virtual circuits over B-ISDN networks. Each circuit is identified by a Virtual Path Identifier (VPI) and a Virtual Channel Identifier (VCI), and it can be permanent or dynamically set up through signaling messages.

ATM cells are very small: each ATM cell is 53 bytes long, made up of a 5-byte header, containing the connection identifiers, and a 48-byte payload → low latency and low packetization delays.

ATM networks have a very complex model, derived from a telecom-operator mentality to have the full control of the network and guarantee a high fault tolerance.

AAL 5

When ATM was designed, it was expected to be deployed ubiquitously in the network, even at its edges in the network cards of user PCs. Nowadays PCs at the edges implement only the IP protocol because its implementation is cheaper, and ATM can be found only as a transport layer in the core of the network, hidden from the user.

ATM Adaptation Layer (AAL) of type 5 is used for Segmentation and Reassembly (SAR):

- Segmentation: IP packets are split into ATM cells;

- Reassembly: ATM cells are combined into IP packets.
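To give a feeling for the cost of segmentation, the sketch below counts how many 53-byte cells are needed for one IP packet. It assumes that AAL 5 appends an 8-byte trailer and pads the result to a multiple of the 48-byte cell payload, and it ignores any additional encapsulation; the function names are hypothetical.

```python
ATM_CELL_SIZE = 53                                   # bytes per cell
ATM_HEADER_SIZE = 5                                  # bytes of header per cell
ATM_PAYLOAD_SIZE = ATM_CELL_SIZE - ATM_HEADER_SIZE   # 48 bytes of payload
AAL5_TRAILER_SIZE = 8                                # assumption: AAL 5 trailer length


def aal5_cell_count(packet_len: int) -> int:
    """Number of ATM cells needed to carry an IP packet of `packet_len` bytes."""
    if packet_len < 0:
        raise ValueError("packet length must be non-negative")
    total = packet_len + AAL5_TRAILER_SIZE
    return -(-total // ATM_PAYLOAD_SIZE)  # ceiling division: the last cell is padded


def bytes_on_wire(packet_len: int) -> int:
    """Total bytes transmitted, cell headers and padding included."""
    return aal5_cell_count(packet_len) * ATM_CELL_SIZE


print(aal5_cell_count(1500), bytes_on_wire(1500))
```

Under these assumptions a 1500-byte packet occupies 32 cells, so a noticeable share of the link capacity goes to cell headers and padding.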

AAL makes interaction between IP and ATM complex, because IP addresses should be translated to ATM connection identifiers and vice versa → nowadays the tendency is abandoning the ATM control plane and adopting the MPLS control plane.

Optical networks

In optical networks data are transmitted over electromagnetic waves multiplexed by using WDM, transported via optical fibers and switched by mirror-based optical switching systems.

Wavelength Division Multiplexing (WDM) allows multiple optical signals to be put into a single optical fiber → the transmission capacity of fibers is increased:

- Coarse WDM (CWDM): it transmits a lower number of signals with wavelengths well separated from one another → cheaper because demultiplexing is easier;

- Dense WDM (DWDM): it transmits a higher number of signals with closely spaced wavelengths → more expensive because demultiplexing is more complex.

Optical switching is based on mirrors controlled by micro-electro-mechanical systems (MEMS), reflecting electromagnetic signals from an input fiber to an output fiber. Optical switching is very flexible: it exploits physical properties of electromagnetic waves without caring about bits → networks can be upgraded to higher speeds because optical switches keep working independently of the bit rate.

Several types of optical switches exist:

- add/drop multiplexer: it is the simplest optical switch: it can be interposed between two fibers to optically insert (add) signals coming from transmitters into the network, and extract (drop) signals from the network towards the receivers;

- cross-connect: it can connect multiple input fibers to multiple output fibers:

  - fiber cross-connect: all the electromagnetic waves coming from an input fiber are switched to an output fiber;

  - waveband cross-connect: a set of electromagnetic waves with close wavelengths coming from an input fiber is switched to an output fiber;

  - wavelength cross-connect: a set of electromagnetic waves with the same wavelength coming from an input fiber is switched to an output fiber;

- wavelength switch: configuration is dynamic, that is switches can change circuits faster than cross-connects → fault recovering is fast.

Two signals with the same wavelength may be coming from two different input fibers but they may need to be switched to the same output fiber → through wavelength conversion an optical switch can change the wavelength of a signal to one not yet used in the output fiber, in order to keep all signals separated.

Optical switches can be used in the network backbone to interconnect the major access points, by setting up optical paths via optical fibers among the cities in the world. Optical switches can set up optical paths by using signaling and routing protocols such as LDP and RSVP. Optical switches are fault tolerant: when a link breaks, they can reflect the waves along another optical path.

WDM can be deployed as the transport layer on which any layer 2 protocol (SONET, Ethernet...) can operate delimiting the frames.

However the technology for pure optical switching is still in an embryonic stage: nowadays WDM switches are more expensive than packet-switching ones, and they can have few interfaces because the mirror system would be very complex for a lot of interfaces. Moreover optical switching is connection-oriented: when a circuit is set up, the resources keep being allocated even if the circuit is not currently used → optical switching is good for the network backbone where the traffic is quite continuous.

Cheaper solutions try to overcome technological limits by replacing mirrors with an electrical switching matrix: each optical signal is converted to a sequence of bits through an optical-to-electrical (OE) conversion so that it can be switched more easily, then it is converted again into an optical signal. The reconverted signal is regenerated, so it can travel for a longer distance before losing power, but this solution has a lot of disadvantages: the switches consume a lot of power with respect to all-optical switches, and changing the bit rate requires upgrading the switches.

References

- ↑ Signaling bits are not considered.

MPLS

Multiprotocol Label Switching (MPLS) is the enabling technology for the new broadband (IP) public network. It can be considered as a protocol architecture (or a suite of protocols) to control different sub-protocols.

MPLS operates at a layer that is generally considered to lie between traditional definitions of layer 2 (data-link layer) and layer 3 (network layer).

Benefits

The IP protocol was developed for research purposes and was not designed to be sold as a service. It is a so-called 'best-effort protocol', which means that it makes no explicit attempt to provide a guaranteed, reliable service (speed, delays...).

When IP was starting to become a commercial good, the International Telecommunication Union (ITU) started developing protocols (such as ATM, Frame Relay, etc.) targeting service reliability and stability, expecting them to pervade the computer telecommunication world. Nevertheless end users have kept using IP, and as a result service providers nowadays have to deal with a lot of protocols in order to carry IP to end users: this 'big onion' makes very little sense for service providers because of the high maintenance, equipment and software development costs needed to guarantee interoperability.

Cisco Systems was the first vendor to implement tag switching in its routers; the IETF then adopted the protocol and named it MPLS.

MPLS combines the best features from the connection-less protocols with the best ones from the connection-oriented protocols, representing the solution for the 'big onion' problem for two reasons:

- MPLS provides an IP-based network with a greater service reliability and a single unified control plane more isolated from the data plane:

  - in IP, control and data planes are continuously updated on every change in the network;

  - in MPLS, updating occurs just when a new LSP is set up; since the data plane and the control plane are separated, it is possible to set up paths with independent constraints;

- MPLS allows the traditional ATM devices to be re-used by simply updating their software.

- Main features

- possibility of traffic engineering: distributing traffic load over the network to avoid congestion;

- protocol independence (multi-protocol) → useful for transition from IPv4 to IPv6;

- designed to grant quality of service (not yet supported);

- unified control plane: it can be used for any network besides IP (e.g. MPλS for optical networks);

- fast fault recovery: two paths between a pair of nodes can be created, so that in case of failure in the first path the LSR can just notify the failure and quickly deviate the traffic to the second path[1] (instead in IP it is difficult to insert two paths into a routing table, and if a link fails routers need to exchange routing information and perform sophisticated algorithms to find another path).

Network architecture

A Label Switch Router (LSR) is the device responsible for switching the labels used to route packets. LSRs are called label edge routers when placed at the edges of the MPLS cloud. LSRs combine smartness of routers and speed of switches: they are able to route in a clever way like routers, avoiding complicated data structures and algorithms like switches.

MPLS clouds can be deployed gradually: they can grow and be integrated with one another.

Data plane

The data plane is the capability of switching packets based on their labels.

MPLS header

IP packets are prefixed with an MPLS header containing one or more label stack entries. Each label stack entry contains four fields:

- label: routing is based on this field instead of the IP destination address;

- traffic class (exp): for quality of service (QoS) priority and Explicit Congestion Notification (ECN);

- bottom of stack flag (S): if set, the current label is the last one in the stack;

- Time to Live (TTL).
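The four fields can be packed into a single 32-bit word. The sketch below assumes the commonly used field widths (20-bit label, 3-bit traffic class, 1-bit bottom-of-stack flag, 8-bit TTL), which the text above does not state; the function names are hypothetical.

```python
import struct


def pack_entry(label: int, traffic_class: int, bottom: int, ttl: int) -> bytes:
    """Encode one label stack entry as 4 bytes in network byte order."""
    if not (0 <= label < 2**20 and 0 <= traffic_class < 8
            and bottom in (0, 1) and 0 <= ttl < 256):
        raise ValueError("field out of range")
    word = (label << 12) | (traffic_class << 9) | (bottom << 8) | ttl
    return struct.pack("!I", word)


def unpack_entry(data: bytes) -> tuple:
    """Decode the first 4 bytes into (label, traffic_class, bottom, ttl)."""
    (word,) = struct.unpack("!I", data[:4])
    return word >> 12, (word >> 9) & 0x7, (word >> 8) & 0x1, word & 0xFF


# A two-entry stack: the outer entry comes first, the inner one has S = 1.
stack = pack_entry(100, 0, 0, 64) + pack_entry(16, 0, 1, 64)
print(unpack_entry(stack[0:4]), unpack_entry(stack[4:8]))
```

An LSR would keep reading 4-byte entries until it finds one with the bottom-of-stack flag set, after which the IP packet starts.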

Label switching

A Label Switched Path (LSP) is a path set up by a signaling protocol that links a source label edge router (ingress) to a destination one (egress):

- when the ingress LSR receives a packet, it adds a label to it and forwards it to the next hop of the LSP previously created;

- when the egress LSR receives a packet, it strips off its label and forwards it out of the MPLS cloud.

A Forwarding Equivalence Class (FEC) is a set of packets which may be forwarded in the same way; that is, they may be bound to the same MPLS labels. Labels are not unique over the whole MPLS cloud, but they are changed on each hop (label swapping). Consider that granting the uniqueness of the labels all over the network would require too complex protocols and too long labels.

Using labels enables MPLS to provide two kinds of services:

- fast lookup: IP routing, based on the 'longest prefix matching' algorithm, is sophisticated, difficult to optimize and not fast enough when dealing with a large number of routes.

  - MPLS provides a faster lookup than IP because packet-forwarding decisions are made solely on the label, placed before the IP packet, without the need to examine the contents of the packet itself: each label can in fact be used as a key to access the routing table as an array or hash table in order to expedite the route lookup;

- traffic engineering: IP tends to aggregate the traffic, but having lots of packets going through the same path does not provide an efficient service. This can not be avoided easily as it would require a static route configuration → expensive and not scalable.

  - MPLS is able to control the traffic like a connection-oriented protocol: MPLS routing involves both source and destination labels, and routers can assign to a new packet flow the label corresponding to the least-loaded path in order to avoid congestion and allow traffic distribution. Moreover a failure in a path due to a non-working node will not affect the other paths.
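Label swapping with a hash-table lookup can be sketched as follows. The router names, port numbers and label values are made up for illustration, and a real LSR would keep this table in hardware rather than in a dictionary.

```python
# One forwarding table per LSR: (incoming port, incoming label) -> (outgoing port, outgoing label).
# All names and values below are hypothetical.
LSR_TABLES = {
    "A": {(1, 17): (2, 42)},
    "B": {(1, 42): (3, 99)},
}


def swap(lsr: str, in_port: int, in_label: int) -> tuple:
    """Single dictionary lookup on the label: no longest prefix matching is needed."""
    return LSR_TABLES[lsr][(in_port, in_label)]


# Follow one packet along the LSP: the label is rewritten at every hop.
out_port_a, label_after_a = swap("A", 1, 17)
out_port_b, label_after_b = swap("B", 1, label_after_a)
print(label_after_a, label_after_b)
```

The lookup cost does not depend on the number of IP routes, and the same label value can be reused by different LSRs because it only has a local meaning.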

Hierarchy and scalability

MPLS is very scalable: inside a big MPLS cloud of domain 1 it is possible to define in a hierarchical way a smaller MPLS cloud of domain 2 and so on, and multiple label stack entries can be stored next to each other in a stack data structure. The label stack entries are added from the inner one to the outer one while the packet enters clouds of higher domain and stripped off from the outer one to the inner one while the packet exits clouds of lower domain, and LSRs not at the edges of the clouds always process the outer label stack entry. This hierarchy of labels can correspond to a hierarchy of providers, and the number of labels is limited only by the Ethernet frame size.

This technique introduces some advantages:

- it reduces the size of the routing and forwarding tables, because they do not have to be comprehensive;

- it allows the existing switching hardware (ATM, Frame Relay, etc.) to be re-used: MPLS headers are put directly into the layer 2 headers, so that they can be processed by the existing hardware, which currently processes layer 2, simply by upgrading its software.

Control plane

The control plane is the capability of choosing the labels to be inserted into the packets.

The creation of a forwarding table (and in a broader sense of the LSP) for a specific FEC is performed in three steps:

- label binding: it is always performed by the downstream node, which chooses a label for the FEC, and this can be performed in two ways (not mutually exclusive):

  - unsolicited: the downstream node can decide at any time to assign labels, even if there is no traffic in the network;

  - on-demand: the upstream node can ask the downstream node for a fixed label;

- label distribution: the downstream node communicates the chosen label to the upstream node;

- label mapping: the upstream node creates a new entry in its forwarding table by binding incoming packets, coming from a specific port with a specific label, to outgoing packets, going out of a specific port with a specific label.
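The three steps can be mimicked by a toy model in which distribution is a direct method call instead of a real signaling protocol. The class `LSR`, its methods and the FEC strings are hypothetical, and starting labels at 16 reflects the assumption that lower values are reserved.

```python
import itertools


class LSR:
    """Toy label switch router; not a real protocol implementation."""

    def __init__(self, name: str):
        self.name = name
        self._free_labels = itertools.count(16)  # assumption: labels 0-15 are reserved
        self.bindings = {}          # FEC -> label chosen by this node for incoming packets
        self.forwarding_table = {}  # (in_port, in_label) -> (out_port, out_label)

    def bind(self, fec: str) -> int:
        """Label binding: this node, acting as downstream, chooses a label for the FEC."""
        if fec not in self.bindings:
            self.bindings[fec] = next(self._free_labels)
        return self.bindings[fec]

    def install(self, fec: str, in_port: int, out_port: int, downstream: "LSR") -> int:
        """Label distribution and mapping, seen from the upstream node."""
        out_label = downstream.bind(fec)  # distribution: learn the downstream label
        in_label = self.bind(fec)         # label this node will announce further upstream
        self.forwarding_table[(in_port, in_label)] = (out_port, out_label)  # mapping
        return in_label


egress, core = LSR("egress"), LSR("core")
core.bind("192.168.0.0/16")  # an unrelated FEC already uses label 16 on the core node
in_label = core.install("10.0.0.0/8", in_port=1, out_port=2, downstream=egress)
print(in_label, core.forwarding_table)
```

Here the core node receives the FEC with label 17 and forwards it with label 16, the value picked by the downstream node.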

Labels can be assigned in two ways:

- statically: network manager sets LSPs manually, like permanent virtual circuits (PVC) in connection-oriented technologies like ATM → this solution does not scale and limits the interoperability among different service providers;

- dynamically: label binding, distribution and mapping are performed automatically by LSRs without manual intervention:

- data-driven: the creation of an LSP is triggered by the reception of data packets, and each LSR autonomously chooses labels based on the traffic;

- control-driven: at some point the LSR assigns a label, even if there is no traffic;

- topology-driven (or protocol-driven): whenever a new destination is discovered, an LSP is created towards this destination → no traffic engineering: the network works exactly like an IP network;

- explicit: the creation of LSPs, usually initiated by label edge routers either data-driven or by manual configuration, is performed through explicit signaling.

Protocols

Label distribution protocols

Three protocols, incompatible with each other, can be used by the downstream node in order to communicate the label bindings to the upstream node:

- Label Distribution Protocol (LDP): designed specifically for label distribution;

- extended Border Gateway Protocol (BGP): the downstream node includes in BGP routing messages, used to advertise new destinations, a new field that tells the upstream node the chosen labels (only for protocol-driven label binding);

- extended Resource Reservation Protocol (RSVP): the downstream node includes in RSVP messages, used to notify the traffic types of packet flows for quality of service, a new field that tells the upstream node the chosen labels (see Computer network technologies and services/Quality of service).

Routing protocols

The traditional routing protocols can be enhanced to support traffic engineering because they carry information about routing constraints.

Thanks to routing protocols such as OSPF-TE and IS-IS-TE (based on OSPF, IS-IS, BGP-4), every node can collect information about the network topology in order to know which nodes are its upstream nodes to be notified with the label bindings.

There are two possible routing strategies:

- hop-by-hop (as it is in IP routing): distributed routing protocol where each LSR decides by itself according to the shortest path criterion, so it may happen that all routers choose the same path → risk of congestion;

- explicit (possibility of constraint-based routing): centralized routing protocol where the egress LSRs are informed of which links are currently the most loaded ones and choose the least loaded links for creating new LSPs, so that they are as disjoint as possible from other paths.

- In order to support explicit routing, the basic label distribution protocols should be extended:

- Constraint-based Routing LDP (CR-LDP) is an extension to LDP;

- RSVP for Traffic Engineering (RSVP-TE) is an extension to RSVP.

References

- ↑ An overhead is required to keep available two LSPs for the same FEC.

IPv6

Internet Protocol version 6 (IPv6) is a new protocol aimed to overcome IPv4 limits: the main reason for introducing a new protocol is to have a larger address space with respect to the IPv4 one.

Comparison to IPv4

IPv6 expands the ICMP protocol by integrating the following protocols:

- ARP: called 'neighbor discovery' for address configuration process;

- IGMP: called 'Multicast Listener Discovery' to manage multicast group memberships.

With IPv6 some protocols need just to be upgraded, mainly due to the fact that they all deal with addresses (these protocols are not layer-3 independent):

- DNS protocols;

- routing protocols: RIP, OSPF, BGP, IDRP;

- transport protocols: TCP, UDP;

- socket interfaces.

IPv6 additional features

The additional features listed below were originally designed as add-ons for IPv4, then they were ported to be embedded into IPv6.

- Deployment on LANs

It is more efficient, thanks to an efficient usage of multicast and anycast addresses:

- multicast: each multicast address identifies a group of stations, and the packet is forwarded to all the nodes in the group;

- anycast: each anycast address identifies a group of stations, but the packet is forwarded just to the closest node in the group.

- Data security and privacy

Security mechanisms such as IPsec are included in the IPv6 protocol.

- Policy routing

It is the possibility to forward packets by using policies other than the destination address (e.g. forwarding by source address).

- Plug and play

Autoconfiguration protocols are defined:

- stateless: only link-local access is guaranteed without contacting any server;

- stateful: it is possible to have access to the Internet by using a DHCP server.

- Traffic differentiation

Not all data flows are equal (e.g. phone calls require lower delays).

- Mobility

It is the capability of moving the device across different networks while keeping available all services (e.g. mobile devices that use GSM/LTE moving around different cells).

- Nomadicity

It is the capability of moving the device across different networks without the need to keep services active → less strict than mobility.

- Better scalability with routing

As a general rule aggregation is required to make routing easier but it requires a waste of addresses. IPv6 routing uses almost the same techniques as IPv4 but it can reduce the routing tables, if the addresses are given in an efficient way.

Addressing

Address format

Each IPv6 address is 128 bits long, and the prefix replaces the netmask:

| prefix | interface identifier |

Links

The concept of link in IPv6 is the same as the concept of subnetwork in IPv4:

- in IPv4 a subnetwork is a set of hosts with the same prefix;

- in IPv6 a link is the actual physical network.

All the hosts in the same subnetwork belong to the same link and vice versa:

- on-link hosts have the same prefix, so they can communicate directly;

- off-link hosts have different prefixes, so they can communicate through a router.

Addressing space organization

Global unicast addresses

Aggregatable global unicast addresses

They are equivalent to the IPv4 public addresses, and they begin with the three bits '001':

| 3 | 16 | 48 | 64 | 88 | 96 | 104 | 128 |

| 001 | ID TLA | ID NLA | ID SLA | OUI ('universal' bit = 1) | FF | FE | manufacturer-selected MAC portion |

| prefix | interface identifier (EUI 64) | ||||||

- Prefix: it must be the same as the one assigned to the link which the host is connected to.

- Assignment criterion for prefixes is topology-based: they are assigned according to the service provider hierarchy:

- Top-Level Aggregation (TLA): a large service provider;

- Next-Level Aggregation (NLA): an intermediate service provider;

- Site-Level Aggregation (SLA): the organization.

- Interface identifier: it identifies the host interface.

- Optionally it can be in EUI-64 format: the 64-bit IPv6 interface identifier derives from the host's 48-bit MAC address:

| 24 | 48 |

| OUI ('universal' bit = 0) | manufacturer-selected MAC portion |

- where the 'universal' bit is the seventh bit in the OUI and it is changed from 0 to 1.
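The derivation of the interface identifier from a MAC address can be sketched as below. The function name `mac_to_eui64` is hypothetical, and the sample MAC address is chosen only because it yields the interface identifier used in a later example of this chapter.

```python
def mac_to_eui64(mac: str) -> str:
    """Build the 64-bit interface identifier from a 48-bit MAC address."""
    octets = bytearray(int(part, 16) for part in mac.replace("-", ":").split(":"))
    if len(octets) != 6:
        raise ValueError("a MAC address has exactly 6 octets")
    # Invert the 'universal' bit (seventh bit of the first octet);
    # for a universally administered MAC address it goes from 0 to 1.
    octets[0] ^= 0x02
    # Insert FF FE between the OUI and the manufacturer-selected portion.
    eui = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])
    groups = []
    for i in range(0, 8, 2):
        groups.append("{:04x}".format((eui[i] << 8) | eui[i + 1]))
    return ":".join(groups)


print(mac_to_eui64("00:37:00:02:A7:FD"))
```

Prepending a 64-bit prefix to the result gives the full 128-bit address.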

Addresses for IPv4 interoperability

They are to be used during the transition phase, and they begin with 80 bits set to zero:

- IPv4-mapped addresses: the first 80 bits are zeros and the next 16 bits are set to one:

| 0000 | 0000 | 0000 | 0000 | 0000 | FFFF | ... |

- IPv4-compatible addresses: the first 80 bits are zeros and the next 16 bits are set to zero (e.g. the IPv6 address '::10.0.0.1' maps the IPv4 address '10.0.0.1'):

| 0000 | 0000 | 0000 | 0000 | 0000 | 0000 | ... |
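Both formats can be built with Python's standard `ipaddress` module, as in this sketch; the helper names `ipv4_mapped` and `ipv4_compatible` are hypothetical.

```python
import ipaddress


def ipv4_mapped(ipv4: str) -> ipaddress.IPv6Address:
    """80 zero bits, 16 one bits, then the 32-bit IPv4 address."""
    v4 = int(ipaddress.IPv4Address(ipv4))
    return ipaddress.IPv6Address((0xFFFF << 32) | v4)


def ipv4_compatible(ipv4: str) -> ipaddress.IPv6Address:
    """96 zero bits followed by the 32-bit IPv4 address."""
    return ipaddress.IPv6Address(int(ipaddress.IPv4Address(ipv4)))


mapped = ipv4_mapped("10.0.0.1")
compatible = ipv4_compatible("10.0.0.1")
print(mapped.ipv4_mapped)                                # embedded IPv4 address
print(compatible == ipaddress.IPv6Address("::10.0.0.1"))
```

The `ipv4_mapped` attribute of `IPv6Address` returns the embedded IPv4 address for mapped addresses and `None` otherwise, which gives a quick way to tell the two formats apart.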

Local unicast addresses

Link local addresses

They refer to the 'automatic' private addresses, generated by autoconfiguration, which is the process where a station automatically generates an address to connect to an IPv6 link:

| FExx | ... |

Site local addresses

They are equivalent to the IPv4 private addresses:

| FDxx | ... |

Multicast addresses

A multicast address identifies a group of stations and it has the following format:

| 8 | 12 | 16 | 128 |

| FF | Flag (000T) | Scope | Group ID |

where the fields are:

- Flag field (4 bits): it is used to mark a multicast group:

- T = 1: the multicast group is temporary (e.g. user-defined conference call);

- T = 0: the multicast group is permanent (e.g. address of all the hosts in the network, it can not be overwritten);

- Scope field (4 bits): it is used to limit the diffusion of the multicast (better than IPv4 TTL):

- 1 = node local: the packet can not go outside the host;

- 2 = link local: the packet can not go outside the layer 2 network;

- 5 = site local: the packet can not go outside e.g. the campus network;

- 8 = organization local: the packet can not go outside the organization network;

- E = global: the packet can go everywhere;

- Group ID field (112 bits): it identifies the multicast group, and the packet is forwarded to all the nodes in the group.
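The field layout can be decoded with a few shifts and masks, as in this sketch; the function name `parse_multicast` and the sample addresses are only illustrative.

```python
import ipaddress

# Scope values listed above.
SCOPES = {
    0x1: "node local",
    0x2: "link local",
    0x5: "site local",
    0x8: "organization local",
    0xE: "global",
}


def parse_multicast(addr: str) -> dict:
    """Split an IPv6 multicast address into flag, scope and group ID."""
    value = int(ipaddress.IPv6Address(addr))
    if value >> 120 != 0xFF:
        raise ValueError("not a multicast address")
    flags = (value >> 116) & 0xF
    scope = (value >> 112) & 0xF
    return {
        "temporary": bool(flags & 0x1),      # T bit
        "scope": SCOPES.get(scope, "unknown"),
        "group_id": value & ((1 << 112) - 1),
    }


print(parse_multicast("ff02::1"))
print(parse_multicast("ff1e::abcd"))
```

The first address is a permanent link-local group, the second a temporary group with global scope.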

If a host wants to belong to a multicast group, it needs to ask for it by using the ICMP protocol; once it is added to the multicast group, it will receive all the packets sent to that particular multicast address. It is very important to notice that the hosts that will receive a multicast packet are not defined by the source, but they are 'decided' by the destinations.

Solicited node multicast addresses

Every operating node by default belongs to a solicited node multicast group whose address derives from its IPv6 address:

| 96 | 104 | 128 |

| FF02::1 | FF | 24 least significant bits from the IPv6 address |

There may be more than one host in the same multicast group, but generally there are not since the multicast address is generated from the IPv6 address.
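A minimal sketch of the derivation, using the standard `ipaddress` module; the function name `solicited_node` is hypothetical.

```python
import ipaddress

# Fixed 104-bit part: FF02::1 followed by FF, with the last 24 bits left at zero.
SOLICITED_NODE_BASE = int(ipaddress.IPv6Address("ff02::1:ff00:0"))


def solicited_node(addr: str) -> ipaddress.IPv6Address:
    """Solicited node multicast address for a unicast IPv6 address."""
    low24 = int(ipaddress.IPv6Address(addr)) & 0xFFFFFF
    return ipaddress.IPv6Address(SOLICITED_NODE_BASE | low24)


print(solicited_node("fe80::237:ff:fe02:a7fd"))
```

Two addresses end up in the same group only if their last 24 bits coincide, which is why groups usually contain a single host.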

Mapping IPv6 over Ethernet

Each multicast packet is delivered through an Ethernet frame with a specific MAC address derived from the IPv6 multicast address, so that the packet is processed just by the interested hosts:

| 16 | 48 |

| 3333 | 32 least significant bits from the target IPv6 address |
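The mapping can be sketched in a few lines; the function name `multicast_mac` is hypothetical.

```python
import ipaddress


def multicast_mac(addr: str) -> str:
    """Ethernet destination MAC address for an IPv6 multicast address."""
    low32 = int(ipaddress.IPv6Address(addr)) & 0xFFFFFFFF
    octets = bytes([0x33, 0x33]) + low32.to_bytes(4, "big")
    return ":".join("{:02x}".format(octet) for octet in octets)


print(multicast_mac("ff02::1:ff02:a7fd"))
```

A network card can therefore filter most unwanted multicast frames in hardware by comparing only the destination MAC address.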

Advanced topics related to IPv6 addresses

Renumbering

As the prefixes for global addresses are assigned according to the service provider hierarchy, if a company wants to change from a service provider to another one, all the links in the company network will have to change their prefixes. IPv6 is meant to support easy renumbering for both hosts and routers:

- hosts: routers gradually stop advertising the old prefix (deprecated) and start advertising the new one (preferred) → each host will have during the migration phase two addresses with different prefixes for the same interface;

- routers: Router Renumbering is a standard which allows the border router to notify the other internal routers of the new prefix.

However renumbering still has some unsolved problems, related to how to automatically update e.g. the DNS entries, firewall filters, address-based corporate policies, etc.

Multi-homing

A big company may decide to buy Internet connectivity from two different service providers because it wants to keep being connected to the Internet even if one of the service providers has some problems.

As the prefixes for global addresses are assigned according to the service provider hierarchy, each host inside the company network will have two global addresses with different prefixes for the same interface → the host will have to select which address to use for every outgoing packet. This may cause some non-trivial configuration problems:

- routing based on destination address: the host should be able to select the right prefix for outgoing packets, otherwise let us suppose the host selects the provider A's prefix but the destination is in the provider B's network → the border router thanks to its routing mechanisms will forward the packet directly into the provider B's network → the provider B will block that packet because the source address has a different prefix;

- double registration in DNSes: the host should be registered in DNSes by two different addresses for the same alias;

- automatic renumbering: renumbering mechanisms should dynamically support a change from a provider B to a provider C.

Scoped addresses

A host can have two interfaces (e.g. an Ethernet interface and a wi-fi one) which can be connected to two different links at the same time. When the host wants to send a packet to a link local target address, it does not know whether to make the packet exit the interface A or the interface B, because both the links have the same prefix; moreover, as each link local address is unique within its link, a host in the link A may have the same link local address as another host in the link B.

In IPv6 the host needs to specify in the target IPv6 address an identifier called scope which is used to identify the physical interface (e.g. FE80::0237:00FF:FE02:A7FD%19). The values for the scopes are selected by the operating system according to its internal criteria.
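As a minimal sketch of how software can handle such an address, the following Python snippet splits the example address above into the address itself and the scope. The helper name `split_scope` is hypothetical; only the standard `ipaddress` module is used.

```python
import ipaddress

def split_scope(text):
    """Split 'address%scope' into an IPv6Address and the scope string (or None)."""
    address, _, scope = text.partition("%")
    return ipaddress.IPv6Address(address), (scope or None)

# Example address taken from the text above
addr, scope = split_scope("FE80::0237:00FF:FE02:A7FD%19")
print(addr, addr.is_link_local, scope)
```

The scope is kept as an opaque string here, since its values are chosen by the operating system.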

Standard IPv6 header

The standard IPv6 header has the following fixed-size (40 bytes) format:

| 4 | 12 | 16 | 24 | 32 |

| Version (6) | Priority | Flow label | ||

| Payload length | Next header | Hop limit | ||

| Source | ||||

| address | ||||

| Destination | ||||

| address | ||||

where the most significant fields are:

- Version field (4 bits): it is not really used, because the packet discrimination is made by the layer 2 → this enables the dual-stack approach (see Migration to IPv6, 'Migrating operating systems');

- Priority field (8 bits): equivalent to the IPv4 'Type of Service' field, it distinguishes different kinds of services for quality of service (see Quality of service, 'Architecture');

- Flow label field (20 bits): it distinguishes different flows for quality of service;

- Next header field (8 bits): it refers to the packet payload, that is a header at upper layer (e.g. TCP/UDP) or the first extension header in the chain (see 'Extension headers');

- Hop limit field (8 bits): it is equivalent to the IPv4 'Time To Live' field;

- Source address field (128 bits): it contains the sender's IPv6 source address for the packet;

- Destination address field (128 bits): it contains the addressee's IPv6 destination address for the packet.

Some IPv4 fields have been removed:

- Checksum field: error protection is delegated to layer 2 (frame check sequence);

- Fragmentation field: fragmentation is delegated to the 'Fragment' extension header;

- Header length field: IPv6 header is fixed-size, as additional features are optionally offered by extension headers.
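The fixed layout described above can be illustrated with a short Python sketch that packs and parses the 40-byte header. The function names and the addresses in the `2001:db8::/32` documentation prefix are illustrative assumptions, and the value 6 used for 'Next header' is the protocol number of TCP.

```python
import ipaddress
import struct

def build_ipv6_header(payload_length, next_header, hop_limit, src, dst,
                      priority=0, flow_label=0):
    """Pack the 40-byte fixed IPv6 header (hypothetical helper)."""
    # First 32-bit word: version (4 bits) | priority (8 bits) | flow label (20 bits)
    first_word = (6 << 28) | (priority << 20) | flow_label
    return (struct.pack("!IHBB", first_word, payload_length, next_header, hop_limit)
            + ipaddress.IPv6Address(src).packed
            + ipaddress.IPv6Address(dst).packed)

def parse_ipv6_header(data):
    """Unpack the fixed header into a dictionary of fields."""
    first_word, payload_length, next_header, hop_limit = struct.unpack("!IHBB", data[:8])
    return {
        "version": first_word >> 28,
        "priority": (first_word >> 20) & 0xFF,
        "flow_label": first_word & 0xFFFFF,
        "payload_length": payload_length,
        "next_header": next_header,
        "hop_limit": hop_limit,
        "source": ipaddress.IPv6Address(data[8:24]),
        "destination": ipaddress.IPv6Address(data[24:40]),
    }

header = build_ipv6_header(20, 6, 64, "2001:db8::1", "2001:db8::2")
fields = parse_ipv6_header(header)
print(len(header), fields["version"], fields["hop_limit"])
```

Since the header has a fixed size, the parser never needs a header length field: the addresses always sit at bytes 8 to 39.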

Extension headers

There are six extension headers, added only when needed and processed in the following order:

- Hop by hop option: it includes optional information to be processed by every hop;

- Routing: it enables source routing, that is the source decides which route the packet needs to take;

- Fragment: it manages fragmentation;

- Authentication Header (AH): it allows to authenticate the sender;

- Encapsulating Security Payload (ESP): it allows to encrypt the packet contents;

- Destination option: it includes optional information to be processed just by the destination.

Routers always process only up to the 'Routing' extension header.

All the extension headers have the same generic format (the length must be a multiple of 64 bits):

| 8 | 16 | 32 |

| Next Header | Header Length | |

| Extension data ::: | ||

where the fields are:

- Next Header field: it specifies the following extension header in the chain, or the header at upper layer (e.g. TCP/UDP) if this is the last extension header;

- Header Length field: it specifies the length of the current extension header.

- As new extension headers can be standardized over time, old devices may not be able to process recent extension headers → they can look at the 'Header Length' field to skip the unknown extension header.

- The 'Header Length' field may be absent from some extension headers (such as the 'Fragment' extension header) which the IPv6 standard defines as having fixed length.
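The chaining mechanism can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the protocol numbers (0, 43, 44, 60) and the unit of the 'Header Length' field (8-byte blocks, not counting the first 8 bytes) come from the IPv6 specification rather than from the text above, the function name is hypothetical, and the AH and ESP headers are deliberately not handled.

```python
# Extension headers that follow the generic format (protocol number: name)
GENERIC = {0: "Hop by hop option", 43: "Routing", 60: "Destination option"}
FRAGMENT = 44  # fixed-length header without a 'Header Length' field

def walk_extension_headers(first_next_header, data):
    """Return (extension header names, upper-layer protocol number, payload offset)."""
    names = []
    next_header, offset = first_next_header, 0
    while True:
        if next_header in GENERIC:
            # Length is expressed in 8-byte units, excluding the first 8 bytes
            length = (data[offset + 1] + 1) * 8
            names.append(GENERIC[next_header])
        elif next_header == FRAGMENT:
            length = 8
            names.append("Fragment")
        else:
            # Anything else is treated as the upper-layer header in this sketch
            return names, next_header, offset
        next_header = data[offset]
        offset += length

# A Hop by hop header followed by a Routing header, then TCP (protocol 6)
chain = bytes([43, 0]) + bytes(6) + bytes([6, 0]) + bytes(6)
names, upper, offset = walk_extension_headers(0, chain)
print(names, upper, offset)
```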

Hop by hop option and Destination option

The Hop by hop option and Destination option extension headers can include multiple additional options:

- Hop by hop option: it includes options which every router the packet goes through has to process;

- Destination option: it includes options which just the destination has to process.

For example, if there are two options with 8-bit-long values, the extension header will have the following format:

| 8 | 16 | 24 | 32 |

| Next Header | Header Length | Type1 | Length1 |

| Value1 | Type2 | Length2 | Value2 |

where each option always has the three following fields:

- Length field (8 bits): it specifies the length of the current option, so that routers unable to recognize the option can just skip it;

- Type field (8 bits): it identifies the current option.

- The first two bits always specify the action to be executed in case the option is not recognized, while the third bit specifies whether the option can be changed on-the-fly:

- 00 = the current option can be ignored and it is possible to proceed to the next one;

- 01 = the packet must be discarded;

- 10 = the packet must be discarded and an ICMPv6 Parameter Problem must be generated;

- 11 = the packet must be discarded and an ICMPv6 Parameter Problem must be generated, unless the destination address is a multicast one;

- xx0 = the option can not be changed;

- xx1 = the option can be changed on-the-fly;

- Value field (variable length): it contains the value of the option.
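The meaning of the first three bits of the 'Type' field can be sketched as a small Python decoder. The function name and the wording of the action strings are illustrative, not part of the standard.

```python
ACTIONS = {
    0b00: "skip the option",
    0b01: "discard the packet",
    0b10: "discard the packet and send ICMPv6 Parameter Problem",
    0b11: "discard the packet and send ICMPv6 Parameter Problem unless multicast",
}

def decode_option_type(option_type):
    """Return (action for unrecognized option, whether it can change on-the-fly)."""
    action = ACTIONS[option_type >> 6]           # first two bits of the 8-bit type
    changeable = bool((option_type >> 5) & 1)    # third bit
    return action, changeable

print(decode_option_type(0b00100000))
print(decode_option_type(0b11000010))
```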

Routing

The Routing extension header allows the source to decide which route the packet needs to take (source routing), and it has the following format:

| 8 | 16 | 24 | 32 | |

| Next Header | Header Length | Routing Type | Segment Left | |

| (reserved) | ||||

| Router | ||||

| Address 1 | ||||

| ... | ||||

| Router | ||||

| Address N | ||||

where the fields are:

- Routing Type field (8 bits): it specifies the type of routing (currently '0' for classical source routing);

- Segment Left field (8 bits): it specifies the number of remaining hops to the destination;

- Router Address fields (128 bits each one): they are the list of the IPv6 addresses of the routers which the packet should go through.

In the example in the side figure, source S sends the packet towards destination D, adding a 'Routing' extension header which forces the packet to go through intermediate routers R1 and R2. At first the packet apparently has router R1 as its destination, while the real destination D is specified as the last step in the router list carried by the 'Routing' extension header. When the packet arrives at router R1, the router recognizes it as apparently addressed to itself, because its own address appears in the 'Destination Address' field in the IPv6 header. Router R1 checks the next headers and discovers that the packet contains a 'Routing' extension header, realizing that the final destination for the packet is another host (in particular the 'Segment Left' field says that two hops should be traversed before arriving at the final destination). Router R1 finds in the list the IPv6 address of the next hop to which it should send the packet, replaces that list entry with its own IPv6 address, and then sends the packet with the destination set to R2. The process continues hop by hop, until destination D receives an IPv6 packet whose 'Routing' extension header contains the 'Segment Left' field set to 0, which means that the packet has reached the final destination. Destination D is able to know all the hops the packet passed through because they are all written in the 'Routing' extension header, so it can send the reply to source S by specifying the same list of hops in reverse order.
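The hop-by-hop processing in this example can be simulated with a short Python sketch. The packet is modelled as a plain dictionary and the node names S, R1, R2 and D stand in for IPv6 addresses; the function name is hypothetical.

```python
def process_routing_header(packet):
    """Process the 'Routing' header at the node owning packet['destination']."""
    if packet["segment_left"] == 0:
        return packet  # the final destination has been reached
    addresses = list(packet["addresses"])
    index = len(addresses) - packet["segment_left"]
    # Swap the current destination with the next address in the list
    next_hop = addresses[index]
    addresses[index] = packet["destination"]
    return {"destination": next_hop,
            "segment_left": packet["segment_left"] - 1,
            "addresses": addresses}

# Packet as sent by S: apparent destination R1, real destination D last in the list
packet = {"destination": "R1", "segment_left": 2, "addresses": ["R2", "D"]}
at_r2 = process_routing_header(packet)   # processing performed by R1
at_d = process_routing_header(at_r2)     # processing performed by R2
print(at_r2)
print(at_d)
```

When the packet reaches D, the address list contains the routers actually traversed, which is what allows D to build the reverse route.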

Fragment

The Fragment extension header allows a packet to be sent in smaller parts called 'fragments', and it has the following format:

| 8 | 16 | 29 | 31 | 32 |

| Next Header | (reserved) | Fragment Offset | (reserved) | M |

| Identification | ||||

where the fields are:

- Fragment Offset field (13 bits): it specifies the offset, expressed in 8-byte units, at which the fragment starts within the fragmented section in the original packet;

- More Fragments (M) flag (1 bit): if it is set to 0 the current packet is the last fragment;

- Identification field (32 bits): all the fragments of a specific packet have the same identifier.

Each packet includes two sections:

- a section that can not be fragmented, so it is repeated in all fragments: it includes the IPv6 header and all the extension headers preceding the 'Fragment' extension header;

- a section that can be fragmented: it includes all the extension headers following the 'Fragment' extension header and the packet payload.

In contrast to IPv4, only the sender node is allowed to fragment the datagrams, while IPv6 routers do not support fragmentation. Moreover, the IPv6 standard strongly suggests using Path MTU Discovery instead of fragmentation for performance reasons (see 'Packet Too Big').
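The way the sender splits the fragmentable section can be sketched in Python as follows. The sketch assumes, as in the IPv6 specification, that the 'Fragment Offset' is counted in 8-byte units, so every fragment except the last one carries a multiple of 8 bytes; the function names and the sizes used are illustrative.

```python
def fragment(payload, max_fragment_data):
    """Split the fragmentable section into (offset in 8-byte units, M flag, data)."""
    # Every fragment but the last must carry a multiple of 8 bytes
    chunk = max_fragment_data - (max_fragment_data % 8)
    if chunk <= 0:
        raise ValueError("max_fragment_data must be at least 8 bytes")
    fragments = []
    for start in range(0, len(payload), chunk):
        data = payload[start:start + chunk]
        more = start + chunk < len(payload)   # M = 0 only in the last fragment
        fragments.append((start // 8, more, data))
    return fragments

def reassemble(fragments):
    """Rebuild the fragmentable section at the destination."""
    ordered = sorted(fragments, key=lambda item: item[0])
    return b"".join(data for _, _, data in ordered)

payload = bytes(range(256)) * 12          # 3072 bytes of sample data
pieces = fragment(payload[:3000], 1450)
print([(offset, more, len(data)) for offset, more, data in pieces])
```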

IPsec

The security solutions developed for IPv6 have been ported from the IPsec protocol suite for IPv4. In IPv6, IPsec is an integrated protocol suite that defines two headers:

- Authentication Header (AH): it authenticates the whole packet, except the fields which are changed on passing from one hop to another (e.g. 'Hop limit' field), guaranteeing that no one has tampered with the contents of the packet;

- Encapsulating Security Payload (ESP): it authenticates and encrypts the packet payload for data privacy.

SA

IPsec does not define which algorithms are to be used for encryption and authentication, but the two parties have to agree on which ones to use for exchanging IPsec-protected information → flexibility: algorithms are chosen according to the current needs.

A Security Association (SA) can be defined as the set of agreements between two parties A and B on the private keys and algorithms to be used for ESP authentication and encryption and AH authentication. Each SA is identified by an identification tag called Security Parameter Index (SPI), included in the AH and ESP headers, and it is a one-way logical channel: A and B have to open a SA to agree on keys and algorithms for messages going from A to B, and they have to open another SA to agree on them for messages going from B to A. Often a SA is opened for each TCP port.

IKE

How can A and B agree on secret keys while preventing third parties from learning them? There are three main strategies:

- static configuration: the keys are configured manually in A and B → key negotiation is not required at all;

- Diffie-Hellman method: it allows to agree on a key without exchanging it → nobody can discover the secret keys by sniffing the traffic between A and B;

- Internet Key Exchange (IKE) protocol: it uses digital certificates and asymmetrical cryptography to send secret keys in a secure way.
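The Diffie-Hellman idea mentioned in the list above can be illustrated with a toy Python sketch. The tiny modulus and generator (23 and 5) are textbook values chosen only for readability and offer no security; real deployments use much larger, standardized groups. The function names are hypothetical.

```python
import secrets

P = 23  # toy prime modulus (assumption, for illustration only)
G = 5   # toy generator

def dh_keypair():
    """Pick a random private value and derive the public value to be exchanged."""
    private = secrets.randbelow(P - 2) + 1   # value in 1..P-2
    return private, pow(G, private, P)

def dh_shared(private, other_public):
    """Combine the own private value with the public value received from the peer."""
    return pow(other_public, private, P)

a_private, a_public = dh_keypair()   # computed by A
b_private, b_public = dh_keypair()   # computed by B
# Only a_public and b_public travel over the network
print(dh_shared(a_private, b_public) == dh_shared(b_private, a_public))
```

Both parties obtain the same value although the key itself is never transmitted, which is the property the text refers to.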

The IKE protocol specifies that an IKE SA has to be established from A to B to agree on the secret keys for the child SA from A to B, and vice versa another one for the child SA from B to A. The IKE SA from A to B consists of the following operations based on asymmetrical cryptography:[1]

- B asks A for a secret key to be used for the child SA from A to B;

- A asks a trusted certification authority for B's digital certificate, in order to know if B is really who it claims to be;

- the certification authority provides A with B's digital certificate, encrypted by using the certification authority's private key, containing B's signature, that is the association between B and a public key;

- A decrypts the digital certificate by using the certification authority's public key and learns the public key associated to B;

- A sends the secret key for the child SA to B, encrypting the message by using the public key associated to B so that it can be decrypted only by knowing B's private key;

- B receives the message from A, decrypts it by using its private key and learns the secret key decided by A for the child SA;

- the child SA using the agreed secret key can be opened from A to B.

Third parties may observe the traffic exchanged between A and B and guess the secret keys after a while, by performing brute-force attacks or by analyzing statistical information deduced from the traffic. Internet Security Association Key Management Protocol (ISAKMP) is a sub-protocol of IKE used to periodically renegotiate the secret keys in a secure way, so that third parties do not have enough time to guess them.

AH

The Authentication Header (AH) guarantees connectionless integrity and data origin authentication for IP packets: it authenticates the whole packet, except the fields which are changed on passing from one hop to another (e.g. 'Hop limit' field), guaranteeing that no one has tampered with the contents of the packet.

AH has problems dealing with NATs, because it also authenticates the addresses and the ports.

The Authentication Header has the following format:

| 8 | 16 | 32 |

| Next Header | Payload Length | (reserved) |

| SPI | ||

| Sequence Number | ||

| Authentication Data ::: | ||

where the fields are:

- Next Header field (8 bits): it specifies the next encapsulated protocol;

- Payload Length field (8 bits): it specifies the Authentication Header length in 32-bit words − 2 (it may be cleared to zero);

- Security Parameters Index (SPI) field (32 bits): it identifies the Security Association for this datagram (if cleared to zero, a Security Association does not exist; values in the range 1 to 255 are reserved);

- Sequence Number field (32 bits): it contains a monotonically increasing counter value;

- Authentication Data field (variable length): it contains a message digest which summarizes the contents of the packet by using a secret key: anyone who wants to change the contents of the packet has to know the key in order to recompute the message digest (similar to the error detection field).
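The idea of a keyed digest that ignores the fields changed hop by hop can be sketched in Python. This is only an illustration: the packet is reduced to a dictionary with four fields, and HMAC-SHA-256 is an assumed choice, since IPsec leaves the algorithm to the Security Association. All names are hypothetical.

```python
import hashlib
import hmac

def authentication_data(key, packet):
    """Keyed digest over the packet, with the mutable 'Hop limit' treated as zero."""
    immutable = dict(packet, hop_limit=0)  # fields changed hop by hop are zeroed
    message = (bytes([immutable["hop_limit"]]) + immutable["source"]
               + immutable["destination"] + immutable["payload"])
    return hmac.new(key, message, hashlib.sha256).digest()

def is_authentic(key, packet, received_digest):
    """Recompute the digest at the receiver and compare it in constant time."""
    return hmac.compare_digest(authentication_data(key, packet), received_digest)

key = b"secret key of the SA"  # hypothetical shared key
sent = {"hop_limit": 64, "source": b"A" * 16, "destination": b"B" * 16,
        "payload": b"hello"}
digest = authentication_data(key, sent)
forwarded = dict(sent, hop_limit=63)      # a router decremented the hop limit
tampered = dict(sent, payload=b"HELLO")   # somebody changed the payload
print(is_authentic(key, forwarded, digest), is_authentic(key, tampered, digest))
```

Because the source and destination addresses are part of the digest, the sketch also shows why address translation along the path breaks the verification.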

ESP

The Encapsulating Security Payload (ESP) header provides origin authenticity, integrity and confidentiality protection for IP packets: it authenticates and encrypts the packet payload for data privacy.

Though ESP can authenticate, it does not perform the same functionality of AH: ESP does not authenticate the whole IPv6 packet.

The ESP header is always the last one in the header chain and it has the following format:

| 16 | 24 | 32 | ||

| SPI | authenticated | |||

| Sequence Number | ||||

| Payload Data ::: | encrypted | |||

| Padding ::: | ||||

| Padding Length | Next Header | |||

| Authentication Data ::: | ||||

where the fields are:

- Security Parameters Index (SPI) field (32 bits): it identifies the Security Association for this datagram;

- Sequence Number field (unsigned 32 bits): it contains a monotonically increasing counter value.

- The 'Sequence Number' field is mandatory for the sender and it is always present even if the receiver does not select to enable the anti-replay service for a specific SA, but processing of this field is at the discretion of the receiver;

- Payload Data field (variable length): it contains the data described by the 'Next header' field;

- Padding field (variable length, 0 to 255 bytes): padding may be required, irrespective of encryption algorithm requirements, to ensure that the resulting ciphertext terminates on a 4-byte boundary;

- Padding Length field (8 bits): it specifies the size of the 'Padding' field (in bytes);

- Next Header field (8 bits): an IPv4/IPv6 protocol number describing the format of the 'Payload Data' field;

- Authentication Data field (variable length): it contains an Integrity Check Value (ICV) computed over the ESP packet minus the 'Authentication Data' field.

- The 'Authentication Data' field length is specified by the selected authentication function. The 'Authentication Data' field is optional: it is included only if the authentication service has been selected for the SA at issue. The authentication algorithm specification must specify the ICV length and the comparison rules and processing steps for validation. Note that the 'Authentication Data' field is not encrypted.
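The alignment role of the 'Padding' field can be worked out with a small Python sketch. It assumes that the data to be aligned is made of the payload, the padding and the two one-byte trailer fields ('Padding Length' and 'Next Header'), and it ignores any additional padding an encryption algorithm may require; the function name is hypothetical.

```python
def esp_padding_length(payload_length, boundary=4):
    """Number of padding bytes so that payload + padding + trailer is aligned."""
    trailer = 2  # 'Padding Length' and 'Next Header' fields, 1 byte each
    return (-(payload_length + trailer)) % boundary

for size in (10, 11, 12, 13):
    print(size, esp_padding_length(size))
```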

Two usage modes are possible for ESP (optionally in combination with AH):

- transport mode: ESP does not encrypt the IPv6 header → anybody in the middle is able to see the source and destination IP addresses in the IPv6 header:

| IPv6 header | other extension headers | ESP header (for encryption) | TCP/UDP header | payload | ESP authentication |

| encrypted data | |||||

| authenticated data | |||||

- tunnel mode: the IPv6 packet is encapsulated into another IPv6 packet having ESP → the IPv6 header of the original packet, containing the source and destination IP addresses, is encrypted and nobody can see it:

| IPv6 header | ESP header (for encryption) | IPv6 header | other extension headers | TCP/UDP header | payload | ESP authentication |

| encrypted data | ||||||

| authenticated data | ||||||

ICMPv6

Internet Control Message Protocol version 6 (ICMPv6) is an integral part of the IPv6 standard, and it in turn integrates and expands the functionalities of the ARP and IGMP protocols.

All the ICMPv6 messages are put just after the extension headers in the packet, and they have the same generic format:

| 8 | 16 | 32 |

| Type | Code | Checksum |

| Message Body ::: | ||

where the 'Type' field identifies the type of ICMPv6 message:

- diagnostics messages: like in ICMPv4, they allow to report errors or problems in the network:

- 1 = Destination Unreachable

- 2 = Packet Too Big

- 3 = Time Exceeded

- 4 = Parameter Problem

- messages used by the ping command:

- 128 = Echo Request

- 129 = Echo Reply

- Multicast Listener Discovery messages: they expand the IGMP functionality:

- 130 = Multicast Listener Query

- 131 = Multicast Listener Report

- 132 = Multicast Listener Done

- Neighbor Discovery messages: they expand the ARP functionality:

- 133 = Router Solicitation

- 134 = Router Advertisement

- 135 = Neighbor Solicitation

- 136 = Neighbor Advertisement

- 137 = Redirect
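As a small illustration of the generic format, the following Python sketch reads the first four bytes of an ICMPv6 message and looks up the type in the list above. The function name is hypothetical and the checksum is only extracted, not verified.

```python
import struct

ICMPV6_TYPES = {
    1: "Destination Unreachable", 2: "Packet Too Big", 3: "Time Exceeded",
    4: "Parameter Problem", 128: "Echo Request", 129: "Echo Reply",
    130: "Multicast Listener Query", 131: "Multicast Listener Report",
    132: "Multicast Listener Done", 133: "Router Solicitation",
    134: "Router Advertisement", 135: "Neighbor Solicitation",
    136: "Neighbor Advertisement", 137: "Redirect",
}

def parse_icmpv6(message):
    """Return (type name, code, checksum, message body)."""
    msg_type, code, checksum = struct.unpack("!BBH", message[:4])
    return ICMPV6_TYPES.get(msg_type, "unknown"), code, checksum, message[4:]

print(parse_icmpv6(bytes([128, 0, 0x12, 0x34]) + b"ping"))
```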

Packet Too Big

When a router receives a packet that is too large for the next link, it discards the packet and sends back an ICMPv6 message of type Packet Too Big in order to notify the sender of the allowed Maximum Transmission Unit (MTU) size and force it to send the packet again (together with the following packets) with a size not exceeding the MTU specified by the router. This technique, called Path MTU Discovery, has the goal of avoiding fragmentation as much as possible.
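The iterative nature of Path MTU Discovery can be simulated with a few lines of Python. The path is modelled as a list of link MTUs (hypothetical values), and each loop iteration corresponds to one transmission attempt by the sender.

```python
def path_mtu_discovery(link_mtus, initial_size):
    """Return (final packet size, number of 'Packet Too Big' messages received)."""
    size, too_big = initial_size, 0
    while True:
        for mtu in link_mtus:
            if size > mtu:
                # The router in front of this link discards the packet and
                # reports the allowed MTU; the sender retries with that size
                size = mtu
                too_big += 1
                break
        else:
            return size, too_big  # the packet crossed every link

print(path_mtu_discovery([1500, 1400, 1280], 1500))
```

The sender may need several attempts, one for each link whose MTU is smaller than all the previous ones.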

Multicast Listener Discovery

Multicast Listener Discovery is the component in ICMPv6 which expands the functionality of the IPv4 IGMP protocol to manage multicast group memberships:

- Multicast Listener Query:

- General Query: the router asks hosts if they are interested in joining any multicast group;

- Multicast Address Specific Query: the router asks hosts if they are interested in joining a particular multicast group;

- Multicast Listener Report: the host notifies the router it wants to join a particular multicast group to receive all the multicast packets addressed to the multicast address corresponding to the specified multicast group;

- Multicast Listener Done: the host notifies the router it wants to stop receiving the multicast packets for a particular multicast group.

Neighbor Discovery

Neighbor Discovery is the component in ICMPv6 which expands the functionality of the IPv4 ARP protocol:

- Neighbor Solicitation: the host sends a multicast packet having, as the target IPv6 address, the solicited node multicast address corresponding to the IPv6 address of which it wants to learn the MAC address;

- Neighbor Advertisement: the host having the specified IPv6 address sends back its MAC address;

- Router Solicitation: the host sends a multicast packet to solicit the router to send back a 'Router Advertisement' message containing details for the given network (such as the prefix associated to the link, the autoconfiguration/DHCP flag, and the default router);

- Router Advertisement: the router advertises its presence within the link, reporting the prefix(es) associated to the link and a flag which states if the hosts should auto-configure themselves or query a DHCP server.

'Neighbor Discovery' ICMPv6 messages are used to autoconfigure the IPv6 addresses for a host connecting to a link: firstly the host has to get a link local address in order to be able to contact the other hosts within the link, then it has to get a global address in order to be able to exit the link and access the Internet by a globally unique address.
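The solicited node multicast address used by 'Neighbor Solicitation' messages can be computed with a short Python sketch. The rule applied here (prefix `ff02::1:ff00:0/104` followed by the last 24 bits of the unicast address) comes from the IPv6 addressing architecture rather than from the text above, and the function name is hypothetical.

```python
import ipaddress

def solicited_node_multicast(address):
    """Map a unicast IPv6 address to its solicited node multicast address."""
    low24 = int(ipaddress.IPv6Address(address)) & 0xFFFFFF
    base = int(ipaddress.IPv6Address("ff02::1:ff00:0"))
    return ipaddress.IPv6Address(base | low24)

target = solicited_node_multicast("fe80::237:ff:fe02:a7fd")
print(target, target.is_multicast)
```

Only the hosts whose addresses share the same last 24 bits listen to this group, which is why the mechanism disturbs fewer hosts than an ARP broadcast.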

Link local address autoconfiguration process

The link local address is autoconfigured by using 'Neighbor Solicitation' and 'Neighbor Advertisement' ICMPv6 messages:

- the host generates by itself an IPv6 address candidate to be its link local address:

- prefix: it is always 'FE80::';

- interface identifier: it can be generated either based on MAC address (EUI-64 format) or randomly for privacy reasons (traceability);

- the host sends via multicast a 'Neighbor Solicitation' message to the solicited node multicast address corresponding to the chosen candidate link local address, specifying as target IPv6 address its self-generated address and asking if a host whose link local address is the same as the specified IPv6 address exists in the link (Duplicate Address Detection);

- if a host having the sender's link local address already exists in the link, it sends back a 'Neighbor Advertisement' message to the sender, which will have to generate randomly another candidate address and send via multicast another 'Neighbor Solicitation' message;

- if no one replies, the address is unique within the link and the host is able to contact every other host within the same link by using its link local address, but it is not able to access the Internet yet because it needs a global address.
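The steps above can be sketched in Python. The EUI-64 construction shown here (flipping the universal/local bit of the MAC address and inserting `FF:FE` in the middle) is the usual rule and is stated as an assumption, since the text only names the format. Duplicate Address Detection is reduced to a membership test on a set of addresses already present in the link; all function names and the MAC address are hypothetical.

```python
import ipaddress

def eui64_interface_id(mac):
    """Derive the 64-bit interface identifier from a 48-bit MAC address."""
    octets = bytearray(int(part, 16) for part in mac.split(":"))
    octets[0] ^= 0x02  # flip the universal/local bit
    return bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])

def link_local_candidate(mac):
    """Combine the FE80:: prefix with the EUI-64 interface identifier."""
    prefix = bytes.fromhex("fe80") + bytes(6)
    return ipaddress.IPv6Address(prefix + eui64_interface_id(mac))

def is_unique(candidate, addresses_on_link):
    """Simulated Duplicate Address Detection: True if nobody would reply."""
    return candidate not in addresses_on_link

candidate = link_local_candidate("00:37:00:02:a7:fd")
print(candidate, is_unique(candidate, set()))
```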

Global address autoconfiguration process

The global address is autoconfigured by using 'Router Solicitation', 'Router Advertisement', 'Neighbor Solicitation' and 'Neighbor Advertisement' ICMPv6 messages:

- the host sends via multicast a 'Router Solicitation' message to solicit the router to send back a 'Router Advertisement' message containing the prefix associated to the link;[2]

- the router sends back a 'Router Advertisement' message containing the two flags 'Managed Address Configuration' (M) and 'Other configuration' (O):

- M = 1: the host has to contact the DHCP server for the prefix of the link and the other network configuration parameters (such as the DNS address), without caring of 'Router Advertisement' messages from the router (stateful configuration);

- M = 0: the host has to look at the 'O' flag:

- O = 1: the host can take the prefix of the link from the 'Router Advertisement' message, but it still has to contact the DHCP server for the other network configuration parameters (such as the DNS address);

- O = 0: the host can take the prefix of the link from the 'Router Advertisement' message, and no other configuration information is available from the DHCP server (stateless configuration) → either the other network configuration parameters (such as the DNS address) will have to be configured by hand on the host, or the host can get the DNS address via IPv4 (see Migration to IPv6, 'Migrating DNSes');

- the host generates by itself an IPv6 address candidate to be its global address:

- prefix: it is equal to the prefix of the link, taken either from the 'Router Advertisement' message or by contacting the DHCP server;

- interface identifier: it can be generated either based on MAC address (EUI-64 format) or randomly for privacy reasons (traceability);

- the host sends via multicast a 'Neighbor Solicitation' message to all the hosts within the link, specifying as target IPv6 address its self-generated address and asking if a host whose global address is the same as the specified IPv6 address exists in the link (Duplicate Address Detection);

- if a host having the sender's global address already exists in the link, it sends back a 'Neighbor Advertisement' message to the sender, which will have to generate randomly another candidate address and send via multicast another 'Neighbor Solicitation' message;

- if no one replies, the address is globally unique and the host is able to access the Internet by using its global address.
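The decision driven by the 'M' and 'O' flags, as described in the list above, can be summarized by a small Python function. The function name and the returned labels are illustrative.

```python
def configuration_sources(managed, other):
    """Return where the host gets the prefix and the other parameters from."""
    if managed:      # M = 1: stateful configuration
        return {"prefix": "DHCP", "other parameters": "DHCP"}
    if other:        # M = 0, O = 1
        return {"prefix": "Router Advertisement", "other parameters": "DHCP"}
    # M = 0, O = 0: stateless configuration
    return {"prefix": "Router Advertisement", "other parameters": "manual"}

for m, o in ((1, 0), (0, 1), (0, 0)):
    print(m, o, configuration_sources(bool(m), bool(o)))
```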

Another implementation proposed by Microsoft consists in the possibility for the host to contact the DNS server without knowing its address: the host sends packets to a fixed anycast address, and the network takes care of delivering the packet to the DNS server. However this implementation is not really used:

- implementations for anycast address management are rare;

- this solution is not supported by GNU/Linux operating system.

Autoconfiguration is based on the MAC address, so if the network card breaks and needs to be replaced the host will have to change its address, but the caches (e.g. the DNS cache) can not be updated immediately → static configuration is still possible, especially for fixed machines (e.g. servers for public websites) which need to avoid changing their addresses in order to remain reachable as continuously as possible.

References

- ↑ For simplicity we suppose that a single secret key is required for the SA.

- ↑ This step is not mandatory if the router is configured to periodically multicast 'Router advertisement' messages.

Migration to IPv6

Introduction

During the migration phase, hosts should gradually become able to reach IPv6 destinations while remaining able to reach IPv4 destinations. Migrating all network devices is a necessary but not sufficient condition: the user needs to make them work together by making a new addressing plan for the whole network.

Migrating hosts

Migrating applications

Introducing IPv6 support into applications requires changes to the source code:

- servers: the running process on a server should open two threads, one listening on the IPv4 socket and another one listening on the IPv6 socket, in order to be able to serve both IPv4 and IPv6 requests;

- clients: applications such as web browsers should be able to print in output and get in input addresses in the new format.

Migrating operating systems

Applications rely mostly on the operating system libraries, which can introduce IPv6 support by adopting the dual stack approach:

- without dual layer: the operating system processes independently IPv4 and IPv6 addresses → the software should be able to manage both IPv4 and IPv6 addresses;

- with dual layer: the operating system is able to convert an IPv4 address into an IPv4-mapped IPv6 address → the software can just support IPv6 addresses without caring of IPv4 addresses.

The variant with dual layer is the most used one because it moves the complexity to the core of the operating system.
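The conversion performed by the dual layer can be sketched in Python with the standard `ipaddress` module. An IPv4-mapped IPv6 address is assumed here to have the usual form `::ffff:a.b.c.d` (80 zero bits, 16 one bits, then the IPv4 address); the function name and the sample address from the 192.0.2.0/24 documentation range are illustrative.

```python
import ipaddress

def to_ipv4_mapped(ipv4):
    """Build the IPv4-mapped IPv6 address for a given IPv4 address."""
    packed = bytes(10) + b"\xff\xff" + ipaddress.IPv4Address(ipv4).packed
    return ipaddress.IPv6Address(packed)

mapped = to_ipv4_mapped("192.0.2.1")
# ipv4_mapped returns the embedded IPv4 address, or None for other IPv6 addresses
print(mapped.ipv4_mapped, ipaddress.IPv6Address("2001:db8::1").ipv4_mapped)
```

With this representation an application can store and compare every peer as an IPv6 address, which is the simplification the dual layer offers to software.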

Migrating network devices

Migrating switches

Although in theory switches should not be affected at all by changes at layer 3 because they work up to layer 2, there could be some trouble with additional functions: for example IGMP snooping, a functionality used to filter incoming multicast packets, needs to look inside the packet → as the packet format and fields change, the switch can not recognize the multicast IPv6 packets and it discards them.

Migrating routers

Nowadays routers are mostly ready for IPv6, even if performance in IPv6 is still worse than in IPv4 because of lack of experience and lower traffic demand.

Typically routers supporting IPv6 adopt the dual stack 'ships in the night'-like approach: IPv4 and IPv6 are supported by two independent stacks for transport layer → this requires the complete duplication of all components: routing protocols, routing tables, access lists, etc.

Routing tables

Routing in IPv6 is performed in the same way as IPv4 but it requires two distinct routing tables, one for IPv4 routes and another for IPv6 routes. IPv6 routing tables can store several types of entries, including:

- indirect entries (O/S codes): they specify the addresses, typically link local, of the interfaces of the next-hop routers to which to send packets addressed towards remote links;

- direct entries: they specify the interfaces of the router itself through which to send packets addressed towards local links:

- connected networks (C code): they specify the prefixes of the local links;

- interface addresses (L code): they specify the interface identifiers in the local links.

Routing protocols

Routing protocols supporting IPv6 can adopt two approaches:

- integrated routing (e.g. BGP): the protocol allows to exchange both IPv4 and IPv6 routing information at the same time → IPv4 and IPv6 addresses belonging to the same destination can be transported via a single message → higher efficiency;

- ships in the night (e.g. RIP, OSPF): the protocol allows to exchange only IPv6 routing information → given a destination, a message needs to be exchanged for its IPv4 address and another message for its IPv6 address, and the messages are completely independent of each other → higher flexibility: two different protocols can be used, one for IPv4 routing information and another for IPv6 routing information.

Migrating DNSes

DNSes supporting IPv6 can map two IP addresses to the same alias: an IPv4 address and an IPv6 one → a public destination can be reachable via either IPv4 or IPv6.

DNSes supporting IPv6 can return IPv6 addresses not only via IPv6, but also via IPv4: DNS messages in fact belong to the application layer, so the transport layer used to forward the DNS queries and replies does not matter. Queries for IPv6 addresses (AAAA records) can be performed, for example in nslookup, by the following command: set q=aaaa.

A company may decide to offer access to its public website also via IPv6. However, presently most traffic is via IPv4, so generally the service for IPv4 traffic is more reliable in terms of performance and fault tolerance than the one for IPv6 traffic. Therefore the company, especially if it bases its business on its website, does not want a user connecting via IPv6 to move to a competitor's website because of performance problems. A possible solution is to perform some preliminary assessments to test the performance of the connectivity between the user and the company server, and to implement an additional mechanism into DNSes: they should be able to look at the source address of the DNS query, and return either just the IPv4 address if no assessments have been performed for the connectivity, or both the IPv4 and IPv6 addresses if the performance is good enough.

Tunneling solutions

The network will not be IPv6-compatible from day zero → IPv6 traffic may need to traverse IPv4-only network portions. Network-oriented tunneling solutions enable connectivity among IPv6 networks even if they are connected through an IPv4-only infrastructure, and consist in encapsulating the IPv6 packet inside an IPv4 header just for transporting it along the tunnel:

The size for the tunneled packet, including the 20-byte-long IPv4 header, must not exceed the maximum size for IPv4 packets → two solutions are possible:

- fragmentation: the routers should fragment the IPv4 packet before sending it into the tunnel → fragmentation is deprecated due to performance reasons;

- smaller IPv6 packets: the hosts should generate IPv6 packets with a smaller MTU size to take into account the extra-size due to the IPv4 header insertion → routers can specify the allowed MTU size through the 'Router Advertisement' ICMPv6 messages.
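The size constraint can be made concrete with a short Python calculation. It assumes an IPv4 header without options (20 bytes, as stated above); the function names and the 1500-byte MTU are illustrative.

```python
IPV4_HEADER_LENGTH = 20  # bytes, IPv4 header without options

def ipv6_mtu_for_tunnel(ipv4_mtu):
    """Largest IPv6 packet that fits in one IPv4 packet along the tunnel."""
    return ipv4_mtu - IPV4_HEADER_LENGTH

def needs_fragmentation(ipv6_packet_length, ipv4_mtu):
    """True if the encapsulated packet would exceed the IPv4 MTU."""
    return ipv6_packet_length + IPV4_HEADER_LENGTH > ipv4_mtu

print(ipv6_mtu_for_tunnel(1500), needs_fragmentation(1500, 1500))
```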

Host-oriented tunneling solutions

Host-oriented tunneling solutions are more plug-and-play for hosts, but they are not professional solutions and do not solve the problem of IPv4 address shortage because every host still needs to have an IPv4 address. Solutions of this kind are:

- use of IPv6 IPv4-compatible addresses, tunnel termination on host or router;

- 6over4;

- ISATAP.

Use of IPv6 IPv4-compatible addresses

These solutions assume that dual-stack hosts, when they need to contact an IPv4 destination, send IPv6 packets to an IPv4-compatible IPv6 address, which is built with the 96 most significant bits set to zero and the remaining 32 bits equal to the destination IPv4 address. This IPv6 packet is then encapsulated in an IPv4 packet, whose destination depends on whether the tunnel ends on the destination host or on a dual-stack router:

- end-to-end termination: the pseudo-interface on the dual-stack host performs the encapsulation in an IPv4 packet destined for the host to be contacted;

- dual-stack router termination: the pseudo-interface on the host sends the packets destined for a host to the IPv4 address of the dual-stack router, therefore:

- an IPv6 IPv4-compatible address is generated for the destination, as before;

- the IPv6 packet is encapsulated into an IPv4 one destined for the dual-stack router;

- the dual-stack router decapsulates the packet and sends it to the destination host.
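
As a minimal sketch of the address construction and of the two termination options, using Python's standard `ipaddress` module. The function names and the example addresses are hypothetical and only serve the illustration.

```python
import ipaddress
from typing import Optional

def ipv4_compatible(ipv4: str) -> ipaddress.IPv6Address:
    """96 zero bits followed by the 32 bits of the IPv4 address."""
    return ipaddress.IPv6Address(int(ipaddress.IPv4Address(ipv4)))

def outer_ipv4_destination(dest_ipv4: str, router_ipv4: Optional[str] = None) -> str:
    """Destination of the encapsulating IPv4 packet.

    End-to-end termination: the destination host itself.
    Dual-stack router termination: the router, when one is configured.
    """
    return router_ipv4 if router_ipv4 is not None else dest_ipv4

print(ipv4_compatible("192.0.2.1"))                            # ::c000:201
print(outer_ipv4_destination("192.0.2.1"))                     # 192.0.2.1
print(outer_ipv4_destination("192.0.2.1", "198.51.100.254"))   # 198.51.100.254
```

In both cases the inner IPv6 destination is the same IPv4-compatible address; only the outer IPv4 destination changes.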

6over4

The idea is to emulate, through IPv4, a local network that supports multicast. When two IPv6 hosts are connected through an underlying Ethernet network, neighbor discovery is used, relying on the fact that Ethernet has mechanisms for broadcasting; in this solution we reason as if IPv4 were the lower-level protocol, and we change neighbor discovery so that it finds IPv4 addresses instead of MAC addresses. This approach can be generalized to the case in which we want to connect not individual hosts, but clouds of IPv6 networks through dual-stack routers that communicate over an IPv4 network. In this case, in addition to neighbor discovery, a modified version of router discovery can be used: the host sends a router solicitation to discover the IPv4 addresses of the routers that are connected to its IPv4 network and give access to the various IPv6 networks. From the router advertisement the host can in fact learn which IPv6 networks can be reached through that router.

The problem with this solution is the use of IPv4 multicast, which is usually disabled in networks involving different providers. This solution can be used when you have a whole network under your control: for this reason it cannot be used to migrate the global network from IPv4 to IPv6.

6over4 Neighbor discovery

As proposed in the RFC, IPv6 addresses are mapped to IPv4 addresses: in practice the IPv4 address is used as the interface identifier of the destination's IPv6 address. This would make the mechanism illustrated so far unnecessary, because the host could tunnel directly, without needing neighbor discovery to learn the IPv4 address. This obviously does not hold when the IPv6 address is not built from the IPv4 one, so a more general mechanism is still required to contact a router. Assuming, therefore, that only the IPv6 address is known, the neighbor solicitation is sent to the solicited-node multicast address (for example, if the IPv6 address is fe80::101:101, it is sent to ff02::1:ff01:101) on the 6over4 IPv4 multicast network at the address 239.192.x.y, where x and y are the last 16 bits of the IPv6 address (in the previous example, 239.192.1.1).
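
The two mappings used in the example can be reproduced with a short sketch based on Python's standard `ipaddress` module. The function names are hypothetical; the solicited-node address keeps the last 24 bits of the target address, and the IPv4 group keeps the last 16 bits, as described above.

```python
import ipaddress

def solicited_node_multicast(ipv6: str) -> ipaddress.IPv6Address:
    """ff02::1:ffXX:XXXX, where XX:XXXX are the low 24 bits of the target."""
    low24 = int(ipaddress.IPv6Address(ipv6)) & 0xFFFFFF
    base = int(ipaddress.IPv6Address("ff02::1:ff00:0"))
    return ipaddress.IPv6Address(base | low24)

def sixover4_ipv4_group(ipv6_multicast: ipaddress.IPv6Address) -> ipaddress.IPv4Address:
    """239.192.x.y, where x and y are the low 16 bits of the IPv6 address."""
    low16 = int(ipv6_multicast) & 0xFFFF
    base = int(ipaddress.IPv4Address("239.192.0.0"))
    return ipaddress.IPv4Address(base | low16)

target = "fe80::101:101"
group6 = solicited_node_multicast(target)
print(group6)                       # ff02::1:ff01:101
print(sixover4_ipv4_group(group6))  # 239.192.1.1
```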

ISATAP

The idea is similar to that of 6over4, that is, to use the IPv4 network as a physical link to reach IPv6 destinations, but without requiring multicast support. In the absence of neighbor discovery mechanisms, the IPv4 address of a destination using ISATAP is embedded in its IPv6 address, more precisely in the interface identifier, whose format is 0000:5efe:x:y, where x and y are the 32 bits of the IPv4 address. As you can see, this solution does not address the problem for which IPv6 was introduced, that is, the scarcity of IPv4 addresses. However, this solution is more useful in the scenario of an IPv4 link that connects not hosts, but routers that have IPv6 clouds at the border. In this case a host within the IPv4 network that wants to communicate in IPv6 with a host belonging to a cloud must be equipped with a Potential Router List (PRL). The issues that arise at this point are:

- How to get the PRL?

- Two different solutions exist: the former, which is proprietary, is based on the use of DHCP; the latter, which is standard, is based on the use of DNS. In the latter, a DNS query is made for a name with the format isatap.dominio.it (that is, isatap followed by the domain name); the answer provides the PRL of the IPv6 routers connected to the IPv4 network of the domain specified in the query.

- Which router should the packets for the IPv6 destination be sent to?

- A unicast router discovery is performed towards each of the routers in the PRL, in order to get a reply in the form of a router advertisement. Remember, in fact, that in the router advertisement routers can also announce the list of IPv6 networks that can be reached through them (see the L=0 flag in the Prefix Information Option of the ICMPv6 Router Advertisement).
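
The ISATAP interface identifier format described in this section (0000:5efe followed by the IPv4 address) can be illustrated with a minimal sketch. The function names and the example addresses are hypothetical, and the sketch follows the format exactly as stated in the text.

```python
import ipaddress

ISATAP_MARKER = 0x00005EFE  # first 32 bits of the interface identifier

def isatap_address(prefix: str, ipv4: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the interface identifier 0000:5efe:x:y."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("an interface identifier needs a /64 prefix")
    iid = (ISATAP_MARKER << 32) | int(ipaddress.IPv4Address(ipv4))
    return ipaddress.IPv6Address(int(net.network_address) | iid)

def embedded_ipv4(isatap_ipv6: str) -> ipaddress.IPv4Address:
    """Recover the IPv4 tunnel endpoint from the low 32 bits."""
    return ipaddress.IPv4Address(int(ipaddress.IPv6Address(isatap_ipv6)) & 0xFFFFFFFF)

addr = isatap_address("fe80::/64", "192.0.2.1")
print(addr)                      # fe80::5efe:c000:201
print(embedded_ipv4(str(addr)))  # 192.0.2.1
```

Because the IPv4 address can be read back from the IPv6 address, no multicast-based neighbor discovery is needed to find the tunnel endpoint.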

Network-oriented tunneling solutions

Typically the network-oriented tunneling solutions require manual configuration, and encapsulation can be based on IPv6 in IPv4 (protocol type = 41), GRE, IPsec, etc.

6to4

The biggest step forward from the previous solutions comes from the observation that in the new scenario it is a whole IPv6 network, no longer a single host, that needs an IPv4 address to get out of the IPv6 cloud. The mapping between the two addresses is therefore done in the IPv6 prefix, not in the interface identifier: each IPv6 network is assigned a special prefix that includes the IPv4 address assigned to the interface of the dual-stack router that faces the cloud. The prefix 2002::/16 identifies the IPv6 stations that are using 6to4: the following 32 bits contain the IPv4 address, and 16 more bits remain available to number different subnets, while the interface identifier is obtained as in the other IPv6 use cases. In this solution there is also a router with a special role, the 6to4 Relay, which must be the default gateway of the 6to4 routers, so that packets whose destination does not have the 6to4 format just seen are forwarded to the global IPv6 network. This router has the address 192.88.99.1, which is an anycast address: the designers of 6to4 chose it with the scenario of multiple 6to4 Relays in the same network in mind, which would otherwise require the use of different addresses. Since routing protocols deliver packets sent to an anycast address to the nearest router announcing it, the same address can be used for all the relays, which also provides load balancing.

Practical example

Let's assume that there are two IPv6 clouds connected to an IPv4 cloud, and that the dual-stack routers have, on the interfaces connected to the IPv4 cloud, the addresses 192.1.2.3 for network A and 9.254.2.252 for network B. Let's also suppose that a host a belonging to network A wants to send a packet to a host b belonging to network B. From this configuration and from what has been said, it follows that the hosts in network A will have addresses in 2002:c001:0203::/48 and the ones in network B in 2002:09fe:02fc::/48. The IPv6 packet from a to b will be encapsulated in an IPv4 packet whose destination address is 9.254.2.252, obtained from the prefix of the destination IPv6 address: when the packet arrives at that router, it will be decapsulated and forwarded according to the IPv6 addressing plan of the cloud containing network B.
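
The prefixes in this example can be checked with a short sketch based on Python's standard `ipaddress` module. The function name `sixtofour_prefix` and the host address of b are hypothetical; the `sixtofour` property is part of the standard module and returns the IPv4 address embedded in a 2002::/16 address.

```python
import ipaddress

def sixtofour_prefix(router_ipv4: str) -> ipaddress.IPv6Network:
    """2002::/16 followed by the 32 bits of the router's IPv4 address."""
    v4 = int(ipaddress.IPv4Address(router_ipv4))
    prefix = (0x2002 << 112) | (v4 << 80)
    return ipaddress.IPv6Network((prefix, 48))

print(sixtofour_prefix("192.1.2.3"))    # 2002:c001:203::/48
print(sixtofour_prefix("9.254.2.252"))  # 2002:9fe:2fc::/48

# Hypothetical address of host b inside network B (subnet 1, interface ::b).
host_b = ipaddress.IPv6Address("2002:9fe:2fc:1::b")
# Outer IPv4 destination, derived from the IPv6 prefix of the destination.
print(host_b.sixtofour)                 # 9.254.2.252
```

Python prints the prefixes without leading zeros in each group, so 2002:c001:203::/48 is the same prefix as 2002:c001:0203::/48.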

Teredo

It is very similar to 6to4, except that the IPv6 packet is encapsulated inside a UDP segment carried in an IPv4 packet, instead of directly in IPv4. This is done to overcome a limitation of 6to4, namely NAT traversal: since in 6to4 there is no layer-4 (transport) segment inside the encapsulating IPv4 packet, a NAT that relies on port numbers cannot work.

Tunnel broker

The problem with the 6to4 solution is that it is not generic enough: you are tied to the use of 2002::/16 addresses and cannot use ordinary global unicast addresses. In the tunnel broker solution, since it is no longer possible to deduce from the IPv6 prefix which endpoint the packet should be sent to, a server is used which, given a generic IPv6 address, provides the address of the tunnel endpoint to be contacted. The routers implementing the tunnel broker are called tunnel servers, while the servers providing the mapping are called tunnel broker servers. The tunnels are built as in 6to4, that is, IPv6 inside IPv4; if a NAT has to be crossed, Teredo's approach can also be used, encapsulating IPv6 inside UDP and then inside IPv4.

The tunnel broker server needs to be configured: Tunnel Information Control (TIC) is used to forward information about the networks reachable by a given tunnel server, from the tunnel server being configured to the tunnel broker server. The Tunnel Setup Protocol (TSP) is instead used to request information from the tunnel broker server. Again, you can have a default gateway for the global IPv6 network. In summary, a router with this configuration, when a packet arrives, can:

- forward it directly if it matches with an entry in the routing table (classic situation);

- ask the tunnel broker server to see if it is an address for which tunneling is needed;

- send it to a global IPv6 default gateway if the tunnel broker server response is negative.
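
The three-way decision above can be sketched as follows. This is a simplified illustration: the routing table, the broker mapping, the gateway address and all names are hypothetical, the broker is modeled as a local dictionary instead of a TSP exchange, and the lookup returns the first matching prefix rather than the longest one.

```python
import ipaddress

# Hypothetical data for the illustration.
ROUTING_TABLE = {ipaddress.IPv6Network("2001:db8:a::/48"): "eth1"}
BROKER_MAP = {ipaddress.IPv6Network("2001:db8:b::/48"): "198.51.100.7"}
DEFAULT_IPV6_GATEWAY = "2001:db8:ffff::1"

def lookup(table, dest):
    """Return the value of the first prefix containing dest, else None."""
    for net, value in table.items():
        if dest in net:
            return value
    return None

def forward(dest_ipv6: str) -> str:
    dest = ipaddress.IPv6Address(dest_ipv6)
    iface = lookup(ROUTING_TABLE, dest)
    if iface is not None:
        return f"forward natively on {iface}"       # classic situation
    endpoint = lookup(BROKER_MAP, dest)             # stands in for the broker query
    if endpoint is not None:
        return f"tunnel in IPv4 to {endpoint}"
    return f"send to default gateway {DEFAULT_IPV6_GATEWAY}"

print(forward("2001:db8:a::1"))
print(forward("2001:db8:b::1"))
print(forward("2001:db8:c::1"))
```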

Issues

- It is a centralized solution and therefore the tunnel broker server is a single point of failure.

- It complicates the control plane.

- If this server is used to interconnect different networks, even belonging to different providers, the problem of responsibility for its management arises.

Advantages

- It is more flexible than 6to4, because it allows the use of all global unicast addresses.

Bringing IPv6 support to the network edges

NAT-based solutions

The goal is to migrate large providers' networks, so that IPv4 and/or IPv6 clouds at the network edges can use the IPv6 backbone to interoperate. The common scenario is a user who wants to connect to an IPv4 destination through the provider's IPv6 network.

All the available options make use of NAT. This goes somewhat against the tide, as one of the goals of IPv6 was to avoid the use of NAT in networks because of the problems it brings (modification of packets in transit, problems with peer-to-peer networks, etc.). However, basing these solutions on NAT has several advantages: NATs are widely deployed, their problems and limitations are known, and the applications that may have trouble going through NATs are known; in general, the advantage is the extensive experience gained so far.

Major components

In NAT-based solutions there are three major components:

- Customer-Premises Equipment (CPE): it is the router at the customer edge just before the provider's network;

- Address Family Transition Router (AFTR): it is an IPv6 Tunnel Concentrator, that is the device at the end of an IPv6 tunnel;

- NAT44/NAT64: it is a NAT for translation from IPv4/IPv6 addresses to IPv4 addresses.

Main NAT-based solutions

- NAT64;

- Dual-Stack Lite (DS-Lite): NAT44 + 4-over-6 tunnel;

- Dual-Stack Lite Address+Port (DS-Lite A+P): DS-Lite with preconfigured port ranges;

- NAT444: CGN + CPE NAT44, that is, the case in which a home user, who gets the service from the telephone company, adds a NAT in their own home network; every packet leaving the home network undergoes two address translations;

- Carrier Grade NAT (CGN): large-scale NAT44, that is NAT used by telephone companies to map the hundreds of thousands of (users') private addresses into the limited public addresses which are available.

For the migration of large networks oriented to mobile devices, the NAT64 solution is being chosen.

In order to migrate to IPv6 while keeping IPv4 compatibility at the edges of the network, some telephone operators are planning massive migrations to DS-Lite because it is a well-tested solution and several compatible devices are already on sale.