GLSL Programming/Blender/Silhouette Enhancement

This tutorial covers the transformation of surface normal vectors. It assumes that you are familiar with alpha blending as discussed in the tutorial on transparency and with uniforms as discussed in the tutorial on shading in view space.

The objective of this tutorial is to achieve an effect that is visible in the photo to the left: the silhouettes of semitransparent objects tend to be more opaque than the rest of the object. This adds to the impression of a three-dimensional shape even without lighting. It turns out that transformed normals are crucial to obtain this effect.

Silhouettes of Smooth Surfaces

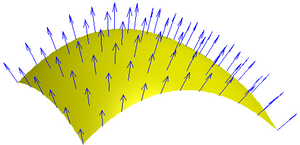

In the case of smooth surfaces, points on the surface at silhouettes are characterized by normal vectors that are parallel to the viewing plane and therefore orthogonal to the direction to the viewer. In the figure to the left, the blue normal vectors at the silhouette at the top of the figure are parallel to the viewing plane while the other normal vectors point more in the direction to the viewer (or camera). By calculating the direction to the viewer and the normal vector and testing whether they are (almost) orthogonal to each other, we can therefore test whether a point is (almost) on the silhouette.

More specifically, if V is the normalized (i.e. of length 1) direction to the viewer and N is the normalized surface normal vector, then the two vectors are orthogonal if the dot product is 0: V·N = 0. In practice, this will rarely be the case. However, if the dot product V·N is close to 0, we can assume that the point is close to a silhouette. (Since the back faces of a semitransparent object are visible too, the sign of V·N does not matter; only its absolute value |V·N| is relevant.)
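As a rough CPU-side sketch of this test, written in Python (the language of the Blender script later in this tutorial) rather than GLSL, the following function checks whether |V·N| is below a threshold. The helper names and the threshold of 0.1 are hypothetical choices; the shader in this tutorial does not use a hard threshold but the smooth opacity equation of the next section.

```python
import math

def normalize(v):
    """Return v scaled to length 1 (v must not be the zero vector)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def is_near_silhouette(view_dir, normal, threshold=0.1):
    """True if |V·N| < threshold (a hypothetical value), i.e. V and N are almost orthogonal."""
    v = normalize(view_dir)
    n = normalize(normal)
    return abs(dot(v, n)) < threshold

# In view space the camera is at the origin, so for a point in front of it
# the direction to the viewer is roughly +z.
V = (0.0, 0.0, 1.0)
print(is_near_silhouette(V, (0.0, 0.0, 1.0)))   # False: the normal faces the viewer
print(is_near_silhouette(V, (1.0, 0.0, 0.05)))  # True: the normal is almost parallel to the viewing plane
```

The absolute value makes a back-facing normal such as (0, 0, -1) count as far from the silhouette, just like a front-facing one.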

Increasing the Opacity at Silhouettes

For our effect, we should therefore increase the opacity α if the dot product V·N is close to 0. There are various ways to increase the opacity for small dot products between the direction to the viewer and the normal vector. Here is one of them (which actually has a physical model behind it, described in Section 5.1 of the paper listed under Further Reading) to compute the increased opacity α' from the regular opacity α of the material:

$$\alpha' = \min\left(1, \frac{\alpha}{|V \cdot N|}\right)$$

It always makes sense to check the extreme cases of an equation like this. Consider the case of a point close to the silhouette: V·N ≈ 0. In this case, the regular opacity α is divided by a small positive number |V·N|. (A division by zero does not raise an exception in GLSL, so the shader will not fail; the GLSL specification leaves the resulting value unspecified, but GPUs typically return infinity, which min then clamps to 1.) Therefore, whatever α is, the ratio of α and a small positive number will be larger than α. The function min takes care that the resulting opacity α' is never larger than 1.

On the other hand, for points far away from the silhouette we have V·N ≈ 1. In this case, α' ≈ min(1, α) ≈ α; i.e., the opacity of those points will not change much. This is exactly what we want. Thus, we have just checked that the equation is at least plausible.
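The equation and its two extreme cases can be checked numerically with a small Python sketch. The function name enhanced_opacity is hypothetical. Unlike GLSL, Python raises ZeroDivisionError when dividing by zero, so that case is handled explicitly by returning the limit value (1 for a positive α, 0 for α = 0).

```python
def enhanced_opacity(alpha, v_dot_n):
    """alpha' = min(1, alpha / |V·N|) for normalized V and N."""
    denominator = abs(v_dot_n)
    if denominator == 0.0:
        # Limit of alpha / x for x -> 0+: opaque for any positive alpha.
        return 1.0 if alpha > 0.0 else 0.0
    return min(1.0, alpha / denominator)

alpha = 0.3
for v_dot_n in (1.0, 0.9, 0.5, 0.3, 0.1, 0.0):
    print(f"|V·N| = {v_dot_n:.1f}  ->  alpha' = {enhanced_opacity(alpha, v_dot_n):.3f}")
```

Far from the silhouette (|V·N| = 1) the opacity stays at α = 0.3; as |V·N| approaches 0, α' grows until it is clamped at 1.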

Implementing an Equation in a Shader

In order to implement an equation like the one for α' in a shader, the first question should be: should it be implemented in the vertex shader or in the fragment shader? In some cases, the answer is clear because the implementation requires texture mapping, which is usually only available in the fragment shader. In many cases, however, there is no general answer. Implementations in vertex shaders tend to be faster (because there are usually fewer vertices than fragments) but of lower image quality (because normal vectors and other vertex attributes can change abruptly between vertices). Thus, if you are most concerned about performance, an implementation in a vertex shader is probably a better choice. On the other hand, if you are most concerned about image quality, an implementation in a fragment shader might be a better choice. The same trade-off exists between per-vertex lighting (i.e. Gouraud shading) and per-fragment lighting (i.e. Phong shading).
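To illustrate the quality difference, the following hypothetical Python sketch evaluates the opacity equation for a fragment halfway between two vertices, once per vertex (computing α' at the vertices and interpolating the opacities) and once per fragment (interpolating and renormalizing the normal, then computing α'). The normals, the constant view direction, and α = 0.3 are made-up values, and plain linear interpolation stands in for the rasterizer.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lerp(a, b, t):
    return tuple((1.0 - t) * x + t * y for x, y in zip(a, b))

def enhanced_opacity(alpha, v_dot_n):
    return min(1.0, alpha / abs(v_dot_n))

alpha = 0.3
view = (0.0, 0.0, 1.0)           # direction to the viewer, assumed constant here
n0 = normalize((0.0, 0.0, 1.0))  # vertex 0: faces the viewer
n1 = normalize((1.0, 0.0, 0.2))  # vertex 1: close to the silhouette
t = 0.5                          # fragment halfway between the two vertices

# Per-vertex: evaluate the equation at the vertices, then interpolate the opacities.
per_vertex = ((1.0 - t) * enhanced_opacity(alpha, dot(view, n0))
              + t * enhanced_opacity(alpha, dot(view, n1)))

# Per-fragment: interpolate the normal, renormalize it, then evaluate the equation.
n_fragment = normalize(lerp(n0, n1, t))
per_fragment = enhanced_opacity(alpha, dot(view, n_fragment))

print(per_vertex, per_fragment)
```

With these values the per-vertex result is 0.65 while the per-fragment result is about 0.39: in the per-vertex version, the clamped opacity of vertex 1 spreads halfway across the triangle.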

The next question is: in which coordinate system should the equation be implemented? (See “Vertex Transformations” for a description of the standard coordinate systems.) Again, there is no general answer. However, an implementation in view coordinates is often a good choice in Blender because many uniform variables are specified in view coordinates. (In other environments implementations in world coordinates are more common.)

The final question before implementing an equation is: where do we get the parameters of the equation from? The regular opacity α is the alpha component of an RGBA color; in the shader below it is a constant, but it could also be specified by game properties (see the tutorial on shading in view space). The normal vector gl_Normal is a standard vertex attribute (see the tutorial on debugging of shaders). The direction to the viewer can be computed in the vertex shader as the vector from the vertex position in view space to the camera position in view space, which is always the origin, i.e. (0, 0, 0, 1), as discussed in “Vertex Transformations”. In other words, we compute the origin minus the position in view space, which is just the negative position in view space (apart from the fourth coordinate, which should be 0 for a direction).
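A minimal Python sketch of this subtraction, assuming positions are given as homogeneous (x, y, z, w) tuples with w = 1; the function name is hypothetical:

```python
def view_direction(position_in_view_space):
    """Direction from a point to the camera in view space.

    The camera sits at the view-space origin (0, 0, 0, 1), so the direction is
    origin - position. Subtracting two points with w = 1 yields w = 0, as it
    should for a direction.
    """
    camera = (0.0, 0.0, 0.0, 1.0)
    return tuple(c - p for c, p in zip(camera, position_in_view_space))

print(view_direction((2.0, -1.0, -5.0, 1.0)))  # (-2.0, 1.0, 5.0, 0.0)
```

The x, y and z components are simply negated, which is why the shader can write -vec3(positionInViewSpace).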

Thus, we only have to transform the vertex position and the normal vector into view space before implementing the equation. The transformation matrix gl_ModelViewMatrix from object space to view space, its inverse gl_ModelViewMatrixInverse, and the transpose of the inverse gl_ModelViewMatrixInverseTranspose are all provided by Blender as discussed in the tutorial on shading in view space. The application of transformation matrices to points and normal vectors is discussed in detail in “Applying Matrix Transformations”. The basic result is that points and directions are transformed just by multiplying them with the transformation matrix, e.g.:

vec4 positionInViewSpace = gl_ModelViewMatrix * gl_Vertex;

vec3 viewDirection = -vec3(positionInViewSpace); // == vec3(0.0, 0.0, 0.0) - vec3(positionInViewSpace)
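The difference between points (w = 1) and directions (w = 0) shows up in a small Python sketch that multiplies a hypothetical model-view matrix with both: only the point is affected by the translation part of the matrix.

```python
def mat4_vec(m, v):
    """Multiply a 4x4 matrix (given as a list of rows) with a 4-component column vector."""
    return tuple(sum(m[row][col] * v[col] for col in range(4)) for row in range(4))

# Hypothetical model-view matrix: uniform scaling by 2 followed by a translation by (0, 0, -10).
model_view = [
    [2.0, 0.0, 0.0, 0.0],
    [0.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 2.0, -10.0],
    [0.0, 0.0, 0.0, 1.0],
]

vertex = (1.0, 0.0, 0.0, 1.0)     # a point (w = 1), like gl_Vertex
direction = (1.0, 0.0, 0.0, 0.0)  # a direction (w = 0)

point_in_view_space = mat4_vec(model_view, vertex)         # (2.0, 0.0, -10.0, 1.0): scaled and translated
direction_in_view_space = mat4_vec(model_view, direction)  # (2.0, 0.0, 0.0, 0.0): scaled only
```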

On the other hand, normal vectors are transformed by multiplying them with the transposed inverse transformation matrix. Since Blender provides the transposed inverse matrix gl_ModelViewMatrixInverseTranspose, it could be used to transform normals. An alternative is to multiply the normal vector from the left with the inverse matrix gl_ModelViewMatrixInverse (i.e. vector * matrix in GLSL), which is equivalent to multiplying the transposed inverse matrix with the normal vector from the right, as discussed in “Applying Matrix Transformations”. However, the best alternative is to use the 3×3 matrix gl_NormalMatrix, which is the transposed inverse of the upper-left 3×3 part of the model-view matrix:

vec3 normalInViewSpace = gl_NormalMatrix * gl_Normal;
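The need for the transposed inverse can be checked with a small Python sketch under a non-uniform scaling. The matrix m is a hypothetical upper-left 3×3 part of a model-view matrix, and normal_matrix plays the role of gl_NormalMatrix. A tangent and a normal that are orthogonal before the transformation stay orthogonal only if the normal is transformed with the transposed inverse.

```python
def mat3_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) with a 3-component column vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))

def transpose3(m):
    return [[m[c][r] for c in range(3)] for r in range(3)]

def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate (transposed cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0.0:
        raise ValueError("matrix is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Hypothetical upper-left 3x3 of a model-view matrix: x is stretched by a factor of 4.
m = [[4.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
normal_matrix = transpose3(inverse3(m))  # the role of gl_NormalMatrix

tangent = (1.0, 1.0, 0.0)  # lies in the surface
normal = (1.0, -1.0, 0.0)  # orthogonal to the tangent

t_view = mat3_vec(m, tangent)              # tangents transform like directions
n_wrong = mat3_vec(m, normal)              # normal transformed with m itself
n_right = mat3_vec(normal_matrix, normal)  # normal transformed with the transposed inverse
print(dot(t_view, n_wrong), dot(t_view, n_right))  # prints: 15.0 0.0
```

For rotations and uniform scalings the two results differ only in length, which is why the difference is easy to overlook.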

Now we have all the pieces that we need to write the shader.

Shader Code

The shader uses alpha blending as discussed in the tutorial on transparency; the blend function is set in the Python script with setBlending:

import bge

cont = bge.logic.getCurrentController()

VertexShader = """
    varying vec3 varyingNormalDirection; // normalized surface normal vector
    varying vec3 varyingViewDirection; // normalized view direction

    void main()
    {
        varyingNormalDirection =
            normalize(gl_NormalMatrix * gl_Normal);
        varyingViewDirection =
            -normalize(vec3(gl_ModelViewMatrix * gl_Vertex));

        gl_Position = gl_ModelViewProjectionMatrix * gl_Vertex;
    }
"""

FragmentShader = """
    varying vec3 varyingNormalDirection; // normalized surface normal vector
    varying vec3 varyingViewDirection; // normalized view direction

    const vec4 color = vec4(1.0, 1.0, 1.0, 0.3);

    void main()
    {
        vec3 normalDirection = normalize(varyingNormalDirection);
        vec3 viewDirection = normalize(varyingViewDirection);

        float newOpacity = min(1.0,
            color.a / abs(dot(viewDirection, normalDirection)));
        gl_FragColor = vec4(vec3(color), newOpacity);
    }
"""

mesh = cont.owner.meshes[0]
for mat in mesh.materials:
    shader = mat.getShader()
    if shader is not None:
        if not shader.isValid():
            shader.setSource(VertexShader, FragmentShader, 1)
        mat.setBlending(bge.logic.BL_SRC_ALPHA,
                        bge.logic.BL_ONE_MINUS_SRC_ALPHA)

The assignment to newOpacity is an almost literal translation of the equation α' = min(1, α / |V·N|).

Note that we normalize the varyings varyingNormalDirection and varyingViewDirection in the vertex shader (because we want to interpolate between directions without putting more or less weight on any of them) and at the beginning of the fragment shader (because the interpolation can distort our normalization to a certain degree). However, in many cases the normalization of varyingNormalDirection in the vertex shader is not necessary. Similarly, the normalization of varyingViewDirection in the fragment shader is in most cases unnecessary.
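Both effects can be reproduced in a short Python sketch. It uses plain linear interpolation as a stand-in for the rasterizer's (perspective-correct) interpolation of varyings, and the vectors are hypothetical.

```python
import math

def length(v):
    return math.sqrt(sum(c * c for c in v))

def normalize(v):
    l = length(v)
    return tuple(c / l for c in v)

def lerp(a, b, t):
    """Linear interpolation, a stand-in for the interpolation of varyings."""
    return tuple((1.0 - t) * x + t * y for x, y in zip(a, b))

def angle_from_z_deg(v):
    """Angle between v and the z axis in the x-z plane, in degrees."""
    return math.degrees(math.atan2(v[0], v[2]))

# 1) Interpolating two unit vectors yields a shorter vector: renormalize in the fragment shader.
n0 = (0.0, 0.0, 1.0)
n1 = (1.0, 0.0, 0.0)
halfway = lerp(n0, n1, 0.5)
print(length(halfway))             # about 0.707, i.e. shorter than 1
print(length(normalize(halfway)))  # 1.0 up to rounding

# 2) Interpolating vectors of different lengths favors the longer one: normalize in the vertex shader.
long_a = (0.0, 0.0, 10.0)
short_b = (1.0, 0.0, 0.0)
biased = normalize(lerp(long_a, short_b, 0.5))
balanced = normalize(lerp(normalize(long_a), normalize(short_b), 0.5))
print(angle_from_z_deg(biased), angle_from_z_deg(balanced))  # about 5.7 versus 45 degrees
```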

More Artistic Control

The fragment shader uses a constant color to specify the RGBA color of the surface. It would be easier for CG artists to modify this color if this constant were replaced by four game properties (see the tutorial on shading in view space).

Moreover, this silhouette enhancement lacks artistic control; i.e., a CG artist cannot easily create a thinner or thicker silhouette than the physical model suggests. To allow for more artistic control, you could introduce another positive floating-point game property and raise the absolute value of the dot product, |V·N|, to the power of this number before using it in the equation above. This will allow CG artists to create thinner or thicker silhouettes independently of the opacity of the base color.
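A possible Python sketch of this extension, assuming a hypothetical exponent property: for 0 < |V·N| < 1, an exponent greater than 1 makes the denominator smaller and the silhouette thicker, an exponent less than 1 makes it thinner, and an exponent of 1 reproduces the original equation. In the fragment shader, the same idea could presumably be expressed with GLSL's built-in pow() applied to abs(dot(viewDirection, normalDirection)).

```python
def enhanced_opacity(alpha, v_dot_n, exponent=1.0):
    """alpha' = min(1, alpha / |V·N|**exponent).

    exponent is a hypothetical, artist-controlled property (must be > 0).
    """
    denominator = abs(v_dot_n) ** exponent
    if denominator == 0.0:
        # Python raises on division by zero; use the limit value instead.
        return 1.0 if alpha > 0.0 else 0.0
    return min(1.0, alpha / denominator)

for exponent in (0.5, 1.0, 2.0):
    print(exponent, enhanced_opacity(0.3, 0.5, exponent))
```

Because 1 raised to any power is 1, points facing the viewer (|V·N| = 1) keep the base opacity α regardless of the exponent.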

Summary

Congratulations, you have finished this tutorial. We have discussed:

- How to find silhouettes of smooth surfaces (using the dot product of the normal vector and the view direction).

- How to enhance the opacity at those silhouettes.

- How to implement equations in shaders.

- How to transform points and normal vectors from object space to view space.

- How to compute the viewing direction (as the difference between the camera position and the vertex position).

- How to interpolate normalized directions (i.e. normalize twice: in the vertex shader and the fragment shader).

- How to provide more artistic control over the thickness of silhouettes.

Further Reading

If you still want to know more

- about object space and world space, you should read the description of the “Vertex Transformations”.

- about how to apply transformation matrices to points, directions and normal vectors, you should read “Applying Matrix Transformations”.

- about the basics of rendering transparent objects, you should read the tutorial on transparency.

- about uniform variables provided by Blender and game properties, you should read the tutorial on shading in view space.

- about the mathematics of silhouette enhancement, you could read Section 5.1 of the paper “Scale-Invariant Volume Rendering” by Martin Kraus, published at IEEE Visualization 2005, which is available online.