GNU C Compiler Internals/Print version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/GNU_C_Compiler_Internals

- Introduction

- GNU C Compiler Architecture. Compilation of an expression

- Compilation of a function

- Function calls

- Stackguard

- GEM Framework

- Function Overloading in C

- Return Address Defense

- Adding Syntactic Sugar

- Improving Code Style

- Security Enhancements

- Links

- Contributors

Introduction

What is GCC?

GNU Compiler Collection (GCC) is a free software project that includes compilers for Ada, C, C++, Fortran, Java, and Objective-C, as well as libraries for these languages. It is capable of generating executables for a variety of platforms including x86, ARM, MIPS, PowerPC, etc.

History of GCC

The homepage of GCC is http://gcc.gnu.org. The modern history of GCC begins with the GCC 2.95 release of July 31, 1999. GCC 3.0, which marks the start of the modern history of the C++ compiler, was released on June 18, 2001. Additional branches were created later. At the time of writing, the active release branches are GCC 3.4, whose latest release dates from November 30, 2005, and GCC 4.0, whose latest release dates from September 28, 2005. GCC 4.1 was released on February 28, 2006. GCC 4.2 is the development branch (the trunk); it is the only place where real development happens and where new features can go. The source code repository is available online.

GCC has become popular in industry and academia. The availability of its source code allows one to add new features to the compiler. GCC is used in several source-code based security projects, that is, in the tools that instrument the source code of the program to make it more secure. However, very few documents describing the GCC internals have been published so far.

When new functionality is implemented, the source code of GCC is modified directly. However, such compiler extensions are difficult to distribute because GCC is a very large program. A framework for creating modular extensions would greatly simplify the development of compiler extensions.

GCC 4.0 and later include SSA-based optimizers, which should be used for further high-level optimizations and transformations. The RTL level should be used only for target-specific optimizations and for low-level optimizations such as scheduling. Releases before 4.0 are regarded by some as dated compilers that were showing their age.

Purpose of this book

The purpose of this book is to address the demands of GCC hackers. We start with a description of the GCC 3.4.1 architecture, focusing on the source code parser. We chose this version because it is the one we used most. Then we address the problem of extension development. We present the GCC Extensibility Modules (GEM) project in the next chapter. GEM provides a number of hooks throughout the GCC source code and is implemented as a patch to GCC. A GEM-based compiler extension is developed as a stand-alone program. When the extension is completed, only its own source code needs to be distributed; without GEM, the modified source code of GCC itself would have to be distributed. We give examples that demonstrate GEM programming at the end of the book.

| Take home: | What this book is: a guide to GCC internals for extension programmers. GEM framework for developing modular extensions is introduced. What this book is not: a programming language reference, GCC installation manual. |

GNU C Compiler Architecture. Compiling an Expression

An Overview of GCC Architecture. Compilation of an expression.

This section is based on a Red Hat magazine article [1].

The GNU Compiler Collection (GCC) comprises a number of compilers for different programming languages. The main GCC executable gcc processes source files written in C, C++, Objective-C, Objective-C++, Java, Fortran, or Ada and produces an assembly file for each source file. It is a driver program that invokes the appropriate compilation programs depending on the language of the source file. For a C source file they are the preprocessor and compiler cc1, the assembler as, and the linker collect2. The first and the third programs come with a GCC distribution; the assembler is a part of the GNU binutils package. This book describes the internals of the preprocessor and compiler cc1.

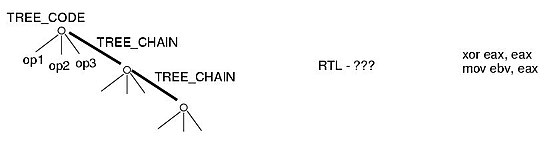

Each compiler includes three components: a front end, a middle end, and a back end. GCC compiles one file at a time. A source file goes through all three components one after another. Its representation is modified when it goes from a component to the next. Figure 1 illustrates the components and the source file representations associated with each of them. The abstract syntax tree (AST), register transfer language (RTL), and object are the main representations.

Main Representations

The purpose of the front end is to read the source file, parse it, and convert it into the standard abstract syntax tree (AST) representation. The AST is a dual-type representation: it is a tree where a node can have children and a list of statements where nodes are chained one after another. There is one front end for each programming language.

The AST is then used to generate a register-transfer language (RTL) tree. RTL is a hardware-based representation that corresponds to an abstract target architecture with an infinite number of registers. An RTL optimization pass optimizes the tree in the RTL form. Finally, a GCC back end generates the assembly code for the target architecture using the RTL representation. Examples of back ends are the x86 back end and the MIPS back end.

In the next sections we describe the internals of the C front end and the x86 back end. The compiler starts with its initialization and command line options processing. After that the C front end preprocesses the source file, parses it and performs a number of optimizations. The back end then generates the assembly code for the target platform and saves it to a file.

Auxiliary Data Structures

GCC has a number of additional data structures that facilitate code development, for example vector and heap.

The macros defined in vec.h implement a set of templated vector types and associated interfaces. These templates are implemented with macros, as we're not in C++ land. The interface functions are typesafe and use static inline functions, sometimes backed by out-of-line generic functions. The vectors are designed to interoperate with the GTY machinery.

Because of the different behavior of structure objects, scalar objects and of pointers, there are three flavors, one for each of these variants. Both the pointer and structure object variants pass pointers to objects around -- in the former case the pointers are stored into the vector and in the latter case the pointers are dereferenced and the objects copied into the vector. The scalar object variant is suitable for int-like objects, and the vector elements are returned by value.

There are both 'index' and 'iterate' accessors. The iterator returns a boolean iteration condition and updates the iteration variable passed by reference. Because the iterator will be inlined, the address-of can be optimized away.

The vectors are implemented using the trailing array idiom, thus they are not resizeable without changing the address of the vector object itself. This means you cannot have variables or fields of vector type -- always use a pointer to a vector. The one exception is the final field of a structure, which could be a vector type. You will have to use the embedded_size & embedded_init calls to create such objects, and they will probably not be resizeable (so don't use the 'safe' allocation variants). The trailing array idiom is used (rather than a pointer to an array of data), because, if we allow NULL to also represent an empty vector, empty vectors occupy minimal space in the structure containing them.

Each operation that increases the number of active elements is available in 'quick' and 'safe' variants. The former presumes that there is sufficient allocated space for the operation to succeed (it dies if there is not). The latter will reallocate the vector, if needed. Reallocation causes an exponential increase in vector size. If you know you will be adding N elements, it is more efficient to use the reserve operation before adding the elements with the 'quick' operation. This ensures that there are at least as many free slots as you ask for; the allocation grows exponentially if there are too few spare slots. If you want to reserve a specific number of slots, but do not want the exponential increase (for instance, you know this is the last allocation), use a negative number for reservation. You can also create a vector of a specific size from the start.
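The difference between the 'quick' and 'safe' variants can be sketched with a small stand-alone vector. This is not the code from vec.h: the type `int_vec` and all function names are hypothetical, and the initial capacity of 4 is an arbitrary assumption. The sketch only illustrates the trailing array idiom, the exponential growth on reallocation, and why the 'safe' variant needs a pointer to the vector pointer.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical miniature of a trailing-array vector; not GCC's vec.h. */
struct int_vec {
    size_t num;    /* number of active elements */
    size_t alloc;  /* number of allocated slots */
    int data[];    /* trailing array (C99 flexible array member) */
};

/* Ensure room for at least `extra` more elements. The vector may move,
   so the caller must use the returned pointer. */
static struct int_vec *int_vec_reserve(struct int_vec *v, size_t extra)
{
    size_t num = v ? v->num : 0;
    size_t alloc = v ? v->alloc : 0;
    if (alloc - num >= extra)
        return v;
    size_t new_alloc = alloc ? alloc : 4;  /* assumed initial capacity */
    while (new_alloc - num < extra)
        new_alloc *= 2;                    /* exponential growth */
    struct int_vec *nv =
        realloc(v, sizeof *nv + new_alloc * sizeof nv->data[0]);
    if (!nv)
        abort();
    nv->num = num;
    nv->alloc = new_alloc;
    return nv;
}

/* 'quick' push: the caller guarantees that a free slot exists. */
static void int_vec_quick_push(struct int_vec *v, int x)
{
    assert(v && v->num < v->alloc);
    v->data[v->num++] = x;
}

/* 'safe' push: reallocates when needed, hence the pointer to pointer. */
static void int_vec_safe_push(struct int_vec **vp, int x)
{
    *vp = int_vec_reserve(*vp, 1);
    int_vec_quick_push(*vp, x);
}

/* NULL represents an empty vector. */
static size_t int_vec_length(const struct int_vec *v)
{
    return v ? v->num : 0;
}
```

Starting from a NULL vector, ten calls to `int_vec_safe_push(&v, i)` grow the allocation from 4 to 8 to 16 slots, after which `int_vec_quick_push(v, x)` can be used for the remaining free slots without further checks on the caller's side.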

You should prefer the push and pop operations, as they append and remove from the end of the vector. If you need to remove several items in one go, use the truncate operation. The insert and remove operations allow you to change elements in the middle of the vector. There are two remove operations, one which preserves the element ordering 'ordered_remove', and one which does not 'unordered_remove'. The latter function copies the end element into the removed slot, rather than invoke a memmove operation. The 'lower_bound' function will determine where to place an item in the array using insert that will maintain sorted order.
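The two removal strategies and the lower-bound search can be illustrated on a plain array. The functions below are hypothetical stand-ins that borrow the names used in the text; they are not the vec.h implementations and operate on `int` arrays only.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Remove a[ix] and keep the remaining order: needs a memmove. */
static size_t ordered_remove(int *a, size_t n, size_t ix)
{
    assert(ix < n);
    memmove(&a[ix], &a[ix + 1], (n - ix - 1) * sizeof a[0]);
    return n - 1;   /* new length */
}

/* Remove a[ix] by copying the last element into its slot:
   constant time, but the order is not preserved. */
static size_t unordered_remove(int *a, size_t n, size_t ix)
{
    assert(ix < n);
    a[ix] = a[n - 1];
    return n - 1;   /* new length */
}

/* Index of the first element that is not less than x in a sorted array;
   inserting x there keeps the array sorted. */
static size_t lower_bound(const int *a, size_t n, int x)
{
    size_t lo = 0, hi = n;
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (a[mid] < x)
            lo = mid + 1;
        else
            hi = mid;
    }
    return lo;
}
```

The unordered variant is the cheaper choice whenever the vector is used as a set and element order carries no meaning.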

When a vector type is defined, first a non-memory managed version is created. You can then define either or both garbage collected and heap allocated versions. The allocation mechanism is specified when the type is defined, and is therefore part of the type. If you need both gc'd and heap allocated versions, you still must have exactly one definition of the common non-memory managed base vector.

If you need to directly manipulate a vector, then the 'address' accessor will return the address of the start of the vector. Also the 'space' predicate will tell you whether there is spare capacity in the vector. You will not normally need to use these two functions.

Vector types are defined using a DEF_VEC_{O,P,I}(TYPEDEF) macro, to get the non-memory allocation version, and then a DEF_VEC_ALLOC_{O,P,I}(TYPEDEF,ALLOC) macro to get memory managed vectors. Variables of vector type are declared using a VEC(TYPEDEF,ALLOC) macro. The ALLOC argument specifies the allocation strategy, and can be either 'gc' or 'heap' for garbage collected and heap allocated respectively. It can be 'none' to get a vector that must be explicitly allocated (for instance as a trailing array of another structure). The characters O, P and I indicate whether TYPEDEF is a pointer (P), object (O) or integral (I) type. Be careful to pick the correct one, as you'll get an awkward and inefficient API if you use the wrong one. There is a check, which results in a compile-time warning, for the P and I versions, but there is no check for the O versions, as that is not possible in plain C. Due to the way GTY works, you must annotate any structures you wish to insert or reference from a vector with a GTY(()) tag. You need to do this even if you never declare the GC allocated variants.

An example of their use would be,

    DEF_VEC_P(tree);           /* non-managed tree vector */
    DEF_VEC_ALLOC_P(tree,gc);  /* gc'd vector of tree pointers; this must
                                  appear at file scope */

    struct my_struct {
      VEC(tree,gc) *v;         /* a (pointer to) a vector of tree pointers */
    };

    struct my_struct *s;
    unsigned ix;
    tree elt;

    if (VEC_length(tree,s->v)) { /* we have some contents */ }
    VEC_safe_push(tree,gc,s->v,decl);  /* append some decl onto the end */
    for (ix = 0; VEC_iterate(tree,s->v,ix,elt); ix++)
      { /* do something with elt */ }
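The macro-based templating described above can be imitated in a few lines of portable C. The macro `MY_DEF_VEC` and the names it generates are hypothetical and far simpler than the real DEF_VEC_* macros: the sketch uses a fixed capacity instead of a trailing array and has no allocation strategy. It shows only how one macro can stamp out a typed structure together with typesafe inline accessors, including an iterator that returns a boolean condition and writes the element through a pointer.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical generator: defines struct my_vec_T with capacity N plus
   typed push and iterate functions. */
#define MY_DEF_VEC(T, N)                                                \
    struct my_vec_##T { size_t num; T data[N]; };                       \
    static inline int my_vec_##T##_push(struct my_vec_##T *v, T x)      \
    {                                                                   \
        if (v->num == (N))                                              \
            return 0;               /* full */                          \
        v->data[v->num++] = x;                                          \
        return 1;                                                       \
    }                                                                   \
    static inline int my_vec_##T##_iterate(const struct my_vec_##T *v,  \
                                           size_t ix, T *elt)           \
    {                                                                   \
        if (ix >= v->num)                                               \
            return 0;               /* iteration finished */            \
        *elt = v->data[ix];                                             \
        return 1;                                                       \
    }

MY_DEF_VEC(int, 8)

/* Sum all elements using the generated iterator. */
static int sum_all(const struct my_vec_int *v)
{
    int total = 0, elt;
    for (size_t ix = 0; my_vec_int_iterate(v, ix, &elt); ix++)
        total += elt;
    return total;
}
```

Because the accessors are generated per element type, passing a vector of the wrong type is rejected by the compiler rather than discovered at run time.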

Additional Representations

Figure 2 shows additional representations of GCC 4.1.

Because of the differences between languages, the format of the generated ASTs is slightly different for each language. The next step after AST generation is the unification step, in which the AST is converted into a unified form called GENERIC. After this, the middle end of the compiler takes control. First, the tree is converted into another representation called GIMPLE. In this form, each expression contains no more than three operands, all control flow constructs are represented as combinations of conditional statements and goto operators, arguments of a function call can only be variables, etc. Figure 2 illustrates the differences between a tree in GENERIC form and a tree in GIMPLE form. GIMPLE is a convenient representation for optimizing the source code.

After GIMPLE, the source code is converted into the static single assignment (SSA) representation. The central idea of this form is the fact that each variable is assigned to only once, but can be used at the right hand side of an expression many times. Every time the same variable of a tree in the GIMPLE form is reassigned, the compiler creates a new version of that variable and stores the new value into it. When the same variable is assigned to in both branches of a conditional expression, one needs to merge the two possible values of the variable into a single variable. This operation is denoted as PHI function in the SSA form.
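The renaming can be mimicked by hand in C. The two functions below are illustrative only; GCC works on trees, not on C source, and the names `x_1`, `x_2`, and `x_3` are made up for this sketch. The PHI function is modelled as a copy at the end of each branch, which is one common way of translating a PHI node back into ordinary code.

```c
#include <assert.h>

/* Original form: x is assigned in both branches of the conditional. */
static int original(int a, int b)
{
    int x;
    if (a > b)
        x = a - b;
    else
        x = b - a;
    return x * 2;
}

/* Hand-written SSA-style form: every version of x is defined once per
   path, and x_3 plays the role of x_3 = PHI(x_1, x_2). */
static int ssa_style(int a, int b)
{
    int x_1, x_2, x_3;
    if (a > b) {
        x_1 = a - b;
        x_3 = x_1;      /* PHI argument from the 'then' branch */
    } else {
        x_2 = b - a;
        x_3 = x_2;      /* PHI argument from the 'else' branch */
    }
    return x_3 * 2;
}
```

Both functions compute the same result; the second makes explicit which definition of x reaches the final use.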

The SSA form is also used for optimizations. GCC performs more than 20 different optimizations on SSA trees. After the SSA optimization pass, the tree is converted back to the GIMPLE form.

| Take home: | GCC is a compiler collection that consists of a front end for each programming language, a middle end, and a back end for each architecture. The main representations that each source file goes through are AST in the front end, RTL in the middle end, and the assembly representation in the back end. GCC compiles one file at a time. |

GCC Initialization

The C front end includes the C/C++ preprocessor and the C compiler. Program cc1 includes both the preprocessor and the C compiler. It compiles a C source file and generates an assembly (.s) file.

The compiler frontend and backend interact with each other using callback functions called language hooks. All hooks are included into a global variable struct lang_hooks lang_hooks that is defined in file langhooks.h. There are the following types of hooks: hooks for tree inlining, hooks for call graph, hooks for functions, hooks for tree dump, hooks for types, hooks for declarations, and language-specific hooks. The default values for the hooks are defined in file langhooks-def.h.
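The hook mechanism amounts to a structure of function pointers with default values that a front end overrides selectively. The sketch below is a much-reduced, hypothetical analogue: `struct mini_hooks`, its fields, and the underscore-stripping behaviour are invented for illustration and do not reproduce struct lang_hooks.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical, much-reduced analogue of a language hook table. */
struct mini_hooks {
    const char *name;
    int (*init)(void);
    const char *(*printable_name)(const char *raw);
};

/* Default implementations, in the spirit of a defaults header. */
static int default_init(void) { return 1; }
static const char *default_printable_name(const char *raw) { return raw; }

#define MINI_HOOKS_DEFAULTS \
    { "default", default_init, default_printable_name }

/* A front end overrides only the hooks it cares about (made-up rule:
   strip one leading underscore). */
static const char *c_printable_name(const char *raw)
{
    return raw[0] == '_' ? raw + 1 : raw;
}

static struct mini_hooks make_c_hooks(void)
{
    struct mini_hooks h = MINI_HOOKS_DEFAULTS;
    h.name = "GNU C";
    h.printable_name = c_printable_name;
    return h;
}
```

Language-independent code then calls through the table, for example `h.printable_name("_foo")`, without knowing which front end filled it in.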

GCC initialization consists of command line option parsing, initializing the back end, creating the global scope, and initializing the built-in data types and functions.

Each declaration is associated with a scope. For example, a local variable is associated with its function's scope. A global declaration is associated with the global scope.

File toplev.c contains the main cc1 function toplev_main() and global variables that define the state of the compiler. Variable current_function_decl is the declaration of the function being compiled or NULL if between functions.

Function toplev_main() is the function that processes the command-line options, initializes the compiler, compiles a file, and frees up the allocated resources. Function decode_options() processes the command-line options and sets the corresponding variables within the compiler.

Following the command line option parsing, function do_compile() is called. It performs the back-end initialization by calling function backend_init().

Backend initialization includes a number of steps. Function init_emit_once() generates RTL expressions for a number of registers: pc_rtx for the program counter, cc0_rtx for the condition code, stack_pointer_rtx, frame_pointer_rtx, etc. They are saved in array global_rtl.

After that, function lang_dependent_init() performs language-dependent initialization which includes the initialization of a front-end and a back-end. The C initialization function c_objc_common_init() creates built-in data types, initializes the global scope and performs other initialization tasks. Function c_common_nodes_and_builtins() creates pre-defined types described in file builtin-types.def.

The standard C types are created at the initialization time. The following table presents several types:

| Variable Name | C type |
|---|---|
| char_type_node | char |
| integer_type_node | int |
| unsigned_type_node | unsigned int |
| void_type_node | void |
| ptr_type_node | void * |

GCC built-in functions are functions that the compiler can evaluate or expand at compile time. For example, if the length of the source string passed to strcpy() is known at compile time, GCC replaces the function call with the required number of assignments. The compiler replaces standard library calls with built-in functions and then evaluates them once the function's AST is constructed. In the case of strcpy(), the compiler checks whether the argument is constant and, if so, uses the optimized built-in version of strcpy(). __builtin_constant_p() reports whether the value of its argument is known at compile time. GCC builtins are also used outside GCC. For example, the string processing library of the Linux kernel uses __builtin_constant_p() to invoke an optimized version of a string processing function if the string size is known at compile time.
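A short sketch of how user code can query the compiler in this way follows. It assumes GCC or a compatible compiler that provides `__builtin_constant_p` and `__builtin_strlen`; the macro `LEN_IS_CONSTANT` and the three helper functions are hypothetical names introduced for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: 1 when the compiler knows n at compile time. */
#define LEN_IS_CONSTANT(n) (__builtin_constant_p(n) ? 1 : 0)

/* The length of a string literal can be folded at compile time. */
static size_t literal_length(void)
{
    return __builtin_strlen("hello");
}

/* A sizeof expression is a compile-time constant. */
static int literal_is_constant(void)
{
    return LEN_IS_CONSTANT(sizeof "hello" - 1);
}

/* A volatile object must be read at run time, so it is not constant. */
static int runtime_is_constant(void)
{
    volatile size_t n = 5;
    return LEN_IS_CONSTANT(n);
}
```

Code in the style of the kernel's string helpers uses such a test to choose between an inline expansion for constant sizes and a call to a generic routine otherwise.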

GCC evaluates each builtin function using a corresponding expand_builtin() function. For example, __builtin_strcmp() is evaluated using expand_builtin_strcmp(). The following table gives examples of GCC builtins:

| Builtin Name | Explanation |
|---|---|
| __builtin_constant_p | returns true if the argument is a constant |
| __builtin_memcpy | equivalent to memcpy() |
| __builtin_strlen | equivalent to strlen() |

| Take home: | GCC initialization consists of command line option parsing, initializing the back end, creating the global scope, and initializing the built-in data types and functions. |

Parser and Preprocessor

Following the initialization, function do_compile() calls function compile_file(). This function invokes parse_file() front-end language hook which is set to function c_common_parse_file() for C language. The latter function invokes function finish_options() which initializes the preprocessor and handles -D, -U, and -A command line options (which are equivalent to #define, #undef, and #assert respectively). The C preprocessor handles the preprocessor directives such as #define, #include in the source code.

Parser

The parser is hand-written and implemented in file c-parser.c. Compared to earlier releases of GCC, the new parser generates a lower-level AST. Earlier releases had special tree codes for loops; for example, FOR_STMT denoted a for() loop. In this release loops are represented as conditional statements and gotos, that is, as trees with codes COND_EXPR, LABEL_EXPR, and GOTO_EXPR. As a consequence, it is probably impossible to reconstruct the original source code from the AST.

Preprocessor

The preprocessor is implemented as a library. The C language lexer function c_lex() calls the libcpp function cpp_get_token(), which handles the preprocessor keywords. The state of the preprocessor is defined by variable cpp_reader *parse_in. Type struct cpp_reader contains, most importantly, the list of text buffers being processed. Each buffer corresponds to a source file (.c or .h). Function cpp_get_token() calls the appropriate functions for legitimate preprocessor keywords. For example, when #include is encountered, function do_include_common() is invoked. It allocates a new buffer and places it on top of the buffer stack, making it the current buffer. When the included file has been processed, its buffer is popped off the stack and the compilation of the including file continues.
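The buffer stack can be pictured with a few lines of C. The types `struct reader` and `struct src_buffer` and the functions below are hypothetical simplifications; the real struct cpp_reader holds far more state.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-ins for the reader and its buffers. */
struct src_buffer {
    const char *file;          /* file this buffer was read from */
    struct src_buffer *prev;   /* buffer that included this one */
};

struct reader {
    struct src_buffer *top;    /* current buffer */
};

/* Entering a file (for example on #include) pushes a buffer. */
static void push_buffer(struct reader *r, struct src_buffer *b,
                        const char *file)
{
    b->file = file;
    b->prev = r->top;
    r->top = b;
}

/* Reaching the end of a file pops it and resumes the includer. */
static void pop_buffer(struct reader *r)
{
    assert(r->top);
    r->top = r->top->prev;
}

static const char *current_file(const struct reader *r)
{
    return r->top ? r->top->file : NULL;
}
```

Pushing "main.c" and then "stdio.h" makes the header the current buffer; after one pop, lexing continues in "main.c" where it left off.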

Function do_define() is called whenever a new macro is defined using #define keyword.

| Take home: | The preprocessor takes care of the preprocessor directives, for example #include and #ifdef. |

From Source Code to AST

After running the preprocessor, GCC constructs an abstract syntax tree (AST) for each function of the source file. An AST is a set of connected nodes of type tree, which is a pointer to union tree_node. Each node has a tree code that defines the type of the tree. Macro TREE_CODE() is used to refer to the code. Tree codes are defined in files tree.def and c-common.def. Trees with different tree codes are grouped into tree classes. The following tree classes, among others, are defined in GCC:

| Tree Class | Explanation |
|---|---|
| 'd' | declarations |
| '<' | comparison |
| '2' | binary arithmetic |

There are two types of trees: statements and expressions. Statements correspond to the C constructs, for example an expression followed by a ';', a conditional statement, a loop statement, etc. Expressions are what the statements are built up from. Examples of expressions are an assignment expression, an arithmetic expression, a function call, etc. Examples of tree codes are given in the following table:

| Tree Code | Tree Class | Explanation | Operands |
|---|---|---|---|
| MODIFY_EXPR | 'e' | an assignment expression | TREE_OPERAND(t,0) - left-hand side; TREE_OPERAND(t,1) - right-hand side |
| CALL_EXPR | 'e' | function call | TREE_OPERAND(t,0) - function being called; TREE_OPERAND(t,1) - function arguments |
| FUNCTION_DECL | 'd' | function declaration | DECL_SOURCE_FILE(t) - source file; DECL_NAME(t) - function name |
| ARRAY_TYPE | 't' | array type | TREE_TYPE(t) - type of an array element; TYPE_DOMAIN(t) - type of index |
| DECL_STMT | 'e' | variable declaration | TREE_OPERAND(t,0) - variable; DECL_INITIAL(TREE_OPERAND(t,0)) - initial value |

In addition to the tree code that defines the type of the tree, each tree has a number of operands that differ by tree type. For example, an assignment expression has two operands, which correspond to the left and right sides of the expression. Macro TREE_OPERAND is used to refer to the operands. Macro IDENTIFIER_POINTER is used to find the name of an identifier that an IDENTIFIER_NODE tree represents. The table above presents several tree nodes, their purpose, and their operands.

Each tree has a type that corresponds to the type of the C expression that it represents. For example, the type of the MODIFY_EXPR node is the type of the left-hand side operand. NOP_EXPR and CONVERT_EXPR trees are used for type casting.
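The pattern of a node code plus code-specific operands can be sketched with a toy expression tree. Everything here is hypothetical: `struct node`, the three codes, and the macros `NODE_CODE` and `NODE_OPERAND` only imitate the roles that TREE_CODE and TREE_OPERAND play for real trees.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical node codes for a toy expression tree. */
enum node_code { INT_CST_NODE, PLUS_NODE, MULT_NODE };

struct node {
    enum node_code code;   /* what kind of node this is */
    int value;             /* used by INT_CST_NODE only */
    struct node *op[2];    /* used by PLUS_NODE and MULT_NODE */
};

/* Accessors in the style of TREE_CODE and TREE_OPERAND. */
#define NODE_CODE(t)       ((t)->code)
#define NODE_OPERAND(t, i) ((t)->op[(i)])

/* Walk the tree, dispatching on the node code. */
static int eval(const struct node *t)
{
    switch (NODE_CODE(t)) {
    case INT_CST_NODE:
        return t->value;
    case PLUS_NODE:
        return eval(NODE_OPERAND(t, 0)) + eval(NODE_OPERAND(t, 1));
    case MULT_NODE:
        return eval(NODE_OPERAND(t, 0)) * eval(NODE_OPERAND(t, 1));
    }
    return 0;   /* not reached for valid codes */
}
```

Code that inspects GCC trees has the same shape: a switch on the code, followed by accesses to the operands that are meaningful for that code.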

Tree NULL_TREE is equivalent to NULL. Function debug_tree() prints out the tree to stderr.

When a new identifier is lexed, it is added to the pool of strings that GCC maintains. The tree code of an identifier is IDENTIFIER_NODE. When the same identifier is lexed again, the same tree node is returned. Function get_identifier() returns the tree node for an identifier.
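The effect of the identifier pool is that equal names map to one shared node, so identifiers can be compared by pointer. A minimal sketch, assuming a simple linked list instead of the hash table a real compiler would use; `struct ident` and `intern()` are hypothetical names.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical identifier node kept in a global pool. */
struct ident {
    char *name;
    struct ident *next;
};

static struct ident *pool;

/* Return the unique node for `name`, creating it on first use. */
static struct ident *intern(const char *name)
{
    for (struct ident *p = pool; p; p = p->next)
        if (strcmp(p->name, name) == 0)
            return p;                /* seen before: same node */

    struct ident *n = malloc(sizeof *n);
    if (!n)
        abort();
    n->name = malloc(strlen(name) + 1);
    if (!n->name)
        abort();
    strcpy(n->name, name);
    n->next = pool;
    pool = n;
    return n;
}
```

With this arrangement `intern("x") == intern("x")` holds, which is what allows a declaration to be attached to the identifier node and found again on every later occurrence of the name.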

A new variable declaration is processed in a number of function calls. First, function start_decl() is called with the name of the declaration, its type as returned by the lexer, and its attributes. The function calls grokdeclarator(), which checks the type and argument nodes and returns a tree with the code appropriate for the declaration: VAR_DECL for variables, TYPE_DECL for types, etc. The declaration is then added to the scope. A scope contains all declarations created within a function, but does not contain global declarations. There is also the global scope that contains global declarations. When a function is parsed, its declarations are attached to its body as a BLOCK node. When a declaration is created, the identifier node is associated with the declaration node using IDENTIFIER_SYMBOL_VALUE. Function lookup_name() returns the declaration for a given identifier. When a declaration leaves the scope, the tree attribute C_DECL_INVISIBLE is set.

A symbol table is not maintained in GCC. Instead, the compiler uses the pool of identifiers and C_DECL_INVISIBLE attribute. Language hook lang_hooks.decls.getdecls() returns the variables in the scope chained together.

Functions start_init() and finish_init() are called for an initialized declaration. Function finish_decl() finalizes the declaration. For an array declaration it computes the size of an initialized array. Then function layout_decl() is called. It computes the size and alignment of the declaration.

Parsing a function depends on the presence of its body. A function declaration is parsed using the same functions as those used for variable declarations. For a function definition, function start_function() is called. Then the compiler parses the body of the function. When the function ends function finish_function() is called.

Functions start_decl() and start_function() take declaration's attributes parameter as one of their arguments. The attributes are described in the GCC Manual. Attributes are extensions of GNU implementation of C. The following table presents a few of them and explains their purpose:

| Attribute | Explanation |
|---|---|
| constructor | call function automatically before main() |
| destructor | call function automatically after main() |
| alias | alias to another function |

For each type of C statement there is a function that constructs a tree node of the corresponding type. For example, function build_function_call() builds a CALL_EXPR node for a function call. It takes the identifier of the function name and the arguments as its parameters. The function finds the function declaration using lookup_name() and type casts the arguments using default_conversion().

After a function has been parsed, use macro DECL_SAVED_TREE to access its body. It is represented with a BIND_EXPR tree which binds local variables to statements. BIND_EXPR_VARS gives a chain of declared variables. BIND_EXPR_BODY returns a tree of STATEMENT_LIST type.

The following API allows one to traverse a statement list and to manipulate it:

| Function | Purpose |
|---|---|
| tsi_start(stmt_list) | get an iterator pointing at list head |
| tsi_last(stmt_list) | get an iterator pointing at list tail |
| tsi_end_p(iter) | is end of list? |
| tsi_stmt(iter) | get current element |
| tsi_split_statement_list_before(&iter) | split elements at iter |
| tsi_link_after(&iter, stmt, mode) | link chain after iter |
| tsi_next(&iter) | next element of the list |
| append_to_statement_list(tree, &stmt_list) | append tree to stmt_list |
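The iterator functions in the table are typically combined into a loop of the form start, end test, next. The stand-alone sketch below reproduces that loop shape on a hypothetical singly linked statement list; the types and the `list_`/`iter_` functions are invented and only mirror the roles of append_to_statement_list, tsi_start, tsi_end_p, tsi_stmt, and tsi_next.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical statement, list, and iterator types. */
struct stmt { int id; struct stmt *next; };
struct stmt_list { struct stmt *head, *tail; };
struct stmt_iter { struct stmt *ptr; };

/* Counterpart of appending a statement to a list. */
static void list_append(struct stmt *s, struct stmt_list *list)
{
    s->next = NULL;
    if (list->tail)
        list->tail->next = s;
    else
        list->head = s;
    list->tail = s;
}

static struct stmt_iter iter_start(struct stmt_list *list)
{
    struct stmt_iter i = { list->head };
    return i;
}

static int iter_end_p(struct stmt_iter i) { return i.ptr == NULL; }
static struct stmt *iter_stmt(struct stmt_iter i) { return i.ptr; }
static void iter_next(struct stmt_iter *i) { i->ptr = i->ptr->next; }

/* The canonical traversal loop. */
static int sum_ids(struct stmt_list *list)
{
    int total = 0;
    for (struct stmt_iter i = iter_start(list); !iter_end_p(i); iter_next(&i))
        total += iter_stmt(i)->id;
    return total;
}
```

Passing the iterator by value to the query functions and by address to the advancing function matches the calling convention shown in the table.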

It is possible to instrument the function prolog and epilog at this level, as demonstrated in gimplify_function_tree(). To add a statement to the function epilog, use a TRY_FINALLY_EXPR tree. Its first operand is the old statements; its second operand is the epilog statements. This type of tree instructs the following passes to execute the statements when a common exit basic block of a function is created.

To instrument the function prolog, prepend the desired statements to the tree. BIND_EXPR_BODY will then contain the prolog followed by the TRY_FINALLY_EXPR tree.

The ASTs are then converted into the SSA representation and eventually into the RTL representation. Whether the conversion occurs after parsing each function or after the whole file has been parsed is controlled by the compiler option -funit-at-a-time. The option is off by default.

| Take home: | GCC parser builds the AST representation of the source file. ASTs are built up of tree nodes. Each node has a code. Tree nodes correspond to the statements and expressions of C. Function debug_tree() prints out the tree. |

From AST to GIMPLE

An AST is gimplified when gimplify_function_tree() is eventually called from finish_function().

The GIMPLE representation is based on SIMPLE, described in [2].

According to this paper, the goal is to represent the tree as basic statements:

| x=a binop b | x=a | x=cast b | f(args) |

| *p=a binop b | *p=a | *p=cast b | - |

| x=unop a | x=*q | x=&y | x=f(args) |

| *p=unop a | *p=*q | *p=&y | *p=f(args) |

Temp variables are created as necessary in functions create_tmp_var() and declare_tmp_vars().
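The effect of introducing temporaries can be shown by rewriting a nested expression by hand so that every statement has one of the basic forms listed above. This is an illustration in C source, not compiler output, and the temporary names `t1`, `t2`, and `t3` are made up.

```c
#include <assert.h>

/* Nested expression as written by the programmer. */
static int nested(int a, int b, int c, int d)
{
    return (a + b) * (c - d);
}

/* The same computation with one operator per statement, in the
   x = a binop b form; t1..t3 stand for compiler temporaries. */
static int three_address(int a, int b, int c, int d)
{
    int t1, t2, t3;
    t1 = a + b;
    t2 = c - d;
    t3 = t1 * t2;
    return t3;
}
```

Each statement of the second function reads at most two operands and writes one result, which is the property that later analyses rely on.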

At this stage, GCC lowers complex conditional expressions, that is

if (a || b) stmt;

is translated into

if (a) goto L1; if (b) goto L1; else goto L2; L1: stmt; L2:

Also, each branch of a conditional expression is wrapped into STATEMENT_LIST tree.
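The translation of the short-circuit condition can be checked with ordinary C, since C has both forms. The two functions below are a hand-written illustration; the labels `L1` and `L2` follow the snippet above, and the variable `n` is a hypothetical stand-in for stmt.

```c
#include <assert.h>

/* Source form: short-circuit OR in the condition. */
static int with_or(int a, int b)
{
    int n = 0;
    if (a || b)
        n = 1;          /* stmt */
    return n;
}

/* Lowered form: only simple conditions and gotos remain. */
static int lowered(int a, int b)
{
    int n = 0;
    if (a) goto L1;
    if (b) goto L1; else goto L2;
L1:
    n = 1;              /* stmt */
L2:
    return n;
}
```

Note that b is tested only when a is false, so the lowered form preserves the short-circuit evaluation order of the original.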

From GIMPLE to RTL

Register Transfer Language represents an abstract machine with an infinite number of registers. Type rtx describes an instruction. Each RTL expression has a code and machine mode.

Similarly to ASTs, RTL codes are grouped into a number of classes. The codes are defined in rtl.def, and the classes are enumerated in rtl.h.

| Classes | Explanation |
|---|---|
| RTX_CONST_OBJ | represent a constant object (e.g., CONST_INT) |
| RTX_OBJ | represent an object (e.g., REG, MEM) |
| RTX_COMPARE | comparison (e.g., LT, GT) |
| RTX_COMM_COMPARE | commutative comparison (e.g., EQ, NE, ORDERED) |
| RTX_UNARY | unary arithmetic expression (e.g., NEG, NOT) |
| RTX_COMM_ARITH | commutative binary operation (e.g., PLUS, MULT) |
| RTX_TERNARY | non-bitfield three input operation (IF_THEN_ELSE) |
| RTX_BIN_ARITH | non-commutative binary operation (e.g., MINUS, DIV) |
| RTX_BITFIELD_OPS | bit-field operation (ZERO_EXTRACT, SIGN_EXTRACT) |
| RTX_INSN | machine insn (INSN, JUMP_INSN, CALL_INSN) |
| RTX_MATCH | something that matches in insns (e.g., MATCH_DUP) |
| RTX_AUTOINC | autoincrement addressing modes (e.g., POST_DEC) |
| RTX_EXTRA | everything else |

Machine modes listed in file machmode.def specify a size and format of data at the machine level. At the syntax tree level, each ..._TYPE and each ..._DECL node has a machine mode which describes data of that type or the data of the variable declared.

A list of instructions is built when a function is compiled. Function emit_insn() adds an instruction to the list. A variable declaration AST has its RTL already generated; use DECL_RTL to access it. Function emit_cmp_and_jump_insns() emits a conditional jump, and emit_label() emits a label. These functions chain instructions one after another. Macros PREV_INSN and NEXT_INSN are used to traverse the list.
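The instruction list behaves like a doubly linked chain to which each emit call appends. The sketch below is a hypothetical model: `struct insn`, `struct insn_chain`, `chain_emit()`, and the macros `PREV` and `NEXT` only imitate the roles of emit_insn(), PREV_INSN, and NEXT_INSN and share no code with GCC.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical instruction and chain types. */
struct insn { int uid; struct insn *prev, *next; };
struct insn_chain { struct insn *first, *last; };

/* Traversal macros in the style of PREV_INSN and NEXT_INSN. */
#define PREV(i) ((i)->prev)
#define NEXT(i) ((i)->next)

/* Append an instruction at the end of the chain. */
static void chain_emit(struct insn_chain *c, struct insn *i)
{
    i->prev = c->last;
    i->next = NULL;
    if (c->last)
        c->last->next = i;
    else
        c->first = i;
    c->last = i;
}

/* Walk forward from the first instruction and count. */
static int count_forward(const struct insn_chain *c)
{
    int n = 0;
    for (const struct insn *i = c->first; i; i = NEXT(i))
        n++;
    return n;
}

/* Walk backward k steps from the last instruction; -1 if too far. */
static int uid_from_end(const struct insn_chain *c, int k)
{
    const struct insn *i = c->last;
    while (i && k-- > 0)
        i = PREV(i);
    return i ? i->uid : -1;
}
```

Keeping both links makes it cheap for a pass to look at the instruction before or after the one it is currently transforming.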

It is possible to access the first and last instructions using first_insn and last_insn. get_insns() gives the first insn of the current sequence or current function.

Use debug_rtx() to print out an RTL instruction on the screen and function print_rtl() to print a list of RTL expressions.

A number of functions create the nodes. For example, gen_label_rtx() builds a label. The most generic functions are located in the target-specific directory. For example, the RTL generation files genrtl.c and genrtl.h for the x86 architecture are in ./host-i686-pc-linux-gnu.

From RTL to Object

Each target architecture has its description represented as struct gcc_target targetm. Default initializers are available in file targhooks.c.

The backend generates the assembly code for the specified target platform. Function output_asm_insn() is called for each instruction written to the assembly file. Function final_start_function() generates the prolog of the function before it is saved to the assembly file.

Lowering Passes

The processing of a function includes its lowering when a number of optimization passes are applied to it in function tree_lowering_passes(). As a result of lowering a function its control-flow graph is generated. A subsequent call to function cgraph_create_edges() uses basic block information to augment edges of the callgraph with invocations that the current function performs. References to functions yet undefined are saved in function record_references().

| Name | Meaning |
|---|---|
| remove_useless_stmts | N/A |
| mudflap_1 | narrow-pointer bounds-checking by tree rewriting |
| lower_cf | Lowers GIMPLE into unstructured form |
| pass_lower_eh | Exception handling semantics and decomposition for trees |
| pass_build_cfg | Create basic blocks |
| pass_lower_complex_O0 | complex operation lowering without optimization |
| pass_lower_vector | Lower vector operations to scalar operations |
| pass_warn_function_return | Emit return warnings |
| pass_early_tree_profile | set up hooks for tree-based profiling |

Switch Statement

Let us consider how a switch statement is translated from the source code to GIMPLE to RTL.

Function c_parser_switch_statement() is called when the statement is encountered in the source code. A typical switch statement has a number of case statements that might have breaks. Therefore, the parser keeps a c_break_label tree that marks the place where the switch ends. The function parses the body of the statement and generates a LABEL_EXPR tree for the break label if at least one break was found. Function c_finish_case() attaches the body to the SWITCH_EXPR tree as one of its operands. In addition, this tree has two other operands: the switch condition and the switch labels. The operands are accessed using macros SWITCH_COND(), SWITCH_BODY(), and SWITCH_LABELS(). The labels are not filled in at parse time.

A switch statement is gimplified in function gimplify_switch_expr(). The idea is to separate the body from the decision part and generate switch labels, so that execution can be redirected to the appropriate case after the condition has been evaluated. We will consider the case when a default label exists. This function takes two pointers to statement lists: pre_p, which is the list of side effects, and expr_p, which is the statement itself.

The body of the switch is gimplified in gimplify_to_stmt_list(). Case labels are saved in field case_labels of variable struct gimplify_ctx gimplify_ctxp. Then the function creates a TREE_VEC of labels and initializes each element with the corresponding case label. The TREE_VEC is assigned to the SWITCH_LABELS operand of the switch statement, which is then appended to the pre_p list. The original statement is then overwritten with the SWITCH_BODY using the expr_p pointer. Finally, the SWITCH_BODY operand of the switch statement in the side effects list is wiped out so that it contains labels only.

From this point on it is clear that the compiler tries to represent the original statement using a jump table which maps each possible index value to the address of the corresponding case. Function expand_case() implements this idea. It generates a table_label at which the jump instructions for each possible index value are generated. Then function try_tablejump() is called, which expands the index tree into index RTL and invokes do_tablejump(). This function generates the absolute index RTL, which combines the base address table_label and the index offset. It then emits a jump instruction to the proper entry of the jump table. Execution continues in function expand_case(). The jump table is generated using SWITCH_LABELS:

labelvec[i] = gen_rtx_LABEL_REF (Pmode, label_rtx (n->code_label));

A number of jump instructions are emitted finally:

if (CASE_VECTOR_PC_RELATIVE || flag_pic)

emit_jump_insn (gen_rtx_ADDR_DIFF_VEC (CASE_VECTOR_MODE,

gen_rtx_LABEL_REF (Pmode, table_label),

gen_rtvec_v (ncases, labelvec),

const0_rtx, const0_rtx));
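As a rough illustration of what the emitted jump table does at run time, the following standalone C sketch dispatches on an index through an array. It is an analogy only, not the code GCC generates: the names dispatch, jump_table, and case_* are hypothetical, and function pointers stand in for the label references that GCC stores in the table.

```c
#include <stddef.h>

/* Each "case body" is modelled as a function; in GCC the table holds
   label references rather than function pointers. */
static int case_zero(void)    { return 10; }
static int case_one(void)     { return 11; }
static int case_two(void)     { return 12; }
static int case_default(void) { return -1; }

typedef int (*case_fn)(void);

/* Hypothetical jump table: one entry per possible index value. */
static const case_fn jump_table[] = { case_zero, case_one, case_two };

/* Range-check the index, then jump through the table. An index that
   falls outside the table goes to the default case. */
int dispatch(int index)
{
    size_t ncases = sizeof jump_table / sizeof jump_table[0];

    if (index < 0 || (size_t) index >= ncases)
        return case_default();
    return jump_table[index]();
}
```

The range check before the indexed jump is what lets a dense switch cost the same regardless of which case is taken.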

| Take home: | The backend generates the assembly code for the specified target platform. |

- ^ https://web.archive.org/web/20160410185222/https://www.redhat.com/magazine/002dec04/features/gcc/

- ^ L. Hendren, C. Donawa, M. Emami, G. Gao, Justiani, and B. Sridharan. Designing the McCAT Compiler Based on a Family of Structured Intermediate Representations. In Proc. of the 5th International Workshop on Languages and compilers for Parallel Computing, 1992.

Compilation of a function

Function Prolog and Epilog

A prolog is used to initialize the function, for example set up its stack frame. A function epilog is used to finalize execution. At each level of compilation, there are common actions performed for each function. Therefore, a number of prolog/epilog generation functions exist in GCC.

At the GIMPLE level, the prolog is generated in function gimplify_function_tree(). It adds profiling hooks when instructed to do so with -finstrument-functions.

At the RTL level, function expand_function_start() is used. It starts the RTL for a new function and sets the variables used for emitting RTL. It also makes the label for return statements to jump to and decides whether to return the value in memory or in a register. After this, it calls assign_parms(), which maps the formal-ins to the actual-ins.

Machine-level prolog and epilog are added in pass flow2 by function thread_prologue_and_epilogue_insns(), which relies upon the machine description file, for example i386.md. The description has a number of entries; the prologue and epilogue entries are used in this case. They are set to ix86_expand_prologue() and ix86_expand_epilogue() respectively, which generate the RTL prolog and epilog for the i386 architecture. The machine-specific code takes care of the registers used in the function so that they are preserved between function calls. The push instructions are added as the very first instructions of a function.

The machine-specific code is generated as an RTL expression rather than assembly because this is the representation used at this pass of the compiler. The RTL representation is the most general across various targets. Therefore, a push instruction of i386 corresponds to a number of RTL expressions:

static rtx

gen_push (rtx arg)

{

return gen_rtx_SET (VOIDmode,

gen_rtx_MEM (Pmode,

gen_rtx_PRE_DEC (Pmode,

stack_pointer_rtx)),

arg);

}

At the assembly output level, the prolog is generated in functions assemble_start_function() and final_start_function(). Their output is mostly related to debugging.

One should note that the prolog functions are executed from the higher-level representation to the lower-level ones. At run time, the execution order of the added code is the opposite. That is, the machine-level prolog is executed first. In the case of i386, it saves the registers used in the function and sets up a new stack frame. Then the parameters are received and the profiling hooks are called.

Local Control Flow Analysis

A control-flow graph is built from basic blocks. A basic block is a sequence of instructions with only one entry and one exit. Type struct basic_block describes it. There is a pointer to the statement list that defines the basic block. Each basic block also has pointers to the previous and next BB, so the blocks can be linked in a list. Fields preds and succs give access to the control/data flow edges into and out of the block.

Function create_basic_block() takes the first and the last statements and inserts the new BB after a given one. make_edge() links the two BBs.

Use macro FOR_EACH_BB to traverse a CFG. Each function flow graph has an entry and an exit block accessed using ENTRY_BLOCK_PTR and EXIT_BLOCK_PTR respectively.

Function build_tree_cfg() takes a GIMPLE tree and generates the CFG. First, it initializes the CFG with two BBs: ENTRY_BLOCK and EXIT_BLOCK. It calls make_blocks(), then make_edges().
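The block chain and the edges described above can be modelled in a few lines of standalone C. This is a simplified sketch under stated assumptions, not GCC's data structure: struct toy_bb and the toy_* functions are hypothetical names, each block has at most two successors, and predecessor edges are omitted.

```c
#include <stddef.h>

#define MAX_SUCCS 2

/* Simplified stand-in for a basic block record. */
struct toy_bb {
    int index;
    struct toy_bb *prev_bb, *next_bb;   /* linear chain of blocks */
    struct toy_bb *succs[MAX_SUCCS];    /* outgoing control-flow edges */
    int n_succs;
};

/* Insert block bb into the chain right after block after. */
void toy_link_block_after(struct toy_bb *bb, struct toy_bb *after)
{
    bb->prev_bb = after;
    bb->next_bb = after->next_bb;
    if (after->next_bb != NULL)
        after->next_bb->prev_bb = bb;
    after->next_bb = bb;
}

/* Add an edge src -> dst; returns 0 when src has no free edge slot. */
int toy_make_edge(struct toy_bb *src, struct toy_bb *dst)
{
    if (src->n_succs == MAX_SUCCS)
        return 0;
    src->succs[src->n_succs++] = dst;
    return 1;
}

/* Walk the chain starting at the entry block and count the blocks,
   in the spirit of a FOR_EACH_BB traversal. */
int toy_count_blocks(const struct toy_bb *entry)
{
    int n = 0;

    for (const struct toy_bb *bb = entry; bb != NULL; bb = bb->next_bb)
        n++;
    return n;
}
```

Starting with an entry and an exit block and linking new blocks in between follows the order of steps that build_tree_cfg() is described as taking: blocks first, edges second.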

Function calls

Global Control-Flow Analysis

The functions of a file are used to generate a callgraph in file cgraphunit.c. The two relevant functions are cgraph_finalize_compilation_unit() which is called from function pop_file_scope() after the file has been parsed and cgraph_finalize_function() which is called from finish_function().

The effect of each function depends on the compilation mode. The unit-at-a-time mode instructs the compiler to build the callgraph only after every function has been parsed. When this option is not present, a function is converted as soon as it is parsed.

In the latter case cgraph_finalize_function() calls cgraph_analyze_function(), which converts the function to RTL. Otherwise, the function is queued in cgraph_nodes_queue. At the end, cgraph_finalize_compilation_unit() takes care of the queue. cgraph_nodes is the global variable representing the callgraph. Function dump_cgraph() allows one to print out the callgraph.

Parameter Passing

In this chapter we will find out how functions call each other. Typically, a function passes a number of parameters when it makes a call. A stack frame is created when the called function begins. It is possible, however, that the stack frame of the previous function gets reused. This type of function call is called a sibling call. When the function body is small, the run-time overhead of setting up a stack frame is high relative to the work done. In this case the callee function gets inlined into the parent function.

Function expand_call() takes a CALL_EXPR tree and generates an RTL expression. It has to decide the argument passing mode. struct arg_data contains the necessary information for each argument.

| Field Name | Explanation |

| tree tree_value | Tree node for this argument |

| enum machine_mode mode | Mode for value |

| rtx value | Current RTL value for argument, or 0 if it isn't precomputed |

| rtx initial_value | Initially computed RTL value for argument; only for const functions. |

| rtx reg | Register to pass this argument in, 0 if passed on stack |

| rtx tail_call_reg | Register to pass this argument in when generating tail call sequence |

| rtx parallel_value | If REG is a PARALLEL, this is a copy of VALUE pulled into the correct form for emit_group_move. |

| int unsignedp | If REG was promoted from the actual mode of the argument expression, indicates whether the promotion is sign- or zero-extended |

| int partial | Number of bytes to put in registers. 0 means put the whole argument in registers; it is also 0 if the argument is not passed in registers. |

| int pass_on_stack | Nonzero if argument must be passed on stack. Note that some arguments may be passed on the stack even though pass_on_stack is zero, just because FUNCTION_ARG says so |

| struct locate_and_pad_arg_data locate | Some fields packaged up for locate_and_pad_parm |

| rtx stack | Location on the stack at which parameter should be stored |

| rtx stack_slot | Location on the stack of the start of this argument slot |

| rtx save_area | Place that this stack area has been saved, if needed |

| rtx *aligned_regs | If an argument's alignment does not permit direct copying into registers, copy in smaller-sized pieces into pseudos. These are stored in a block pointed to by this field. |

| int n_aligned_regs | Number of word-sized pseudos made for aligned_regs |

Generating a function call is impossible without certain machine-specific information, for example the number of hardware registers of different types. A number of macros defined in each architecture .h file take care of connecting the middle-end with the back-end:

| Macro Name | Explanation |

| INIT_CUMULATIVE_ARGS | Initialize CUMULATIVE_ARGS data structure for a call to a function whose data type is FNTYPE. |

| FUNCTION_ARG | Define where to put the arguments to a function. Value is zero to push the argument on the stack, or a hard register in which to store the argument. |

| FUNCTION_ARG_ADVANCE | Update the data in CUM to advance over an argument. |

Function init_cumulative_args() in file i386.c takes care of this for the x86 architecture. It takes into account function attributes regparm and fastcall that a user might specify, in which case the number of available registers is set accordingly. However, if the function takes a variable number of arguments, then all parameters are passed on the stack.

Parameters' location is decided in function initialize_argument_information(). A machine-specific function_arg() returns the rtl for an argument if it goes into a register:

ret = gen_rtx_REG (mode, regno);

It is possible that a parameter is passed both on the stack and in a register, for example if the parameter's type is addressable.

Depending on certain conditions, a sibling call instruction chain is generated in addition to the normal chain. Let us consider the case when only the normal chain is generated. Variable rtx argblock is the address of the space preallocated for stack parameters (on machines that lack push insns), or 0 if the space is not preallocated.

A number of machine-specific variables shape the stack. ACCUMULATE_OUTGOING_ARGS instructs the compiler to preallocate, in the function prolog, enough bytes for all arguments of any function call. After that, function arguments are saved in that area without modifying the stack frame size. ACCUMULATE_OUTGOING_ARGS depends on variable target_flags, which in turn depends on the machine configuration and command-line options. For ACCUMULATE_OUTGOING_ARGS, the i386-specific variable

const int x86_accumulate_outgoing_args = m_ATHLON_K8 | m_PENT4 | m_NOCONA | m_PPRO;

and the command-line option -maccumulate-outgoing-args enable this feature. This means that it is enabled on a Pentium 4 and that push/pop instructions are not used to pass functions' parameters.

If we preallocated stack space, compute the address of each argument and store it into the ARGS array.

Function precompute_arguments() precomputes parameters as needed for a function call. It fills in the INITIAL_VALUE and VALUE fields for each precomputed argument.

Given a FNDECL and EXP, function rtx_for_function_call() returns an rtx suitable for use as a target address in a call instruction:

funexp = rtx_for_function_call (fndecl, addr);

Next, all register parameters are precomputed. It isn't safe to compute anything once we have started filling any specific hard regs:

precompute_register_parameters (num_actuals, args, &reg_parm_seen);

Now store (and compute if necessary) all non-register parms. These come before register parms, since they can require block-moves, which could clobber the registers used for register parms. Parms which have partial registers are not stored here, but we do preallocate space here if they want that.

store_one_arg (&args[i], argblock, flags,

adjusted_args_size.var != 0,

reg_parm_stack_space);

Do the register loads required for any wholly-register parms or any parms which are passed both on the stack and in a register. Their expressions were already evaluated.

load_register_parameters (args, num_actuals, &call_fusage, flags,

pass == 0, &sibcall_failure);

Finally, emit_call_1() generates instructions to call function FUNEXP, and optionally pop the results. The CALL_INSN is the first insn generated.

When the placement of the arguments is decided, variable struct args_size args_size saves the total size of the stack arguments. It records the size of a sequence of arguments as the sum of a tree expression and a constant. The tree part is necessary to handle arguments of variable size, for example array arguments whose size is not known at compile time. The C language does not allow variable-sized arguments.

One might wonder how the callee function finds out where the arguments arrive. It uses the same machine-specific information that the caller used. Rerunning INIT_CUMULATIVE_ARGS, FUNCTION_ARG, and FUNCTION_ARG_ADVANCE in the callee decides whether an argument arrives in a register or on the stack, identically to expand_call().
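The agreement between caller and callee can be illustrated with a toy model of the three macros. The sketch below is an assumption-laden simplification, not the i386 implementation: struct toy_cum_args and the toy_* functions are hypothetical, every argument is one word, and a variadic function gets no argument registers, as described above.

```c
/* Toy analogue of CUMULATIVE_ARGS: which argument registers remain. */
struct toy_cum_args {
    int nregs;   /* registers still available for arguments */
    int regno;   /* next register to hand out */
};

/* Analogue of INIT_CUMULATIVE_ARGS: regparm is the number of register
   arguments allowed; a variadic function gets none. */
void toy_init_cumulative_args(struct toy_cum_args *cum, int regparm,
                              int is_variadic)
{
    cum->nregs = is_variadic ? 0 : regparm;
    cum->regno = 0;
}

/* Analogue of FUNCTION_ARG: a register number, or -1 for "on the stack". */
int toy_function_arg(const struct toy_cum_args *cum)
{
    return cum->nregs > 0 ? cum->regno : -1;
}

/* Analogue of FUNCTION_ARG_ADVANCE: step past one word-sized argument. */
void toy_function_arg_advance(struct toy_cum_args *cum)
{
    if (cum->nregs > 0) {
        cum->nregs--;
        cum->regno++;
    }
}

/* Run the three steps over nargs arguments and record each location. */
void toy_assign_locations(int regparm, int is_variadic, int nargs, int *where)
{
    struct toy_cum_args cum;

    toy_init_cumulative_args(&cum, regparm, is_variadic);
    for (int i = 0; i < nargs; i++) {
        where[i] = toy_function_arg(&cum);
        toy_function_arg_advance(&cum);
    }
}
```

Because toy_assign_locations() depends only on the function's type information, running it once for the call site and once for the function body yields the same locations, which is the property the text relies on.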

Stackguard

Stackguard is implemented in the following files.

These files translate GIMPLE to RTL. They take advantage of the CFG to instrument the function prolog and epilog.

1) cfgexpand.c

Stackguard is created in function expand_used_vars(). Depending on the stackguard flag, either all arrays or character arrays only are allocated first.

tree_expand_cfg() takes care of the prolog when it calls stack_protect_prologue(). The corresponding function stack_protect_epilogue() is called from calls.c to take care of tail calls and from tree_expand_cfg().

2) function.c

stack_protect_prologue(), stack_protect_epilogue()

The functions use machine definition targetm.

3) targhooks.c

Contains default initializers of target architecture hooks.

default_stack_protect_guard() builds a VAR_DECL tree node that represents variable __stack_chk_guard.

default_stack_protector_fail() builds a call to function __stack_chk_fail().

These ASTs are converted into RTL using expand_expr_stmt(). The prolog and epilog functions add RTL expressions directly as well. For example, to detect a compromise, the function epilog generates a conditional statement that compares the canary word on the stack with its initial value:

emit_cmp_and_jump_insns (x, y, EQ, NULL_RTX, ptr_mode, 1, label);

where x and y are corresponding declaration RTLs:

x = validize_mem (DECL_RTL (cfun->stack_protect_guard));

y = validize_mem (DECL_RTL (guard_decl));
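In source-level terms, the comparison emitted in the epilog amounts to the check sketched below. This is a hypothetical model for illustration: struct toy_frame and the toy_protect_* functions are not GCC names, and a real protected frame is laid out by the compiler rather than declared as a struct.

```c
/* Toy frame: a local buffer followed by the canary word, so that a
   linear overflow of buf reaches the canary first. */
struct toy_frame {
    char buf[8];
    unsigned long canary;
};

/* Prolog side: copy the guard value into the frame. */
void toy_protect_prologue(struct toy_frame *f, unsigned long guard)
{
    f->canary = guard;
}

/* Epilog side: returns 1 if the canary still equals the guard and 0
   if it was modified in the meantime. */
int toy_protect_epilogue(const struct toy_frame *f, unsigned long guard)
{
    return f->canary == guard;
}
```

In the real mechanism a mismatch leads to the failure call built by default_stack_protector_fail() instead of a return value.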

Stackguard implements a certain amount of static analysis to make sure that suspicious functions with arguments of fixed length do not have vulnerabilities. The underlying mechanism is that of builtins. Stackguard has file strcpy.h that redefines functions of interest, for example strcpy(). The new definition uses macro __ssp_bos(), which wraps builtin __builtin_object_size() to find out the object size, and builtin __builtin___strcpy_chk():

#define __ssp_bos(ptr) __builtin_object_size (ptr, __SSP_FORTIFY_LEVEL > 1)

#define strcpy(dest, src) \
  ((__ssp_bos (dest) != (size_t) -1) \
   ? __builtin___strcpy_chk (dest, src, __ssp_bos (dest)) \
   : __strcpy_ichk (dest, src))

The builtins are evaluated at compile time. Function warning(), called in maybe_emit_chk_warning(), prints out a message if the destination buffer overflows.

When Stackguard is unable to find out statically whether the buffer is overrun, function __strcpy_chk() is called at run time. Library libssp provides the function. However, the protected program does not require linking with this library explicitly. Instead, GCC instructs the loader to use a shared library.
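The run-time check can be pictured with the following standalone sketch. It is not the libssp source: toy_strcpy_chk is a hypothetical name, and it reports an overflow by returning NULL so that the behaviour is easy to observe, whereas the real library routine aborts the program.

```c
#include <stddef.h>
#include <string.h>

/* Copy src into dest only if it fits in dest_size bytes, including the
   terminating NUL. Returns dest on success and NULL when the copy
   would overflow the destination. */
char *toy_strcpy_chk(char *dest, const char *src, size_t dest_size)
{
    size_t needed = strlen(src) + 1;

    if (needed > dest_size)
        return NULL;
    memcpy(dest, src, needed);
    return dest;
}
```

The third argument plays the role of the value that __ssp_bos (dest) supplies in the macro: the size of the destination object as far as the compiler could determine it.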

GEM Framework

Hooks

The GEM framework is designed to facilitate the development of compiler extensions. The idea of GEM is similar to the idea of Linux Security Modules (LSM), a project that defines hooks throughout the Linux kernel that allow one to enforce a security policy.

GEM defines a number of hooks throughout GCC's source code. It is implemented as a patch to GCC. With GEM, a compiler extension is developed as a stand-alone program. It is compiled into a dynamically-linked module which is specified as the command line argument when GCC is invoked. GCC loads the module and calls its initialization function. The module then registers its hooks that are call-back functions in GCC.

In addition to the compiler hooks, GEM provides macros and functions that simplify extension development. In this chapter we will first introduce the hooks that GEM framework adds to GCC. Then we describe the typical issues in extension programming.

The project home page is at http://research.alexeysmirnov.name/gem

GEM adds several hooks throughout GCC source code. New hooks are added to GEM as necessary.

- Hook gem_handle_option to function handle_option() which processes each command line option. The hook takes the current option as its argument. If the hook returns value GEM_RETURN then GCC ignores the option.

- Hook gem_c_common_nodes_and_builtins which is called after all standard types are created. The GCC extension can create additional types.

- Hook gem_macro_name allows one to save the name of the macro being defined. Another GEM hook gem_macro_def is called when the macro definition is parsed. Using the macro name of the new macro definition it is possible to re-define the macro. This hook is added to function create_iso_definition().

- Hooks gem_start_decl and gem_start_function are called when a function or variable declaration/definition starts.

- Hook gem_build_function_call allows one to modify the name and the arguments of a function call.

- Hook gem_finish_function is inserted into finish_function(), which is called from the grammar file. The compiler extension receives the body of the function before it is translated into RTL.

- Hooks gem_output_asm_insn and gem_final_start_function are added to function output_asm_insn() which is called for each instruction of the assembly code and function final_start_function() called when the assembly code is written to the file, respectively. The former hook receives the text that is written to the file which allows it to modify the output. The latter hook can modify function's prolog.

| Take home: | GEM hooks are defined mostly at the AST level. A few hooks are defined at the assembly level. The new hooks are added as necessary. |
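The registration pattern behind the hooks can be sketched in standalone C as a table of function pointers that the compiler consults at fixed points. Everything below is hypothetical except the idea itself: the toy_* names, the option prefix "-fgem-", and the numeric return codes are assumptions made for illustration, and the real GEM interface may differ.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical return codes; TOY_GEM_RETURN models the GEM_RETURN
   value mentioned in the text. */
enum { TOY_GEM_CONTINUE = 0, TOY_GEM_RETURN = 1 };

/* One slot per hook; a NULL slot means no extension registered it. */
struct toy_hooks {
    int (*handle_option)(const char *opt);
};

static struct toy_hooks toy_hook_table;   /* zero-initialised: no hooks */

/* Extension side: claim every option that starts with "-fgem-". */
static int toy_ext_handle_option(const char *opt)
{
    return strncmp(opt, "-fgem-", 6) == 0 ? TOY_GEM_RETURN : TOY_GEM_CONTINUE;
}

/* Extension side: the module's initialisation function registers hooks. */
void toy_module_init(void)
{
    toy_hook_table.handle_option = toy_ext_handle_option;
}

/* Compiler side: returns 1 if the compiler itself should process opt
   and 0 if a registered hook asked for the option to be ignored. */
int toy_compiler_handles(const char *opt)
{
    if (toy_hook_table.handle_option != NULL
        && toy_hook_table.handle_option(opt) == TOY_GEM_RETURN)
        return 0;
    return 1;
}
```

Leaving unregistered slots NULL keeps the hook points cheap when no extension is loaded.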

Traversing an AST

When the function's AST is constructed, one can instrument it. GEM's gem_finish_function hook receives the AST of a function. The idea is to traverse the AST and instrument the AST nodes as necessary. Function walk_tree() takes the AST, the callback function, optional data that is passed to the callback (NULL by default), and an optional set of already visited nodes (NULL by default). The callback function is called for each node of the AST before its operands are traversed. It receives a pointer to the walk_subtrees variable; if the callback sets this variable to zero, then the operands of the current node are not processed.

The following code demonstrates the idea:

static tree walk_tree_callback(tree *tp, int *walk_subtrees, void *data) {

tree t=*tp;

enum tree_code code = TREE_CODE(t);

switch (code) {

case CALL_EXPR:

instrument_call_expr(t);

break;

case MODIFY_EXPR:

instrument_modify_expr(t);

break;

}

return NULL_TREE; /* a NULL_TREE result tells walk_tree() to continue the traversal */

}

walk_tree(&t_body, walk_tree_callback, NULL, NULL);

| Take home: | Function walk_tree() traverses an AST applying user-defined callback function to each tree node. |
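The traversal order and the effect of the walk_subtrees flag can be tried out on a toy tree without building GCC. The sketch below is a simplified model, not GCC's walk_tree(): struct toy_node, toy_walk_tree, and toy_count_cb are hypothetical, each node has at most two operands, and the callback cannot stop the whole walk.

```c
#include <stddef.h>

/* Toy tree node: a code and up to two operands. */
struct toy_node {
    int code;
    struct toy_node *op[2];
};

typedef void (*toy_walk_fn)(struct toy_node *n, int *walk_subtrees, void *data);

/* Pre-order walk: call fn on the node first, then visit its operands
   unless the callback cleared *walk_subtrees. */
void toy_walk_tree(struct toy_node *n, toy_walk_fn fn, void *data)
{
    int walk_subtrees = 1;

    if (n == NULL)
        return;
    fn(n, &walk_subtrees, data);
    if (!walk_subtrees)
        return;
    for (int i = 0; i < 2; i++)
        toy_walk_tree(n->op[i], fn, data);
}

/* Example callback: count visited nodes and refuse to descend into
   nodes whose code is negative. */
void toy_count_cb(struct toy_node *n, int *walk_subtrees, void *data)
{
    ++*(int *) data;
    if (n->code < 0)
        *walk_subtrees = 0;
}
```

Clearing the flag prunes only the operands of the current node; siblings are still visited.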

Instrumenting an AST

In this section we describe functions that create new tree nodes and how to add the new nodes to an AST.

Lookup of a Declaration in the Symbol Table

void gem_find_symtab(tree *t_var, char *name) {

tree t_ident = get_identifier(name);

if (t_ident) *t_var = lookup_name(t_ident); else *t_var=NULL_TREE;

}

Building Tree Nodes

The walk_tree callback function can instrument the AST. Functions build1() and build() construct new tree nodes. The former function takes one operand, the latter one takes more than one operand. The following code computes the address of the operand, same as '&' C operator:

t = build1(ADDR_EXPR, build_pointer_type(TREE_TYPE(t)), t);

The following example refers to an array element arr[0]:

t = build(ARRAY_REF, integer_type_node, arr, integer_zero_node);

The following example builds an integer constant:

t = build_int_cst(NULL_TREE, 123);

Building a string constant is more difficult. The following example demonstrates the idea:

tree gem_build_string_literal(int len, const char *str) {

tree t, elem, index, type;

t = build_string (len, str);

elem = build_type_variant (char_type_node, 1, 0);

index = build_index_type (build_int_cst (NULL_TREE, len - 1));

type = build_array_type (elem, index);

TREE_TYPE (t) = type;

TREE_READONLY(t)=1;

TREE_STATIC(t)=1;

TREE_CONSTANT(t)=1;

type=build_pointer_type (type);

t = build1 (ADDR_EXPR, type, t);

t = build1 (NOP_EXPR, build_pointer_type(char_type_node), t);

return t;

}

To build a function call one needs to find the function's declaration and build the list of arguments. Then the CALL_EXPR is constructed:

gem_find_symtab(&t_func_decl, "func");

t_arg1 = build_tree_list(NULL_TREE, arg1);

t_arg2 = build_tree_list(NULL_TREE, arg2);

...

TREE_CHAIN(t_arg1)=t_arg2;

...

TREE_CHAIN(t_argn)=NULL_TREE;

t_call = build_function_call(t_func_decl, t_arg1);

If you want to build a list of statements { stmt1; stmt2; ... }, then you need to use function append_to_statement_list():

tree list=NULL_TREE;

for (i=0; i<num_stmt; i++) {

BUILD_FUNC_CALL1(t_call, t_send, t_arr[i], NULL_TREE);

append_to_statement_list(t_call, &list);

}

Adding Nodes to a Tree

GCC 4.1 has an interface, implemented in file tree-iterator.c, that allows one to add a chain of nodes into another chain of nodes. Functions tsi_start() and tsi_last() create a tree statement iterator that points to the first or the last tree in the list, respectively. Functions tsi_link_before() and tsi_link_after() link a statement using the iterator either before or after the current statement. There is also function append_to_statement_list() that adds a node to a list. If the specified list argument is NULL_TREE, then a new statement list is allocated.

Building Function and Variable Declarations

A global declaration is added in hook gem_c_common_nodes_and_builtins(). In the following example we build a structure type and create a global variable of this type. The structure has a field of type unsigned int and a function pointer field.

t_log = make_node(RECORD_TYPE);

decl_chain = NULL_TREE;

field_decl = build_decl(FIELD_DECL, get_identifier("addr"), unsigned_type_node);

TREE_CHAIN(field_decl)=decl_chain;

decl_chain=field_decl;

DECL_FIELD_CONTEXT(decl_chain) = t_log;

...

t_func_type = build_function_type_list(void_type_node, unsigned_type_node, NULL_TREE);

field_decl = build_decl(FIELD_DECL, get_identifier("add_addr"), build_pointer_type(t_func_type));

TREE_CHAIN(field_decl)=decl_chain;

decl_chain=field_decl;

DECL_FIELD_CONTEXT(decl_chain) = t_log;

...

TYPE_FIELDS(t_log) = nreverse(decl_chain);

layout_type(t_log);

pushdecl(build_decl(TYPE_DECL, get_identifier("log_t"), t_log));

decl = build_decl(VAR_DECL, get_identifier("log"), build_pointer_type(t_log));

DECL_EXTERNAL(decl)=1;

pushdecl(decl);

When to Instrument

In this section we will describe when each of GEM hooks is used.

- Add new function and type declarations in hook gem_c_common_nodes_and_builtins.

- Instrument an AST after it is parsed in hook gem_finish_function.

- Modify attributes of a declaration in hooks gem_start_decl and gem_finish_decl. Let us say we would like to replace local array declarations char arr[10] with a heap array char *arr=(char*)malloc(10);

void l2h_start_decl(void *p_decl, void *p_declspecs, int initialized, void *p_attr) {

struct c_declarator *decl = *((struct c_declarator**)p_decl);

if (current_function_decl == NULL_TREE) return;

if (decl->kind == cdk_array) {

decl->kind = cdk_pointer;

decl->u.pointer_quals = 0;

}

}

void l2h_finish_decl(tree decl, tree *init, tree spec) {

...

gem_find_symtab(&t_malloc, "malloc");

BUILD_FUNC_CALL1(t_call, t_malloc, build_int_cst(NULL_TREE, size), NULL_TREE);

*init = build1(NOP_EXPR, build_pointer_type(char_type_node), t_call);

DECL(decl) = build_int_cst(NULL_TREE, 0); // if this field is NULL the init is ignored

}

- Replace function call with a proxy function

Function Prolog/Epilog

The assembly instructions are written to the assembly file:

#define OUTPUT_ASM_INST(inst) \
  do { \
    const char *p = (inst); \
    putc('\t', asm_out_file); \
    while (*p) putc(*p++, asm_out_file); /* copy the text verbatim */ \
    putc('\n', asm_out_file); \
  } while (0)

OUTPUT_ASM_INST("pushl %eax");

OUTPUT_ASM_INST("popl %eax");

| Take home: | Assembly instructions are added to function prolog and epilog using hooks gem_output_asm_insn and gem_final_start_function. |

C Function Overloading

Function overloading in C

The C function overloading extension aims at bringing to the C language a C++ feature that allows one to use functions with the same name but different argument types. The cfo/test.c example of GEM demonstrates this feature. The functions

void ec_aa_add(int from, char *to);

void ec_aa_add(int from, int to);

...

are used to add an element to a pool data structure.

The idea behind the implementation of the extension is to rewrite each function declaration so that the new name includes the type information of function's arguments. In the above case, the modified names are ec_aa_add_int_char_ptr and ec_aa_add_int_int. The compilation continues normally with the updated names.

Because of the above modification, the call names need modification as well. The renaming takes into account the types of the arguments so that the appropriate function is called. For example, the compiler will modify

ec_aa_add(1,2);

to

ec_aa_add_int_int(1,2);

Three hooks are used in this extension. Functions cfo_start_decl() and cfo_start_function() intercept declarations. They call cfo_alias_decl(), which replaces the name using the argument types. To preserve the library code for programs that do not use the CFO extension, the following technique is used. If the function name is encountered for the first time, an alias to its old name is created so that one can invoke the function using either of them. Thus, the legacy code will use the old name, while the CFO-compiled code will use the type-augmented name. Finally, the declaration name is updated to include the type information.

Hook cfo_build_function_call() is invoked from the parser when a new function call is found. It replaces the name of callee function with the name that takes into account the types of actual-in arguments.
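The renaming scheme itself is easy to reproduce outside the compiler. The following sketch builds a type-augmented name from a base name and a list of type spellings. It is an illustration under assumptions: toy_mangle is a hypothetical helper, and the type spellings such as "int" and "char_ptr" are passed in ready-made, whereas the extension derives them from GCC type nodes.

```c
#include <stddef.h>
#include <string.h>

/* Write "base_type1_type2..." into out, which has room for out_size
   bytes. Returns 0 on success and -1 if the result does not fit. */
int toy_mangle(char *out, size_t out_size, const char *base,
               const char *const *arg_types, size_t nargs)
{
    size_t used = strlen(base);

    if (used >= out_size)
        return -1;
    memcpy(out, base, used + 1);

    for (size_t i = 0; i < nargs; i++) {
        size_t len = strlen(arg_types[i]);

        /* one byte for '_', len bytes for the type, one for the NUL */
        if (len + 2 > out_size - used)
            return -1;
        out[used++] = '_';
        memcpy(out + used, arg_types[i], len + 1);
        used += len;
    }
    return 0;
}
```

Applying the same function to the declared parameter types and to the types of the actual arguments at a call site produces matching names, which is what lets the declaration hooks and the call hook agree.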

Return Address Defense

Return Address Defense

A prerequisite to understanding the proposed extension is the following paper:

Tzi-Cker Chiueh, Fu-Hau Hsu, "RAD: A Compile-time Solution to Buffer Overflow Attacks," 21st IEEE International Conference on Distributed Computing Systems (ICDCS), Phoenix, Arizona, USA, April 2001.

http://www.ecsl.cs.sunysb.edu/tr/TR96.pdf

The paper proposed to instrument function prolog and epilog. The return address is saved in a well-protected buffer so that overwriting stack frame does not hurt it. The copy of the address is cross-checked with the one on the stack to detect a possible attack.

Implementing the proposed method has a number of difficulties. Different architectures have different stack frame layout. For example, the x86 prolog has the code to save clobbered registers which is generated in the machine-specific component of GCC. Therefore, instrumenting prolog at the RTL level will not take into account those registers because their number is unknown at that time. There are two approaches to this problem. The first is to instrument prolog at the machine level which is not portable across different architectures. The second approach is to instrument the call-site instead.

The idea of the proposed implementation is to find an approximation of the return address using the address of a code label immediately following the call instruction. It is also possible to find the address on the stack where the return address is written, using the number of actual arguments that are pushed on the stack. In the case of the x86 frame layout, the space is reserved at frame creation time; therefore, the stack pointer is not modified when a function is called. The return address approximation and the stack pointer are saved in a well-protected buffer, similarly to the original RAD approach. The following code snippet illustrates the idea:

func1() {

...

jmp L2

L1: // the return address is on stack

push $esp // we also need stack pointer

rad_prolog() // save the two arguments in a safe buffer

add $esp, 8 // make sure no traces left

func2(); // the original call-site

jmp L3

L2: call L1

L3: ....

When the instrumented code is executed, the control flow is directed to a label after the original call instruction. At that point, a call instruction saves the current EIP value and directs the control flow back to label L1. Therefore, the return address approximation argument is already on stack. We also need to push the ESP as the second argument. Function rad_prolog adds the pair to a safe buffer. The arguments are popped and the original function is called. The added call instruction is jumped over upon return.

We also need to instrument function epilog. GIMPLE level works in this case because there is a TRY_FINALLY_EXPR code. The most recent pair of return address and its location in the well-protected buffer are used to validate the return address on the stack. An alarm is generated in case the two values mismatch.

This extension does not use any machine-specific information. Therefore, it is portable across different architectures, even though we were unable to test it under anything except x86.
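The bookkeeping done by the prolog and epilog instrumentation can be modelled as a small stack of saved pairs. The sketch below is hypothetical and simplified: the toy_rad_* names are not taken from the extension, the buffer is an ordinary static array rather than a write-protected region, and a mismatch is reported through the return value instead of raising an alarm.

```c
#include <stddef.h>

#define TOY_RAD_DEPTH 64

/* One saved pair: the expected return address and the stack slot that
   holds the live copy of it. */
struct toy_rad_entry {
    const void *ret_addr;
    const void *const *slot;
};

static struct toy_rad_entry toy_rad_buf[TOY_RAD_DEPTH];
static size_t toy_rad_top;

/* Prolog side: remember the pair. Returns 0 on success, -1 if full. */
int toy_rad_prolog(const void *ret_addr, const void *const *slot)
{
    if (toy_rad_top == TOY_RAD_DEPTH)
        return -1;
    toy_rad_buf[toy_rad_top].ret_addr = ret_addr;
    toy_rad_buf[toy_rad_top].slot = slot;
    toy_rad_top++;
    return 0;
}

/* Epilog side: pop the most recent pair and compare it with what the
   slot holds now. Returns 1 if intact, 0 on mismatch, -1 if empty. */
int toy_rad_epilog(void)
{
    if (toy_rad_top == 0)
        return -1;
    toy_rad_top--;
    return *toy_rad_buf[toy_rad_top].slot == toy_rad_buf[toy_rad_top].ret_addr;
}
```

The last-in, first-out order matches nested calls: the most recent pair always belongs to the function that is about to return.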

Adding Syntactic Sugar

toString() method for each structure as in Java

Invoke a block of code from a function as in Ruby

The Linux implementation of lists allows one to invoke a block of code on each element of the list:

list_for_each_prev(pos, head) {

struct nfs_page *p = nfs_list_entry(pos);

if (page_index(p->wb_page) < pg_idx)

break;

}

list_for_each_prev takes the code in the braces as a parameter. The trick is to use a macro that expands to a for() loop whose body becomes the code in the braces. The goal of this project is to allow programmers to use code blocks in function calls.
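The macro trick can be reproduced in a few lines. The sketch below uses a hypothetical singly linked list and a hypothetical macro toy_list_for_each; it is not the Linux list implementation, only the same expansion technique.

```c
#include <stddef.h>

struct toy_list {
    int value;
    struct toy_list *next;
};

/* The macro expands to a for() header; the braces that follow it at
   the point of use become the loop body. */
#define toy_list_for_each(pos, head) \
    for ((pos) = (head); (pos) != NULL; (pos) = (pos)->next)

/* Sum the values, stopping at the first negative one. */
int toy_sum_until_negative(struct toy_list *head)
{
    struct toy_list *pos;
    int sum = 0;

    toy_list_for_each(pos, head) {
        if (pos->value < 0)
            break;
        sum += pos->value;
    }
    return sum;
}
```

Because the block is an ordinary loop body after expansion, break and continue work inside it, which is something a block passed to a real function call would need extra support for.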

Dereference function results when a structure is returned

C allows one to dereference the return value of a function if it is a pointer to a structure:

get_struct()->field=0;

If the function returns the structure, not a pointer to it, then a compile-time error is generated:

get_struct().field=0;

> request for member `field' in something not a structure or union

This extension addresses the problem of dereferencing structures that are return values.

Use functions to initialize a variable

When a variable with static storage duration (for example, a global variable) is defined and initialized, the initializer must be a constant expression. You will get an error if you try to use a function call, no matter what the function is:

int getint() { return 1; }

int i=getint();

> initializer element is not constant

With this extension, the function that initializes the variable is called when the variable is used.

Default values of function arguments as in C++

void func(int a=0) {

printf("a=%d\n", a);

}

int main() {

func();

}

> syntax error before '=' token

Reference parameters as in C++

void test(int &a, int &b);

int x,y;

test(x,y);

GCC switches in object file

Type information at run-time

The C language provides no type information at run time. The idea is to allow a program to obtain the names and offsets of a structure's fields at run time, the symbolic name of an enum constant, and so on. For example, instead of writing

enum tree_code code;

...

switch (code) {

case VAR_DECL:

printf("VAR_DECL\n");

break;

case BLOCK:

printf("BLOCK\n");

break;

...

}

one could write

printf("%s\n", type_info(code).name);

Improving Code Style

These exercises are from http://gcc.gnu.org/projects/beginner.html

Break up enormous source files

Not terribly hard. Watch out for file-scope globals. Suggested targets:

| File Size | File Name |
|-----------|-----------|
| 494K | java/parse.y |
| 413K | combine.c |
| 408K | dwarf2out.c |
| 375K | cp/pt.c |
| 367K | fold-const.c |
| 356K | loop.c |

There are several other files in this size range, which I have left out because touching them at all is unwise (reload, the Fortran front end). You can try, but I am not responsible for any damage to your sanity which may result.

Break up enormous functions

This is in the same vein as the above, but significantly harder, because you must take care not to change any semantics. The general idea is to extract independent chunks of code to their own functions. Any inner block that has a half dozen local variable declarations at its head is a good candidate. However, watch out for places where those local variables communicate information between iterations of the outer loop!

With even greater caution, you may be able to find places where entire blocks of code are duplicated between large functions (probably with slight differences) and factor them out.

Break up enormous conditionals

Harder still, because it's unlikely that you can tell what the conditional tests, and even less likely that you can tell if that's what it's supposed to test. It is definitely worth the effort if you can hack it, though. An example of the sort of thing we want changed:

if (mode1 == VOIDmode

|| GET_CODE (op0) == REG || GET_CODE (op0) == SUBREG

|| (modifier != EXPAND_CONST_ADDRESS

&& modifier != EXPAND_INITIALIZER

&& ((mode1 != BLKmode && ! direct_load[(int) mode1]

&& GET_MODE_CLASS (mode) != MODE_COMPLEX_INT

&& GET_MODE_CLASS (mode) != MODE_COMPLEX_FLOAT)

/* If the field isn't aligned enough to fetch as a memref,

fetch it as a bit field. */

|| (mode1 != BLKmode

&& SLOW_UNALIGNED_ACCESS (mode1, alignment)

&& ((TYPE_ALIGN (TREE_TYPE (tem))

< GET_MODE_ALIGNMENT (mode))

|| (bitpos % GET_MODE_ALIGNMENT (mode) != 0)))

/* If the type and the field are a constant size and the

size of the type isn't the same size as the bitfield,

we must use bitfield operations. */

|| ((bitsize >= 0

&& (TREE_CODE (TYPE_SIZE (TREE_TYPE (exp)))

== INTEGER_CST)

&& 0 != compare_tree_int (TYPE_SIZE (TREE_TYPE (exp)),

bitsize)))))

|| (modifier != EXPAND_CONST_ADDRESS

&& modifier != EXPAND_INITIALIZER

&& mode == BLKmode

&& SLOW_UNALIGNED_ACCESS (mode, alignment)

&& (TYPE_ALIGN (type) > alignment

|| bitpos % TYPE_ALIGN (type) != 0)))

{

Delete garbage

#if 0 blocks that have been there for years, unused functions, unused entire files, dead configurations, dead Makefile logic, dead RTL and tree forms, and on and on and on. Depending on what it is, it may not be obvious if it's garbage or not. Go for the easy ones first.

Use predicates for RTL objects

GCC has simple predicates to see if a given rtx is of some specific class. These predicates simply look at the rtx_code of the given RTL object and return nonzero if the predicate is true. For example, if an rtx represents a register, then REG_P (rtx) is nonzero.

Unfortunately, a lot of code in the middle end and in the back ends does not use these predicates and instead compares the rtx_code in place: (GET_CODE (rtx) == REG). Find all the places where such comparisons can be replaced with a predicate. Also, for many common comparisons there is no predicate yet. See which ones are worth having a predicate for, and add them. You can find a number of suggestions in the mailing list archives.

Security Enhancements

Return address protection (RAD)

Repair of control-hijacking attacks (DIRA)

Array bounds checking using segmentation hardware (CASH)

Detecting integer overflows (DIVINE)

Dynamic Information Flow Tracking (GDIF)

Links

Contributors

GNU Free Documentation License

As of July 15, 2009 Wikibooks has moved to a dual-licensing system that supersedes the previous GFDL only licensing. In short, this means that text licensed under the GFDL only can no longer be imported to Wikibooks, retroactive to 1 November 2008. Additionally, Wikibooks text might or might not now be exportable under the GFDL depending on whether or not any content was added and not removed since July 15.

Version 1.3, 3 November 2008 Copyright (C) 2000, 2001, 2002, 2007, 2008 Free Software Foundation, Inc. <http://fsf.org/>

Everyone is permitted to copy and distribute verbatim copies of this license document, but changing it is not allowed.

0. PREAMBLE

The purpose of this License is to make a manual, textbook, or other functional and useful document "free" in the sense of freedom: to assure everyone the effective freedom to copy and redistribute it, with or without modifying it, either commercially or noncommercially. Secondarily, this License preserves for the author and publisher a way to get credit for their work, while not being considered responsible for modifications made by others.

This License is a kind of "copyleft", which means that derivative works of the document must themselves be free in the same sense. It complements the GNU General Public License, which is a copyleft license designed for free software.

We have designed this License in order to use it for manuals for free software, because free software needs free documentation: a free program should come with manuals providing the same freedoms that the software does. But this License is not limited to software manuals; it can be used for any textual work, regardless of subject matter or whether it is published as a printed book. We recommend this License principally for works whose purpose is instruction or reference.

1. APPLICABILITY AND DEFINITIONS

This License applies to any manual or other work, in any medium, that contains a notice placed by the copyright holder saying it can be distributed under the terms of this License. Such a notice grants a world-wide, royalty-free license, unlimited in duration, to use that work under the conditions stated herein. The "Document", below, refers to any such manual or work. Any member of the public is a licensee, and is addressed as "you". You accept the license if you copy, modify or distribute the work in a way requiring permission under copyright law.

A "Modified Version" of the Document means any work containing the Document or a portion of it, either copied verbatim, or with modifications and/or translated into another language.

A "Secondary Section" is a named appendix or a front-matter section of the Document that deals exclusively with the relationship of the publishers or authors of the Document to the Document's overall subject (or to related matters) and contains nothing that could fall directly within that overall subject. (Thus, if the Document is in part a textbook of mathematics, a Secondary Section may not explain any mathematics.) The relationship could be a matter of historical connection with the subject or with related matters, or of legal, commercial, philosophical, ethical or political position regarding them.

The "Invariant Sections" are certain Secondary Sections whose titles are designated, as being those of Invariant Sections, in the notice that says that the Document is released under this License. If a section does not fit the above definition of Secondary then it is not allowed to be designated as Invariant. The Document may contain zero Invariant Sections. If the Document does not identify any Invariant Sections then there are none.

The "Cover Texts" are certain short passages of text that are listed, as Front-Cover Texts or Back-Cover Texts, in the notice that says that the Document is released under this License. A Front-Cover Text may be at most 5 words, and a Back-Cover Text may be at most 25 words.

A "Transparent" copy of the Document means a machine-readable copy, represented in a format whose specification is available to the general public, that is suitable for revising the document straightforwardly with generic text editors or (for images composed of pixels) generic paint programs or (for drawings) some widely available drawing editor, and that is suitable for input to text formatters or for automatic translation to a variety of formats suitable for input to text formatters. A copy made in an otherwise Transparent file format whose markup, or absence of markup, has been arranged to thwart or discourage subsequent modification by readers is not Transparent. An image format is not Transparent if used for any substantial amount of text. A copy that is not "Transparent" is called "Opaque".

Examples of suitable formats for Transparent copies include plain ASCII without markup, Texinfo input format, LaTeX input format, SGML or XML using a publicly available DTD, and standard-conforming simple HTML, PostScript or PDF designed for human modification. Examples of transparent image formats include PNG, XCF and JPG. Opaque formats include proprietary formats that can be read and edited only by proprietary word processors, SGML or XML for which the DTD and/or processing tools are not generally available, and the machine-generated HTML, PostScript or PDF produced by some word processors for output purposes only.

The "Title Page" means, for a printed book, the title page itself, plus such following pages as are needed to hold, legibly, the material this License requires to appear in the title page. For works in formats which do not have any title page as such, "Title Page" means the text near the most prominent appearance of the work's title, preceding the beginning of the body of the text.

The "publisher" means any person or entity that distributes copies of the Document to the public.

A section "Entitled XYZ" means a named subunit of the Document whose title either is precisely XYZ or contains XYZ in parentheses following text that translates XYZ in another language. (Here XYZ stands for a specific section name mentioned below, such as "Acknowledgements", "Dedications", "Endorsements", or "History".) To "Preserve the Title" of such a section when you modify the Document means that it remains a section "Entitled XYZ" according to this definition.