Introduction to Programming Languages/Print version

The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Introduction_to_Programming_Languages

Preface

There exists an enormous variety of programming languages in use today. Testimony to this fact are the more than 650 different programming languages listed in Wikipedia. A good understanding of this great diversity is important for many reasons. First, it opens new perspectives to computer scientists. Problems that at first seem hard in one language might have a very easy solution in another. Thus, knowing which language to use in a given domain might considerably decrease the effort required to build an application.

Furthermore, programming languages are a fascinating topic in their own right. Their history blends together with the history of computer science. Many of the recipients of the Turing Award, such as John McCarthy and John Backus, were directly involved in the design of programming languages. And some of the most vibrant discussions in computer science were motivated by the design of programming languages. For instance, many modern programming languages no longer provide the goto command. This was not the case in early designs. It took many years of discussion, plus a letter signed by Edsger Dijkstra, himself a Turing Award laureate, to ostracize the goto command into oblivion.

This wikibook is an attempt to describe a bit of the programming languages zoo. The material presented here has been collected from blogs, language manuals, forums and many other sources; however, many examples have been taken from Dr. Webber's book, Modern Programming Languages. We therefore follow the organization used in the slides that he has prepared for that book. We start by describing the ML programming language. Next, we move on to Python and finally to Prolog. We use each of these languages to introduce fundamental notions related to the design and implementation of general-purpose programming languages.

Programming Language Paradigms

Programming languages can be roughly classified into two categories: imperative and declarative. This classification, however, is not strict. It only means that some programming languages foster a particular way of developing programs more naturally than others. Imperative programming puts emphasis on how to do something, while declarative programming expresses what the solution to a given problem is. Declarative languages can be further divided into functional and logic languages. Functional languages treat computation as the evaluation of mathematical functions, whereas logic languages describe computation in terms of axioms and derivation rules.

Imperative Languages

Imperative languages follow the model of computation described by the Turing Machine; hence, they maintain the fundamental notion of a state. In that formalism, the state of a program is given by the configuration of the memory tape and the current position in the table of rules. In a modern computer, the state is given by the values stored in memory and in the registers. In particular, a special register, the program counter, defines the next instruction to be executed. Instructions are a very important concept in imperative programming. An imperative program issues orders to the machine that define the next actions to be taken. These actions change the state of the machine. In other words, imperative programs are similar to a recipe in which the steps necessary to do something are defined and ordered. Instructions are combined to make up commands. There are three main categories of commands: assignments, branches and sequences.

- An assignment changes the state of the machine, by updating its memory with a new value. Usually an assignment is represented by a left-hand side, which denotes a memory location, and a right-hand side, which indicates the value that will be stored there.

- A branch changes the state of the program by updating the program counter. Examples of branches include commands such as if, while, for, switch, etc.

- Sequences are used to chain commands together, hence building more expressive programs. Some languages require a special symbol to indicate the termination of commands. In C, for instance, we must finish them with a semicolon. Other languages, such as Pascal, require a special symbol in between commands that form a sequence. In Pascal this special symbol is again the semicolon.

The program below, written in C, illustrates these concepts. This function describes how to get the factorial of an integer number. The first thing that we must do to accomplish this objective is to assign the number 1 into the memory cell called f. Next, while the memory cell called n holds a value that is greater than 1, we must update f with the value of that cell multiplied by the current value of n, and we must decrease by one the value stored at n. At the end of these iterations we have the factorial of the input n stored in f.

int fact(int n) {
    int f = 1;
    while (n > 1) {
        f = f * n;
        n = n - 1;
    }
    return f;
}

Imperative languages are the dominant programming paradigm in industry. There are many hypotheses that explain this dominance; for a good discussion, we recommend Philip Wadler's excellent paper. Examples of imperative languages include C, Pascal, Basic and Assembler.

There are also multi-paradigm languages, such as C++ and JavaScript, that partially or even fully support the imperative paradigm. Because they mix paradigms, however, they are not good examples: real-world use of these languages would not fit the description above.

Declarative Languages

A program in a declarative language declares a truth. In other words, such a program describes a proof that some truth holds. These programs are much more about "what" the solution to a problem is than "how" to get that solution. They are made up of expressions, not commands. An expression is any valid sentence in the programming language that returns a value. These languages have a very important characteristic: referential transparency. This property implies that any expression can be replaced by its value. For instance, a function call that computes the factorial of 5 can be replaced by its result, 120. Declarative languages are further divided into two very important categories: functional languages and logic languages.
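Referential transparency can be illustrated with a short sketch. Python is not a declarative language, so the example below only mimics the property; the names `fact` and `impure_counter` are hypothetical and chosen for illustration.

```python
def fact(n):
    """Pure function: the result depends only on the argument."""
    return 1 if n < 2 else n * fact(n - 1)

# Because fact is pure, the call fact(5) can be replaced by its value, 120,
# without changing the meaning of the surrounding expression.
with_call = fact(5) + fact(5)
with_value = 120 + 120

calls = 0

def impure_counter():
    """Not referentially transparent: each call changes hidden state."""
    global calls
    calls += 1
    return calls

# The two calls below return different values, so neither of them can be
# replaced by a single constant.
impure_sum = impure_counter() + impure_counter()
```

In the first case both expressions denote the same number; in the second, the value of a call depends on how many calls came before it, which is exactly what declarative languages rule out.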

Functional programming is based on a formalism called the lambda calculus. Like the Turing Machine, the lambda calculus is also used to define which problems can be solved by computers. A program in the functional paradigm is similar to the notation that we use in mathematics. Functions have no state, and all data is immutable. A program is the composition of many functions. These languages have popularized techniques such as higher-order functions and parametric polymorphism. The program below, written in Standard ML, is the factorial function. Notice that this version of factorial is quite different from our last program, which was written in C. This time we are not explaining what to do to get the factorial. We are simply stating what the factorial of a given number n is. It is usual, in the declarative world, to use the thing to explain itself. The factorial of a number n is the chain of multiplications n * (n-1) * (n-2) * ... * 3 * 2 * 1. We do not know beforehand how large n is. Thus, to provide a general description of the factorial of n, we say that this quantity is n multiplied by the factorial of n-1. Inductively we know how to compute that last factorial. Given that we also know how to multiply two integers, we have the final quantity.

fun fact n = if n < 1 then 1 else n * fact(n-1)

There are many functional languages in use today. Notable examples include, in addition to Standard ML, languages such as OCaml, Lisp, Haskell and F#. Functional languages are not heavily used in the software industry. Nevertheless, there have been some very mature projects written in such languages. For example, the Facebook social network's chat service was written in the functional language Erlang.

Logic programming is the second subcategory of the declarative programming paradigm. Logic programs describe problems as axioms and derivation rules. They rely on a powerful algorithm called unification to prove properties about the axioms, given the inference rules. Logic languages are built on top of a formalism called Horn clauses. The family of logic programming languages has only a few members, the best known of which is Prolog. The key property of referential transparency, found in functional programming, is also present in logic programming. Programs in this paradigm do not describe how to reach the solution of a problem. Rather, they explain what this solution is. The program below, written in Prolog, computes the factorial function. Just like the SML program, this version of the factorial function describes what the factorial of a number is, instead of which computations must be performed to obtain it.

fact(N, 1) :- N < 2.

fact(N, F) :- N >= 2, NX is N - 1, fact(NX, FX), F is N * FX.

Prolog and related languages are even less used in industry than functional languages. Nevertheless, logic programming remains very important in academia. For example, Prolog and Lisp have traditionally been languages of choice for artificial intelligence enthusiasts.

Programming Paradigm as a Programmer's choice

There are some languages in which developing imperative programs is more natural. Similarly, there are programming languages in which developing declarative programs, be they functional or logic, is more natural.

The choice of paradigm may depend on a myriad of factors, from the complexity of the problem being addressed (Object Oriented Programming evolved in part from the need to simplify and model complex problems) to issues such as programmer and code interchangeability, consistency and the standardization of patterns, all part of a continuing effort to make programming an engineered and industrial activity.

However, in the last stages of decision, the programming philosophy can be said to be more a decision of the programmer, than an imposition of the programming language itself. For instance, the function below, written in C, finds factorials in a very declarative way:

int fact(int n) { return n > 1 ? n * fact(n - 1) : 1; }

On the other hand, it is also possible to craft an imperative implementation of the factorial function in a declarative language. For instance, below we have an imperative implementation written in SML. In this example, the construction ref denotes a reference to a memory location. The bang ! reads the value stored at that location, and the command while has the same semantics as in imperative languages.

fun fact n =
  let
    val fact = ref 1
    val counter = ref n
  in
    while (!counter > 1) do
      (fact := !fact * !counter;
       counter := !counter - 1);
    !fact
  end;

Incidentally, we observe that many features of functional languages end up finding a role in the design of imperative languages. For instance, Java and C++ today rely on parametric polymorphism, in the form of generics or templates, to provide developers with ways to build software that is more reusable. Parametric polymorphism is a feature typically found in statically typed functional languages. Another example of this kind of migration is type inference, today present in different degrees in languages such as C# and Scala. Type inference is another feature commonly found in statically typed functional languages. This last programming language, Scala, is a good example of how different programming paradigms meet together in the design of modern programming languages. The function below, written in Scala, and taken from this language's tutorial, is an imperative implementation of the well-known quicksort algorithm:

def sort(a: Array[Int]): Unit = {
  def swap(i: Int, j: Int): Unit = { val t = a(i); a(i) = a(j); a(j) = t }
  def sort1(l: Int, r: Int): Unit = {
    val pivot = a((l + r) / 2)
    var i = l
    var j = r
    while (i <= j) {
      while (a(i) < pivot) i += 1
      while (a(j) > pivot) j -= 1
      if (i <= j) {
        swap(i, j)
        i += 1
        j -= 1
      }
    }
    if (l < j) sort1(l, j)
    if (j < r) sort1(i, r)
  }
  if (a.length > 0)
    sort1(0, a.length - 1)
}

And below we have this very algorithm implemented in a more declarative way in the same language. It is easy to see that the declarative approach is much more concise. Once we get used to this paradigm, we could argue that the declarative version is also clearer. However, the imperative version is more efficient, because it does the sorting in place; that is, it does not allocate extra space to perform the sorting.

def sort(a: List[Int]): List[Int] = {
  if (a.length < 2)
    a
  else {
    val pivot = a(a.length / 2)
    sort(a.filter(_ < pivot)) ::: a.filter(_ == pivot) ::: sort(a.filter(_ > pivot))
  }
}

Grammars

A programming language is described by the combination of its semantics and its syntax. The semantics gives us the meaning of every construction that is possible in that programming language. The syntax gives us its structure. There are many different ways to describe the semantics of a programming language; however, after decades of study, there is mostly one technology to describe its syntax. We call this formalism context-free grammars.

Notice that context-free grammars are not the only kind of grammar that computers can use to recognize languages. In fact, there exists a whole family of formal grammars, which were first studied by Noam Chomsky, and which today form what we usually call the Chomsky hierarchy. Some members of this hierarchy, such as the regular grammars, are very simple and recognize a relatively small class of languages. Nevertheless, these grammars are still very useful. Regular grammars are at the heart of a compiler's lexical analysis, for instance. Other types of grammars are very powerful. As an example, the unrestricted grammars are as computationally powerful as Turing Machines. Nevertheless, in this book we will focus on context-free grammars, because they are the main tool that a compiler uses to convert a program into a format that it can easily process.

Grammars

A grammar lets us transform a program, which is normally represented as a linear sequence of ASCII characters, into a syntax tree. Only programs that are syntactically valid can be transformed in this way. This tree will be the main data structure that a compiler or interpreter uses to process the program. By traversing this tree the compiler can produce machine code, or can type check the program, for instance. And by traversing this very tree the interpreter can simulate the execution of the program.

The main notation used to represent grammars is the Backus-Naur Form, or BNF for short. This notation, invented by John Backus and further improved by Peter Naur, was first used to describe the syntax of the Algol programming language. A BNF grammar is defined by a four-element tuple (T, N, P, S). The meaning of these elements is as follows:

- T is a set of tokens. Tokens form the vocabulary of the language and are the smallest units of syntax. These elements are the symbols that programmers see when they are typing their code, e.g., the while's, for's, +'s, ('s, etc.

- N is a set of nonterminals. Nonterminals are not part of the language per se. Rather, they help to determine the structure of the derivation trees that can be derived from the grammar. Usually we enclose these symbols in angle brackets, to distinguish them from the terminals.

- P is a set of production rules. Each production is composed of a left-hand side, a separator and a right-hand side, e.g., <non-terminal> ::= <expr1> ... <exprN>, where '::=' is the separator. For convenience, productions with the same left-hand side can be abbreviated using the symbol '|'. The pipe, in this case, is used to separate different alternatives.

- S is a start symbol. Any sequence of derivations that ultimately produces a grammatically valid program starts from this special non-terminal.
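The four elements above can also be written down as plain data. The sketch below, in Python, encodes a small grammar for sums and products of the names a, b and c. The variable names and the helper `is_well_formed` are our own choices for illustration and are not part of BNF itself.

```python
# Tokens (T), nonterminals (N), productions (P) and start symbol (S).
T = {"+", "*", "(", ")", "a", "b", "c"}
N = {"<exp>"}
P = {
    "<exp>": [
        ["<exp>", "+", "<exp>"],
        ["<exp>", "*", "<exp>"],
        ["(", "<exp>", ")"],
        ["a"],
        ["b"],
        ["c"],
    ]
}
S = "<exp>"

def is_well_formed(T, N, P, S):
    """Check that the tuple is consistent: tokens and nonterminals are
    disjoint, S is a nonterminal, every left-hand side is a nonterminal,
    and every right-hand side uses only known symbols."""
    if S not in N or T & N:
        return False
    for lhs, alternatives in P.items():
        if lhs not in N:
            return False
        for rhs in alternatives:
            if any(sym not in T and sym not in N for sym in rhs):
                return False
    return True
```

Each key of `P` plays the role of a left-hand side, and the list attached to it plays the role of the alternatives separated by '|'.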

As an example, below we have a very simple grammar, that recognizes arithmetic expressions. In other words, any program in this simple language represents the product or the sum of names such as 'a', 'b' and 'c'.

<exp> ::= <exp> "+" <exp>

<exp> ::= <exp> "*" <exp>

<exp> ::= "(" <exp> ")"

<exp> ::= "a"

<exp> ::= "b"

<exp> ::= "c"

This grammar could be also represented in a more convenient way using a sequence of bar symbols, e.g.:

<exp> ::= <exp> "+" <exp> | <exp> "*" <exp> | "(" <exp> ")" | "a" | "b" | "c"

Parsing

Parsing is the problem of transforming a linear sequence of characters into a syntax tree. Nowadays we are very good at parsing. In other words, we have many tools, such as lex and yacc, that help us in this task. However, in the early days of computer science parsing was a very difficult problem. It was one of the first and most fundamental challenges that the first compiler writers had to face.

If the program text describes a syntactically valid program, then it is possible to convert this text into a syntax tree. As an example, the figure below contains different parsing trees for three different programs written in our grammar of arithmetic expressions:

There are many algorithms to build a parsing tree from a sequence of characters. Some are more powerful, others are more practical. Basically, these algorithms try to find a sequence of applications of the production rules that ends up generating the target string. For instance, let's consider the grammar below, which specifies a very small subset of English grammar:

<sentence> ::= <noun phrase> <verb phrase> .
<noun phrase> ::= <determiner> <noun> | <determiner> <noun> <prepositional phrase>
<verb phrase> ::= <verb> | <verb> <noun phrase> | <verb> <noun phrase> <prepositional phrase>
<prepositional phrase> ::= <preposition> <noun phrase>
<noun> ::= student | professor | book | university | lesson | programming language | glasses
<determiner> ::= a | the
<verb> ::= taught | learned | read | studied | saw
<preposition> ::= by | with | about

Below we have a sequence of derivations showing that the sentence "the student learned the programming language with the professor" is a valid program in this language:

<sentence> ⇒ <noun phrase> <verb phrase> .

⇒ <determiner> <noun> <verb phrase> .

⇒ the <noun> <verb phrase> .

⇒ the student <verb phrase> .

⇒ the student <verb> <noun phrase> <prepositional phrase> .

⇒ the student learned <noun phrase> <prepositional phrase> .

⇒ the student learned <determiner> <noun> <prepositional phrase> .

⇒ the student learned the <noun> <prepositional phrase> .

⇒ the student learned the programming language <prepositional phrase> .

⇒ the student learned the programming language <preposition> <noun phrase> .

⇒ the student learned the programming language with <noun phrase> .

⇒ the student learned the programming language with <determiner> <noun> .

⇒ the student learned the programming language with the <noun> .

⇒ the student learned the programming language with the professor .
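A derivation such as the one above can be checked mechanically: each line must result from the previous one by rewriting exactly one nonterminal with one of its productions. The Python sketch below does this for the first steps of the derivation. It assumes that sentential forms are lists of symbols and that "programming language" is a single token; the names `GRAMMAR`, `is_step` and `is_derivation` are hypothetical.

```python
GRAMMAR = {
    "<sentence>": [["<noun phrase>", "<verb phrase>", "."]],
    "<noun phrase>": [["<determiner>", "<noun>"],
                      ["<determiner>", "<noun>", "<prepositional phrase>"]],
    "<verb phrase>": [["<verb>"],
                      ["<verb>", "<noun phrase>"],
                      ["<verb>", "<noun phrase>", "<prepositional phrase>"]],
    "<prepositional phrase>": [["<preposition>", "<noun phrase>"]],
    "<noun>": [["student"], ["professor"], ["book"], ["university"],
               ["lesson"], ["programming language"], ["glasses"]],
    "<determiner>": [["a"], ["the"]],
    "<verb>": [["taught"], ["learned"], ["read"], ["studied"], ["saw"]],
    "<preposition>": [["by"], ["with"], ["about"]],
}

def is_step(before, after):
    """True if `after` results from rewriting one nonterminal of `before`."""
    for i, symbol in enumerate(before):
        for rhs in GRAMMAR.get(symbol, []):
            if before[:i] + rhs + before[i + 1:] == after:
                return True
    return False

def is_derivation(forms):
    """True if every consecutive pair of forms is a valid rewriting step."""
    return all(is_step(a, b) for a, b in zip(forms, forms[1:]))

# The first five lines of the derivation shown above.
derivation = [
    ["<sentence>"],
    ["<noun phrase>", "<verb phrase>", "."],
    ["<determiner>", "<noun>", "<verb phrase>", "."],
    ["the", "<noun>", "<verb phrase>", "."],
    ["the", "student", "<verb phrase>", "."],
]
```

A real parser has the harder job of finding such a sequence, not just checking it, but the check makes explicit what counts as a legal step.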

Ambiguity

Compilers and interpreters use grammars to build the data structures that they will use to process programs. Therefore, ideally a given program should be described by only one derivation tree. However, depending on how the grammar was designed, ambiguities are possible. A grammar is ambiguous if some phrase in the language generated by the grammar has two distinct derivation trees. For instance, the grammar below, which we have been using as our running example, is ambiguous.

<exp> ::= <exp> "+" <exp>

| <exp> "*" <exp>

| "(" <exp> ")"

| "a" | "b" | "c"

In order to see that this grammar is ambiguous we can observe that it is possible to derive two different syntax trees for the string "a * b + c". The figure below shows these two different derivation trees:
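The ambiguity can also be observed by counting derivation trees. The Python sketch below counts how many trees the grammar above admits for a list of tokens, by trying every operator as the root of the tree. The function name `count_trees` and the choice of space-separated tokens are assumptions made for this illustration.

```python
from functools import lru_cache

def count_trees(tokens):
    """Count the derivation trees of `tokens` under the grammar
    <exp> ::= <exp> + <exp> | <exp> * <exp> | ( <exp> ) | a | b | c."""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def count(i, j):
        # Number of trees that derive tokens[i:j] from <exp>.
        if j - i == 1:
            return 1 if tokens[i] in ("a", "b", "c") else 0
        total = 0
        # <exp> ::= ( <exp> )
        if j - i >= 3 and tokens[i] == "(" and tokens[j - 1] == ")":
            total += count(i + 1, j - 1)
        # <exp> ::= <exp> op <exp>, with each operator tried as the root.
        for k in range(i + 1, j - 1):
            if tokens[k] in ("+", "*"):
                total += count(i, k) * count(k + 1, j)
        return total

    return count(0, len(tokens))

trees_for_example = count_trees("a * b + c".split())
```

For the string "a * b + c" the count is 2: one tree has "+" at the root and the other has "*". Adding parentheses, as in "( a * b ) + c", leaves a single tree.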

Sometimes, the ambiguity in the grammar can compromise the meaning of the sentences that we derive from that grammar. As an example, our English grammar is ambiguous. The sentence "The professor saw the student with the glasses" has two possible derivation trees, as we show in the side figure. In the upper tree, the prepositional phrase "with the glasses" is modifying the verb. In other words, the glasses are the instruments that the professor has used to see the student. On the other hand, in the derivation tree at the bottom the same prepositional expression is modifying "the student". In this case, we can infer that the professor saw a particular student that was possibly wearing glasses at the time he or she was seen.

A particularly famous example of ambiguity in compilers happens in the if-then-else construction. The ambiguity happens because many languages allow the conditional clause without the "else" part. Let's consider a typical set of production rules that we can use to derive conditional statements:

<cmd> ::= if <bool> then <cmd>

| if <bool> then <cmd> else <cmd>

Upon stumbling on a program like the code below, we do not know if the "else" clause is paired with the outermost or with the innermost "then". In C, as well as in the vast majority of languages, compilers solve this ambiguity by pairing an "else" with the closest "then". Therefore, according to this semantics, the program below will print the value 2 whenever a > b and c <= d:

if (a > b) then if (c > d) then print(1) else print(2)

However, the decision to pair the "else" with the closest "then" is arbitrary. Language designers could have chosen to pair the "else" block with the outermost "then" block, for instance. Indeed, the grammar that we saw above is ambiguous. We demonstrate this ambiguity by producing two derivation trees for the same sentence, as we do in the example figure below:
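The two readings of the nested conditional can also be written out with explicit nesting. In the Python sketch below, indentation removes the ambiguity, so each reading needs its own function; the function names are hypothetical, and each function returns the value that would be printed, or None when nothing is printed.

```python
def else_with_inner_then(a, b, c, d):
    """Reading 1: if a > b then (if c > d then 1 else 2)."""
    if a > b:
        if c > d:
            return 1
        else:
            return 2
    return None  # nothing is printed

def else_with_outer_then(a, b, c, d):
    """Reading 2: if a > b then (if c > d then 1) else 2."""
    if a > b:
        if c > d:
            return 1
        return None  # nothing is printed
    else:
        return 2
```

With a > b and c <= d, the first reading yields 2 and the second yields nothing, so the choice between the two trees changes the behavior of the program.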

As we have seen in the three examples above, we can show that a grammar is ambiguous by providing two different parsing trees for the same sentence. However, the problem of determining whether a given grammar is ambiguous is, in general, undecidable. The main challenge is that some grammars can produce an infinite number of different sentences. To show that the grammar is ambiguous, we would have to find, among all these sentences, one that can be generated by two different derivation trees. Because the number of candidates might be infinite, we cannot simply go over all of them, checking whether each one has two derivation trees.

Precedence and Associativity

The semantics of a programming language is not defined by its syntax. There are, however, some aspects of a program's semantics that are completely determined by how the grammar of the programming language is organized. One of these aspects is the order in which operators are applied to their operands. This order is usually defined by the precedence and the associativity of the operators. Most of the algorithms that interpreters or compilers use to evaluate expressions tend to analyze first the operators that are deeper in the derivation tree of that expression. For instance, let's consider the following C snippet, taken from Modern Programming Languages: a = b < c ? * p + b * c : 1 << d (). The side figure shows the derivation tree of this assignment.

Given this tree, we see that the first star, i.e., *p, is a unary operator, whereas the second, i.e., b * c, is binary. We also see that we will first multiply variables b and c, instead of first adding the value pointed to by p to b. This evaluation order is important not only to interpret the expression, but also to type check it or even to produce native code for it.

Precedence: We say that an operator op1 has greater precedence than another operator op2 if op1 must be evaluated before op2 whenever both operators are in the same expression. For instance, it is a usual convention that we evaluate divisions before subtractions in arithmetic expressions that contain both operators. Thus, we generally consider that 4 - 4 / 2 = 2. However, if we were to use a different convention, then we could also consider that 4 - 4 / 2 = (4 - 4) / 2 = 0.

An ambiguous grammar might compromise the exact meaning of the precedence rules in a programming language. To illustrate this point, we will use the grammar below, that recognizes expressions containing subtractions and divisions of numbers:

<exp> ::= <exp> - <exp>

| <exp> / <exp>

| (<exp>)

| <number>

According to this grammar, the expression 4 - 4 / 2 has two different derivation trees. In the one where the expression 4 - 4 is more deeply nested, we have that 4 - 4 / 2 = 0. On the other hand, in the tree where we have 4/2 more deeply nested, we have that 4 - 4 / 2 = 2.
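The effect of the two trees can be checked with a small bottom-up evaluator. In the Python sketch below, a tree is either a number or a tuple (operator, left, right); this representation and the names `evaluate`, `subtraction_deeper` and `division_deeper` are assumptions made for illustration.

```python
def evaluate(tree):
    """Evaluate a tree from the leaves towards the root."""
    if isinstance(tree, (int, float)):
        return tree
    op, left, right = tree
    l, r = evaluate(left), evaluate(right)
    return l - r if op == "-" else l / r

# The two derivation trees of 4 - 4 / 2, written with explicit nesting.
subtraction_deeper = ("/", ("-", 4, 4), 2)   # (4 - 4) / 2
division_deeper = ("-", 4, ("/", 4, 2))      # 4 - (4 / 2)

results = (evaluate(subtraction_deeper), evaluate(division_deeper))
```

The same string of tokens evaluates to 0 under the first tree and to 2 under the second, which is why the grammar has to pick one of them.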

It is possible to rewrite the grammar to remove this ambiguity. Below we have a slightly different grammar, in which division has higher precedence than subtraction, as is usual in mathematics:

<exp> ::= <exp> - <exp>

| <mulexp>

<mulexp> ::= <mulexp> / <mulexp>

| (<exp>)

| <number>

As a guideline, the farther the production rule is from the starting symbol, the deeper its nodes will be nested in the derivation tree. Consequently, operators that are generated by production rules that are more distant from the starting symbol of the grammar tend to have higher precedence. This, of course, only applies if our evaluation algorithm starts by computing values from the leaves of the derivation tree towards its root. Going back to the example above, we can only build the derivation tree of the expression 4 - 4 / 2 in one unique way.

By adding the mulexp node into our grammar, we have given division higher precedence than subtraction. However, we might still have problems evaluating parsing trees unambiguously. These problems are related to the associativity of the operators. As an example, the expression 4 - 3 - 2 can be interpreted in two different ways. We might consider 4 - 3 - 2 = (4 - 3) - 2 = -1, or we might consider 4 - 3 - 2 = 4 - (3 - 2) = 3. The two possible derivation trees that we can build for this expression are shown below:

In arithmetic, mathematicians have adopted the convention that the leftmost subtraction must be solved first. Again, this is just a convention: mathematics would still work, albeit in a slightly different way, had we decided, a couple hundred years ago, that sequences of subtractions should be solved right-to-left. In terms of syntax, we can modify our grammar to always nest the leftmost subtractions, as well as the leftmost divisions, more deeply. The grammar below behaves in this fashion. This grammar is no longer ambiguous. Any string that it can generate has only one derivation tree. Thus, there is only one way to build a parsing tree for our example 4 - 3 - 2.

<exp> ::= <exp> - <mulexp>

| <mulexp>

<mulexp> ::= <mulexp> / <rootexp>

| <rootexp>

<rootexp> ::= ( <exp> )

| <number>

Because subtractions are more deeply nested towards the left side of the derivation tree, we say that this operator is left-associative. In typical programming languages, most operators are left-associative. However, programming languages also have binary operators that are right-associative. A well-known example is assignment in C. An assignment such as a = 2 modifies the state of variable a. However, this command is also an expression: it returns the value assigned. In this case, the assignment expression returns the value 2. This semantics allows programmers to chain together sequences of assignments, such as a = b = 2;, which is read as a = (b = 2). Another example of a right-associative operator is the list constructor in ML. This operator, which we denote by ::, receives an element plus a list, and inserts the element at the beginning of the list. An expression such as 1::2::3::nil is equivalent to 1::(2::(3::nil)). It could not be different: the type of the operator requires the first operand to be an element and the second to be a list. Had we evaluated it in a different way, e.g., 1::2::3::nil = ((1::2)::3)::nil, then we would have two elements paired together, which would not pass the ML type checker.
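To see how the left-associative grammar for subtraction and division shapes the tree, the sketch below implements a small hand-written parser for it in Python. It is only one possible implementation: a recursive-descent parser cannot follow left-recursive rules directly, so each left-recursive rule is replaced here by a loop that nests to the left. The function names and the tuple representation of trees are assumptions made for illustration, and characters that are not digits, '-', '/' or parentheses are simply ignored by the tokenizer.

```python
import re

def tokenize(text):
    """Split the input into numbers and the symbols - / ( )."""
    return re.findall(r"\d+|[-/()]", text)

def parse(text):
    """Build a tree for the grammar
         <exp>     ::= <exp> - <mulexp> | <mulexp>
         <mulexp>  ::= <mulexp> / <rootexp> | <rootexp>
         <rootexp> ::= ( <exp> ) | <number>"""
    tokens = tokenize(text)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        pos += 1
        return tokens[pos - 1]

    def exp():
        tree = mulexp()
        while peek() == "-":          # leftmost subtraction nests deepest
            advance()
            tree = ("-", tree, mulexp())
        return tree

    def mulexp():
        tree = rootexp()
        while peek() == "/":          # leftmost division nests deepest
            advance()
            tree = ("/", tree, rootexp())
        return tree

    def rootexp():
        token = peek()
        if token == "(":
            advance()
            tree = exp()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return tree
        if token is not None and token.isdigit():
            return int(advance())
        raise SyntaxError(f"unexpected token: {token!r}")

    tree = exp()
    if peek() is not None:
        raise SyntaxError(f"unexpected token: {peek()!r}")
    return tree

def evaluate(tree):
    """Evaluate a tree from the leaves towards the root."""
    if isinstance(tree, int):
        return tree
    op, left, right = tree
    l, r = evaluate(left), evaluate(right)
    return l - r if op == "-" else l / r
```

With this sketch, parse("4 - 3 - 2") produces the tree ("-", ("-", 4, 3), 2), which evaluates to -1, and parse("4 - 4 / 2") produces ("-", 4, ("/", 4, 2)), which evaluates to 2.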

Logic Grammars

In this chapter we will explore how grammars are used in practice, by compilers and interpreters. We will be using definite clause grammars (DCG), a feature of the Prolog programming language to demonstrate our examples. Henceforth, we shall call DCGs Logic Grammars. Prolog is particularly good at grammars. As we will see in this chapter, this programming language provides many abstractions that help the developer to parse and process languages.

Logic Grammars

Prolog equips developers with a special syntax to implement grammars. This notation is very similar to the BNF formalism that we saw before. As an example, the English grammar from the last chapter could be rewritten in the following way using Prolog:

sentence --> noun_phrase, verb_phrase .
noun_phrase --> determiner, noun .
noun_phrase --> determiner, noun, prepositional_phrase .
verb_phrase --> verb .
verb_phrase --> verb, noun_phrase .
verb_phrase --> verb, noun_phrase, prepositional_phrase .
prepositional_phrase --> preposition, noun_phrase .
noun --> [student] ; [professor] ; [book] ; [university] ; [lesson] ; [programming, language] ; [glasses].
determiner --> [a] ; [the] .
verb --> [taught] ; [learned] ; [read] ; [studied] ; [saw].
preposition --> [by] ; [with] ; [about] .

If we copy the text above and save it into a file, such as grammar.pl, then we can parse sentences. Below we show a typical session of the SWI-Prolog interpreter (swipl), illustrating how we can use the grammar. Notice that a query consists of a non-terminal symbol, such as sentence, a list containing the sentence to be parsed, plus an empty list. We will not explain this seemingly mysterious syntax in this book, but the interested reader can find more information online:

 1 ?- consult(grammar).
 2 % ex1 compiled 0.00 sec, 0 bytes
 3 true.
 4
 5 ?- sentence([the, professor, saw, the, student], []).
 6 true ;
 7 false.
 8
 9 ?- sentence([the, professor, saw, the, student, with, the, glasses], []).
10 true ;
11 true ;
12 false.
13
14 ?- sentence([the, professor, saw, the, bird], []).
15 false.

Every time Prolog finds a derivation tree for a sentence it outputs the value true for that query. If the same sentence has more than one derivation tree, then it succeeds for each and all of them. In the above example, we got two positive answers for the sentence "The professor saw the student with the glasses", which, as we had seen in the previous chapter, has two different parsing trees. If Prolog cannot find a parsing tree for the sentence, then it outputs the value false. This happened in line 15 of the above example. It also happened in lines 7 and 12. Prolog tries to find every possible way to parse a sentence. If it cannot, even after having found a few successful derivations, then it will give back false to the user.

Attribute Grammars

It is possible to embed attributes into logic grammars. In this way, we can use Prolog to build attribute grammars. We can use attributes for many different purposes. For instance, below we have modified our English grammar to count the number of words in a sentence. Some non-terminals are now associated with an attribute W, an integer that represents how many words are derived from that non-terminal. In compiler jargon we say that W is a synthesized attribute, because it is built as a function of attributes taken from child nodes.

sentence(W) --> noun_phrase(W1), verb_phrase(W2), {W is W1 + W2} .

noun_phrase(2) --> determiner, noun .

noun_phrase(W) --> determiner, noun, prepositional_phrase(W1), {W is W1 + 2} .

verb_phrase(1) --> verb .

verb_phrase(W) --> verb, noun_phrase(W1), {W is W1 + 1} .

verb_phrase(W) --> verb, noun_phrase(W1), prepositional_phrase(W2), {W is W1 + W2 + 1} .

prepositional_phrase(W) --> preposition, noun_phrase(W1), {W is W1 + 1} .

noun --> [student] ; [professor] ; [book] ; [university] ; [lesson] ; [glasses].

determiner --> [a] ; [the] .

verb --> [taught] ; [learned] ; [saw] ; [studied] .

preposition --> [by] ; [with] ; [about] .

Queries that use the attribute grammar must have an extra parameter, which Prolog's execution environment binds to the final value that it finds for the attribute. Below we have a Prolog session with three different queries. The ambiguity still leads to two answers in the second query, and both derivations count the same eight words.

?- consult(grammar).

% ex1 compiled 0.00 sec, 0 bytes

true.

?- sentence(W, [the, professor, saw, the, student], []).

W = 5 ;

false.

?- sentence(W, [the, professor, saw, the, student, with, the, glasses], []).

W = 8 ;

W = 8 ;

false.

?- sentence(W, [the, professor, saw, the, bird], []).

false.
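As a rough cross-check of the synthesized attribute, the same word-counting idea can be sketched in Python, with generators standing in for Prolog's backtracking. This is only an illustration: the function names and the convention of yielding a (word count, next position) pair are assumptions of this sketch, not part of the Prolog grammar.

```python
NOUNS = {"student", "professor", "book", "university", "lesson", "glasses"}
DETERMINERS = {"a", "the"}
VERBS = {"taught", "learned", "saw", "studied"}
PREPOSITIONS = {"by", "with", "about"}


def word(vocabulary, tokens, i):
    """Yield the next position if tokens[i] belongs to the vocabulary."""
    if i < len(tokens) and tokens[i] in vocabulary:
        yield i + 1


def noun_phrase(tokens, i):
    """Yield (W, next_position) for every noun phrase that starts at i."""
    for j in word(DETERMINERS, tokens, i):
        for k in word(NOUNS, tokens, j):
            yield 2, k  # determiner + noun
            for w1, m in prepositional_phrase(tokens, k):
                yield w1 + 2, m


def prepositional_phrase(tokens, i):
    """Yield (W, next_position) for every prepositional phrase at i."""
    for j in word(PREPOSITIONS, tokens, i):
        for w1, k in noun_phrase(tokens, j):
            yield w1 + 1, k


def verb_phrase(tokens, i):
    """Yield (W, next_position) for every verb phrase that starts at i."""
    for j in word(VERBS, tokens, i):
        yield 1, j
        for w1, k in noun_phrase(tokens, j):
            yield w1 + 1, k
            for w2, m in prepositional_phrase(tokens, k):
                yield w1 + w2 + 1, m


def sentence(tokens):
    """Return W for every derivation that consumes the whole token list."""
    return [
        w1 + w2
        for w1, j in noun_phrase(tokens, 0)
        for w2, k in verb_phrase(tokens, j)
        if k == len(tokens)
    ]
```

Calling sentence on a list of words returns one count per derivation, so an ambiguous sentence produces its word count once for each parse tree, and a sentence outside the grammar produces an empty list.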

Attributes can increase the computational power of grammars. A context-free grammar cannot, for instance, recognize the language a^nb^nc^n, made of the strings that have the same number of a's, b's and c's in sequence. We say that this language is not context-free. However, an attribute grammar can easily parse this language:

abc --> as(N), bs(N), cs(N).

as(0) --> [].

as(M) --> [a], as(N), {M is N + 1}.

bs(0) --> [].

bs(M) --> [b], bs(N), {M is N + 1}.

cs(0) --> [].

cs(M) --> [c], cs(N), {M is N + 1}.
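The role that the attribute N plays in this grammar can be sketched in Python as three counters that must agree. The helper names below are hypothetical and the code is only an illustration of the idea, assuming the input is given as a list of one-character tokens.

```python
def count_prefix(tokens, symbol, start):
    """Consume consecutive `symbol` tokens from `start`.

    Returns the pair (how many were consumed, index of the next token).
    """
    i = start
    while i < len(tokens) and tokens[i] == symbol:
        i += 1
    return i - start, i


def abc(tokens):
    """Accept exactly the strings a^n b^n c^n, with n >= 0."""
    n_a, i = count_prefix(tokens, "a", 0)
    n_b, i = count_prefix(tokens, "b", i)
    n_c, i = count_prefix(tokens, "c", i)
    # The whole input must be consumed and the three attributes must agree.
    return i == len(tokens) and n_a == n_b == n_c
```

The comparison in the last line does the work that a context-free grammar alone cannot do: it relates the sizes of three separate parts of the input.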

Syntax Directed Interpretation

An interpreter is a program that simulates the execution of programs written in a particular programming language. There are many ways to implement interpreters, but a typical implementation relies on the parse tree as the core data structure. In this case, the interpreter is a visitor that traverses the derivation tree of the program, simulating the semantics of each node of this tree. As an example, we shall build an interpreter for the language of arithmetic expressions whose logic grammar is given below:

expr --> mulexp, [+], expr.

expr --> mulexp.

mulexp --> rootexp, [*], mulexp.

mulexp --> rootexp.

rootexp --> ['('], expr, [')'].

rootexp --> number.

number --> digit.

number --> digit, number.

digit --> [0] ; [1] ; [2] ; [3] ; [4] ; [5] ; [6] ; [7] ; [8] ; [9].

Any program in this simple programming language is ultimately a number. That is, we can ascribe to a program written in this language the meaning of the number that the program represents. Consequently, interpreting a program is equivalent to finding the number that this program denotes. The attribute grammar below implements such an interpreter:

expr(N) --> mulexp(N1), [+], expr(N2), {N is N1 + N2}.

expr(N) --> mulexp(N).

mulexp(N) --> rootexp(N1), [*], mulexp(N2), {N is N1 * N2}.

mulexp(N) --> rootexp(N).

rootexp(N) --> ['('], expr(N), [')'].

rootexp(N) --> number(N, _).

number(N, 1) --> digit(N).

number(N, C) --> digit(ND), number(NN, C1), {

C is C1 * 10,

N is ND * C + NN

}.

digit(N) --> [0], {N is 0}

; [1], {N is 1}

; [2], {N is 2}

; [3], {N is 3}

; [4], {N is 4}

; [5], {N is 5}

; [6], {N is 6}

; [7], {N is 7}

; [8], {N is 8}

; [9], {N is 9}.

Notice that we can use more than one attribute per node of our derivation tree. The node number, for instance, has two attributes, which we use to compute the value of a sequence of digits. Below we have a few examples of queries that we can issue in this language, assuming that we have saved the grammar in a file called interpreter.pl:

?- consult(interpreter).

% num2 compiled 0.00 sec, 4,340 bytes

true.

?- expr(N, [2, *, 1, 2, 3, +, 3, 2, 1], []).

N = 567 ;

false.

?- expr(N, [2, *, '(', 1, 2, 3, +, 3, 2, 1, ')'], []).

N = 888 ;

false.
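The attribute grammar above can also be read as a recursive-descent interpreter, with one function per non-terminal. The Python sketch below is an illustration only. It assumes that tokens arrive as a list in which digits are integers and operators and parentheses are one-character strings, and all function names are ours.

```python
def parse_number(tokens, i):
    """number --> digit | digit, number. Returns (value, next_index)."""
    if i >= len(tokens) or not isinstance(tokens[i], int):
        raise SyntaxError(f"digit expected at position {i}")
    value = 0
    while i < len(tokens) and isinstance(tokens[i], int):
        value = value * 10 + tokens[i]
        i += 1
    return value, i


def parse_rootexp(tokens, i):
    """rootexp --> '(' expr ')' | number."""
    if i < len(tokens) and tokens[i] == "(":
        value, i = parse_expr(tokens, i + 1)
        if i >= len(tokens) or tokens[i] != ")":
            raise SyntaxError(f"')' expected at position {i}")
        return value, i + 1
    return parse_number(tokens, i)


def parse_mulexp(tokens, i):
    """mulexp --> rootexp '*' mulexp | rootexp."""
    left, i = parse_rootexp(tokens, i)
    if i < len(tokens) and tokens[i] == "*":
        right, i = parse_mulexp(tokens, i + 1)
        return left * right, i
    return left, i


def parse_expr(tokens, i=0):
    """expr --> mulexp '+' expr | mulexp."""
    left, i = parse_mulexp(tokens, i)
    if i < len(tokens) and tokens[i] == "+":
        right, i = parse_expr(tokens, i + 1)
        return left + right, i
    return left, i


def interpret(tokens):
    """Evaluate a complete token list, rejecting trailing input."""
    value, i = parse_expr(tokens, 0)
    if i != len(tokens):
        raise SyntaxError(f"unexpected token at position {i}")
    return value
```

For instance, interpret([2, "*", 1, 2, 3, "+", 3, 2, 1]) evaluates to 567, the same value that the first Prolog query produces.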

Syntax Directed Translation

A compiler is a program that converts code written in one programming language into code written in a different programming language. Typically, a compiler is used to convert code written in a high-level language into machine code. As in the case of interpreters, grammars provide the key data structure that a compiler uses to do its work. As an example, we will implement a very simple compiler that converts programs written in our language of arithmetic expressions to Polish (prefix) notation. The attribute grammar that does this job can be seen below:

expr(L) --> mulexp(L1), [+], expr(L2), {append([+], L1, LX), append(LX, L2, L)}.

expr(L) --> mulexp(L).

mulexp(L) --> rootexp(L1), [*], mulexp(L2), {append([*], L1, LX), append(LX, L2, L)}.

mulexp(L) --> rootexp(L).

rootexp(L) --> ['('], expr(L), [')'].

rootexp(L) --> number(L).

number([N]) --> digit(N).

number([ND|LL]) --> digit(ND), number(LL).

digit(N) --> [0], {N is 0}

; [1], {N is 1}

; [2], {N is 2}

; [3], {N is 3}

; [4], {N is 4}

; [5], {N is 5}

; [6], {N is 6}

; [7], {N is 7}

; [8], {N is 8}

; [9], {N is 9}.

In this example we dump the transformed program into a list L. The append(L1, L2, LL) predicate is true whenever the list LL equals the concatenation of lists L1 and L2. The notation [ND|LL] implements the cons operation so common in functional programming. In other words, [ND|LL] represents a list that contains a head element ND and a tail LL. As an example of use, the session below shows a set of queries using our new grammar, this time implemented in a file called compiler.pl:

?- consult(compiler).

true.

?- expr(L, [2, *, 1, 2, 3, +, 3, 2, 1], []).

L = [+, *, 2, 1, 2, 3, 3, 2, 1] ;

false.

?- expr(L, [2, *, '(', 1, 2, 3, +, 3, 2, 1, ')'], []).

L = [*, 2, +, 1, 2, 3, 3, 2, 1] ;

false.
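The same translation scheme can be sketched in Python: each function returns the prefix form of the phrase it has parsed, and the operator is placed in front of its two translated operands. This is a hedged illustration; the token representation (integer digits, one-character strings for operators and parentheses) and the function names are assumptions of this sketch.

```python
def number(tokens, i):
    """number --> digit | digit, number. Returns (digit_list, next_index)."""
    digits = []
    while i < len(tokens) and isinstance(tokens[i], int):
        digits.append(tokens[i])
        i += 1
    if not digits:
        raise SyntaxError(f"digit expected at position {i}")
    return digits, i


def rootexp(tokens, i):
    """rootexp --> '(' expr ')' | number."""
    if i < len(tokens) and tokens[i] == "(":
        code, i = expr(tokens, i + 1)
        if i >= len(tokens) or tokens[i] != ")":
            raise SyntaxError(f"')' expected at position {i}")
        return code, i + 1
    return number(tokens, i)


def mulexp(tokens, i):
    """mulexp --> rootexp '*' mulexp | rootexp."""
    left, i = rootexp(tokens, i)
    if i < len(tokens) and tokens[i] == "*":
        right, i = mulexp(tokens, i + 1)
        return ["*"] + left + right, i  # operator first, then operands
    return left, i


def expr(tokens, i=0):
    """expr --> mulexp '+' expr | mulexp."""
    left, i = mulexp(tokens, i)
    if i < len(tokens) and tokens[i] == "+":
        right, i = expr(tokens, i + 1)
        return ["+"] + left + right, i
    return left, i


def to_prefix(tokens):
    """Translate a complete infix token list to prefix notation."""
    code, i = expr(tokens, 0)
    if i != len(tokens):
        raise SyntaxError(f"unexpected token at position {i}")
    return code
```

List concatenation with + plays the role of the append predicate, and the parentheses of the source program disappear because prefix notation does not need them.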

Syntax Directed Type Checking

Compilers and interpreters can rely on grammars to implement many different forms of program verification. A well-known static verification that compilers of statically typed languages perform is type checking. Before generating machine code for a program written in a statically typed language, the compiler must ensure that the source program abides by the typing discipline imposed by that language. The type checker verifies, for instance, whether the operands have the type expected by the operator. An important step of this kind of verification is to determine the type of arithmetic expressions. In our language of arithmetic expressions every program is an integer number. However, below we show a slightly modified version of that very language, in which numbers can be either integers or floating point numbers.

expr --> mulexp, [+], expr.

expr --> mulexp.

mulexp --> rootexp, [*], mulexp.

mulexp --> rootexp.

rootexp --> ['('], expr, [')'].

rootexp --> dec_number.

dec_number --> number, [.], number.

dec_number --> number.

number --> digit.

number --> digit, number.

digit --> [0] ; [1] ; [2] ; [3] ; [4] ; [5] ; [6] ; [7] ; [8] ; [9].

Many programming languages allow the intermixing of integer and floating point data types in arithmetic expressions. C is one of these languages. The type of a sum involving an integer and a floating point number is a floating point number. However, there are languages that do not allow this kind of mixture. In Standard ML, for instance, we can only add two integers or two real numbers. Let's adopt the C approach for the sake of this example. Thus, the attribute grammar below not only implements an interpreter for our new language of arithmetic expressions, but also computes the type of an expression.

meet(integer, integer, integer).

meet(_, float, float).

meet(float, _, float).

expr(N, T) --> mulexp(N1, T1), [+], expr(N2, T2), {

N is N1 + N2,

meet(T1, T2, T)

}.

expr(N, T) --> mulexp(N, T).

mulexp(N, T) --> rootexp(N1, T1), [*], mulexp(N2, T2), {

N is N1 * N2,

meet(T1, T2, T)

}.

mulexp(N, T) --> rootexp(N, T).

rootexp(N, T) --> ['('], expr(N, T), [')'].

rootexp(N, T) --> dec_number(N, T).

dec_number(N, float) --> number(N1, _), [.], number(N2, C2), {

CC is C2 * 10,

N is N1 + N2 / CC} .

dec_number(N, integer) --> number(N, _).

number(N, 1) --> digit(N).

number(N, C) --> digit(ND), number(NN, C1), {

C is C1 * 10,

N is ND * C + NN

}.

digit(N) --> [0], {N is 0}

; [1], {N is 1}

; [2], {N is 2}

; [3], {N is 3}

; [4], {N is 4}

; [5], {N is 5}

; [6], {N is 6}

; [7], {N is 7}

; [8], {N is 8}

; [9], {N is 9}.

If we save the grammar above in a file called type_checker.pl, we can use it as in the session below. As we can see, expressions involving integers and floats have the floating point type. We have defined a meet operator that combines the different data types that we have. This name, meet, is a term commonly found in the jargon used in lattice theory.

?- consult(type_checker).

% type_checker compiled 0.00 sec, 5,544 bytes

true.

?- expr(N, T, [1, 2], []).

N = 12, T = integer ;

false.

?- expr(N, T, [1, 2, +, 3, '.', 1, 4], []).

N = 15.14, T = float ;

false.
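The way the meet predicate combines types can be sketched in a few lines of Python. This is an illustration only: the string names of the types mirror the Prolog atoms, while the helper functions and the token representation are assumptions of this sketch.

```python
TYPES = ("integer", "float")


def meet(t1, t2):
    """Combine two operand types the way the Prolog meet/3 facts do."""
    if t1 not in TYPES or t2 not in TYPES:
        raise ValueError(f"unknown type in ({t1!r}, {t2!r})")
    # Only two integers stay integer; any float operand makes the result float.
    return "integer" if (t1, t2) == ("integer", "integer") else "float"


def type_of_literal(tokens):
    """dec_number: a digit list containing '.' is a float, else an integer."""
    return "float" if "." in tokens else "integer"


def type_of_sum(left_tokens, right_tokens):
    """Type of `left + right`, where both operands are numeric literals."""
    return meet(type_of_literal(left_tokens), type_of_literal(right_tokens))
```

For the last query of the session, type_of_sum([1, 2], [3, ".", 1, 4]) gives "float", because one of the two operands is a floating point literal.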

Compiled Programs

There are different ways in which we can execute programs. Compilers, interpreters and virtual machines are some tools that we can use to accomplish this task. All these tools provide a way to simulate in hardware the semantics of a program. Although these different technologies exist with the same core purpose - to execute programs - they do it in very different ways. They all have advantages and disadvantages, and in this chapter we will look more carefully into these trade-offs. Before we continue, one important point must be made: in principle any programming language can be compiled or interpreted. However, some execution strategies are more natural in some languages than in others.

Compiled Programs

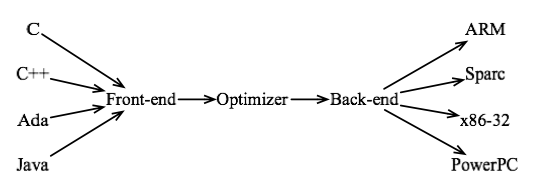

[edit | edit source]Compilers are computer programs that translate a high-level programming language to a low-level programming language. The product of a compiler is an executable file, which is made of instructions encoded in a specific machine code. Hence, an executable program is specific to a type of computer architecture. Compilers designed for distinct programming languages might be quite different; nevertheless, they all tend to have the overall macro-architecture described in the figure below:

A compiler has a front end, which is the module in charge of transforming a program written in a high-level source language into an intermediate representation that the compiler will process in the next phases. It is in the front end that we have the parsing of the input program, as we have seen in the last two chapters. Some compilers, such as gcc, can parse several different input languages. In this case, the compiler has a different front end for each language that it can handle. A compiler also has a back end, which does code generation. If the compiler can target many different computer architectures, then it will have a different back end for each of them. Finally, compilers generally do some code optimization. In other words, they try to improve the program, given a particular criterion of efficiency, such as speed, space or energy consumption. In general the optimizer is not allowed to change the semantics of the input program.

The main advantage of execution via compilation is speed. Because the source program is translated directly to machine code, this program will most likely be faster than if it were interpreted. Nevertheless, as we will see in the next section, it is still possible, although unlikely, that an interpreted program runs faster than its machine code equivalent. The main disadvantage of execution by compilation is portability. A compiled program targets a specific computer architecture, and will not be able to run on different hardware.

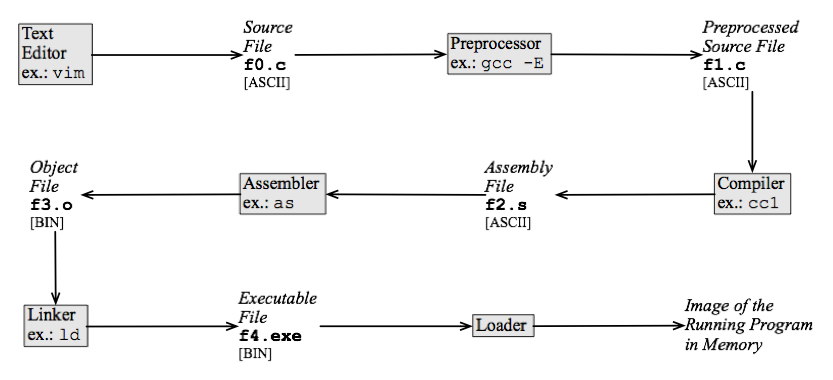

The life cycle of a compiled program

[edit | edit source]A typical C program, compiled by gcc, for instance, will go through many transformations before being executed in hardware. This process is similar to a production line in which the output of a stage becomes the input to the next stage. In the end, the final product, an executable program, is generated. This long chain is usually invisible to the programmer. Nowadays, integrated development environments (IDE) combine the several tools that are part of the compilation process into a single execution environment. However, to demonstrate how a compiler works, we will show the phases present in the execution of a standard C file compiled with gcc. These phases, their products and some examples of tools are illustrated in the figure below.

The aim of the steps seen above is to translate a source file into code that a computer can run. First of all, the programmer uses a text editor to create a source file, which contains a program written in a high-level programming language. In this example, we are assuming C. Many kinds of text editors can be used here. Some of them provide support in the form of syntax highlighting or an integrated debugger, for instance. Let's assume that we have just edited the following file, which we want to compile:

#define CUBE(x) (x)*(x)*(x)

int main() {

int i = 0;

int x = 2;

int sum = 0;

while (i++ < 100) {

sum += CUBE(x);

}

printf("The sum is %d\n", sum);

}

After editing the C file, a preprocessor is used to expand the macros present in the source code. Macro expansion is a relatively simple task in C, but it can be quite complicated in languages such as Lisp, for instance, which take care to avoid typical problems of macro expansion such as variable capture. During the expansion phase, the body of the macro replaces every occurrence of its name in the program's source code. We can invoke gcc's preprocessor via a command such as gcc -E f0.c -o f1.c. The result of preprocessing our example program is the code below. Notice that the call CUBE(x) has been replaced by the expression (x)*(x)*(x).

int main() {

int i = 0;

int x = 2;

int sum = 0;

while (i++ < 100) {

sum += (x)*(x)*(x);

}

printf("The sum is %d\n", sum);

}
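The textual nature of this expansion can be illustrated with a small Python sketch. It is not how gcc's preprocessor is implemented: the function expand_macro is hypothetical, it handles only macros with a single parameter, and it assumes that the argument of a call contains no nested parentheses.

```python
import re


def expand_macro(source, name, param, body):
    """Replace each call name(arg) by body, with param replaced by arg."""
    call = re.compile(r"\b" + re.escape(name) + r"\(([^()]*)\)")
    param_re = re.compile(r"\b" + re.escape(param) + r"\b")

    def substitute(match):
        arg = match.group(1).strip()
        # Purely textual substitution: the argument is pasted into the body.
        return param_re.sub(lambda _m: arg, body)

    return call.sub(substitute, source)
```

With the body (x)*(x)*(x), the call CUBE(i + 1) expands to (i + 1)*(i + 1)*(i + 1). With the body x*x*x it would expand to i + 1*i + 1*i + 1, which computes something else, and this is why each use of the parameter in the macro is wrapped in parentheses.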

In the next phase we convert the source program into assembly code. This phase is what we normally call compilation: a text written in the C grammar will be converted into a program written in the x86 assembly grammar. It is during this step that we perform the parsing of the C program. In Linux we can translate the source file, e.g., f1.c, to assembly via the command cc1 f1.c -o f2.s, assuming that cc1 is the system's compiler. This command is equivalent to the call gcc -S f1.c -o f2.s. The assembly program can be seen in the first listing below. This program is written in the assembly language used in the x86 architecture. There are many different computer architectures, such as ARM, PowerPC and Alpha. The assembly language produced for any of them would be rather different from the program below. For comparison purposes, the second listing shows the ARM version of the same program. These two assembly languages follow very different design philosophies: x86 uses a CISC instruction set, while ARM follows more closely the RISC approach. Nevertheless, both files, the x86's and the ARM's, have a similar syntactic skeleton. The assembly language has a linear structure: a program is a list-like sequence of instructions. On the other hand, the C language has a syntactic structure that looks more like a tree, as we have seen in a previous chapter. Because of this syntactic gap, this phase contains the most complex translation step that the program will undergo during its life cycle.

# Assembly of x86

.cstring

LC0:

.ascii "The sum is %d\12\0"

.text

.globl _main

_main:

pushl %ebp

movl %esp, %ebp

subl $40, %esp

movl $0, -20(%ebp)

movl $2, -16(%ebp)

movl $0, -12(%ebp)

jmp L2

L3:

movl -16(%ebp), %eax

imull -16(%ebp), %eax

imull -16(%ebp), %eax

addl %eax, -12(%ebp)

L2:

cmpl $99, -20(%ebp)

setle %al

addl $1, -20(%ebp)

testb %al, %al

jne L3

movl -12(%ebp), %eax

movl %eax, 4(%esp)

movl $LC0, (%esp)

call _printf

leave

ret

# Assembly of ARM

_main:

@ BB#0:

push {r7, lr}

mov r7, sp

sub sp, sp, #16

mov r1, #2

mov r0, #0

str r0, [r7, #-4]

str r0, [sp, #8]

stm sp, {r0, r1}

b LBB0_2

LBB0_1:

ldr r0, [sp, #4]

ldr r3, [sp]

mul r1, r0, r0

mla r2, r1, r0, r3

str r2, [sp]

LBB0_2:

ldr r0, [sp, #8]

add r1, r0, #1

cmp r0, #99

str r1, [sp, #8]

ble LBB0_1

@ BB#3:

ldr r0, LCPI0_0

ldr r1, [sp]

LPC0_0:

add r0, pc, r0

bl _printf

ldr r0, [r7, #-4]

mov sp, r7

pop {r7, lr}

mov pc, lr

It is during the translation from the high-level language to the assembly language that the compiler might apply code optimizations. These optimizations must obey the semantics of the source program. An optimized program should do the same thing as its original version. Nowadays compilers are very good at changing the program in such a way that it becomes more efficient. For instance, a combination of two well-known optimizations, loop unwinding and constant propagation, can optimize our example program to the point that the loop is completely removed. As an example, we can run the optimizer using the following command, assuming again that cc1 is the default compiler that gcc uses: cc1 -O1 f1.c -o f2.opt.s. The final program that we produce this time, f2.opt.s, is surprisingly concise:

.cstring

LC0:

.ascii "The sum is %d\12\0"

.text

.globl _main

_main:

pushl %ebp

movl %esp, %ebp

subl $24, %esp

movl $800, 4(%esp)

movl $LC0, (%esp)

call _printf

leave

ret
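The constant 800 that appears in the optimized code can be checked by hand. The Python sketch below simulates the original loop and compares it with the folded constant. It only illustrates why the two versions of the program are equivalent and says nothing about how gcc performs the transformation; both function names are ours.

```python
def original_loop():
    """Mirror the C program: the body of while (i++ < 100) runs 100 times."""
    i, x, total = 0, 2, 0
    while i < 100:
        i += 1
        total += x * x * x  # CUBE(x) after macro expansion
    return total


def after_optimization():
    """What remains once x = 2 is propagated and the loop is unwound."""
    cube = 2 * 2 * 2   # constant propagation: x is always 2
    return 100 * cube  # 100 iterations, each one adding the same constant
```

Both functions return 800, which is the value that the optimized assembly stores directly as the argument of printf.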

The next step in the compilation chain consists of the translation of the assembly language to binary code. The assembly program is still readable by people. The binary program, also called an object file, can of course be read by human beings too, but not many are up to this task these days. Translating from assembly to binary code is a rather simple task, because both languages have the same syntactic structure. Only their lexical structure differs. Whereas the assembly file is written with ASCII mnemonics, the binary file contains sequences of zeros and ones that the hardware processor recognizes. A typical tool used in this phase is the as assembler. We can produce an object file with the command as f2.s -o f3.o.

The object file is not executable yet. It does not contain enough information to specify where to find the implementation of the printf function, for example. In the next step of the compilation process we change this file so that the addresses of functions defined in external libraries become visible. Each operating system provides programmers with a number of libraries that can be used together with the code that they create. A special program, the linker, can find the addresses of functions in these libraries, thus filling in the blank addresses in the object file. Different operating systems use different linkers. Typical tools in this case are ld and collect2. For instance, in order to produce the executable program on Mac OS X Leopard, we can use the command collect2 -o f4.exe -lcrt1.10.5.o f3.o -lSystem.

At this point we almost have an executable file, but our linked binary program is bound to undergo one last transformation before we can see its output. All the addresses in the binary code are relative. We must replace these addresses by absolute values, which point correctly to the targets of the function calls and other program objects. This last step is the responsibility of a program called the loader. The loader places an image of the program into memory and runs it.

Interpreted Programs

Interpreters execute programs in a different way. They do not produce native binary code, at least not in general. Instead, an interpreter converts a program to an intermediate representation, usually a tree, and uses an algorithm to traverse this tree, emulating the semantics of each of its nodes. In the previous chapter we implemented a small interpreter in Prolog for a programming language whose programs represent arithmetic expressions. Even though that was a very simple interpreter, it contained all the steps of the interpretation process: we had a tree representing the abstract syntax of a programming language, and a visitor going over every node of this tree performing some interpretation-related task.

The source program is meaningless to the interpreter in its original format, e.g., a sequence of ASCII characters. Thus, like a compiler, an interpreter must parse the source program. However, unlike a compiler, an interpreter does not need to parse all the source code before executing it. That is, only those pieces of the program text that are reachable by the execution flow of the program need to be translated. Thus, the interpreter does a kind of lazy translation.

Advantages and disadvantages of interpretation over compilation

The main advantage of an interpreter over a compiler is portability. The binary code produced by the compiler, as we have emphasized before, is tailored specifically to a target computer architecture. The interpreter, on the other hand, processes the source code directly. With the rise of the World Wide Web, and the possibility of downloading and executing programs from remote servers, portability became a very important issue. Because client web applications must run on many different machines, it is not effective for the browser to download the binary representation of the remote software. Source code must come instead.

A compiled program usually runs faster than an interpreted program, because there are fewer intermediaries between the compiled program and the underlying hardware. However, we must bear in mind that compiling a program is a lengthy process, as we have seen before. Therefore, if the program is meant to be executed only once, or at most a few times, then interpreting it might be faster than compiling and running it. This type of scenario is common in client web applications. For instance, JavaScript programs are usually interpreted, instead of compiled. These programs are downloaded from a remote web server, and once the browser session expires, their code is usually lost.

Changing a program's source code is a common task during the development of an application. When using a compiler, each change implies a potentially long waiting time. The compiler needs to translate the modified files and to link all the binaries to create an executable program, before running that program. The larger the program, the longer this delay. On the other hand, because an interpreter does not translate all the source code before running it, the time necessary to test the modifications is significantly shorter. Therefore, interpreters tend to favour the development of software prototypes.

Example: bash script: Bash is a typical interpreter commonly used in the Linux operating system. This interpreter provides users with a command-line interface; that is, it gives users a prompt where they can type commands. These commands are read and then interpreted. Commands can also be grouped into a single file. A bash script is a file containing a list of commands to be executed by the bash shell. Bash is a scripting language. In other words, bash makes it very easy for the user to call applications implemented in programming languages other than bash itself. Such a script can be used to automatically execute a sequence of commands that the user often needs. The following lines are very simple commands that could be stored in a script file, called, for instance, my_info.sh:

#! /bin/bash

# script to present some information

clear

echo 'System date:'

date

echo 'Current directory:'

pwd

The first line (#! /bin/bash) in the script specifies which shell should be used to interpret the commands in the script. Usually an operating system provides more than one shell. In this case we are using bash. The second line (# script to present some information) is a comment and does not have any effect when the script is executed. The life cycle of a bash script is much simpler than the life cycle of a C program. The script file can be edited using a text editor such as vim. After that, it is necessary to change its permissions in the Linux file system so that it becomes executable, for instance with chmod +x my_info.sh. A script is called by prefixing the file's name with its location in the filesystem. So, a user can run the script in a shell by typing "path/my_info.sh", where path indicates the path necessary to find the script:

$> ./my_info.sh

System date:

Seg Jun 18 10:18:46 BRT 2012

Current directory:

/home/IPL/shell

Virtual Machines

A virtual machine is hardware emulated in software. It combines an interpreter, a runtime support system and a collection of libraries that the interpreted code can use. Typically the virtual machine interprets an assembly-like program representation. Therefore, the virtual machine bridges the gap between compilers and interpreters. The compiler transforms the program, converting it from a high-level language into low-level bytecodes. These bytecodes are then interpreted by the virtual machine.
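The division of labour between the compiler and the virtual machine can be sketched with a toy stack machine in Python. Everything here is hypothetical: the three bytecodes PUSH, ADD and MUL and the tuple-based expression trees are inventions of this sketch and do not correspond to the instruction set of any real virtual machine.

```python
def compile_expr(tree):
    """Compile an expression tree into a list of stack-machine bytecodes.

    A tree is either an int or a tuple (op, left, right), op being '+' or '*'.
    """
    if isinstance(tree, int):
        return [("PUSH", tree)]
    op, left, right = tree
    opcode = {"+": "ADD", "*": "MUL"}[op]
    # Operands first, operator last: the usual order for a stack machine.
    return compile_expr(left) + compile_expr(right) + [(opcode, None)]


def run(bytecodes):
    """Interpret the bytecodes, one at a time, using an operand stack."""
    stack = []
    for opcode, arg in bytecodes:
        if opcode == "PUSH":
            stack.append(arg)
        elif opcode in ("ADD", "MUL"):
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right if opcode == "ADD" else left * right)
        else:
            raise ValueError(f"unknown bytecode {opcode!r}")
    return stack.pop()
```

The list returned by compile_expr is linear and assembly-like, so it could be stored or shipped to another machine, and any machine that has an implementation of run can execute it.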

One of the most important goals of virtual machines is portability. A virtualized program is executed directly by the virtual machine in such a way that this program's developer can be oblivious to the hardware where this virtual machine runs. As an example, Java programs are virtualized. In fact, the Java Virtual Machine (JVM) is probably the most well-known virtual machine in use today. Any hardware that supports the Java virtual machine can run Java programs. The virtual machine, in this case, ensures that all the different programs will have the same semantics. A slogan that describes this characteristic of Java programs is "write once, run anywhere". This slogan illustrates the cross-platform benefits of Java. In order to guarantee this uniform behaviour, every JVM is distributed with a very large software library, the Java Application Programming Interface. Parts of this library are treated in a special way by the compiler, and are implemented directly at the virtual machine level. Java threads, for instance, are handled in such a way.

The Java programming language is very popular nowadays. The portability of the Java runtime environment is one of the key factors behind this popularity. Java was initially conceived as a programming language for embedded devices. However, by the time Java was released, the World Wide Web was also making its revolutionary début. In the early 1990s, the development of programs that could be downloaded and executed in web browsers was in high demand. Java would fill this niche with Java applets. Java applets have since fallen out of favour compared to alternatives such as JavaScript and Flash programs. However, by the time other technologies began to be popular on the client side of web applications, Java was already one of the most used programming languages in the world. And, many years past the initial web revolution, the world watches a new unfolding in computer history: the rise of smartphones as general purpose hardware. Again portability is at a premium, and again Java is an important player in this new market. The Android virtual machine, Dalvik, is meant to run Java programs.

Just-in-Time Compilation

In general a compiled program will run faster than its interpreted version. However, there are situations in which the interpreted code is faster. As an example, the shootout benchmark game contains some Java benchmarks that are faster than the equivalent C programs. The core technology behind this efficiency is the Just-in-Time Compiler, or JIT for short. The JIT compiler translates a program to binary code while this program is being interpreted. This setup opens up many possibilities for speculative code optimizations. In other words, the JIT compiler has access to the runtime values that are being manipulated by the program; thus, it can use these values to produce better code. Another advantage of the JIT compiler is that it does not need to compile every part of the program, but only those pieces of it that are reachable by the execution flow. And even in this case, the interpreter might decide to compile only the heavily executed parts of a function, instead of the whole function body.

The program below provides an example of a toy JIT compiler. If executed correctly on x86 hardware, the program will print Result = 1234. Depending on the protection mechanisms adopted by the operating system, the program might not execute correctly. In particular, systems that apply Data Execution Prevention (DEP) will not run this program to the end. Our "JIT compiler" dumps some encoded machine instructions into an array called program, and then diverts execution to this array.

#include <stdio.h>

#include <stdlib.h>

int main(void) {

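unsigned char* program; /* buffer that will hold the generated machine code */

int (*fnptr)(void);

int a;

program = malloc(1000);

program[0] = 0xB8; /* x86: mov eax, imm32 */

program[1] = 0x34; /* imm32 = 0x00001234, least significant byte first */

program[2] = 0x12;

program[3] = 0;

program[4] = 0;

program[5] = 0xC3; /* x86: ret, returning the value left in eax */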

fnptr = (int (*)(void)) program;

a = fnptr();

printf("Result = %X\n",a);

}

In general a JIT works in a way similar to the program above. It compiles the interpreted code, and dumps the result of this compilation, the binary code, into a memory array that is marked as executable. Then the JIT changes the execution flow of the interpreter to point to the newly written memory area. In order to give the reader a general picture of a JIT compiler, the figure below shows TraceMonkey, one of the compilers used by the Mozilla Firefox browser to run JavaScript programs.

TraceMonkey is a trace-based JIT compiler. It does not compile whole functions. Rather, it converts to binary code only the most heavily executed paths inside a function. TraceMonkey is built on top of a JavaScript interpreter called SpiderMonkey. SpiderMonkey interprets bytecodes. In other words, the JavaScript source file is converted to a sequence of assembly-like instructions, and these instructions are interpreted by SpiderMonkey. The interpreter also monitors the program paths that are executed more often. After a certain program path reaches an execution threshold, it is translated to machine code. This machine code is a trace, that is, a linear sequence of instructions. The trace is then transformed into native code by nanojit, a JIT compiler used in the Tamarin JavaScript engine. Once the execution of this trace finishes, either due to normal termination or due to an exceptional condition, control comes back to the interpreter, which might find other traces to compile.
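The idea of interpreting first and compiling only after an execution threshold is reached can be sketched in Python. This toy is an analogy and not a description of TraceMonkey: the class ToyJit, its threshold parameter and the tuple-based expression trees are hypothetical, and "compilation" here means producing a Python function with the built-in compile, not native machine code.

```python
class ToyJit:
    """Interpret an expression over x until it becomes hot, then compile it."""

    def __init__(self, tree, threshold=3):
        self.tree = tree            # 'x', an int, or (op, left, right)
        self.threshold = threshold  # interpreted runs before compiling
        self.calls = 0
        self.compiled = None

    def _interpret(self, node, x):
        if node == "x":
            return x
        if isinstance(node, int):
            return node
        op, left, right = node
        a, b = self._interpret(left, x), self._interpret(right, x)
        if op == "+":
            return a + b
        if op == "*":
            return a * b
        raise ValueError(f"unknown operator {op!r}")

    def _to_source(self, node):
        if node == "x" or isinstance(node, int):
            return str(node)
        op, left, right = node
        if op not in ("+", "*"):
            raise ValueError(f"unknown operator {op!r}")
        return f"({self._to_source(left)} {op} {self._to_source(right)})"

    def run(self, x):
        if self.compiled is not None:
            return self.compiled(x)  # fast path: no tree traversal
        self.calls += 1
        if self.calls >= self.threshold:
            source = "lambda x: " + self._to_source(self.tree)
            self.compiled = eval(compile(source, "<toy-jit>", "eval"))
        return self._interpret(self.tree, x)
```

The first calls to run walk the tree node by node. Once the call counter reaches the threshold, later calls bypass the tree and execute the compiled function directly.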

Binding

A source file has many names whose properties need to be determined. The meaning of these properties might be determined at different phases of the life cycle of a program. Examples of such properties include the set of values associated with a type; the type of a variable; the memory location of the compiled function; the value stored in a variable, and so forth. Binding is the act of associating properties with names. Binding time is the moment in the program's life cycle when this association occurs.

Many properties of a programming language are defined during its creation. For instance, the meaning of keywords such as while or for in C, or the size of the integer data type in Java, are properties defined at language design time. Another important binding phase is the language implementation time. The size of integers in C, unlike in Java, was not defined when C was designed. This information is determined by the implementation of the compiler. Therefore, we say that the size of integers in C is determined at language implementation time.

Many properties of a program are determined at compilation time. Among these properties, the most important are the types of the variables in statically typed languages. Whenever we annotate a variable as an integer in C or Java, or whenever the compiler infers that a variable in Haskell or SML has the integer data type, this information is used from then on to generate the code related to that variable. The location of statically allocated variables, the layout of the activation records of functions and the control flow graph of statically compiled programs are other properties defined at compilation time.

If a program uses external libraries, then the addresses of the external functions will be known only at link time. It is at this moment that the linker finds where the printf function that a C program calls is located, for instance. However, the absolute addresses used in the program will only be known at loading time. At that moment we will have an image of the executable program in memory, and all the dependencies will have already been resolved by the loader.

Finally, there are properties which we will only know once the program executes. The actual values stored in the variables are perhaps the most important of these properties. In dynamically typed languages we will only know the types of variables during the execution of the program. Languages that provide some form of late binding will only let us know the target of a function call at runtime, for instance.
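Late binding can be illustrated with a small sketch in Java. The classes Shape, Circle and Square, and the method pick, are hypothetical names introduced only for this illustration. The compiler knows that s is a Shape, but which implementation of name() runs is decided only while the program executes:

```java
class LateBindingDemo {
    // The static type of the result is Shape; the concrete class is chosen at runtime.
    static Shape pick(int n) {
        return (n % 2 == 0) ? new Circle() : new Square();
    }

    public static void main(String[] args) {
        // The value of args.length is bound only when the program runs,
        // and so is the target of the call s.name().
        Shape s = pick(args.length);
        System.out.println(s.name());
    }
}

// Hypothetical class hierarchy, used only for illustration.
abstract class Shape {
    abstract String name();
}

class Circle extends Shape {
    @Override
    String name() { return "circle"; }
}

class Square extends Shape {
    @Override
    String name() { return "square"; }
}
```

Running the program with no arguments selects Circle, while running it with one argument selects Square, even though the call site s.name() is the same in both cases.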

As an example, let us take a look at the program below, implemented in C. In line 1, we have three names: int, i and x. The first represents a type, while the others are declared as variables. The specification of the C language defines the meaning of the keyword int. The properties related to this specification are bound when the language is defined. Other properties are left out of the language definition. An example is the range of values of the int type. In this way, the implementation of a compiler can choose the range for int that is most natural for a specific machine. The types of the variables i and x in the first line are bound at compilation time. In line 5, the program calls the function do_something, which line 2 only declares and whose definition can be in another source file. This reference is resolved at link time: the linker tries to find the function definition in order to generate the executable file. At loading time, just before the program starts running, the memory locations of main, do_something, i and x are bound. Some bindings occur while the program is running, i.e., at runtime. An example is the values assigned to i and x during the execution of the program.

int i, x = 0;
int do_something(int);
int main(void) {
    for (i = 1; i <= 50; i++)
        x += do_something(x);
    return 0;
}

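The same program can also be written in Java, as follows:

public class Example {
    // The fields are static so that the static method main can use them.
    static int i, x = 0;

    // Placeholder body: the text leaves do_something undefined.
    static int do_something(int x) {
        return x + 1;
    }

    public static void main(String[] args) {
        for (i = 1; i <= 50; i++) {
            x += do_something(x);
        }
    }
}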

Concepts of Functional Languages

Functional programming is a form of declarative programming, a paradigm under which the computation of a program is described by its essential logic. This approach is in contrast to imperative programming, where specific instructions describe how a computation is to be performed. The only form of computation in a strictly functional language is the function. As in mathematics, a function establishes, by means of an equation, a relation between certain inputs and outputs. To the functional programmer, the exact implementation underneath a given computation is not visible. In this sense, we tend to say that functional programming allows one to focus on what is to be computed.

While functional programming languages have not traditionally enjoyed the success of imperative languages in industry, they have gained more traction in recent years. This increasing popularity of the functional programming style is due to numerous factors. Among the virtues of typical functional languages like Haskell is the absence of mutable data and associated side effects, a characteristic referred to as purity, which we shall study further. Together with the introduction of novel parallel architectures, we have seen an accompanying growth of concurrent programming techniques. Because functional languages are agnostic of global state, they provide a natural framework for implementations free of race conditions. In addition, they ease the design of fault-tolerant functions, since only local data is of concern.

Another reason that has contributed to the emergence of the functional style is the appearance of the so-called hybrid languages. A prominent example among those is the Scala programming language. Scala offers a variety of features from the functional programming world, like higher-order functions and pattern matching, yet with an appearance that closely resembles object-oriented languages. Even imperative constructs such as for loops can be found in Scala, a characteristic that helps reduce the barrier between functional and imperative programming. In addition, Scala compiles to bytecode of the Java Virtual Machine (JVM), enabling easy integration with Java and, consequently, allowing programmers to gradually move from one language to the other. The same approach of compiling to the JVM was followed by Clojure, a relatively new general-purpose language. Finally, both Java and C++, which were originally designed under strong imperative principles, nowadays feature lambdas and other functional programming constructs.
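As a minimal sketch of these functional constructs in a language of imperative origin, the Java code below uses a lambda, a higher-order function and a pure function over a list. The names HigherOrderDemo, twice and squares are assumptions made for this illustration:

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

class HigherOrderDemo {
    // A higher-order function: it receives a function and returns a new
    // function that applies the received one twice.
    static <T> Function<T, T> twice(Function<T, T> f) {
        return f.andThen(f);
    }

    // A pure function: the result depends only on the argument,
    // and the input list is not modified.
    static List<Integer> squares(List<Integer> xs) {
        return xs.stream().map(x -> x * x).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        Function<Integer, Integer> addThree = x -> x + 3;
        System.out.println(twice(addThree).apply(10));          // prints 16
        System.out.println(squares(Arrays.asList(1, 2, 3)));    // prints [1, 4, 9]
    }
}
```

Because squares touches no shared state, calls to it from different threads cannot interfere with each other, which is the property the discussion of race conditions relies on.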

Type Definition

Data Types

The vast majority of programming languages deal with typed values, i.e., integers, booleans, real numbers, people, vehicles, etc. There are, however, programming languages that have no types at all. These programming languages tend to be very simple. Good examples in this category are the core lambda calculus and Brain Fuc*. There also exist programming languages with very primitive type systems. For instance, x86 assembly allows us to store floating point numbers, integers and addresses in the same registers. In this case, the particular instruction used to process the register determines which data type is being taken into consideration. For instance, x86 assembly has a subl instruction to perform integer subtraction, and another instruction, fsubl, to subtract floating point values. As another example, BCPL has only one data type, the word. Different operations treat each word as a different type. Nevertheless, most programming languages have more complex types, and we shall be talking about these type systems in this chapter.
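The idea that an operation, rather than the storage itself, decides how bits are interpreted can be sketched even in a typed language. The Java code below is only an analogy, not x86 or BCPL: it takes one 32-bit pattern and reads it first as an integer and then as a floating point number, using the standard methods Float.floatToIntBits and Float.intBitsToFloat. The class name BitsDemo and the method bitsOf are assumptions made for this illustration:

```java
class BitsDemo {
    // Returns the 32 bits of a float as an int, without numeric conversion.
    static int bitsOf(float f) {
        return Float.floatToIntBits(f);
    }

    public static void main(String[] args) {
        int bits = bitsOf(1.0f);
        // The same 32-bit pattern, read as an integer and then as a float.
        System.out.println(Integer.toHexString(bits));     // prints 3f800000
        System.out.println(bits);                          // prints 1065353216
        System.out.println(Float.intBitsToFloat(bits));    // prints 1.0
    }
}
```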

The most important question that we should answer now is "what is a data type". We can describe a data type by combining two notions:

- Values: a type is, in essence, a set of values. For instance, the boolean data type, seen in many programming languages, is a set with two elements: true and false. Some of these sets have a finite number of elements. Others are infinite. In Java, the integer data type is a set with $2^{32}$ elements; however, the string data type is a set with an infinite number of elements.

- Operations: not every operation can be applied to every data type. For instance, we can add two values of numeric types; however, in most programming languages, it does not make sense to add two booleans. In x86 assembly, and in BCPL, the operations are what distinguish the type of one memory location from the type of another.

Types exist so that developers can represent entities from the real world in their programs. However, types are not the entities that they represent. For instance, the integer type in Java represents numbers ranging from $-2^{31}$ to $2^{31} - 1$. Larger numbers cannot be represented. If we try to store, say, $2^{31}$ in a Java integer, then we get back $-2^{31}$. This happens because Java keeps only the 32 least significant bits of the binary integer and reads them in two's complement, where the bit pattern of $2^{31}$ denotes $-2^{31}$.
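The wrap-around described above can be observed directly. The sketch below assumes nothing beyond the standard constants Integer.MAX_VALUE and Integer.MIN_VALUE; the class name IntRangeDemo and the method next are hypothetical:

```java
class IntRangeDemo {
    // Adds one using Java's 32-bit int arithmetic, which wraps silently on overflow.
    static int next(int n) {
        return n + 1;
    }

    public static void main(String[] args) {
        System.out.println(Integer.MAX_VALUE);          // prints 2147483647
        System.out.println(next(Integer.MAX_VALUE));    // prints -2147483648
        // Narrowing the long value 2^31 to int keeps only its 32 low-order bits.
        System.out.println((int) (1L << 31));           // prints -2147483648
    }
}
```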

Types are useful in many different ways. Testimony of this importance is the fact that today virtually every programming language uses types, whether statically or at runtime. Among the many factors that make types so important, we mention:

- Efficiency: because different types can be represented in different ways, the runtime environment can choose the most efficient representation for each of them.

- Correctness: types prevent the program from entering into undefined states. For instance, if the result of adding an integer and a floating point number is undefined, then the runtime environment can trigger an exception whenever this operation might happen.

- Documentation: types are a form of documentation. For instance, if a programmer knows that a given variable is an integer, then he or she knows a lot about it. The programmer knows, for example, that this variable can be the target of arithmetic operations. The programmer also knows how much memory is necessary to allocate that variable. Furthermore, contrary to simple comments, which mean nothing to the compiler, types are a form of documentation that the compiler can check.

Types are a fascinating subject, because they classify programming languages along many different dimensions. Three of the most important dimensions are:

- Statically vs Dynamically typed.

- Strongly vs Weakly typed.

- Structurally vs Nominally typed.

In any programming language there are two main categories of types: primitive and constructed. Primitive types are atomic, i.e., they are not formed by the combination of other types. Constructed, or composite types, as the name already says, are made of other types, either primitive or also composite. In the rest of this chapter we will be showing examples of each family of types.

Primitive Types

Every programming language that has types builds these types around a finite set of primitive types. Primitive types are atomic. In other words, they cannot be de-constructed into simpler types. As an example, the SML programming language has five primitive types:

- bool: these are the values true and false.

- int: these are the integer numbers, which can be positive or negative.

- real: these are the real numbers. SML might represent them with up to 12 meaningful decimal digits after the point, e.g., 2.7 / 3.33 = 0.810810810811.

- char: these are the characters. They have a rather cumbersome syntax, when compared to other types. E.g.: #"a".

- string: these are the chains of characters. However, contrary to many programming languages, a string in SML is not a list of characters, and thus cannot be de-constructed as one.

Different languages might provide different primitive types. Java, for instance, has the following primitive types:

- boolean (1-bit)

- byte (1-byte signed)

- char (2-byte unsigned)

- short (2-byte signed)

- int (4-byte signed)

- long (8-byte signed)

- float (4-byte floating point)

- double (8-byte floating point)
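The widths listed above can be checked from within Java itself, since the wrapper classes of the numeric types and of char expose a SIZE constant, measured in bits. The following is a small sketch; the class name PrimitiveSizes and the method widthsInBits are assumptions made for this illustration, and the boolean type is left out because its wrapper class has no such constant:

```java
import java.util.Arrays;

class PrimitiveSizes {
    // Widths, in bits, of byte, char, short, int, long, float and double.
    static int[] widthsInBits() {
        return new int[] {
            Byte.SIZE, Character.SIZE, Short.SIZE, Integer.SIZE,
            Long.SIZE, Float.SIZE, Double.SIZE
        };
    }

    public static void main(String[] args) {
        // prints [8, 16, 16, 32, 64, 32, 64]
        System.out.println(Arrays.toString(widthsInBits()));
    }
}
```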

Some authors will say that strings are primitive types in Java. Others will say that strings are composite: they are chains of characters. Nevertheless, regardless of how strings are classified, we all agree that strings are built-in types. A built-in type is a data type that is not user-defined. Because a built-in type is part of the core language, its elements can be treated specially by the compiler. Java's strings, for instance, have the special syntax of literals between quotes. Furthermore, strings in Java are immutable. If we want, say, to insert a character in the middle of a string, then what we can do is build a new string that combines the original value with the character we want to insert.
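A minimal sketch of this insertion is given below. The helper insertAt is a hypothetical name, not part of the Java library; it relies only on the standard substring method and on string concatenation:

```java
class StringInsertDemo {
    // Builds a new string with c inserted at position pos.
    // The argument s is left untouched.
    static String insertAt(String s, int pos, char c) {
        return s.substring(0, pos) + c + s.substring(pos);
    }

    public static void main(String[] args) {
        String original = "helo";
        String fixed = insertAt(original, 3, 'l');
        System.out.println(original);    // prints helo
        System.out.println(fixed);       // prints hello
    }
}
```

The original string is still intact after the call, which is what makes it safe to share string values between different parts of a program.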

In some languages the primitive types are part of the language specification. Thus, these types have the same semantics in any system where the language is supported. This is the case of the primitive types in Java and in SML, for instance. For example, the integer type in Java contains $2^{32}$ elements in any setup that runs the Java Virtual Machine. However, there exist languages in which the semantics of a primitive type depends on the implementation of the programming language. C is a typical example. The size of integers in this programming language might vary from one architecture to another. We can use the following program to find out the largest integer in C:

#include <stdio.h>

int main(void) {
    unsigned int i = ~0U;   /* all bits set: the largest unsigned int */
    printf("%u\n", i);
    i = i >> 1;             /* top bit cleared: the largest int on typical machines */
    printf("%u\n", i);
    return 0;
}

This program runs in constant time, thanks to the operations that manipulate bits. In languages that do not support this kind of operation, we cannot, in general, find the largest integer so quickly. For instance, the function below, in SML, finds the largest integer in $O(n)$ steps when started as maxInt 0 1, where $n$ is the number of bits in the integer type:

fun maxInt current inc = maxInt (current + inc) (inc * 2)
  handle Overflow => if inc = 1 then current else maxInt current 1
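For comparison, the same search can be sketched in Java. This is an adaptation and not part of the original text: Java's ordinary int arithmetic wraps silently instead of raising an exception, so the sketch uses Math.addExact and Math.multiplyExact, which throw ArithmeticException on overflow, as a stand-in for SML's Overflow. The class name MaxIntSearch is hypothetical:

```java
class MaxIntSearch {
    // Mirrors the SML function: advance current by an increment that doubles
    // at each step; on overflow, restart with increment 1, and stop when even
    // an increment of 1 overflows.
    static int maxInt(int current, int inc) {
        try {
            return maxInt(Math.addExact(current, inc), Math.multiplyExact(inc, 2));
        } catch (ArithmeticException overflow) {
            return inc == 1 ? current : maxInt(current, 1);
        }
    }

    public static void main(String[] args) {
        System.out.println(maxInt(0, 1));    // prints 2147483647
    }
}
```

Each restart begins from the value reached so far, so the distance to the largest integer shrinks quickly and only a few restarts are needed.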

Constructed Types
