Software design patterns


This is the print version of Introduction to Software Engineering You won't see this message or any elements not part of the book's content when you print or preview this page.


Table of contents

Software Engineering

Process & Methodology

- Introduction

- Methodology

- V-Model

- Agile Model

- Standards

- Life Cycle

- Rapid Application Development

- Extreme Programming

Planning

Architecture & Design

UML

Implementation

Testing

Software Quality

Deployment & Maintenance

Project Management

Tools

- Introduction

- Modelling and Case Tools

- Compiler

- Debugger

- IDE

- GUI Builder

- Source Control

- Build Tools

- Software Documentation

- Static Code Analysis

- Profiling

- Code Coverage

- Project Management

- Continuous Integration

- Bug Tracking

- Decompiler

- Obfuscation

Re-engineering

Other

References

Editors

Authors

License

Preface

When preparing an undergraduate class on Software Engineering, I found that there are a lot of good articles in Wikipedia covering different aspects related to software engineering. For a beginner, however, it is not so easy to find her or his way through that jungle of articles. It is not evident what is important and what is less relevant, where to start and what to skip in a first reading. Also, these articles contain too much information and too few examples. Hence the idea for this book came about: to take the relevant articles from Wikipedia, combine them, edit them, fill in the missing pieces, put them in context and create a wikibook out of them.

The hope is that this can be used as a textbook for an introductory software engineering class. The advantage for the instructor is that she can just pick the pieces that fit into her course and create a collection. The advantage for the student is that he can have a printed or pdf version of the textbook at a reasonable price (free) and with reasonable licenses (creative commons).

As for the philosophy behind this book: brevity is preferred to completeness, and examples are preferred to theory. Whether this effort was successful is for you to judge, and if you have suggestions for improvement, just use the 'Edit' button!

My special thanks go to Adrignola and Kayau who did the tedious work of importing the original articles (with all their history) from Wikipedia to Wikibooks!

Software Engineering

Introduction

This book is an introduction to the art of software engineering. It is intended as a textbook for an undergraduate level course.

Software engineering is about teams. The problems to solve are so complex or large that a single developer can no longer solve them. Software engineering is also about communication. Teams do not consist only of developers, but also of testers, architects, system engineers, customers, project managers, etc. Software projects can be so large that we have to do careful planning. Implementation is no longer just writing code; it also means following guidelines, writing documentation and writing unit tests. But unit tests alone are not enough: the different pieces have to fit together. We also have to be able to spot problematic areas using metrics, which tell us whether our code follows certain standards. Once we are finished coding, we are not finished with the project: for large projects, maintaining software can keep many people busy for a long time. Since so many factors influence the success or failure of a project, we also need to learn a little about project management and its pitfalls, and especially about what makes projects successful. And last but not least, a good software engineer, like any engineer, needs tools, and you need to know about them.

Developers Work in Teams

In your beginning semesters you were coding as individuals. The problems you were solving were small enough that one person could master them. In the real world this is different: the problem sizes and time constraints are such that only teams can solve those problems.

For teams to work effectively they need a language to communicate (UML). Also teams do not consist only of developers, but also of testers, architects, system engineers and most importantly the customer. So we need to learn about what makes good teams, how to communicate with the customer, and how to document not only the source code, but everything related to the software project.

New Language

In previous courses we learned languages, such as Java or C++, and how to turn ideas into code. But these ideas are independent of the language. With the Unified Modeling Language (UML) we will see a way to describe code independently of language and, more importantly, we will learn to think at a level of abstraction that is one step higher. UML can be an invaluable communication and documentation tool.

We will learn to see the big picture: patterns. This gives us yet one higher level of abstraction. Again this increases our vocabulary to communicate more effectively with our peers. Also, it is a fantastic way to learn from our seniors. This is essential for designing large software systems.

Measurement

Being able to write software does not mean that the software is any good. Hence, we will discover what makes good software, and how to measure software quality. On the one hand, we should be able to analyse existing source code through static analysis and by measuring metrics; on the other hand, we need to ask how we can ensure that our own code meets certain quality standards. Testing is also important in this context: it helps to ensure high-quality products.
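To make the idea of measuring metrics a little more concrete, here is a minimal sketch in Python. It uses the standard `ast` module to count functions and decision points in a piece of source code. The function name `simple_metrics`, the choice of which nodes count as decision points, and the sample code are all assumptions made for illustration; real static analysis tools measure far more than this.

```python
import ast

# Node types counted as decision points in this rough, illustrative metric.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp)


def simple_metrics(source: str) -> dict:
    """Return a few crude size and complexity numbers for Python source code."""
    tree = ast.parse(source)
    functions = [n for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)]
    branches = [n for n in ast.walk(tree) if isinstance(n, BRANCH_NODES)]
    return {
        "functions": len(functions),
        "branches": len(branches),
        # One straight path through the code plus one per decision point.
        "complexity_estimate": len(branches) + 1,
    }


# Hypothetical code to be measured.
SAMPLE = """
def grade(score):
    if score >= 90:
        return "A"
    for limit, letter in ((80, "B"), (70, "C")):
        if score >= limit:
            return letter
    return "F"
"""

if __name__ == "__main__":
    print(simple_metrics(SAMPLE))
```

A number like the complexity estimate is only a hint: it points at code that may deserve a closer look, but it does not by itself say whether the code is good.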

New Tools

Up to now, you may have come to know about an IDE, a compiler and a debugger. But there are many more tools at the disposal of a software engineer. There are tools that allow us to work in teams, to document our software, to assist and monitor the whole development effort. There are tools for software architects, tools for testing and profiling, automation and re-engineering.

History

When the first modern digital computers appeared in the early 1940s,[1] the instructions to make them operate were wired into the machine. At this time, people working with computers were engineers, mostly electrical engineers. This hardware-centric design was not flexible and was quickly replaced with the "stored program architecture" or von Neumann architecture. Thus the first division between "hardware" and "software" began, with abstraction being used to deal with the complexity of computing.

Programming languages started to appear in the 1950s and this was also another major step in abstraction. Major languages such as Fortran, ALGOL, and COBOL were released in the late 1950s to deal with scientific, algorithmic, and business problems respectively. E.W. Dijkstra wrote his seminal paper, "Go To Statement Considered Harmful",[2] in 1968 and David Parnas introduced the key concept of modularity and information hiding in 1972[3] to help programmers deal with the ever increasing complexity of software systems. A software system for managing the hardware called an operating system was also introduced, most notably by Unix in 1969. In 1967, the Simula language introduced the object-oriented programming paradigm.

These advances in software were met with more advances in computer hardware. In the mid 1970s, the microcomputer was introduced, making it economical for hobbyists to obtain a computer and write software for it. This in turn led to the now famous Personal Computer (PC) and Microsoft Windows. The Software Development Life Cycle or SDLC was also starting to appear as a consensus for centralized construction of software in the mid 1980s. The late 1970s and early 1980s saw the introduction of several new Simula-inspired object-oriented programming languages, including Smalltalk, Objective-C, and C++.

Open-source software started to appear in the early 90s in the form of Linux and other software introducing the "bazaar" or decentralized style of constructing software.[4] Then the World Wide Web and the popularization of the Internet hit in the mid 90s, changing the engineering of software once again. Distributed systems gained sway as a way to design systems, and the Java programming language was introduced with its virtual machine as another step in abstraction. Programmers collaborated and wrote the Agile Manifesto, which favored more lightweight processes to create cheaper and more timely software.

The current definition of software engineering is still being debated by practitioners today as they struggle to come up with ways to produce software that is "cheaper, better, faster". Cost reduction has been a primary focus of the IT industry since the 1990s. Total cost of ownership represents the costs of more than just acquisition. It includes things like productivity impediments, upkeep efforts, and resources needed to support infrastructure.

References

- ↑ Leondes (2002). Intelligent Systems: Technology and Applications. CRC Press. ISBN 9780849311215.

- ↑ Dijkstra, E. W. (1968). "Go To Statement Considered Harmful" (PDF). Communications of the ACM. 11 (3): 147–148. doi:10.1145/362929.362947. Retrieved 2009-08-10.

- ↑ Parnas, David (1972). "On the Criteria To Be Used in Decomposing Systems into Modules". Communications of the ACM. 15 (12): 1053–1058. doi:10.1145/361598.361623. Retrieved 2008-12-26.

- ↑ Raymond, Eric S. The Cathedral and the Bazaar. ed 3.0. 2000.

Further Reading

History of software engineering

Software Engineer

Software engineering is done by the software engineer, an engineer who applies the principles of software engineering to the design, development, testing, and evaluation of software and systems that make computers or anything containing software work. There has been some controversy over the term engineer[1], since it implies a certain level of academic training, professional discipline, adherence to formal processes, and especially legal liability that often are not applied in cases of software development. In 2004, the U.S. Bureau of Labor Statistics counted 760,840 software engineers holding jobs in the U.S.; in the same period there were some 1.4 million practitioners employed in the U.S. in all other engineering disciplines combined.[2]

Overview

Prior to the mid-1990s, software practitioners called themselves programmers or developers, regardless of their actual jobs. Many people still prefer to call themselves software developers or programmers, because there is wide agreement on what these terms mean, while the meaning of software engineer is still being debated. A prominent computing scientist, E. W. Dijkstra, argued in a paper that the term software engineer was not useful, since it rests on an inappropriate analogy: "The existence of the mere term has been the base of a number of extremely shallow --and false-- analogies, which just confuse the issue...Computers are such exceptional gadgets that there is good reason to assume that most analogies with other disciplines are too shallow to be of any positive value, are even so shallow that they are only confusing."[3]

The term programmer has often been used to refer to those without the tools, skills, education, or ethics to write good quality software. In response, many practitioners called themselves software engineers to escape the stigma attached to the word programmer.

The label software engineer is used very liberally in the corporate world. Very few of the practicing software engineers actually hold Engineering degrees from accredited universities. In fact, according to the Association for Computing Machinery, "most people who now function in the U.S. as serious software engineers have degrees in computer science, not in software engineering". [4]

Education

About half of all practitioners today have computer science degrees. A small, but growing, number of practitioners have software engineering degrees. In 1987 Imperial College London introduced the first three-year software engineering Bachelor's degree in the UK and the world. Since then, software engineering undergraduate degrees have been established at many universities. A standard international curriculum for undergraduate software engineering degrees was recently defined by the ACM[5]. As of 2004, in the U.S., about 50 universities offer software engineering degrees, which teach both computer science and engineering principles and practices. ETS University and UQAM were mandated by IEEE to develop the SoftWare Engineering Body of Knowledge (SWEBOK) [6], which has become an ISO standard describing the body of knowledge covered by a software engineer.

In business, some software engineering practitioners have Management Information Systems (MIS) degrees. In embedded systems, some have electrical engineering or computer engineering degrees, because embedded software often requires a detailed understanding of hardware. In medical software, practitioners may have medical informatics, general medical, or biology degrees. Some practitioners have mathematics, science, engineering, or technology degrees. Some have philosophy (logic in particular) or other non-technical degrees, and others have no degrees.

Profession

Most software engineers work as employees or contractors. They work with businesses, government agencies (civilian or military), and non-profit organizations. Some software engineers work for themselves as freelancers. Some organizations have specialists to perform each of the tasks in the software development process. Other organizations require software engineers to do many or all of them. In large projects, people may specialize in only one role. In small projects, people may fill several or all roles at the same time.

There is considerable debate over the future employment prospects for Software Engineers and other Information Technology (IT) Professionals. For example, an online futures market called the Future of IT Jobs in America[7] attempts to answer whether there will be more IT jobs, including software engineers, in 2012 than there were in 2002.

Some students in the developed world may have avoided degrees related to software engineering because of the fear of offshore outsourcing and of being displaced by foreign workers.[8] Although government statistics do not currently show a threat to software engineering itself, a related career, computer programming, does appear to have been affected.[9][10] Some career counselors suggest that students also focus on "people skills" and business skills rather than purely technical skills, because such "soft skills" are allegedly more difficult to offshore.[11] It is the quasi-management aspects of software engineering that appear to have kept it from being impacted by globalization.[12]

References

- ↑ Sayo, Mylene. "What's in a Name? Tech Sector battles Engineers on 'software engineering'". http://www.peo.on.ca/enforcement/June112002newsrelease.html Retrieved 2008-07-24.

- ↑ Bureau of Labor Statistics, U.S. Department of Labor, USDL 05-2145: Occupational Employment and Wages, November 2004

- ↑ http://www.cs.utexas.edu/users/EWD/transcriptions/EWD06xx/EWD690.html E.W.Dijkstra Archive: The pragmatic engineer versus the scientific designer

- ↑ http://computingcareers.acm.org/?page_id=12 ACM, Computing - Degrees & Careers, Software Engineering

- ↑ http://sites.computer.org/ccse/ Curriculum Guidelines for Undergraduate Degree Programs in Software Engineering

- ↑ http://www.computer.org/portal/web/swebok Guide to the Software Engineering Body of Knowledge

- ↑ Future of IT Jobs in America

- ↑ As outsourcing gathers steam, computer science interest wanes

- ↑ Computer Programmers

- ↑ Software developer growth slows in North America | InfoWorld | News | 2007-03-13 | By Robert Mullins, IDG News Service

- ↑ Hot Skills, Cold Skills

- ↑ Dual Roles: The Changing Face of IT

UML

Introduction

Software engineers speak a funny language called Unified Modeling Language, or UML for short. Just as a musician has to learn musical notation before being able to play piano, we need to learn UML before we are able to engineer software. UML is useful in many parts of the software engineering process, for instance: planning, architecture, documentation, or reverse engineering. Therefore, it is worth our efforts to know it.

Designing software is a little like writing a screenplay for a Hollywood movie. The characteristics, actions, and interactions of the characters are carefully planned, as are the relevant components of their environment. As an introductory example, consider our friend Bill, a customer, who is at a restaurant for dinner. His waiter is Linus, who takes the orders and brings the food. In the kitchen is Larry, the cook. Steve is the cashier. In this way, we've provided useful and easily accessed information about the operation of a restaurant in the screenplay.

Use Case Diagram

The use case model is a representation of the system's intended functions and its environment. The first thing a software engineer does is to draw a Use Case diagram. All the actors are represented by little stick figures; all the actions are represented by ovals and are called use cases. The actors and use cases are connected by lines. Very often there are also one or more system boundaries. Actors, which usually are not part of the system, are drawn outside the system area.

Use Case diagrams are very simple, so even managers can understand them. But they are very helpful in understanding the system to be designed. They should list all parties involved in the system and all major actions that the system should be able to perform. The important thing about them is that you don't forget anything. It is less important that they are super-detailed; for that we have other diagram types.

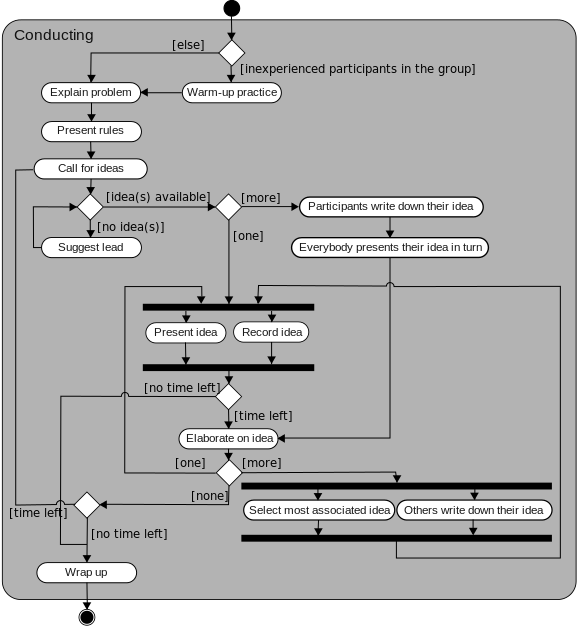

Activity Diagram

Next, we will draw an Activity diagram. The Activity diagram gives more detail to a given use case and it often depicts the flow of information, which is why it is often compared to a flowchart. Whereas the Use Case diagram has no temporal order, the Activity diagram has a beginning and an end, and it also depicts decisions and repetitions.

If the Use Case diagram names the actors and gives us the headings for each scene (use case) of our play, the Activity diagram tells the detailed story behind each scene. Some managers may be able to understand Activity diagrams, but don't count on it.

Sequence Diagram

Once we are done drawing our Activity diagrams, the next step of refinement is the Sequence diagram. In this diagram we list the actors or objects horizontally and then we depict the messages going back and forth between the objects by horizontal lines. Time is always progressing downwards in this diagram.

The Sequence diagram is a very important step in what is called the process of object-oriented analysis and design. This diagram is so important because on the one hand it identifies our objects/classes and on the other hand it also gives us the methods for each of those classes, because each message turns into a method. Sequence diagrams can become very large, since they basically describe the whole program. Make sure you cover every path in your Sequence diagrams, but try to avoid unnecessary repetition. Managers will most likely not understand Sequence diagrams.
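The idea that each message turns into a method can be sketched in a few lines of Python. The example assumes a Sequence diagram for the restaurant with three objects (a waiter, a cook and a cashier) and three messages; the class names, method names and return values are invented for illustration and are not taken from any particular diagram. The customer stays outside the system and simply sends the first message.

```python
class Cook:
    def prepare(self, dish: str) -> str:
        # The message "prepare" sent by the waiter becomes a method of Cook.
        return f"plate of {dish}"


class Cashier:
    def __init__(self) -> None:
        self.total = 0.0

    def pay(self, amount: float) -> str:
        # The message "pay" sent by the customer becomes a method of Cashier.
        self.total += amount
        return f"receipt for {amount:.2f}"


class Waiter:
    def __init__(self, cook: Cook) -> None:
        self.cook = cook

    def take_order(self, dish: str) -> str:
        # The waiter receives "take_order" and in turn sends "prepare" to the cook.
        return self.cook.prepare(dish)


if __name__ == "__main__":
    waiter = Waiter(Cook())
    cashier = Cashier()
    print(waiter.take_order("pasta"))
    print(cashier.pay(12.5))
```

Reading the calls from top to bottom gives the same order as reading the diagram from top to bottom: the receiver of each arrow is the class that owns the method.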

Collaboration/Communication Diagram

The Collaboration diagram is an intermediate step to get us from the Sequence diagram to the Class diagram. It is similar to the Sequence diagram, but it has a different layout. Instead of worrying about the timeline, we worry about the interactions between the objects. Each object is represented by a box, and interactions between the objects are shown by arrows.

This diagram shows the responsibility of objects. If an object has too much responsibility, meaning there are too many lines going in and out of a box, probably something is wrong in your design. Usually you would want to split the box into two or more smaller boxes. At this stage in your design, this can still be done easily. If you try to do that once you have started coding, or even later, it will become a nightmare.
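Counting the lines going in and out of each box can even be done mechanically. The following sketch assumes the diagram has been written down as a list of (sender, receiver, message) triples. The objects, the messages and the limit of three are hypothetical; what counts as "too many" is a judgment call that depends on your design.

```python
from collections import Counter


def message_load(messages):
    """Count how many messages go in or out of each object."""
    load = Counter()
    for sender, receiver, _name in messages:
        load[sender] += 1
        load[receiver] += 1
    return load


def overloaded(messages, limit):
    """Return the objects that take part in more than `limit` messages."""
    load = message_load(messages)
    return sorted(name for name, count in load.items() if count > limit)


# Hypothetical messages read off a Collaboration diagram.
MESSAGES = [
    ("Waiter", "Cook", "prepare"),
    ("Waiter", "Cashier", "bill"),
    ("Waiter", "Table", "reserve"),
    ("Waiter", "Menu", "lookup"),
    ("Cook", "Pantry", "fetch"),
]

if __name__ == "__main__":
    print(message_load(MESSAGES))
    print(overloaded(MESSAGES, limit=3))
```

In this made-up example the waiter takes part in four of the five messages, which would be a hint to ask whether some of that work belongs in a separate object.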

Class Diagram

For us as software engineers, at least the object-oriented kind, the Class diagram is the most important one. A Class diagram consists of classes and lines between them. The classes themselves are drawn as boxes with three compartments: one for the class name, one for attributes and one for methods.

You start with the Collaboration diagram, and the first thing you do is take all the boxes and call them classes now. Next, instead of having many lines going between the objects, you replace them by one line. But for every line you remove, you must add a method entry to the class's method compartment. So at this stage the Class diagram looks quite similar to the Collaboration diagram.

The things that make a Class diagram different are the attributes and the fact that there is not only one type of line, but several different kinds. As for the attributes, you must look at each class carefully and decide which variables are needed for this class to function. If the class is merely a data container, this is easy; if the class does more complicated things, it may not be so easy.

As for the lines, we call them relationships between the objects, and basically there are three major kinds:

- the association (has a): a static relationship, usually one class is attribute of another class, or one class uses another class

- the aggregation (consists of): for instance an order consists of order details

- and the inheritance (is a): describes a hierarchy between classes
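The three kinds of relationship listed above can be illustrated with a small Python sketch. It picks up the order and order details mentioned in the list; the `Customer` and `RushOrder` classes, all attribute names and the surcharge value are assumptions added for illustration only.

```python
class Customer:
    def __init__(self, name: str) -> None:
        self.name = name


class OrderDetail:
    def __init__(self, item: str, quantity: int, unit_price: float) -> None:
        self.item = item
        self.quantity = quantity
        self.unit_price = unit_price

    def subtotal(self) -> float:
        return self.quantity * self.unit_price


class Order:
    def __init__(self, customer: Customer) -> None:
        # Association (has a): the order has a customer as an attribute.
        self.customer = customer
        # Aggregation (consists of): the order consists of order details.
        self.details = []

    def add(self, detail: OrderDetail) -> None:
        self.details.append(detail)

    def total(self) -> float:
        return sum(detail.subtotal() for detail in self.details)


class RushOrder(Order):
    # Inheritance (is a): a rush order is an order with an extra charge.
    SURCHARGE = 5.0  # hypothetical value

    def total(self) -> float:
        return super().total() + self.SURCHARGE


if __name__ == "__main__":
    order = RushOrder(Customer("Bill"))
    order.add(OrderDetail("soup", 2, 3.0))
    print(order.customer.name, order.total())
```

Each relationship shows up in a different place in the code: association and aggregation as attributes, inheritance in the class header.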

Now with the Class diagram finished, you can lean back: if you have a good UML Modelling tool you simply click on the 'Generate Code' button and it will create stubs for all the classes with methods and attributes in your favorite programming language. By the way, don't expect your manager to understand class diagrams.

UML Models and Diagrams

The Unified Modeling Language is a standardized general-purpose modeling language and nowadays is managed as a de facto industry standard by the Object Management Group (OMG).[1] UML includes a set of graphic notation techniques to create visual models of software-intensive systems.[2]

History

UML was invented by James Rumbaugh, Grady Booch and Ivar Jacobson. After Rational Software Corporation hired James Rumbaugh from General Electric in 1994, the company became the source for the two most popular object-oriented modeling approaches of the day: Rumbaugh's Object-modeling technique (OMT), which was better for object-oriented analysis (OOA), and Grady Booch's Booch method, which was better for object-oriented design (OOD). They were soon assisted in their efforts by Ivar Jacobson, the creator of the object-oriented software engineering (OOSE) method. Jacobson joined Rational in 1995, after his company, Objectory AB,[3] was acquired by Rational.

Definition

The Unified Modeling Language (UML) is used to specify, visualize, modify, construct and document the artifacts of an object-oriented software-intensive system under development.[4] UML offers a standard way to visualize a system's architectural blueprints, including elements such as activities, actors, business processes, database schemas, components, programming language statements, and reusable software components.[5]

UML combines techniques from data modeling (entity relationship diagrams), business modeling (work flows), object modeling, and component modeling. It can be used with all processes, throughout the software development life cycle, and across different implementation technologies.[6]

Models and Diagrams

It is important to distinguish between the UML model and the set of diagrams of a system. A diagram is a partial graphic representation of a system's model. The model also contains documentation that drives the model elements and diagrams.

UML diagrams represent two different views of a system model [7]:

- Static (or structural) view: emphasizes the static structure of the system using objects, attributes, operations and relationships. The structural view includes class diagrams and composite structure diagrams.

- Dynamic (or behavioral) view: emphasizes the dynamic behavior of the system by showing collaborations among objects and changes to the internal states of objects. This view includes sequence diagrams, activity diagrams and state machine diagrams.

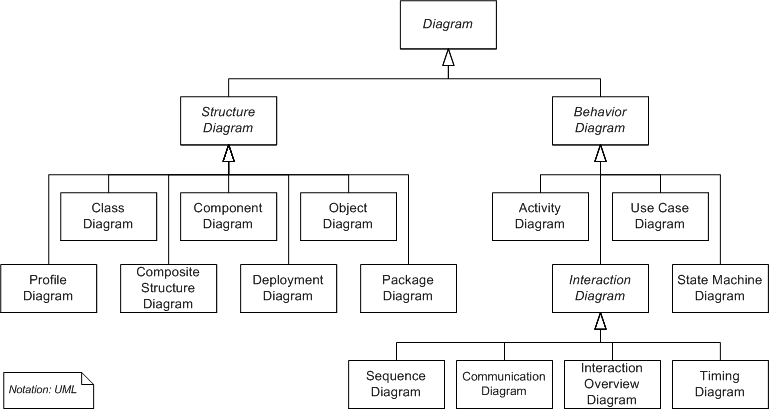

Diagrams Overview

In UML 2.2 there are 14 types of diagrams divided into two categories.[8] Seven diagram types represent structural information, and the other seven represent general types of behavior, including four that represent different aspects of interactions. These diagrams can be categorized hierarchically as shown in the following diagram:

Structure Diagrams

Structure diagrams emphasize the things that must be present in the system being modeled. Since structure diagrams represent the structure, they are used extensively in documenting the software architecture of software systems.

- Class diagram: describes the structure of a system by showing the system's classes, their attributes, and the relationships among the classes.

- Component diagram: describes how a software system is split up into components and shows the dependencies among these components.

- Composite structure diagram: describes the internal structure of a class and the collaborations that this structure makes possible.

- Deployment diagram: describes the hardware used in system implementations and the execution environments and artifacts deployed on the hardware.

- Object diagram: shows a complete or partial view of the structure of a modeled system at a specific time.

- Package diagram: describes how a system is split up into logical groupings by showing the dependencies among these groupings.

- Profile diagram: operates at the metamodel level to show stereotypes as classes with the <<stereotype>> stereotype, and profiles as packages with the <<profile>> stereotype. The extension relation (solid line with closed, filled arrowhead) indicates what metamodel element a given stereotype is extending.

Behaviour Diagrams

Behavior diagrams emphasize what must happen in the system being modeled. Since behavior diagrams illustrate the behavior of a system, they are used extensively to describe the functionality of software systems.

- Use case diagram: describes the functionality provided by a system in terms of actors, their goals represented as use cases, and any dependencies among those use cases.

- Activity diagram: describes the business and operational step-by-step workflows of components in a system. An activity diagram shows the overall flow of control.

- State machine diagram: describes the states and state transitions of the system.

Interaction Diagrams

Interaction diagrams, a subset of behaviour diagrams, emphasize the flow of control and data among the things in the system being modeled:

- Sequence diagram: shows how objects communicate with each other in terms of a sequence of messages. Also indicates the lifespans of objects relative to those messages.

- Communication diagram: shows the interactions between objects or parts in terms of sequenced messages. They represent a combination of information taken from Class, Sequence, and Use Case Diagrams describing both the static structure and dynamic behavior of a system.

- Interaction overview diagram: provides an overview in which the nodes represent interaction diagrams.

- Timing diagram: a specific type of interaction diagram where the focus is on timing constraints.

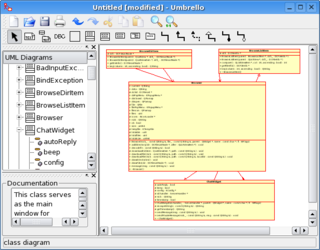

UML Modelling Tools

To draw UML diagrams, all you need is a pencil and a piece of paper. However, for a software engineer that seems a little outdated, hence most of us will use tools. The simplest tools are simply drawing programs, like Microsoft Visio or Dia. The diagrams generated this way look nice, but are not really that useful, since they do not include the code generation feature.

Hence, when deciding on a UML modelling tool (sometimes also called CASE tool)[9] you should make sure that it allows for code generation and, even better, that it also allows for reverse engineering. Combined, these two are also referred to as round-trip engineering. Any serious tool should be able to do that. Finally, UML models can be exchanged among UML tools by using the XMI interchange format, hence you should check that your tool of choice supports this.

Since the Rational Software Corporation, so to speak, 'invented' UML, the most well-known UML modelling tool is IBM Rational Rose. Other tools include Rational Rhapsody, Visual Paradigm, MagicDraw UML, StarUML, ArgoUML, Umbrello, BOUML, PowerDesigner, Visio and Dia. Some popular development environments also offer UML modelling tools, e.g. Eclipse, NetBeans, and Visual Studio. [10]

References

- ↑ http://www.omg.org/ Object Management Group

- ↑ http://en.wikipedia.org/w/index.php?title=Unified_Modeling_Language&oldid=413683022 Unified Modeling Language

- ↑ Objectory AB, known as Objectory System, was founded in 1987 by Ivar Jacobson. In 1991, it was acquired and became a subsidiary of Ericsson.

- ↑ Wikipedia:FOLDOC (2001). Unified Modeling Language last updated 2002-01-03. Accessed 6 feb 2009.

- ↑ Grady Booch, Ivar Jacobson & Jim Rumbaugh (2000) OMG Unified Modeling Language Specification, Version 1.3 First Edition: March 2000. Retrieved 12 August 2008.

- ↑ Satish Mishra (1997). "Visual Modeling & Unified Modeling Language (UML) : Introduction to UML". Rational Software Corporation. Accessed 9 Nov 2008.

- ↑ Jon Holt Institution of Electrical Engineers (2004). UML for Systems Engineering: Watching the Wheels IET, 2004, ISBN 0863413544. p.58

- ↑ UML Superstructure Specification Version 2.2. OMG, February 2009.

- ↑ http://en.wikipedia.org/wiki/Computer-aided_software_engineering Computer-aided software engineering

- ↑ http://en.wikipedia.org/wiki/List_of_Unified_Modeling_Language_tools List of Unified Modeling Language Tools

Labs

Lab 1a: StarUML (30 min)

We need to learn about a UML modelling tool. StarUML[1] is a free UML modelling tool that is quite powerful: it allows for forward and reverse engineering. It supports Java, C++ and C#. A small disadvantage is that it is no longer supported, hence it is limited to Java 1.4 and C# 2.0, which is very unfortunate.

Start StarUML, and select 'Empty Project' at the startup. Then in the Model Explorer add a new model by right-clicking on the 'untitled' entry. After that you can create all kinds of UML diagrams by right-clicking on the model.

More details can be found at the following StarUML Tutorial. [2]

Lab 1b: objectiF (30 min)

Another UML modelling tool, which is free for personal use is objectiF.[3] To learn how to use objectiF, take a look at their tutorial.[4]

Lab 2: Restaurant Example (30 min)

Take the restaurant example from class and create all the different UML diagrams we created in class using StarUML or your favorite UML modelling tool.

Once you are done with the class diagram, try out the 'Generate Code' feature. In StarUML you right-click with your mouse in the class diagram, and select 'Generate Code'. Look at the classes generated and compare them with your model.

Important Note: the restaurant example actually is not a very good example, because it may give you the impression that actors become objects. They don't. Actors never turn into objects; actors are always outside the system. Hence the Tetris example below is much better.

Lab 3: Tetris (60 min)

Most of us know the game of Tetris.[5][6] (However, I have had students who did not know it, so if you don't, please learn about it and play it for a little while before continuing with this lab.) If you recall from class, when inventing UML, the Three Amigos started with something that was called Object Oriented Analysis and Object Oriented Design. So let's do it.

Object Oriented Analysis and Design

We want to program the game Tetris. But before we can start, we need to analyze the game. First we need to identify 'objects', and second we must find out how they relate and talk to each other. So for this lab do the following:

- build teams of 5 to 6 students per team

- looking at the game, try to identify objects, for instance the bricks that are dropping are objects

- have each student represent an object, e.g., a brick

- what properties does that student/brick have? (color, shape, ...)

- how many bricks can there be, and how do they interact?

- is the player who plays the game also an object, or is she an actor?

- how does the player interact with the bricks?

- what restrictions exist on the movement of the bricks, and how would you implement them?

- how would you calculate a score for the game?
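One possible outcome of this analysis can be sketched in Python. Everything named here is an assumption made for illustration and not part of the lab: the classes `Brick` and `Board`, the board size of 10 by 20 cells, and the scoring table. Your team may well arrive at different objects and rules.

```python
from dataclasses import dataclass

BOARD_WIDTH = 10    # assumed board size, in cells
BOARD_HEIGHT = 20


@dataclass
class Brick:
    color: str
    cells: list     # shape, as a list of (column, row) offsets
    col: int = 4    # current position of the brick's origin
    row: int = 0

    def occupied(self, col=None, row=None):
        """Board cells the brick would cover with its origin at (col, row)."""
        col = self.col if col is None else col
        row = self.row if row is None else row
        return [(col + dc, row + dr) for dc, dr in self.cells]


class Board:
    def __init__(self):
        self.filled = set()   # cells covered by bricks that have landed

    def can_place(self, brick, col, row):
        """Movement restriction: stay on the board and avoid landed bricks."""
        return all(
            0 <= c < BOARD_WIDTH
            and 0 <= r < BOARD_HEIGHT
            and (c, r) not in self.filled
            for c, r in brick.occupied(col, row)
        )

    def move(self, brick, dcol, drow):
        """Move the brick if the restriction allows it; report the outcome."""
        if self.can_place(brick, brick.col + dcol, brick.row + drow):
            brick.col += dcol
            brick.row += drow
            return True
        return False


def score_for(lines_cleared):
    """Hypothetical scoring rule: clearing more lines at once pays more."""
    return {0: 0, 1: 100, 2: 300, 3: 500, 4: 800}[lines_cleared]
```

Note that the player does not appear as a class: in this sketch the player is an actor who calls `move` from outside the system.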

Game Time

Once you have identified all the objects, start playing the real game. For this, one student is the 'actor', one student writes the minutes (protocol), and the other students are objects (such as bricks). Now start the game: the actor and the different objects interact by talking to each other. The student who writes the minutes writes down who said what to whom, i.e., everything that was said between the actor and the objects and among the objects themselves.

Play the game for at least one or two turns. Does your simulation work? Did you forget something? Maybe you need to add another object, like a timer.

UML

We now need to make the connection between the play and UML.

- Use Case diagram: this is pretty straightforward. Identify the actor as the player, and write down all the use cases that the player can perform. One question you should consider is whether the timer is an actor. The Use Case diagram tells you how your system interacts with its environment. Draw the Use Case diagram for Tetris.

- Activity diagram: from your game you find that there is some repetition, and some decisions have to be made at different stages in the game. This can be nicely represented with an Activity diagram. Draw a high level Activity diagram for the game.

Next we come to our minutes (protocol): this contains all the information we need to draw a Sequence diagram and finally arrive at the Collaboration diagram.

- Sequence diagram: take a look at your minutes (protocol). First, identify the objects and put them horizontally. Then go through your minutes line by line. For every line in your minutes, draw the corresponding line in your Sequence diagram. As you can see, the Sequence diagram is in one-to-one correspondence with the minutes.

- Collaboration diagram: from the Sequence diagram it is easy to create the Collaboration diagram. Just do it.

- Class diagram (Association): from the Collaboration diagram, you can infer the classes and the methods the classes need. As for the attributes, some you have already identified (like the color and shape of the bricks); others you may have to think about for a little while. Draw the class diagram, and for every class try to guess what attributes are needed for the class to work properly.

- Class diagram (Inheritance): if you have some more experience with object-oriented languages, you may try to identify super classes, i.e., try to use inheritance to take into account common features of objects.

If you performed this lab properly, you will have learned a lot. First, you have seen that through a simulation it is possible to identify objects, connections between the objects, hidden requirements and defects in your assumptions. Second, a carefully written protocol of your simulation is enough to create Sequence, Collaboration and Class diagrams. So there is no magic in creating these diagrams; it is just playing a game. Although you may think this is just a funny or silly game, my advice to you is: play this game for every new project you start.
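To make the step from minutes to diagrams concrete, here is a minimal Python sketch. The sample minutes are invented, the function names are hypothetical, and treating the timer as an actor is only an assumption (the lab asks you to decide this yourself).

```python
from collections import defaultdict

# Hypothetical minutes: (sender, receiver, message), in the order spoken.
minutes = [
    ("Player", "Brick", "move_left"),
    ("Timer", "Brick", "drop"),
    ("Brick", "Board", "can_move"),
    ("Player", "Brick", "rotate"),
    ("Brick", "Board", "can_move"),
    ("Board", "Score", "add_points"),
]


def sequence_lines(minutes):
    """Sequence diagram: one numbered arrow per line of the minutes."""
    return [f"{i}. {s} -> {r}: {m}" for i, (s, r, m) in enumerate(minutes, 1)]


def collaboration_links(minutes):
    """Collaboration diagram: which pairs of participants talk at all."""
    return sorted({(s, r) for s, r, _ in minutes})


def class_methods(minutes, actors=("Player", "Timer")):
    """Class diagram: a message received by an object becomes its method.

    Actors stay outside the system, so they do not become classes.
    """
    methods = defaultdict(set)
    for _, receiver, message in minutes:
        if receiver not in actors:
            methods[receiver].add(message)
    return {cls: sorted(names) for cls, names in methods.items()}
```

Calling `class_methods(minutes)` on the sample above yields the classes `Brick`, `Board` and `Score` with the methods they were asked to perform. Attributes still have to be found by thinking about what each class needs.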

References

- ↑ http://staruml.sourceforge.net/en/ StarUML - The Open Source UML/MDA Platform

- ↑ http://cnx.org/content/m15092/latest/ StarUML Tutorial

- ↑ http://www.microtool.de/objectif/en/index.asp objectiF - Tool for Model-Driven Software Development with UML

- ↑ http://www.microtool.de/mT/pdf/objectiF/01/Tutorials/JavaTutorial.pdf Developing Java Applications with UML

- ↑ http://en.wikipedia.org/wiki/Tetris Tetris

- ↑ http://www.percederberg.net/games/tetris/index.html Java Tetris

Questions

- Give the names of two of the inventors of UML.

- Who manages the UML standard these days?

- Draw a UseCase diagram for the game of Breakout.

- Take a look at the following Activity diagram. Describe the flow of information in your own words.

- In class we introduced the example of a restaurant, where Ralph was the customer who wanted to eat dinner. His waiter was Linus, the cook was Larry and Steve was the cashier. Ralph was ordering a hamburger and beer. Please draw a Sequence diagram that displays the steps from the ordering of the food until Ralph gets his beer and burger.

- Consider the following class diagram. Please write Java classes that would implement this class diagram.

- You are supposed to write code for a money machine. Draw a UseCase diagram.

- Turn the following Sequence diagram into a class diagram.

- What is the difference between Structure diagrams and Behavior diagrams?

Process & Methodology

Introduction

First we need to take a brief look at the big picture. The software development process is a structure imposed on the development of a software product. It is made up of a set of activities and steps, with the goal of finding repeatable, predictable processes that improve productivity and quality.

Software Development Activities

The software development process consists of a set of activities and steps, which are

- Requirements

- Specification

- Architecture

- Design

- Implementation

- Testing

- Deployment

- Maintenance

Planning

An important task in creating a software product is extracting the requirements, also called requirements analysis. Customers typically have an abstract idea of what they want as an end result, but not of what the software should do. Skilled and experienced software engineers recognize incomplete, ambiguous, or even contradictory requirements at this point. Frequently demonstrating live code may help reduce the risk that the requirements are incorrect.

Once the general requirements are gathered from the client, the scope of the development should be analyzed and clearly stated. The result is often called a scope document.

Certain functionality may be out of scope of the project as a function of cost or as a result of unclear requirements at the start of development. If the development is done externally, this document can be considered a legal document so that if there are ever disputes, any ambiguity of what was promised to the client can be clarified.

Implementation, Testing and Documenting

Implementation is the part of the process where software engineers actually program the code for the project.

Software testing is an integral and important part of the software development process. This part of the process ensures that defects are recognized as early as possible.

Documenting the internal design of software for the purpose of future maintenance and enhancement is done throughout development. This may also include the writing of an API, be it external or internal. It is very important to document everything in the project.

Deployment and Maintenance

Deployment starts after the code is appropriately tested, is approved for release and sold or otherwise distributed into a production environment.

Software training and support are important, and many developers fail to realize that. It does not matter how much time and planning a development team puts into creating software if nobody in an organization ends up using it. People are often resistant to change and avoid venturing into an unfamiliar area, so as a part of the deployment phase, it is very important to have training classes for new clients of your software.

Maintaining and enhancing software to cope with newly discovered problems or new requirements can take far more time than the initial development of the software. It may be necessary to add code that does not fit the original design to correct an unforeseen problem or it may be that a customer is requesting more functionality and code can be added to accommodate their requests. If the labor cost of the maintenance phase exceeds 25% of the prior-phases' labor cost, then it is likely that the overall quality of at least one prior phase is poor. In that case, management should consider the option of rebuilding the system (or portions) before maintenance cost is out of control.
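The 25% rule of thumb above is simple arithmetic and can be sketched in Python. The function names and the person-day figures are hypothetical. The sketch assumes that "prior phases" means the sum of all phases before maintenance.

```python
def maintenance_ratio(maintenance_cost, prior_phase_costs):
    """Maintenance labor cost as a fraction of all prior phases' labor cost."""
    prior_total = sum(prior_phase_costs.values())
    if prior_total <= 0:
        raise ValueError("prior phases must have a positive labor cost")
    return maintenance_cost / prior_total


def should_consider_rebuild(maintenance_cost, prior_phase_costs, threshold=0.25):
    """True if maintenance exceeds the threshold share of prior labor cost."""
    return maintenance_ratio(maintenance_cost, prior_phase_costs) > threshold


# Invented labor costs in person-days, for illustration only.
prior = {"requirements": 40, "design": 60, "implementation": 200, "testing": 100}
```

With these figures the prior phases add up to 400 person-days, so 120 person-days of maintenance (30%) would trigger the warning, while exactly 100 person-days (25%) would not, because the rule speaks of exceeding 25%.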

References

External links

- Don't Write Another Process

- "No Silver Bullet: Essence and Accidents of Software Engineering", 1986

- Gerhard Fischer, "The Software Technology of the 21st Century: From Software Reuse to Collaborative Software Design", 2001

- Lydia Ash: The Web Testing Companion: The Insider's Guide to Efficient and Effective Tests, Wiley, May 2, 2003. ISBN 0-471-43021-8

- SaaSSDLC.com — Software as a Service Systems Development Life Cycle Project

- Software development life cycle (SDLC) [visual image], software development life cycle

- "Selecting an SDLC", 2009

- Heraprocess.org — Hera is a light process solution for managing web projects

Methodology

A software development methodology or system development methodology in software engineering is a framework that is used to structure, plan, and control the process of developing an information system.

History

Software development methodology frameworks did not emerge until the 1960s. According to Elliott (2004), the systems development life cycle (SDLC) can be considered the oldest formalized methodology framework for building information systems. The main idea of the SDLC has been "to pursue the development of information systems in a very deliberate, structured and methodical way, requiring each stage of the life cycle from inception of the idea to delivery of the final system, to be carried out rigidly and sequentially"[1] within the context of the framework being applied. The main target of this methodology framework in the 1960s was "to develop large scale functional business systems in an age of large scale business conglomerates. Information systems activities revolved around heavy data processing and number crunching routines".[1]

As a noun

As a noun, a software development methodology is a framework that is used to structure, plan, and control the process of developing an information system - this includes the pre-definition of specific deliverables and artifacts that are created and completed by a project team to develop or maintain an application.[2]

A wide variety of such frameworks have evolved over the years, each with its own recognized strengths and weaknesses. One software development methodology framework is not necessarily suitable for use by all projects. Each of the available methodology frameworks is best suited to specific kinds of projects, based on various technical, organizational, project and team considerations.[2]

These software development frameworks are often bound to some kind of organization, which further develops the methodology framework, supports its use, and promotes it. The methodology framework is often defined in some kind of formal documentation. Specific software development methodology frameworks (noun) include:

- Rational Unified Process (RUP, IBM) since 1998.

- Agile Unified Process (AUP) since 2005 by Scott Ambler

As a verb

As a verb, the software development methodology is an approach used by organizations and project teams to apply the software development methodology framework (noun). Specific software development methodologies (verb) include:

- 1970s

  - Structured programming since 1969

  - Cap Gemini SDM, originally from PANDATA; the first English translation was published in 1974. SDM stands for System Development Methodology

- 1980s

  - Structured Systems Analysis and Design Methodology (SSADM) from 1980 onwards

  - Information Requirement Analysis/Soft systems methodology

- 1990s

  - Object-oriented programming (OOP) has been developed since the early 1960s, and became a dominant programming approach during the mid-1990s

  - Rapid application development (RAD) since 1991

  - Scrum, since the late 1990s

  - Team software process developed by Watts Humphrey at the SEI

  - Extreme Programming since 1999

Verb approaches

Every software development methodology framework acts as a basis for applying specific approaches to develop and maintain software. Several software development approaches have been used since the origin of information technology. These are:[2]

- Waterfall: a linear framework

- Prototyping: an iterative framework

- Incremental: a combined linear-iterative framework

- Spiral: a combined linear-iterative framework

- Rapid application development (RAD): an iterative framework

- Extreme Programming

Waterfall development

The Waterfall model is a sequential development approach, in which development is seen as flowing steadily downwards (like a waterfall) through the phases of requirements analysis, design, implementation, testing (validation), integration, and maintenance. The first formal description of the method is often cited as an article published by Winston W. Royce[3] in 1970, although Royce did not use the term "waterfall" in this article.

The basic principles are:[2]

- Project is divided into sequential phases, with some overlap and splashback acceptable between phases.

- Emphasis is on planning, time schedules, target dates, budgets and implementation of an entire system at one time.

- Tight control is maintained over the life of the project via extensive written documentation, formal reviews, and approval/signoff by the user and information technology management occurring at the end of most phases before beginning the next phase.

Prototyping

Software prototyping is the development approach of creating prototypes during software development, i.e., incomplete versions of the software program being developed.

The basic principles are:[2]

- Not a standalone, complete development methodology, but rather an approach to handling selected parts of a larger, more traditional development methodology (i.e. incremental, spiral, or rapid application development (RAD)).

- Attempts to reduce inherent project risk by breaking a project into smaller segments and providing more ease-of-change during the development process.

- User is involved throughout the development process, which increases the likelihood of user acceptance of the final implementation.

- Small-scale mock-ups of the system are developed following an iterative modification process until the prototype evolves to meet the users’ requirements.

- While most prototypes are developed with the expectation that they will be discarded, it is possible in some cases to evolve from prototype to working system.

- A basic understanding of the fundamental business problem is necessary to avoid solving the wrong problem.

Incremental development

Various methods are acceptable for combining linear and iterative systems development methodologies, with the primary objective of each being to reduce inherent project risk by breaking a project into smaller segments and providing more ease-of-change during the development process.

The basic principles are:[2]

- A series of mini-Waterfalls is performed, where all phases of the Waterfall are completed for a small part of a system, before proceeding to the next increment, or

- Overall requirements are defined before proceeding to evolutionary, mini-Waterfall development of individual increments of a system, or

- The initial software concept, requirements analysis, and design of architecture and system core are defined via Waterfall, followed by iterative Prototyping, which culminates in installing the final prototype, a working system.
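The first of these variants, a series of mini-Waterfalls, can be sketched in a few lines of Python. The phase names are taken from the Waterfall description above. The function names and the sample increments are hypothetical, and the sketch only records the order in which work happens.

```python
PHASES = [
    "requirements analysis",
    "design",
    "implementation",
    "testing",
    "integration",
    "maintenance",
]


def waterfall(part):
    """Run every phase once, in order, for one part of the system."""
    return [f"{phase}: {part}" for phase in PHASES]


def incremental(parts):
    """Mini-Waterfalls: finish all phases for one increment before the next."""
    log = []
    for part in parts:
        log.extend(waterfall(part))
    return log
```

For example, `incremental(["board", "scoring"])` lists all six phases for the board first and only then starts requirements analysis for the scoring, whereas a single Waterfall would treat the whole system as one part.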

Spiral development

The spiral model is a software development process combining elements of both design and prototyping-in-stages, in an effort to combine advantages of top-down and bottom-up concepts.

The basic principles are:[2]

- Focus is on risk assessment and on minimizing project risk by breaking a project into smaller segments and providing more ease-of-change during the development process, as well as providing the opportunity to evaluate risks and weigh consideration of project continuation throughout the life cycle.

- "Each cycle involves a progression through the same sequence of steps, for each part of the product and for each of its levels of elaboration, from an overall concept-of-operation document down to the coding of each individual program."[4]

- Each trip around the spiral traverses four basic quadrants: (1) determine objectives, alternatives, and constraints of the iteration; (2) evaluate alternatives, and identify and resolve risks; (3) develop and verify deliverables from the iteration; and (4) plan the next iteration.[4][5]

- Begin each cycle with an identification of stakeholders and their win conditions, and end each cycle with review and commitment.[6]

Rapid application development

Rapid application development (RAD) is a software development methodology, which involves iterative development and the construction of prototypes. Rapid application development is a term originally used to describe a software development process introduced by James Martin in 1991.

The basic principles are:[2]

- Key objective is for fast development and delivery of a high quality system at a relatively low investment cost.

- Attempts to reduce inherent project risk by breaking a project into smaller segments and providing more ease-of-change during the development process.

- Aims to produce high quality systems quickly, primarily via iterative Prototyping (at any stage of development), active user involvement, and computerized development tools. These tools may include Graphical User Interface (GUI) builders, Computer Aided Software Engineering (CASE) tools, Database Management Systems (DBMS), fourth-generation programming languages, code generators, and object-oriented techniques.

- Key emphasis is on fulfilling the business need, while technological or engineering excellence is of lesser importance.

- Project control involves prioritizing development and defining delivery deadlines or “timeboxes”. If the project starts to slip, emphasis is on reducing requirements to fit the timebox, not on extending the deadline.

- Generally includes joint application design (JAD), where users are intensely involved in system design, via consensus building in either structured workshops, or electronically facilitated interaction.

- Active user involvement is imperative.

- Iteratively produces production software, as opposed to a throwaway prototype.

- Produces documentation necessary to facilitate future development and maintenance.

- Standard systems analysis and design methods can be fitted into this framework.
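The timebox principle above (reduce requirements instead of moving the deadline) can be illustrated with a small Python sketch. The function name, the tuple layout and the sample requirements are assumptions for illustration. The greedy selection by priority is just one simple policy, not a prescribed RAD algorithm.

```python
def fit_to_timebox(requirements, timebox_days):
    """Keep the most important requirements that fit into the timebox.

    requirements: list of (name, priority, estimate_days), where a lower
    priority number means more important. The deadline itself never moves.
    """
    kept, dropped, used = [], [], 0
    for name, _priority, days in sorted(requirements, key=lambda r: r[1]):
        if used + days <= timebox_days:
            kept.append(name)
            used += days
        else:
            dropped.append(name)
    return kept, dropped


# Invented example: four requirements competing for a 12-day timebox.
requirements = [
    ("login", 1, 5),
    ("reports", 3, 8),
    ("search", 2, 4),
    ("export", 4, 2),
]
```

Here `fit_to_timebox(requirements, 12)` keeps login, search and export, and postpones reports to a later timebox.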

Other practices

Other methodology practices include:

- Object-oriented development methodologies, such as Grady Booch's object-oriented design (OOD), also known as object-oriented analysis and design (OOAD). The Booch model includes six diagrams: class, object, state transition, interaction, module, and process.[7]

- Top-down programming: evolved in the 1970s by IBM researcher Harlan Mills (and Niklaus Wirth) as part of the development of structured programming.

- Unified Process (UP) is an iterative software development methodology framework, based on Unified Modeling Language (UML). UP organizes the development of software into four phases, each consisting of one or more executable iterations of the software at that stage of development: inception, elaboration, construction, and transition. Many tools and products exist to facilitate UP implementation. One of the more popular versions of UP is the Rational Unified Process (RUP).

- Agile software development refers to a group of software development methodologies based on iterative development, where requirements and solutions evolve via collaboration between self-organizing cross-functional teams. The term was coined in the year 2001 when the Agile Manifesto was formulated.

- Integrated software development refers to a deliverable based software development framework using the three primary IT (project management, software development, software testing) life cycles that can be leveraged using multiple (iterative, waterfall, spiral, agile) software development approaches, where requirements and solutions evolve via collaboration between self-organizing cross-functional teams.

Software development is therefore not an easy task; it involves various processes. According to IBM Research: “Software development refers to a set of computer science activities dedicated to the process of creating, designing, deploying and supporting software.”

Subtopics

View model

A view model is a framework which provides the viewpoints on the system and its environment, to be used in the software development process. It is a graphical representation of the underlying semantics of a view.

The purpose of viewpoints and views is to enable human engineers to comprehend very complex systems, and to organize the elements of the problem and the solution around domains of expertise. In the engineering of physically intensive systems, viewpoints often correspond to capabilities and responsibilities within the engineering organization.[8]

Most complex system specifications are so extensive that no one individual can fully comprehend all aspects of the specifications. Furthermore, we all have different interests in a given system and different reasons for examining the system's specifications. A business executive will ask different questions about a system's make-up than a system implementer would. The concept of a viewpoints framework, therefore, is to provide separate viewpoints into the specification of a given complex system. These viewpoints each satisfy an audience with interest in some set of aspects of the system. Associated with each viewpoint is a viewpoint language that optimizes the vocabulary and presentation for the audience of that viewpoint.

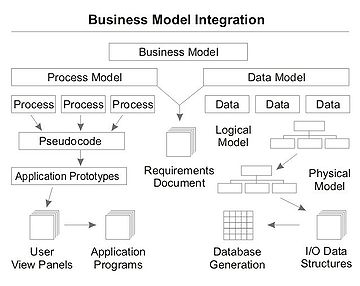

Business process and data modelling

Graphical representation of the current state of information provides a very effective means for presenting information to both users and system developers.

- A business model illustrates the functions associated with the business process being modeled and the organizations that perform these functions. By depicting activities and information flows, a foundation is created to visualize, define, understand, and validate the nature of a process.

- A data model provides the details of information to be stored, and is of primary use when the final product is the generation of computer software code for an application or the preparation of a functional specification to aid a computer software make-or-buy decision. See the figure on the right for an example of the interaction between business process and data models.[9]

Usually, a model is created after conducting an interview, referred to as business analysis. The interview consists of a facilitator asking a series of questions designed to extract required information that describes a process. The interviewer is called a facilitator to emphasize that it is the participants who provide the information. The facilitator should have some knowledge of the process of interest, but this is not as important as having a structured methodology by which the questions are asked of the process expert. The methodology is important because usually a team of facilitators is collecting information across the facility and the results of the information from all the interviewers must fit together once completed.[9]

The models are developed as defining either the current state of the process, in which case the final product is called the "as-is" snapshot model, or a collection of ideas of what the process should contain, resulting in a "what-can-be" model. Generation of process and data models can be used to determine if the existing processes and information systems are sound and only need minor modifications or enhancements, or if re-engineering is required as a corrective action. The creation of business models is more than a way to view or automate your information process. Analysis can be used to fundamentally reshape the way your business or organization conducts its operations.[9]

Computer-aided software engineering

Computer-aided software engineering (CASE), in the field of software engineering, is the scientific application of a set of tools and methods to software, with the aim of producing high-quality, defect-free, and maintainable software products.[10] It also refers to methods for the development of information systems together with automated tools that can be used in the software development process.[11] The term "computer-aided software engineering" (CASE) can refer to the software used for the automated development of systems software, i.e., computer code. The CASE functions include analysis, design, and programming. CASE tools automate methods for designing, documenting, and producing structured computer code in the desired programming language.[12]

Two key ideas of Computer-aided Software System Engineering (CASE) are:[13]

- Foster computer assistance in software development and/or software maintenance processes, and

- An engineering approach to software development and/or maintenance.

Typical CASE tools exist for configuration management, data modeling, model transformation, refactoring, source code generation, and Unified Modeling Language.

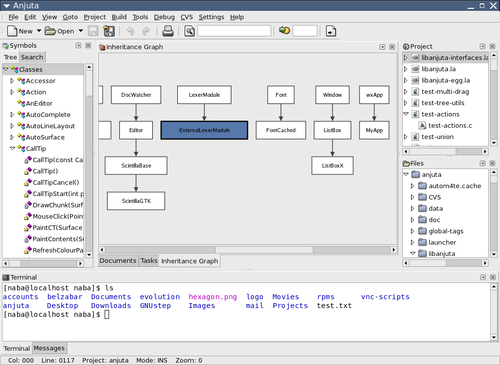

Integrated development environment

An integrated development environment (IDE), also known as an integrated design environment or integrated debugging environment, is a software application that provides comprehensive facilities to computer programmers for software development. An IDE normally consists of:

- a source code editor,

- a compiler and/or interpreter,

- build automation tools, and

- a debugger (usually).

IDEs are designed to maximize programmer productivity by providing tight-knit components with similar user interfaces. Typically an IDE is dedicated to a specific programming language, so as to provide a feature set which most closely matches the programming paradigms of the language.

Modeling language

A modeling language is any artificial language that can be used to express information or knowledge or systems in a structure that is defined by a consistent set of rules. The rules are used for interpretation of the meaning of components in the structure. A modeling language can be graphical or textual.[14] Graphical modeling languages use diagram techniques with named symbols that represent concepts, lines that connect the symbols and represent relationships, and various other graphical annotations to represent constraints. Textual modeling languages typically use standardised keywords accompanied by parameters to make computer-interpretable expressions.

Examples of graphical modelling languages in the field of software engineering are:

- Business Process Modeling Notation (BPMN, and the XML form BPML) is an example of a process modeling language.

- EXPRESS and EXPRESS-G (ISO 10303-11) is an international standard general-purpose data modeling language.

- Extended Enterprise Modeling Language (EEML) is commonly used for business process modeling across layers.

- Flowchart is a schematic representation of an algorithm or a stepwise process.

- Fundamental Modeling Concepts (FMC) is a modeling language for software-intensive systems.

- IDEF is a family of modeling languages, the most notable of which include IDEF0 for functional modeling, IDEF1X for information modeling, and IDEF5 for modeling ontologies.

- LePUS3 is an object-oriented visual Design Description Language and a formal specification language that is suitable primarily for modelling large object-oriented (Java, C++, C#) programs and design patterns.

- Specification and Description Language (SDL) is a specification language targeted at the unambiguous specification and description of the behaviour of reactive and distributed systems.

- Unified Modeling Language (UML) is a general-purpose modeling language that is an industry standard for specifying software-intensive systems. UML 2.0, the current version, supports thirteen different diagram techniques, and has widespread tool support.

Not all modeling languages are executable, and for those that are, using them doesn't necessarily mean that programmers are no longer needed. On the contrary, executable modeling languages are intended to amplify the productivity of skilled programmers, so that they can address more difficult problems, such as parallel computing and distributed systems.

Programming paradigm

A programming paradigm is a fundamental style of computer programming, in contrast to a software engineering methodology, which is a style of solving specific software engineering problems. Paradigms differ in the concepts and abstractions used to represent the elements of a program (such as objects, functions, variables, constraints...) and the steps that compose a computation (assignment, evaluation, continuations, data flows...).

A programming language can support multiple paradigms. For example, programs written in C++ or Object Pascal can be purely procedural, or purely object-oriented, or contain elements of both paradigms. Software designers and programmers decide how to use those paradigm elements. In object-oriented programming, programmers can think of a program as a collection of interacting objects, while in functional programming a program can be thought of as a sequence of stateless function evaluations. When programming computers or systems with many processors, process-oriented programming allows programmers to think about applications as sets of concurrent processes acting upon logically shared data structures.

Just as different groups in software engineering advocate different methodologies, different programming languages advocate different programming paradigms. Some languages are designed to support one paradigm (Smalltalk supports object-oriented programming, Haskell supports functional programming), while other programming languages support multiple paradigms (such as Object Pascal, C++, C#, Visual Basic, Common Lisp, Scheme, Python, Ruby, and Oz).
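As a small illustration of one language supporting several paradigms, the following Python sketch solves the same task (summing the squares of the even numbers in a list) in a procedural, an object-oriented and a functional style. The task and all names are made up for this example.

```python
from functools import reduce


# Procedural style: a sequence of statements that update a variable.
def sum_even_squares_procedural(values):
    total = 0
    for v in values:
        if v % 2 == 0:
            total += v * v
    return total


# Object-oriented style: an object keeps the state and offers the behaviour.
class EvenSquareAccumulator:
    def __init__(self):
        self.total = 0

    def add(self, value):
        if value % 2 == 0:
            self.total += value * value


def sum_even_squares_oo(values):
    accumulator = EvenSquareAccumulator()
    for v in values:
        accumulator.add(v)
    return accumulator.total


# Functional style: stateless function evaluations, no assignment to state.
def sum_even_squares_functional(values):
    evens = filter(lambda v: v % 2 == 0, values)
    return reduce(lambda acc, v: acc + v * v, evens, 0)
```

All three functions return the same result for the same input; they differ only in how the computation is expressed.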

Many programming paradigms are as well known for what methods they forbid as for what they enable. For instance, pure functional programming forbids using side-effects; structured programming forbids using goto statements. Partly for this reason, new paradigms are often regarded as doctrinaire or overly rigid by those accustomed to earlier styles. Avoiding certain methods can make it easier to prove theorems about a program's correctness, or simply to understand its behavior.

Software framework

A software framework is a re-usable design for a software system or subsystem. A software framework may include support programs, code libraries, a scripting language, or other software to help develop and glue together the different components of a software project. Various parts of the framework may be exposed via an API.

Software development process

A software development process is a framework imposed on the development of a software product. Synonyms include software life cycle and software process. There are several models for such processes, each describing approaches to a variety of tasks or activities that take place during the process.

A growing number of software development organizations implement process methodologies. Many of them are in the defense industry, which in the U.S. requires a rating based on 'process models' to obtain contracts. The international standard describing the method to select, implement and monitor the life cycle for software is ISO 12207.

A decades-long goal has been to find repeatable, predictable processes that improve productivity and quality. Some try to systematize or formalize the seemingly unruly task of writing software. Others apply project management methods to writing software. Without project management, software projects can easily be delivered late or over budget. With large numbers of software projects not meeting their expectations in terms of functionality, cost, or delivery schedule, effective project management appears to be lacking.

See also

- Lists

- List of software engineering topics

- List of software development philosophies

- Related topics

- Domain-specific modeling

- Lightweight methodology

- Object modeling language

- Structured programming

- Integrated IT Methodology

References

- Geoffrey Elliott (2004). Global Business Information Technology: an integrated systems approach. Pearson Education. p. 87.

- Centers for Medicare & Medicaid Services (CMS) Office of Information Service (2008). Selecting a development approach. Webarticle. United States Department of Health and Human Services (HHS). Revalidated: March 27, 2008. Retrieved 15 July 2015.

- Wasserfallmodell > Entstehungskontext, Markus Rerych, Institut für Gestaltungs- und Wirkungsforschung, TU-Wien. Accessed online November 28, 2007.

- Barry Boehm (1986). "A Spiral Model of Software Development and Enhancement". In: ACM SIGSOFT Software Engineering Notes (ACM) 11(4):14-24, August 1986.

- Richard H. Thayer, Barry W. Boehm (1986). Tutorial: software engineering project management. Computer Society Press of the IEEE. p. 130.

- Barry W. Boehm (2000). Software cost estimation with Cocomo II: Volume 1.

- Georges Gauthier Merx & Ronald J. Norman (2006). Unified Software Engineering with Java. p. 201.

- Edward J. Barkmeyer et al. (2003). Concepts for Automating Systems Integration. NIST 2003.

- Paul R. Smith & Richard Sarfaty (1993). Creating a strategic plan for configuration management using Computer Aided Software Engineering (CASE) tools. Paper for 1993 National DOE/Contractors and Facilities CAD/CAE User's Group.

- Kuhn, D.L. (1989). "Selecting and effectively using a computer aided software engineering tool". Annual Westinghouse computer symposium; 6-7 Nov 1989; Pittsburgh, PA (USA); DOE Project.

- P. Loucopoulos and V. Karakostas (1995). System Requirements Engineering. McGraw-Hill.

- CASE definition. In: Telecom Glossary 2000. Retrieved 26 Oct 2008.

- K. Robinson (1992). Putting the Software Engineering into CASE. New York: John Wiley and Sons Inc.

- Xiao He (2007). "A metamodel for the notation of graphical modeling languages". In: Computer Software and Applications Conference, 2007. COMPSAC 2007. 31st Annual International, Volume 1, 24–27 July 2007, pp. 219-224.

External links

- Selecting a development approach at cms.gov.

- Software Methodologies Book Reviews An extensive set of book reviews related to software methodologies and processes

V-Model

The V-model represents a software development process (also applicable to hardware development) which may be considered an extension of the waterfall model. Instead of moving down in a linear way, the process steps are bent upwards after the coding phase, to form the typical V shape. The V-model demonstrates the relationships between each phase of the development life cycle and its associated phase of testing. The horizontal and vertical axes represent time or project completeness (left-to-right) and level of abstraction (coarsest-grain abstraction uppermost), respectively.

Verification Phases

Requirements analysis

In the requirements analysis phase, the requirements of the proposed system are collected by analyzing the needs of the user(s). This phase is concerned with establishing what the ideal system has to do. However, it does not determine how the software will be designed or built. Usually, the users are interviewed and a document called the user requirements document is generated.

The user requirements document will typically describe the system's functional, interface, performance, data, security and other requirements as expected by the user. It is used by business analysts to communicate their understanding of the system to the users. The users carefully review this document because it serves as the guideline for the system designers in the system design phase. The user acceptance tests are designed in this phase. See also Functional requirements.

There are different methods for gathering requirements, drawn from both soft and hard methodologies, including interviews, questionnaires, document analysis, observation, throw-away prototypes, use cases, and static and dynamic views developed with users.

System Design

System design is the phase where system engineers analyze and understand the business of the proposed system by studying the user requirements document. They figure out possibilities and techniques by which the user requirements can be implemented. If any of the requirements are not feasible, the user is informed of the issue. A resolution is found and the user requirements document is edited accordingly.

The software specification document, which serves as a blueprint for the development phase, is generated. This document contains the general system organization, menu structures, data structures and so on. It may also hold example business scenarios, sample windows and reports to aid understanding. Other technical documentation, such as entity diagrams and a data dictionary, will also be produced in this phase. The documents for system testing are prepared in this phase.

Architecture Design

The design of the computer architecture and software architecture can also be referred to as high-level design. The baseline in selecting the architecture is that it should realize all the requirements. The high-level design document typically consists of the list of modules, the brief functionality of each module, their interface relationships, dependencies, database tables, architecture diagrams, technology details and so on. The integration testing design is carried out in this phase.

Module Design

The module design phase can also be referred to as low-level design. The designed system is broken up into smaller units or modules, and each of them is explained so that the programmer can start coding directly. The low-level design document or program specification will contain the detailed functional logic of the module in pseudocode, together with:

- database tables, with all elements, including their type and size

- all interface details with complete API references

- all dependency issues

- error message listings

- complete inputs and outputs for a module.

The unit test design is developed in this stage.
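The pairing between verification phases and test levels stated in the phase descriptions above can be summarised in a small lookup. This is only an illustrative sketch; the names `V_MODEL_PAIRS`, `level_designed_in`, and `execution_order` are invented for the example.

```python
# Each verification phase (left arm of the V) and the test level designed during it.
V_MODEL_PAIRS = [
    ("Requirements analysis", "User acceptance testing"),
    ("System design", "System testing"),
    ("Architecture design", "Integration testing"),
    ("Module design", "Unit testing"),
]

def level_designed_in(phase):
    """Return the test level whose design is prepared during the given phase."""
    for verification, validation in V_MODEL_PAIRS:
        if verification == phase:
            return validation
    raise KeyError(phase)

def execution_order():
    """Test levels are executed in the reverse order of the phases that designed them."""
    return [validation for _, validation in reversed(V_MODEL_PAIRS)]
```

Reading the list top to bottom follows the left arm of the V downwards; `execution_order()` walks the right arm upwards, starting with unit testing and ending with user acceptance testing.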

Validation Phases

Unit Testing

In computer programming, unit testing is a method by which individual units of source code are tested to determine whether they are fit for use. A unit is the smallest testable part of an application; in procedural programming, a unit may be an individual function or procedure. Unit tests are created by programmers or occasionally by white-box testers. The purpose is to verify the internal logic of the code by exercising every possible branch within the function; the degree to which this is achieved is known as test coverage. Tools are used to facilitate this process: static analysis can identify the branches to be covered, and variations of input data are then passed to the function to exercise each possible case of execution.
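A minimal sketch of branch-oriented unit testing, using Python's standard `unittest` module, might look as follows. The function `classify_age` is a hypothetical unit invented for the example; each test method feeds it input chosen to exercise one branch.

```python
import unittest

def classify_age(age):
    """Hypothetical unit under test with three branches."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 18:
        return "minor"
    return "adult"

class ClassifyAgeTest(unittest.TestCase):
    # One test per branch, using the boundary values 17 and 18.
    def test_negative_age_is_rejected(self):
        with self.assertRaises(ValueError):
            classify_age(-1)

    def test_below_eighteen_is_minor(self):
        self.assertEqual(classify_age(17), "minor")

    def test_eighteen_and_above_is_adult(self):
        self.assertEqual(classify_age(18), "adult")

def run_tests():
    """Load and run the test case, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClassifyAgeTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_tests()` executes the three tests. Choosing inputs on either side of each condition is what lets a small number of tests reach every branch.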

Integration Testing

In integration testing the separate modules will be tested together to expose faults in the interfaces and in the interaction between integrated components. Testing is usually black box as the code is not directly checked for errors.
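As a hedged illustration, the sketch below combines two hypothetical modules and tests them only through their combined interface, in black-box fashion. The names `parse_order`, `price_order`, and `order_total`, and the price table, are assumptions made for the example.

```python
# Two hypothetical modules that would each have their own unit tests.
def parse_order(line):
    """Module A: turn 'item,quantity' text into a dict."""
    item, quantity = line.split(",")
    return {"item": item.strip(), "quantity": int(quantity)}

PRICES = {"apple": 2, "pear": 3}

def price_order(order):
    """Module B: price an order dict produced by module A."""
    return PRICES[order["item"]] * order["quantity"]

def order_total(line):
    """Integration point: the output of A must match the input B expects."""
    return price_order(parse_order(line))
```

An integration test calls `order_total` with raw text and checks only the final number. A mismatch between the two modules, such as module A emitting a key that module B does not read, would surface here even if each module passed its own unit tests.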

System Testing

After the integration test is completed, the next test level is the system test. System testing compares the actual system against the system specifications; that is, it checks whether the integrated product meets the specified requirements. Why is this still necessary after the component and integration tests? The reasons are as follows:

Reasons for system test

- In the lower test levels, the testing was done against technical specifications, i.e., from the technical perspective of the software producer. The system test, though, looks at the system from the perspective of the customer and the future user. The testers validate whether the requirements are completely and appropriately met.

- Example: The customer (who has ordered and paid for the system) and the user (who uses the system) can be different groups of people or organizations with their own specific interests and requirements of the system.

- Many functions and system characteristics result from the interaction of all system components; consequently, they are only visible at the level of the entire system and can only be observed and tested there.

User Acceptance Testing

Acceptance testing is the phase of testing used to determine whether a system satisfies the requirements specified in the requirements analysis phase. The acceptance test design is derived from the requirements document. The acceptance test phase is the phase used by the customer to determine whether to accept the system or not.

Acceptance testing helps

- to determine whether a system satisfies its acceptance criteria or not.

- to enable the customer to determine whether to accept the system or not.

- to test the software in the "real world" by the intended audience.

Purpose of acceptance testing:

- to verify the system or changes according to the original needs.

Procedures

- Define the acceptance criteria:

  - Functionality requirements.

  - Performance requirements.

  - Interface quality requirements.

  - Overall software quality requirements.

- Develop an acceptance plan:

  - Project description.

  - User responsibilities.

  - Acceptance description.

- Execute the acceptance test plan.
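The procedure above could be sketched in code as a list of acceptance criteria, each paired with an executable check, followed by a run that reports whether the system would be accepted. Everything here is hypothetical: the `Criterion` structure, the `run_acceptance` function, and the toy `search` system are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Criterion:
    category: str            # e.g. "functionality" or "performance"
    description: str
    check: Callable[[], bool]

def run_acceptance(criteria: List[Criterion]):
    """Execute every check and return (accepted, descriptions of failed criteria)."""
    failed = [c.description for c in criteria if not c.check()]
    return (not failed, failed)

# Hypothetical system under acceptance.
def search(catalogue, term):
    return [name for name in catalogue if term in name]

catalogue = ["red apple", "green apple", "pear"]

# Hypothetical acceptance criteria agreed with the customer.
criteria = [
    Criterion("functionality", "search finds matching items",
              lambda: search(catalogue, "apple") == ["red apple", "green apple"]),
    Criterion("functionality", "search returns nothing for unknown terms",
              lambda: search(catalogue, "kiwi") == []),
]
```

Calling `run_acceptance(criteria)` returns a pair: a flag saying whether every criterion was met, and the descriptions of any that were not, which gives the customer a concrete basis for accepting or rejecting the system.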

References

- Clarus Concept of Operations. Publication No. FHWA-JPO-05-072, Federal Highway Administration (FHWA), 2005.

Further reading

- Roger S. Pressman: Software Engineering: A Practitioner's Approach, The McGraw-Hill Companies, ISBN 007301933X

- Mark Hoffman & Ted Beaumont: Application Development: Managing the Project Life Cycle, Mc Press, ISBN 1883884454

- Boris Beizer: Software Testing Techniques. Second Edition, International Thomson Computer Press, 1990, ISBN 1-85032-880-3

Agile Model

Agile software development is a group of software development methodologies based on iterative and incremental development, where requirements and solutions evolve through collaboration between self-organizing, cross-functional teams. The Agile Manifesto[1] introduced the term in 2001.

History

Predecessors

Incremental software development methods have been traced back to 1957.[2] In 1974, a paper by E. A. Edmonds introduced an adaptive software development process.[3]

So-called "lightweight" software development methods evolved in the mid-1990s as a reaction against "heavyweight" methods, which were characterized by their critics as a heavily regulated, regimented, micromanaged, waterfall model of development. Proponents of lightweight methods (and now "agile" methods) contend that they are a return to development practices from early in the history of software development.[2]

Early implementations of lightweight methods include Scrum (1995), Crystal Clear, Extreme Programming (1996), Adaptive Software Development, Feature Driven Development, and Dynamic Systems Development Method (DSDM) (1995). These are now typically referred to as agile methodologies, after the Agile Manifesto published in 2001.[4]

Agile Manifesto

In February 2001, 17 software developers[5] met at a ski resort in Snowbird, Utah, to discuss lightweight development methods. They published the "Manifesto for Agile Software Development"[1] to define the approach now known as agile software development. Some of the manifesto's authors formed the Agile Alliance, a nonprofit organization that promotes software development according to the manifesto's principles.

The Agile Manifesto reads, in its entirety, as follows:[1]

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

Twelve principles underlie the Agile Manifesto, including:[6]

- Customer satisfaction by rapid delivery of useful software

- Welcome changing requirements, even late in development

- Working software is delivered frequently (weeks rather than months)

- Working software is the principal measure of progress