Local Area Network design/Quality of service in IEEE 802 LANs

Quality of service in traffic forwarding is required when resources are limited, so that the offered traffic exceeds the capacity to drain data and congestion arises.

LANs are usually over-provisioned, because expanding the network is much cheaper than enforcing quality of service → in the worst case, channel occupancy is 30-40% of the available bandwidth → apparently there is no need for quality of service because there is no congestion.

Problems may occur in some possible scenarios:

- backbone not well dimensioned: a bridge with too-small buffers in the backbone may lead to micro-congestion on uplinks: the congestion events are not persistent, but they are numerous and short-lived (micro) because client traffic is extremely bursty;

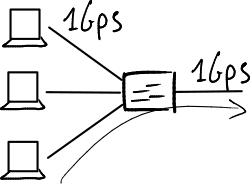

- data transfer from several clients to a single server: a bridge with too-small buffers acting as the single access point to a server may lead to persistent congestion, because several clients transmit concurrently;

- data transfer from a fast server to a slow client: a slow client (in terms of link speed, CPU capacity, etc.) may lead to temporary congestion on the client itself because it cannot drain the traffic coming from a fast server.
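The difference between micro-congestion and persistent congestion can be illustrated with a toy model of one output port. This is only a sketch under simplifying assumptions: time is divided into ticks, frames are counted rather than sized, and the function name `simulate` and all the numbers are hypothetical.

```python
def simulate(arrivals_per_tick, drain_per_tick, buffer_size):
    """Return (frames dropped, peak queue occupancy) for one output port."""
    queue = 0
    dropped = 0
    peak = 0
    for arriving in arrivals_per_tick:
        # Frames that do not fit in the free buffer space are dropped.
        accepted = min(arriving, buffer_size - queue)
        dropped += arriving - accepted
        queue += accepted
        peak = max(peak, queue)
        # The port then drains at most drain_per_tick frames.
        queue -= min(queue, drain_per_tick)
    return dropped, peak


# Bursty uplink: average load (3 frames/tick) is below the drain rate (4),
# yet each burst overflows a small buffer (micro-congestion).
bursty = [12, 0, 0, 0, 12, 0, 0, 0]
# Several clients towards one server: sustained overload (persistent congestion).
sustained = [6] * 8

print(simulate(bursty, drain_per_tick=4, buffer_size=8))     # (8, 8)
print(simulate(sustained, drain_per_tick=4, buffer_size=8))  # (12, 8)
print(simulate(bursty, drain_per_tick=4, buffer_size=12))    # (0, 12)
```

In this model a larger buffer is enough to absorb the bursts, while under sustained overload frames keep being dropped at every tick once the buffer has filled up.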

Quality of service is potentially a nice feature, but it has drawbacks which make the need for it less compelling: quality of service is just one of the problems to be solved to make the network efficient, and the improvements it brings are often not perceived by the end user.

IEEE 802.1p

The IEEE 802.1p standard defines 8 classes of service, called priority levels, and each of them is assigned a different (logical) queue.

A frame can be marked with a specific class of service in the 'Priority Code Point' (PCP) field of the VLAN tag (see Virtual LANs#Frame tagging).[1] The standard also offers the capability of selecting the desired priority scheduling algorithm: round robin, weighted round robin, weighted fair queuing.
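As an illustration of one of these scheduling algorithms, the following is a minimal sketch of a frame-count-based weighted round robin over per-priority queues. The function name, the weights and the queue contents are hypothetical, weights are assumed to be at least 1, and real bridges typically account for frame sizes as well.

```python
from collections import deque


def weighted_round_robin(queues, weights, budget):
    """Dequeue up to `budget` frames.

    queues:  dict mapping priority level -> deque of frames
    weights: dict mapping priority level -> frames served per round (>= 1)
    """
    sent = []
    while len(sent) < budget and any(queues.values()):
        # One round: visit the queues from the highest priority level down.
        for prio in sorted(queues, reverse=True):
            for _ in range(weights[prio]):
                if not queues[prio] or len(sent) >= budget:
                    break
                sent.append(queues[prio].popleft())
    return sent


queues = {
    6: deque(["v1", "v2", "v3"]),  # voice frames
    0: deque(["d1", "d2", "d3"]),  # data frames
}
order = weighted_round_robin(queues, weights={6: 2, 0: 1}, budget=6)
print(order)  # ['v1', 'v2', 'd1', 'v3', 'd2', 'd3']
```

Unlike strict priority, the low-priority queue is still served once per round, so data traffic is slowed down but not starved.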

It would be better to let the source perform the marking at the application layer, because only the source knows exactly the traffic type (voice traffic or data traffic), but most users would dishonestly declare all their packets as high-priority → marking needs to be performed by access bridges, which are under the control of the provider. However, recognizing the traffic type is very difficult for bridges and makes them very expensive, because it requires inspecting frames up to the application layer and may not work with encrypted traffic → the distinction can be simplified for bridges in two ways:

- per-port marking: the PC is connected to a port and the telephone to another port, so the bridge can mark the traffic based on the input port;

- edge-device marking: the PC is connected to the telephone and the telephone to the bridge → all the traffic from the PC crosses the telephone, which simply marks it as data traffic, while it marks its own traffic as voice traffic.
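Per-port marking can be sketched as a lookup from the input port to a priority level, which is then written into the 3-bit PCP field of the 802.1Q tag (the 16-bit Tag Control Information holds PCP, a 1-bit DEI flag and the 12-bit VLAN ID). The port numbers, the `PORT_PRIORITY` table and the function names below are hypothetical.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value identifying an IEEE 802.1Q tag

# Hypothetical access-bridge configuration: input port -> priority level.
PORT_PRIORITY = {
    1: 0,  # port 1: PC, data traffic
    2: 6,  # port 2: telephone, voice traffic
}


def build_vlan_tag(pcp, vid, dei=0):
    """Return the 4-byte 802.1Q tag: TPID followed by PCP | DEI | VLAN ID."""
    if not 0 <= pcp <= 7:
        raise ValueError("PCP is a 3-bit field")
    if not 0 <= vid <= 0xFFF:
        raise ValueError("VLAN ID is a 12-bit field")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_pcp(tag):
    """Extract the priority level from a 4-byte 802.1Q tag."""
    _tpid, tci = struct.unpack("!HH", tag)
    return tci >> 13


def mark_by_port(input_port, vid):
    """Mark traffic based only on the port it entered from (default: 0)."""
    return build_vlan_tag(PORT_PRIORITY.get(input_port, 0), vid)


print(mark_by_port(2, vid=10).hex())  # 8100c00a
print(parse_pcp(mark_by_port(2, vid=10)))  # 6
```

The bridge never looks above the data-link layer here, which is what keeps this approach cheap compared with recognizing the traffic type.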

The standard suggests which type of traffic each priority level is intended for (e.g. 6 = voice traffic), but leaves freedom to change these associations → interoperability problems among different vendors may arise.

IEEE 802.3x

The 802.3x standard implements flow control at the Ethernet layer, in addition to the flow control existing at the TCP layer: given a link, if the downstream node (bridge or host) has its buffers full, it can send to the upstream node at the other endpoint of the link a PAUSE packet asking it to stop data transmission on that link for a certain amount of time, called pause time, which is expressed in 'pause quanta' (1 quantum = time to transmit 512 bits). The upstream node therefore stores the packets arriving during the pause time in its output buffer, and sends them when the input buffer of the downstream node is ready to receive other packets → packets are no longer lost due to buffer congestion.
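A PAUSE packet is a MAC Control frame: it is sent to the reserved multicast address 01-80-C2-00-00-01 with EtherType 0x8808 and opcode 0x0001, followed by a 16-bit pause time in quanta. The sketch below builds such a frame (without the FCS) and converts quanta into seconds; the function names and the example MAC address are hypothetical.

```python
import struct

PAUSE_DST = bytes.fromhex("0180c2000001")  # reserved MAC Control multicast address
MAC_CONTROL_ETHERTYPE = 0x8808
PAUSE_OPCODE = 0x0001
QUANTUM_BITS = 512  # 1 quantum = time to transmit 512 bits


def build_pause_frame(src_mac, quanta):
    """Return a PAUSE frame padded to the 60-byte minimum (FCS excluded)."""
    if len(src_mac) != 6:
        raise ValueError("a MAC address is 6 bytes long")
    if not 0 <= quanta <= 0xFFFF:
        raise ValueError("the pause time is a 16-bit field")
    header = PAUSE_DST + src_mac + struct.pack("!H", MAC_CONTROL_ETHERTYPE)
    payload = struct.pack("!HH", PAUSE_OPCODE, quanta)
    return (header + payload).ljust(60, b"\x00")


def pause_seconds(quanta, link_bps):
    """Duration of the requested pause on a link of the given speed."""
    return quanta * QUANTUM_BITS / link_bps


frame = build_pause_frame(bytes.fromhex("020000000001"), 0xFFFF)
print(len(frame), frame[12:18].hex())        # 60 88080001ffff
print(pause_seconds(0xFFFF, 1_000_000_000))  # about 0.0336 s on a 1 Gbit/s link
```

Since the quantum is defined in bit times, the same pause time value corresponds to a shorter interval on a faster link, and the longest pause that a single frame can request on a 1 Gbit/s link is about 34 ms.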

Two flow control modes exist:

- asymmetrical mode: only one node sends PAUSE packets, the other one just receives them and stops the transmission;

- symmetrical mode: both the nodes at the endpoints of the link can transmit and receive PAUSE packets.

The flow control mode can be configured on every node, but the auto-negotiation phase has to determine the actual configuration so that the chosen mode is consistent on both nodes at the endpoints of the link.
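The outcome of this negotiation can be sketched as a function of what each endpoint advertises. The sketch assumes the two advertisement bits commonly used for Ethernet auto-negotiation (a symmetric PAUSE bit and an asymmetric-direction bit) and the usual resolution rules; the function and parameter names are hypothetical, and the result should be checked against the IEEE 802.3 text before relying on it.

```python
def resolve_pause(local_pause, local_asym, peer_pause, peer_asym):
    """Return (local_may_send, local_must_obey) for PAUSE packets.

    *_pause: the endpoint advertises the symmetric PAUSE capability
    *_asym:  the endpoint advertises the asymmetric-direction capability
    """
    if local_pause and peer_pause:
        return (True, True)       # symmetrical mode
    if local_asym and peer_asym:
        if local_pause:
            return (False, True)  # asymmetrical: only the peer sends PAUSE
        if peer_pause:
            return (True, False)  # asymmetrical: only the local node sends PAUSE
    return (False, False)         # flow control disabled on this link


# A host that only wants to send PAUSE packets, attached to a bridge that
# is willing to obey them (view from the host, then from the bridge).
print(resolve_pause(False, True, True, True))  # (True, False)
print(resolve_pause(True, True, False, True))  # (False, True)
```

Both endpoints evaluate the same rules on the same pair of advertisements, which is what guarantees that the resulting mode is consistent at the two ends of the link.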

Sending PAUSE packets may be problematic in the backbone: a bridge with full buffers can stop the traffic only on the link it is directly connected to. If the intermediate bridges in the upstream path have larger buffers and therefore do not feel the need to send PAUSE packets in turn, the congested bridge is not able to 'shut up' the host which is sending too many packets → until the access bridge in turn sends a PAUSE packet to the concerned host, the network appears blocked also to all the other hosts, which are not responsible for the problem → the PAUSE packets sent by non-access bridges cannot select the excess traffic in order to slow down only the responsible host, but affect traffic from all the hosts.

This is why it is recommended to disable flow control in the backbone and use PAUSE packets only between access bridges and hosts. Often the asymmetrical flow control mode is chosen, where only hosts can send PAUSE packets: buffers of access bridges are generally big enough, and several commercial bridges accept PAUSE packets from hosts, blocking data transmission on the concerned port, but cannot send them.

However, relying on PAUSE packets may be problematic also for hosts, because a livelock may arise in the kernel of the operating system: the CPU of the slow host is so busy processing packets coming from the NIC that it cannot find a moment to send a PAUSE packet → packets accumulate in RAM, bringing it to saturation.

References

[1] Two marking fields for quality of service exist, one at the data-link layer and another at the network layer:

- the 'Priority Code Point' (PCP) field, used by the IEEE 802.1p standard, is located in the VLAN tag in the header of the Ethernet frame;

- the 'Differentiated Services Code Point' (DSCP) field, used by the Differentiated Services (DiffServ) architecture, is located in the header of the IP packet, in particular in the 'Type of Service' field of the IPv4 header and in the 'Traffic Class' field of the IPv6 header.