Microprocessor Design/Cache

Cache

A cache is a small amount of memory which operates more quickly than main memory. Data is moved from the main memory to the cache, so that it can be accessed faster. Modern chip designers put several caches on the same die as the processor; designers often allocate more die area to caches than to the CPU itself. Increasing chip performance is typically achieved by increasing the speed and efficiency of the chip's cache.

The cache memory performance is the most significant factor in achieving high processor performance.[1]

Cache works by storing a small subset of the external memory contents, typically out of its original order. Data and instructions that are being used frequently, such as a data array or a small instruction loop, are stored in the cache and can be read quickly without having to access the main memory. Cache runs at the same speed as the rest of the processor, which is typically much faster than external RAM. This means that if data is in the cache, accessing it is faster than accessing memory.

Cache helps to speed up processors because it works on the principle of locality.

In this chapter, we will discuss several possible cache arrangements, in increasing order of complexity:

- No cache, single-CPU, physical addressing

- Single cache, single-CPU, physical addressing

- Cache hierarchy: L1, L2, L3, etc.

- Cache replacement policies: associativity, random replacement, LRU, etc.

- Split cache: I-cache and D-cache, on top of a unified cache hierarchy

- Caching with multiple CPUs

- Cache hardware that supports virtual memory addressing

- The TLB as a kind of cache

- How single-address-space virtual memory addressing interacts with cache hardware

- How per-process virtual memory addressing interacts with cache hardware

No cache

Most processors today, such as the processors inside standard keyboards and mice, don't have any cache. Many historically important computers, such as Cray supercomputers, don't have any cache.[1] The vast majority of software neither knows nor cares about the specific details of the cache, or if there is even a cache at all.

Processors without a cache are usually limited in performance by the main memory access time. Without a cache, the processor fetches each instruction, one at a time, from main memory, and every LOAD or STORE goes to main memory before executing the next instruction.

One way to improve performance is to substitute faster main memory. Alas, that usually has a financial limit: hardly anyone is willing to pay a penny a bit for a gigabyte of really fast main memory.

Even if money is no object, eventually one reaches physical limits to main memory access time. Even with the fastest possible memory money can buy, the memory access time for a unified 1 gigabyte main memory is limited by the time it takes a signal to get from the CPU to the most distant part of the memory and back.

Single cache

Using exactly the same technology, it takes less time for a signal to traverse a small block of memory than a large block of memory.

The performance of a processor with a cache is no longer limited by the main memory access time. Instead, it is usually limited by the (much faster) cache memory access time: if the cache access time of a processor could be decreased, the processor would have higher performance.[1] However, cache memory is generally much easier to speed up than main memory: really fast memory is much more affordable when we only buy small amounts of it. If it will improve the performance of a system significantly, lots of people are willing to pay a penny a bit for a kilobyte of really fast cache memory.

Principle of Locality

There are two types of locality, spatial and temporal. Modern computer programs are typically loop-based, and therefore we have two rules about locality:

- Spatial Locality

- When a data item is accessed, it is likely that data items in sequential memory locations will also be accessed. Consider the traversal of an array, or the act of storing local variables on a stack. In these cases, when one data item is accessed, it is a good idea to load the surrounding memory area into the cache at the same time.

- Temporal Locality

- When a data item is accessed, it is likely that the same data item will be accessed again. For instance, variables are typically read and written to in rapid succession. It is a good idea to keep recently used items in the cache, and not over-write data that has been recently used.
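The effect of both kinds of locality can be illustrated with a toy model. The sketch below is not taken from any real processor: the function name `count_hits`, the 8-word block size, and the 4-block cache are assumptions chosen only to keep the numbers small. It counts hits for a sequential array traversal (spatial locality), a small loop (temporal locality), and a strided pattern that has neither.

```python
def count_hits(addresses, block_size=8, num_blocks=4):
    """Count hits in a tiny direct-mapped cache (hypothetical parameters)."""
    stored = [None] * num_blocks        # memory block number held in each slot
    hits = 0
    for addr in addresses:
        block = addr // block_size      # memory block containing this address
        slot = block % num_blocks       # the one slot this block may occupy
        if stored[slot] == block:
            hits += 1
        else:
            stored[slot] = block        # miss: load the whole surrounding block
    return hits

sequential = list(range(64))            # array traversal: spatial locality
small_loop = [0, 1, 2, 3] * 16          # same items reused: temporal locality
strided = [i * 8 for i in range(64)]    # one access per block, never reused

print(count_hits(sequential))   # 56 of 64 accesses hit
print(count_hits(small_loop))   # 63 of 64 accesses hit
print(count_hits(strided))      # 0 of 64 accesses hit
```

In this model the sequential traversal misses only on the first word of each block, which is why loading the surrounding memory area on a miss pays off.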

Hit or Miss

In cache terminology, a hit occurs when the processor finds the data it is looking for in the cache. A miss occurs when the processor looks for data in the cache, but the data is not available. In the event of a miss, the cache controller unit must gather the data from the main memory, which can cost more time for the processor.

Measurements of "the hit ratio" are typically performed on benchmark applications. The actual hit ratio varies widely from one application to another. In particular, video and audio streaming applications often have a hit ratio close to zero, because each bit of data in the stream is read once for the first time (a compulsory miss), used, and then never read or written again. Even worse, many cache algorithms (in particular, LRU) allow this streaming data to fill the cache, pushing out of the cache information that will be used again soon (cache pollution).[2]

Cache performance

A processor with a cache first looks in the cache for data (or instructions). On a miss, the processor then fetches the data (or instructions) from main memory. On a miss, this process takes *longer* than an equivalent processor without a cache.

There are three ways a cache gives better net performance than a processor without a cache:

- A hit (read from the cache) is faster than the time it takes a processor without a cache to fetch from main memory. The trick is to design the cache so we get hits often enough that their increase in performance more than makes up for the loss in performance on the occasional miss. (This requires a cache that is faster than main memory).

- Multiprocessor computers with a shared main memory often have a bottleneck accessing main memory. When a local cache succeeds in satisfying memory operations without going all the way to main memory, main memory bandwidth is freed up for the other processors, and the local processor doesn't need to wait for the other processors to finish their memory operations.[1]

- Many systems are designed so the processor often reads multiple items from cache simultaneously -- either 3 separate caches for instruction, data, and TLB; or a multiported cache; or both -- which takes less time than reading the same items from main memory one at a time.

The last two ways improve overall performance even if the cache is no faster than main memory.

A processor without a cache has a constant memory reference time T of

$$T = T_m + E$$

A processor with a cache has an average memory access time of[1]

$$T = m \cdot T_m + T_h + E$$

where

- m is the miss ratio

- Tm is the time to make a main memory reference

- Th is the time to make a cache reference on a hit

- E accounts for various secondary factors (memory refresh time, multiprocessor contention, etc.)
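As a rough numerical illustration, the sketch below evaluates these quantities under the assumption that the two access times take the forms T = Tm + E (no cache) and T = m·Tm + Th + E (with cache), which match the symbol definitions above. The function names and the sample timings are hypothetical.

```python
def access_time_without_cache(t_main, extra=0.0):
    """Constant reference time of a cacheless processor: Tm + E."""
    return t_main + extra

def average_access_time(miss_ratio, t_main, t_hit, extra=0.0):
    """Average access time with a cache, assuming T = m*Tm + Th + E."""
    return miss_ratio * t_main + t_hit + extra

def break_even_miss_ratio(t_main, t_hit):
    """Miss ratio below which the cache is a net win (m*Tm + Th < Tm)."""
    return 1.0 - t_hit / t_main

# Hypothetical timings in nanoseconds: 100 ns main memory, 2 ns cache hit.
print(access_time_without_cache(100.0))         # time per access, no cache
print(average_access_time(0.05, 100.0, 2.0))    # about 7 ns at a 5% miss ratio
print(break_even_miss_ratio(100.0, 2.0))        # cache helps while m < ~0.98
```

Under these assumptions a single miss costs Tm + Th + E, which is longer than the cacheless Tm + E, yet the average is far lower as long as misses are rare.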

Flushing the Cache

When the processor needs data, it looks in the cache. If the data is not in the cache, it will then go to memory to find the data. Data from memory is moved to the cache and then used by the processor. Sometimes the entire cache contains useless or old data, and it needs to be flushed. Flushing occurs when the cache controller determines that the cache contains more potential misses than hits. Flushing the cache takes several processor cycles, so much research has gone into developing algorithms to keep the cache up to date.

Cache Hierarchy

Cache is typically divided between multiple levels. The most common levels are L1, L2, and L3. L1 is the smallest but the fastest. L3 is the largest but the slowest. Many chips do not have L3 cache. Some chips that do have an L3 cache actually have an external L3 module that exists on the motherboard between the microprocessor and the RAM.

Inclusive, exclusive, and other cache hierarchy

When there are several levels of cache, and a copy of the data in some location in main memory has been cached in the L1 cache, is there another copy of that data in the L2 cache?

- No. Some systems are designed to have strictly exclusive cache levels: any particular location in main memory is cached in at most one cache level.

- Yes. Other systems are designed to have strictly inclusive cache levels: whenever some location in main memory is cached in any one level, the same location is also cached in all higher levels. All the data in the L1 cache can also be found in L2 and L3 (and also in main memory), and all the data in the L2 cache can also be found in L3 (and also in main memory).

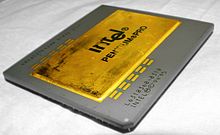

- Maybe. In some systems, such as the Intel Pentium 4, some data in the L1 cache is also in the L2 cache, while other data in the L1 cache is not in the L2 cache. This kind of cache policy does not yet have a popular name.

Size of Cache


There are a number of factors that affect the size of cache on a chip:

- Moore's law provides an increasing number of transistors per chip. After around 1989, more transistors are available per chip than a designer can use to make a CPU. These extra transistors are easily converted to large caches.

- Processor components become smaller as transistors become smaller. This means there is more area on the die for additional cache.

- More cache means fewer delays in accessing data, and therefore better performance.

Because of these factors, chip caches tend to get larger and larger with each generation of chip.

Cache Tagging

Cache can contain non-sequential data items in no particular order. A block of memory in the cache might be empty and contain no data at all. In order for hardware to check the validity of entries in the cache, every cache entry needs to maintain the following pieces of information:

- A status bit to determine if the block is empty or full

- The memory address of the data in the block

- The data from the specified memory address (a "block in the cache", also called a "line in the cache"[1])

When the processor looks for data in the cache, it sends a memory address to the cache controller. The cache controller checks the address against all the address fields in the cache. If there is a hit, the cache controller returns the data. If there is a miss, the cache controller must pass the request to the next level of cache or to the main memory unit.

The cache controller splits an effective memory address (MSB to LSB) into the tag, the index, and the block offset.[3][4] Some authors refer to the block offset as simply the "offset"[5] or the "displacement".[6][7]

The memory address of the data in the cache is known as the tag.
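The split into tag, index, and block offset can be sketched with a few shifts and masks. The geometry below (64-byte blocks, 128 sets) and the function name `split_address` are assumptions for illustration only; both sizes must be powers of two for the bit arithmetic to work.

```python
def split_address(addr, block_size=64, num_sets=128):
    """Split an address (MSB to LSB) into (tag, index, block offset).

    block_size and num_sets are hypothetical and must be powers of two.
    """
    offset_bits = block_size.bit_length() - 1   # log2(block_size)
    index_bits = num_sets.bit_length() - 1      # log2(num_sets)
    offset = addr & (block_size - 1)            # lowest bits: byte within block
    index = (addr >> offset_bits) & (num_sets - 1)  # middle bits: which set
    tag = addr >> (offset_bits + index_bits)    # remaining high bits
    return tag, index, offset

tag, index, offset = split_address(0x12345678)
print(tag, index, offset)   # 37282 89 56

# The three fields together reconstruct the original address.
assert (tag << 13) | (index << 6) | offset == 0x12345678
```

Only the tag needs to be stored alongside each block, because the index is implied by where the block sits in the cache and the offset selects data within the block.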

Memory Stall Cycles

If the cache misses, the processor will need to stall the current instruction until the cache can fetch the correct data from a higher level. The amount of time lost by the stall is dependent on a number of factors. The number of memory accesses in a particular program is denoted as Am; some of those accesses will hit the cache, and the rest will miss the cache. The rate of misses, equal to the probability that any particular access will miss, is denoted rm. The average amount of time lost for each miss is known as the miss penalty, and is denoted as Pm. We can calculate the amount of time wasted because of cache miss stalls as:

$$\text{Time lost} = A_m \times r_m \times P_m$$

Likewise, if we have the total number of instructions in a program, N, and the average number of misses per instruction, MPI, we can calculate the lost time as:

$$\text{Time lost} = N \times MPI \times P_m$$

If the miss penalty is measured in cycles rather than in units of time, the same calculation yields the number of cycles lost to memory stalls instead of the amount of time lost.

Read Stall Times

To calculate the amount of time lost to cache read misses, we can perform the same basic calculations as above:

$$\text{Read stall time} = A_r \times r_r \times P_r$$

Ar is the average number of read accesses, rr is the miss rate on reads, and Pr is the time or cycle penalty associated with a read miss.

Write Stall Times

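Determining the amount of time lost to write stalls is similar, but an additional additive term that represents stalls in the write buffer needs to be included. With Aw, rw, and Pw defined for writes in the same way as Ar, rr, and Pr are for reads:

$$\text{Write stall time} = A_w \times r_w \times P_w + T_{wb}$$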

Where Twb is the amount of time lost because of stalls in the write buffer. The write buffer can stall when the cache attempts to synchronize with main memory.
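The stall calculations in this and the two preceding sections all share one shape: accesses times miss rate times miss penalty, with an optional additive write-buffer term. The sketch below assumes exactly that form; the function names and the workload numbers are made up for illustration.

```python
def stall_time(accesses, miss_rate, miss_penalty, buffer_stalls=0.0):
    """Time (or cycles) lost to misses: A * r * P, plus an optional
    additive write-buffer term (Twb) for the write case."""
    return accesses * miss_rate * miss_penalty + buffer_stalls

def stall_time_from_mpi(instructions, misses_per_instruction, miss_penalty):
    """Same quantity starting from instruction count: N * MPI * P."""
    return instructions * misses_per_instruction * miss_penalty

# Hypothetical workload, with the penalty expressed in cycles.
read_stalls = stall_time(accesses=800_000, miss_rate=0.02, miss_penalty=100)
write_stalls = stall_time(200_000, 0.05, 100, buffer_stalls=50_000)
print(read_stalls)                   # roughly 1.6 million cycles
print(write_stalls)                  # roughly 1.05 million cycles
print(read_stalls + write_stalls)    # total cycles lost to memory stalls
```

Because the penalty here is given in cycles, the results are cycle counts; passing a penalty in nanoseconds would give lost time instead.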

Hierarchy Stall Times

In a hierarchical cache system, miss time penalties can be compounded when data is missed in multiple levels of cache. If data is missed in the L1 cache, it will be looked for in the L2 cache. However, if it also misses in the L2 cache, there will be a double-penalty. The L2 needs to load the data from the main memory (or the L3 cache, if the system has one), and then the data needs to be loaded into the L1. Notice that missing in two cache levels and then having to access main memory takes longer than if we had just accessed memory directly.
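The compounding of penalties can be sketched numerically. The model below is a simplification and an assumption of this example: each level is probed in turn, its hit time is paid by every access that reaches it, and its miss rate is the fraction of those accesses passed on to the next level. The function names and timings are hypothetical.

```python
def hierarchy_access_time(levels, t_main):
    """Average access time for a cache hierarchy.

    levels: list of (hit_time, local_miss_rate), ordered from L1 outward.
    Works from main memory back toward L1.
    """
    total = t_main
    for hit_time, miss_rate in reversed(levels):
        total = hit_time + miss_rate * total
    return total

def worst_case_access_time(levels, t_main):
    """Cost of an access that misses in every level before reaching memory."""
    return sum(hit_time for hit_time, _ in levels) + t_main

# Hypothetical two-level hierarchy, times in cycles.
levels = [(1, 0.10), (10, 0.20)]     # (hit time, miss rate) for L1, then L2
print(hierarchy_access_time(levels, t_main=100))    # about 4 cycles on average
print(worst_case_access_time(levels, t_main=100))   # 111 cycles, versus 100 direct
```

The worst case (111 cycles here) is slower than going straight to memory, while the average stays low because few accesses miss in both levels.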

Design Considerations

L1 cache is typically designed with the intent of minimizing the time it takes to make a hit. If hit times are sufficiently fast, a sizable miss rate can be accepted. Misses in the L1 will be redirected to the L2 and that is still significantly faster than accesses to main memory. L1 cache tends to have smaller block sizes, but benefits from having more available blocks for the same amount of space. In order to make L1 hit times minimal, L1 caches are typically direct-mapped or even narrowly 2-way set associative.

L2 cache, on the other hand, needs to have a lower miss rate to help avoid accesses to main memory. Accesses to L2 cache are much faster than accesses to memory, so we should do everything possible to ensure that we maximize our hit rate. For this reason, L2 cache tends to be highly associative (in the extreme, fully associative) with large block sizes. This is because memory is typically read and written in sequential memory cells, so large block sizes can take advantage of that sequentiality.

L3 cache further continues this trend, with larger block sizes, and minimized miss rate.

Block Size

A very small cache block size increases the miss ratio, since a miss will fetch less data at a time. A very large cache block size also increases the miss ratio, since it causes the system to fetch a bunch of extra information that is used less than the data it displaces in the cache.[1]

Associativity

In order to increase the read speed in a cache, many cache designers implement some level of associativity. An associative cache creates a relationship between the original memory location and the location in the cache where that data is stored. The relationship between the address in main memory and the location where the data is stored is known as the mapping of the cache. In this way, if the data exists in the cache at all, the cache controller knows that it can only be in certain locations that satisfy the mapping.

Direct-Mapped

A direct-mapped system uses a hashing algorithm to assign an identifier to a memory address. A common hashing algorithm for this purpose is the modulo operation. The modulo operation divides the address by a certain number, p, and takes the remainder r as the result. If a is the main memory address, and n is an arbitrary non-negative integer, then the hashing algorithm must satisfy the following equation:

$$a = p \times n + r, \qquad 0 \le r < p$$

If p is chosen properly by the designer, data will be evenly distributed throughout the cache.

In a direct-mapped system, each memory address corresponds to only a single cache location, but a single cache location can correspond to many memory locations. The image above shows a simple cache diagram with 8 blocks. All cache locations are therefore calculated as n mod 8, where n is the memory address to read into the cache. Memory addresses 0, 8, and 16 will all map to block 0 in the cache. Cache performance is worst when multiple data items with the same hash value are read, and performance is best when data items are close together in memory (such as a sequential block of program instructions, or a sequential array).

Most external caches (located on the motherboard, but external to the CPU) are direct-mapped or occasionally 2-way set associative, because it's complicated to build higher-associativity caches out of standard components.[8] If there is such a cache, typically there is only one external cache on the motherboard, shared between all CPUs.

The replacement policy for a direct-mapped cache is the simplest possible replacement policy: the new data must go in the one and only one place in the cache it corresponds to. (The old data at the location in the cache, if its dirty bit is set, must be written to main memory first).
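The 8-block example above can be modeled in a few lines. This is a minimal sketch, assuming one data item per block and ignoring dirty bits and write-back; the class name `DirectMappedCache` is hypothetical. The remainder of the address selects the block, and the quotient is stored as the tag.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: one data item per block, no write handling."""

    def __init__(self, num_blocks=8):
        self.num_blocks = num_blocks
        self.valid = [False] * num_blocks   # status bit: block empty or full
        self.tags = [0] * num_blocks        # quotient part of the cached address

    def access(self, addr):
        """Return True on a hit; on a miss, load addr into its only slot."""
        index = addr % self.num_blocks      # r in a = p*n + r
        tag = addr // self.num_blocks       # n in a = p*n + r
        if self.valid[index] and self.tags[index] == tag:
            return True
        self.valid[index] = True            # replace whatever was there
        self.tags[index] = tag
        return False

cache = DirectMappedCache(8)
print([cache.access(a) for a in [0, 8, 0, 8]])   # [False, False, False, False]

cache = DirectMappedCache(8)
print([cache.access(a) for a in [0, 1, 0, 1]])   # [False, False, True, True]
```

Addresses 0 and 8 keep evicting each other from block 0, while addresses 0 and 1 occupy different blocks and hit after their first use.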

2-Way Set Associative

In a 2-way set associative cache system, the memory address is hashed, but each hash value corresponds to a set of two cache blocks rather than a single block. A data value assigned to that set can be placed in either block of the set. Reads are quick because the cache controller can immediately narrow its search to the set that matches the address hash value.

The LRU replacement policy for a 2-way set associative cache is one of the simplest replacement policies: The new data must go in one of a set of 2 possible locations. Those 2 locations share a LRU bit that is updated whenever either one is read or written, indicating which one of the two entries in the set was the most-recently used. The new data goes in the *other* location (the least-recently used location). (The old data at that LRU location in the cache, if its dirty bit is set, must be written to main memory first).
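A minimal sketch of this policy follows, assuming one data item per block and no dirty-bit handling; the class name `TwoWaySetAssociativeCache` and the 4-set size are hypothetical. Each set keeps a single bit recording which of its two ways was used most recently, and a miss replaces the other way.

```python
class TwoWaySetAssociativeCache:
    """Toy 2-way set associative cache with one LRU bit per set."""

    def __init__(self, num_sets=4):
        self.num_sets = num_sets
        self.ways = [[None, None] for _ in range(num_sets)]  # tags per set
        self.mru = [0] * num_sets     # which way was most recently used

    def access(self, addr):
        """Return True on a hit; on a miss, replace the least recently used way."""
        index = addr % self.num_sets
        tag = addr // self.num_sets
        ways = self.ways[index]
        if tag in ways:
            self.mru[index] = ways.index(tag)   # update the LRU bit on a hit
            return True
        victim = 1 - self.mru[index]            # the *other* (LRU) location
        ways[victim] = tag
        self.mru[index] = victim
        return False

cache = TwoWaySetAssociativeCache(4)
# Addresses 0, 4, and 8 all map to set 0.
print([cache.access(a) for a in [0, 4, 0, 4, 8, 4, 0]])
# [False, False, True, True, False, True, False]
```

Addresses 0 and 4 now coexist in the same set, so the alternating pattern that thrashes a direct-mapped cache hits here; the access to 8 then evicts 0, the least recently used of the two.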

2-Way Skewed Associative

The 2-way skewed associative cache is "the best tradeoff for .... caches whose sizes are in the range 4K-8K bytes" -- André Seznec. "A Case for Two-Way Skewed-Associative Caches". Retrieved 2007-12-13.[9]

Fully Associative

In a fully-associative cache, hash algorithms are not employed and data can be inserted anywhere in the cache that is available. A typical algorithm will write a new data value over the oldest unused data value in the cache. This scheme, however, requires the time an item is loaded or accessed to be stored, which can require lots of additional storage.
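One way to model "overwrite the oldest unused value" in software is with an ordered dictionary, where the ordering stands in for the stored access times. This is a sketch of the idea rather than a description of real hardware; the class name `FullyAssociativeCache` and the 4-entry capacity are assumptions.

```python
from collections import OrderedDict

class FullyAssociativeCache:
    """Toy fully associative cache with least-recently-used replacement."""

    def __init__(self, capacity=4):
        self.capacity = capacity
        self.lines = OrderedDict()    # address -> data, least recent first

    def access(self, addr):
        """Return True on a hit; on a miss, evict the least recently used line."""
        if addr in self.lines:
            self.lines.move_to_end(addr)     # mark as most recently used
            return True
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)   # drop the oldest unused entry
        self.lines[addr] = None              # placeholder for the cached data
        return False

cache = FullyAssociativeCache(4)
print([cache.access(a) for a in [0, 8, 16, 24, 0, 32, 8]])
# [False, False, False, False, True, False, False]
```

Addresses 0, 8, 16, and 24 would all collide in an 8-block direct-mapped cache, but here they coexist. The access to 32 evicts 8 (the least recently used), so the final access to 8 misses.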

Cache Misses

There are three basic types of misses in a cache:

- Conflict Misses

- Compulsory Misses

- Capacity Misses

Conflict Misses


A conflict miss occurs in a direct-mapped or set-associative cache when two data items are mapped to the same cache location. In a conflict miss, a recently used data item is overwritten with a new data item.

Compulsory Misses

The image above shows the difference between a conflict miss and a compulsory miss. A compulsory miss is an instance where the cache must miss because it does not contain any data. For instance, when a processor is first powered-on, there is no valid data in the cache and the first few reads will always miss.

The compulsory miss demonstrates the need for a cache to differentiate between a space that is empty and one that is full. Consider what happens if, when we turn the processor on, we reset all the address values to zero: an attempt to read a memory location with a hash value of zero would falsely register as a hit. We do not want the cache to hit if the blocks are empty.

Capacity Misses

Capacity misses occur when the cache is not large enough to hold all the data the program is actively using, so that a block is evicted and later has to be fetched again.

Cache Write Policy

Data writes require the same time delay as a data read. For this reason, caching systems typically will write data to the cache as well. However, when writing to the cache, it is important to ensure that the data is also written to the main memory, so it is not overwritten by the next cache read. If data in the cache is overwritten without being stored in main memory, the data will be lost.

It is imperative that caches write data to the main memory, but exactly when that data is written to the main memory is called the write policy. There are two write policies: write through and write back.

Write operations take as long to perform as read operations in main memory. Many cached processors therefore will perform write operations on the cache as well as read operations.

Write Through

When data is written to memory, a write request is sent simultaneously to the main memory and to the cache. This way, the result data is available in the cache before it can be written (and then read again) from the main memory. When writing to the cache, it's important to make sure the main memory and the cache are synchronized and they contain the same data.

In a write through system, data that is written to the cache is immediately written to the main memory as well. If many writes need to occur in sequential instructions, the write buffer may get backed up and cause a stall.

Write Back

In a write back system, the cache controller keeps track of which data items have been synchronized to main memory. The data items which have not been synchronized are called "dirty", and the cache controller prevents dirty data from being overwritten.

The cache controller will synchronize data during processor cycles where no other data is being written to the cache.
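To compare the two policies, the sketch below counts how many writes reach main memory for a sequence of STORE addresses. It rests on simplifying assumptions that are not from the text: the write-back cache is direct-mapped with one data item per block, a dirty block is written to memory only when it is about to be replaced, and everything still dirty is written out at the end. The function names are hypothetical.

```python
def memory_writes_write_through(stores):
    """Write through: every STORE is also sent to main memory."""
    return len(stores)

def memory_writes_write_back(stores, num_blocks=4):
    """Write back (simplified): memory is written only when a dirty block
    is replaced, plus one write per block still dirty at the end."""
    cached = [None] * num_blocks     # address currently held in each block
    dirty = [False] * num_blocks     # True if the block differs from memory
    writes = 0
    for addr in stores:
        index = addr % num_blocks
        if cached[index] != addr:
            if dirty[index]:
                writes += 1          # save the old data before overwriting it
            cached[index] = addr
        dirty[index] = True          # the STORE makes this block dirty
    return writes + sum(dirty)       # final synchronization of dirty blocks

repeated = [0] * 10 + [1] * 10       # the same two locations written repeatedly
print(memory_writes_write_through(repeated))   # 20
print(memory_writes_write_back(repeated))      # 2

conflicting = [0, 4, 0, 4]           # addresses that share one cache block
print(memory_writes_write_back(conflicting))   # 4
```

Write back saves memory traffic when the same locations are written many times, but gains nothing when every STORE forces a dirty block out.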

Write bypass

Some processors send writes directly to main memory, bypassing the cache. If that location is *not* already cached, then nothing more needs to be done. If that location *is* already cached, then the old data in the cache(s) needs to be marked "invalid" ("stale") so if the CPU ever reads that location, the CPU will read the latest value from main memory rather than some earlier value(s) in the cache(s).

Stale Data

It is possible for the data in main memory to be changed by a component besides the microcontroller. For instance, many computer systems have memory-mapped I/O, or a DMA controller that can alter the data. Some computer systems have several CPUs connected to a common main memory. It is important that the cache controller check that data in the cache is correct. Data in the cache that is old and may be incorrect is called "stale".

The three most popular[citation needed] approaches to dealing with stale data ("cache coherency protocols") are:

- Use simple cache hardware that ignores what the other CPUs are doing.

- Set all caches to write-through all STOREs (write-through policy). Use additional cache hardware to listen in ("snoop") whenever some other device writes to main memory, and invalidate local cache line whenever some other device writes to the corresponding cached location in main memory.

- Design caches to use the MESI protocol.

With simple cache hardware that ignores what the other CPUs are doing, cache coherency is maintained by the OS software. The OS sets up each page in memory as either (a) exclusive to one particular CPU (which is allowed to read, write, and cache it); all other CPUs are not allowed to read or write or cache that page; (b) shared read/write between CPUs, and set to "non-cacheable", in the same way that memory-mapped I/O devices are set to non-cacheable; or (c) shared read-only; all CPUs are allowed to cache but not write that page.

Split cache

High-performance processors invariably have 2 separate L1 caches, the instruction cache and the data cache (I-cache and D-cache). This "split cache" has several advantages over a unified cache:[8]

- Wiring simplicity: the decoder and scheduler are only hooked to the I-cache; the registers and ALU and FPU are only hooked to the D-cache.

- Speed: the CPU can be reading data from the D-cache, while simultaneously loading the next instruction(s) from the I-cache.

Multi-CPU systems typically have a separate L1 I-cache and L1 D-cache for each CPU, each one direct-mapped for speed.

Open question: To speed up running Java applications in a JVM (and similar interpreters and CPU emulators), would it help to have 3 separate caches -- a machine instruction cache indexed by the program counter PC, a byte code cache indexed by the VM's instruction pointer IP, and a data cache?

On the other hand, in a high-performance processor, other levels of cache, if any -- L2, L3, etc. -- as well as main memory -- are typically unified, although there are several exceptions (such as the Itanium 2 Montecito). The advantages of a unified cache (and a unified main memory) are:[8]

- Some programs spend most of their time in a small part of the program processing lots of data. Other programs run lots of different subroutines against a small amount of data. A unified cache automatically balances the proportion of the cache used for instructions and the proportion used for data -- to get the same performance on a split cache would require a larger cache.

- When instructions are written to memory -- by an OS loading an executable file from storage, or from a just-in-time compiler translating bytecode to executable code -- a split cache requires the CPU to flush and reload the instruction cache; a unified cache doesn't require that.

Error detection

Each cache row entry may have error detection bits. Since the cache only holds a copy of information in the main memory (except for the write-back queue), when an error is detected, the desired data can be re-fetched from the main memory -- treated as a kind of miss-on-invalid -- and the system can continue as if no error occurred. A few computer systems use Hamming error correction to correct single-bit errors in the "data" field of the cache without going all the way back to main memory.[10]
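The detect-and-refetch idea can be sketched with the simplest error detection code, a single even-parity bit per entry. Whether a given cache uses parity or a stronger code is not stated here, so treat the scheme, the function names, and the sample values as assumptions. The sketch also assumes the entry is clean, so main memory holds a good copy.

```python
def parity_bit(word):
    """Even-parity bit: 1 if the word has an odd number of 1 bits, else 0."""
    return bin(word).count("1") % 2

def read_checked(entry, main_memory, addr):
    """Return the data for addr, re-fetching from main memory if the cached
    copy fails its parity check (treated like a miss on an invalid entry)."""
    data, stored_parity = entry
    if parity_bit(data) != stored_parity:
        data = main_memory[addr]     # cached copy is corrupt: use memory's copy
    return data

main_memory = {0x40: 0b10110010}
good_entry = (0b10110010, parity_bit(0b10110010))
corrupt_entry = (0b10110011, good_entry[1])    # lowest data bit has flipped

print(read_checked(good_entry, main_memory, 0x40) == 0b10110010)     # True
print(read_checked(corrupt_entry, main_memory, 0x40) == 0b10110010)  # True
```

A single parity bit detects any odd number of flipped bits but cannot say which bit is wrong, which is why correcting in place requires a stronger code such as Hamming.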

Specialized cache features

Many CPUs use exactly the same hardware for the instruction cache and the data cache. (And, of course, the same hardware is used for instructions as for data in a unified cache. The revolutionary idea of a Von Neumann architecture is to use the same hardware for instructions and for data in the main memory itself). For example, the Fairchild CLIPPER used 2 identical CAMMU chips, one for the instruction cache and one for the data cache.[1]

Because the various caches are used slightly differently, some CPU designers customize each cache in different ways.

- Some CPU designers put the "branch history bits" used for branch prediction in the instruction cache. There's no point to adding such information to a data-only cache.

- Many instruction caches are designed in such a way that the only way to deal with stale instructions is to invalidate the entire cache and reload. Data caches are typically designed with more fine-grained response, with extra hardware that can invalidate and reload only the particular cache lines that have gone stale.

- The virtual-to-physical address translation process often has a lot of specialized hardware associated with it to make it go faster -- the TLB cache, hardware page-walkers, etc. We will discuss this in more detail in the next chapter, Virtual Memory.

References

- [1] Alan Jay Smith. "Design of CPU Cache Memories". Proc. IEEE TENCON, 1987.

- [2] Paul V. Bolotoff. "Functional Principles of Cache Memory". 2007.

- [3] John L. Hennessy, David A. Patterson. "Computer Architecture: A Quantitative Approach". 2011. ISBN 012383872X, ISBN 9780123838728. p. B-9.

- [4] David A. Patterson, John L. Hennessy. "Computer organization and design: the hardware/software interface". 2009. ISBN 0123744938, ISBN 9780123744937. "Chapter 5: Large and Fast: Exploiting the Memory Hierarchy". p. 484.

- [5] Gene Cooperman. "Cache Basics". 2003.

- [6] Ben Dugan. "Concerning Caches". 2002.

- [7] Harvey G. Cragon. "Memory systems and pipelined processors". 1996. ISBN 0867204745, ISBN 9780867204742. "Chapter 4.1: Cache Addressing, Virtual or Real". p. 209.

- [8] Paul V. Bolotoff. "Functional Principles of Cache Memory". 2007.

- [9] Micro-Architecture "Skewed-associative caches have ... major advantages over conventional set-associative caches."

- [10] Paul V. Bolotoff. "Functional Principles of Cache Memory". 2007.

Further reading

- Parallel Computing and Computer Clusters/Memory

- simulators available for download at University of Maryland: Memory-Systems Research: "Computational Artifacts" can be used to measure cache performance and power dissipation for a microprocessor design without having to actually build it. This makes it much quicker and cheaper to explore various tradeoffs involved in cache design. ("Given a fixed size chip, if I sacrifice some L2 cache in order to make the L1 cache larger, will that make the overall performance better or worse?" "Is it better to use an extremely fast cycle time cache with low associativity, or a somewhat slower cycle time cache with high associativity giving a better hit rate?")