Minix 3/Architecture and Design of MINIX 3

Kernel

The kernel is the central part of most computer operating systems. It manages the system's resources and the communication between hardware and software components. As a basic component of an operating system, a kernel provides abstraction layers for hardware, especially memory, processors and I/O, that allow hardware and software to communicate. It also makes these facilities available to userland applications through inter-process communication mechanisms and system calls.

Different kernels perform these tasks differently, depending on their design and implementation. Monolithic kernels execute all of their code in the same address space to increase the performance of the system, whereas microkernels run most of their services in user space, aiming to improve the maintainability and modularity of the codebase.

Overview

Most, but not all, operating systems rely on the kernel concept. The existence of a kernel as a single piece of software responsible for the communication between hardware and software results from complex compromises between performance, memory efficiency, security and processor architecture.

In most cases, the boot loader starts the kernel as a process in supervisor mode. After initialization, however, the kernel no longer behaves like an ordinary process. It becomes a set of functions that userland applications can invoke to perform operations that require a higher privilege level, such as disk access. A kernel execution stream is a continuation of the execution stream of a userland process: the process is paused when it makes a system call and resumed when the call returns. The initial main kernel stream remains as the "idle process" and "collects" unused processor time.
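The pause-and-resume behaviour of a system call can be observed from any userland program. The following is a minimal sketch, not taken from MINIX: it assumes a system where Python's `os.open`, `os.write` and `os.close` are thin wrappers around the corresponding system calls, and the helper name `write_via_syscall` is made up for this illustration.

```python
import os
import tempfile


def write_via_syscall(path: str, data: bytes) -> int:
    """Write data to path using low-level wrappers around system calls."""
    # os.open asks the kernel for a file descriptor; the process cannot
    # touch the disk itself because that requires supervisor privileges.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        # os.write enters the kernel. The calling process is paused until
        # the kernel returns the number of bytes it accepted.
        return os.write(fd, data)
    finally:
        # Closing the descriptor is another request to the kernel.
        os.close(fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "demo.txt")
        written = write_via_syscall(target, b"hello kernel\n")
        print(written, "bytes written")
```

From the program's point of view each call looks like an ordinary function call. The switch to supervisor mode and back happens inside the wrapper.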

Kernel development is considered one of the most complex and difficult tasks in programming. The kernel's central position in an operating system demands good performance, which makes it a critical piece of software and makes its correct design and implementation difficult. A kernel may not even be able to use the abstraction mechanisms it provides to other software. Several constraints prevent this, such as interrupt management, memory management and lack of reentrancy, which makes kernel development even harder for software engineers.

Different Kernel Design Approaches

Naturally, the tasks and features listed above can be provided in many ways that differ in design and implementation. While monolithic kernels execute all of their code in the same address space (kernel space) to increase the performance of the system, microkernels try to run most of their services in user space, aiming to improve maintainability and modularity of the codebase. Most kernels do not fit exactly into one of these categories but fall somewhere between the two designs. These are called hybrid kernels. More exotic designs, such as nanokernels and exokernels, are mostly investigated by researchers and are not in widespread use.

Monolithic Kernels

In a monolithic kernel, all OS services run along with the main kernel thread and therefore reside in the same memory area. This approach provides rich and powerful hardware access. Monolithic systems are easier to design and implement than other solutions, and are extremely efficient if well written. The main disadvantages of monolithic kernels are the dependencies between system components (a bug in a device driver might crash the entire system) and the fact that large kernels become very difficult to maintain.

Microkernels

The microkernel approach consists of defining a simple abstraction over the hardware, with a set of primitives or system calls to implement minimal OS services such as memory management, multitasking, and inter-process communication. Other services, including those normally provided by the kernel such as networking, are implemented in user-space programs, referred to as servers. Microkernels are easier to maintain than monolithic kernels, but the large number of system calls and context switches might slow down the system because they typically generate more overhead than plain function calls.

Microkernels generally underperform traditional designs, sometimes dramatically. This is due in large part to the overhead of moving in and out of the kernel (a context switch) each time data is passed between applications and servers. It was originally believed that careful tuning could reduce this overhead dramatically, but by the mid-1990s most researchers had abandoned this approach. More recent microkernels, optimized for performance, have addressed these problems.
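The source of the extra overhead can be illustrated with a toy model. The sketch below is a deliberate simplification and does not reflect the MINIX 3 IPC interface: the class names, `send_receive`, `read_block` and the `kernel_entries` counter are all hypothetical, and real kernels differ in how many transitions a request costs. It only counts how often control has to enter the kernel to serve one read request in each design.

```python
from typing import Callable, Dict


def read_block(block_no: int) -> bytes:
    """Hypothetical disk service used by both toy kernels."""
    return f"block-{block_no}".encode()


class MonolithicKernel:
    """The service lives inside the kernel: one trap, then a function call."""

    def __init__(self) -> None:
        self.kernel_entries = 0

    def sys_read(self, block_no: int) -> bytes:
        self.kernel_entries += 1        # the application's system call
        return read_block(block_no)     # plain in-kernel function call


class Microkernel:
    """The kernel only routes messages; the service is a user-space server."""

    def __init__(self) -> None:
        self.kernel_entries = 0
        self.servers: Dict[str, Callable[[int], bytes]] = {}

    def register(self, name: str, handler: Callable[[int], bytes]) -> None:
        self.servers[name] = handler

    def send_receive(self, dest: str, message: int) -> bytes:
        self.kernel_entries += 1              # client enters kernel to send
        reply = self.servers[dest](message)   # server does the work in user space
        self.kernel_entries += 1              # server enters kernel to reply
        return reply


if __name__ == "__main__":
    mono = MonolithicKernel()
    micro = Microkernel()
    micro.register("fs", read_block)

    mono.sys_read(7)
    micro.send_receive("fs", 7)
    print("monolithic kernel entries:", mono.kernel_entries)
    print("microkernel kernel entries:", micro.kernel_entries)
```

In this model the monolithic kernel is entered once per request and the microkernel twice. A request that passes through several servers multiplies the difference, which is why IPC cost dominates microkernel performance discussions.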

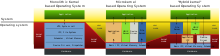

Monolithic kernels vs Microkernels

As a kernel grows, a number of problems become evident. One of the most obvious is that its memory footprint increases. A well-tuned virtual memory system mitigates this to some degree, but not all computer architectures support virtual memory. Reducing the kernel's footprint requires carefully removing unneeded code, which can be very difficult when a kernel with millions of lines of code has non-obvious interdependencies between its parts.

Because of these problems, many researchers considered monolithic kernels obsolete by the early 1990s. As a result, the decision to design Linux as a monolithic kernel rather than a microkernel became the topic of a famous flame war between Linus Torvalds and Andrew Tanenbaum. There is merit on both sides of the Tanenbaum/Torvalds debate.

Most common kernels are monolithic or close to it. Linux is a monolithic kernel, while Windows NT and Apple's XNU kernel (the kernel behind Mac OS X) are usually classed as hybrid kernels that still run most of their services in kernel space. There are many more examples. The majority of microkernels are either research kernels or kernels for embedded systems that perform a single task.

Monolithic kernels tend to be easier to design correctly, and therefore may grow more quickly than a microkernel-based system. However, a bug in a monolithic system usually crashes the entire system. In a correctly designed microkernel system this does not happen, because the servers run apart from the main kernel thread. Monolithic kernel proponents reason that incorrect code doesn't belong in a kernel, and that microkernels offer little advantage over correct code. There are success stories in both camps. Microkernels are often used in embedded robotic or medical computers where crash tolerance is important and most of the OS components reside in their own private, protected memory space. This kind of isolation is impossible with monolithic kernels, even with modern module-loading ones. However, the monolithic model tends to be more efficient because it uses shared kernel memory rather than the slower message passing of microkernel designs.
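The crash-tolerance argument can be sketched with ordinary operating system processes standing in for user-space servers. This is an analogy under stated assumptions, not MINIX 3 code: the "driver" is a small hypothetical script, `run_driver` and `supervise` are made-up helper names, and the restart policy (try again until one run succeeds) is chosen only for the illustration.

```python
import subprocess
import sys
from typing import Iterable, Tuple

# Hypothetical "driver" program. It runs in its own process, so its
# failure cannot corrupt the memory of the supervising process.
DRIVER_SOURCE = """
import sys
if sys.argv[1] == "fail":
    sys.exit(1)          # simulated driver bug
print("driver ok")
"""


def run_driver(mode: str) -> subprocess.CompletedProcess:
    """Start the driver as a separate process and wait for it to finish."""
    return subprocess.run(
        [sys.executable, "-c", DRIVER_SOURCE, mode],
        capture_output=True,
        text=True,
    )


def supervise(modes: Iterable[str]) -> Tuple[int, str]:
    """Restart the driver until one run succeeds.

    Returns the number of attempts and the output of the successful run,
    or an empty string if every attempt failed.
    """
    attempts = 0
    for mode in modes:
        attempts += 1
        result = run_driver(mode)
        if result.returncode == 0:
            return attempts, result.stdout.strip()
    return attempts, ""


if __name__ == "__main__":
    attempts, output = supervise(["fail", "fail", "ok"])
    print(f"driver recovered after {attempts} attempts: {output}")
```

The supervisor survives both simulated crashes because each driver instance has its own address space. If the same faulty code ran inside the supervisor, as a driver does inside a monolithic kernel, the first failure would take the supervisor down with it.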