Next Generation Sequencing (NGS)/Print version


Introduction

ABOUT THIS BOOK

- The first four chapters are general introductions to broad concepts of bioinformatics and NGS in particular. They are 'required pre-requisites', and will be referred to in the rest of the book:

- In the Introduction, we give a nearly complete overview of the field, starting with sequencing technologies, their properties, strengths and weaknesses, covering the various biological processes they can assay, and continuing with a section on common sequencing terminology. We finish with an overview of a typical sequencing workflow.

- In Big Data we deal with some of the (perhaps unexpected) difficulties that arise when dealing with typical volumes of NGS data. From shipping hard drives around the world, to the amount of memory you'll need in your computer to assemble the data when they arrive, these issues often take novices by surprise. We'll get into the file formats, archives, and algorithms that have been developed to deal with these problems.

- In Bioinformatics from the outside we will discuss the interfaces used by bioinformaticians. We will present the command line with its text interface and blinking cursor, but also more user friendly graphical user interfaces (GUIs) which were developed specially for bioinformatics pipelines.

- In Pre-processing we will discuss the best practices for controlling the quality of an NGS dataset, and cleaning out low quality data.

- The next five chapters describe the analyses which can be done using a reference genome sequence, assuming one is available:

- In Alignment we will discuss how to map a set of reads to a reference dataset.

- In DNA Variation we will describe how to call variants (either SNVs, CNVs or breakends) using mapped reads.

- In RNA we will explain how to determine exons, isoforms and gene expression levels from mapped RNA-seq reads.

- In Epigenetics we will describe pull down assays which are used to determine epigenetic traits such as histone or CpG methylation.

- In Chromatin structure we will discuss technologies used to determine the structure of the chromatin, e.g. the placement of the histones or the physical proximity of different chromosomal regions when the DNA lies in the nucleus.

- Finally the last two chapters will describe analyses in the absence of a reference genome:

- De novo assembly will describe how to assemble a genome from NGS reads.

- De novo RNA assembly will explain how to assemble a transcriptome from NGS reads only.

Introduction

Platforms and Technologies

NGS platforms employ different technologies to decode the identity of nucleotides in DNA, or detect covalent modifications such as methylation on the nucleotides.

NGS platforms evolve quickly. New technologies and platforms are usually announced at the Advances in Genome Biology & Technology (AGBT) conference [1].

For educational purposes, see the reviews of NGS platforms published in 2011 [2]. Read more about the sequencing technologies here.

File format and terminology

FASTA

The FASTA format, generally indicated with the suffix .fa or .fasta, is a straightforward, human readable format. Normally, each file consists of a set of sequences, where each sequence is represented by a one line header, starting with the '>' character, followed by the corresponding nucleotide sequence, in multiple lines of regular width (generally 60 or 80 characters wide). In practice, some tools may produce a sequence with a header and a single long line of sequence. For more detailed information see the FASTA Wikipedia page.
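The layout described above (a '>' header line followed by one or more sequence lines) can be read with a few lines of code. The following is a minimal sketch in Python, not a replacement for an established parser; the function name `parse_fasta` and the two toy records are made up for illustration.

```python
def parse_fasta(lines):
    """Yield (header, sequence) pairs from an iterable of FASTA lines.

    Sequence lines are joined, so wrapped (60/80 columns) and
    single-long-line records are handled the same way.
    """
    header, chunks = None, []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        else:
            chunks.append(line)
    if header is not None:
        yield header, "".join(chunks)


# Hypothetical two-record example; the names and bases are invented.
example = """>seq1 first toy sequence
ACGTACGTAC
GTACGT
>seq2 single-line record
TTGACA
""".splitlines()

records = list(parse_fasta(example))
for header, sequence in records:
    print(header, len(sequence))
```

Because the parser is a generator, it can also be fed an open file handle and will hold only one record in memory at a time.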

FASTQ

FASTQ is a text file format (human readable) that provides 4 lines of data per sequence.

- Sequence identifier

- The sequence

- A separator line beginning with '+', optionally followed by comments

- Quality scores

FASTQ format is commonly used to store sequencing reads, in particular from Illumina and Ion Torrent platforms.

Paired-end reads may be stored either in one FASTQ file (with the two mates alternating) or in two separate FASTQ files. The sequence identifiers of paired-end reads may end in "/1" and "/2" respectively.

Example FASTQ entry for one Illumina read:

@EAS20_8_6_1_3_1914/1
CGCGTAACAAAAGTGTCTATAATCACGGCAGAAAAGTCCACATTGATTATTTGCACGGCGTCACACTTTGCTATGCCATAGCATTTTTATCCATAAGATT
+
HHHHHHHHHFHGGHHHHHHHHHHHHHHHHHHHHEHHHHHHHHHHHHHHGHHHGHHHGHIHHHHHHHHHHHHHHHGCHHHHFHHHHHHHGGGCFHBFBCCF

FASTQ data are generally stored in files with the suffix .fq or .fastq, usually compressed with Gzip, which is indicated by the additional suffix .gz or .gzip.

For more detailed information see the FASTQ Wikipedia page.
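The four-line record structure can be checked mechanically. The sketch below assumes the common case of exactly four lines per record (no line-wrapped sequences); `parse_fastq` and the toy read are illustrative names and data, not part of any library.

```python
def parse_fastq(lines):
    """Yield (identifier, sequence, quality) from an iterable of FASTQ lines.

    Assumes exactly four lines per record, which is how most current
    tools write FASTQ; wrapped records are not supported by this sketch.
    """
    rows = [line.rstrip("\r\n") for line in lines if line.strip()]
    if len(rows) % 4 != 0:
        raise ValueError("expected four lines per record")
    for i in range(0, len(rows), 4):
        header, sequence, separator, quality = rows[i:i + 4]
        if not header.startswith("@") or not separator.startswith("+"):
            raise ValueError(f"malformed record starting at non-blank line {i + 1}")
        if len(sequence) != len(quality):
            raise ValueError("sequence and quality lengths differ")
        yield header[1:], sequence, quality


# Invented toy read; "/1" marks the first mate of a pair.
toy = ["@read1/1\n", "ACGTTGCA\n", "+\n", "IIIIHHGF\n"]
fastq_records = list(parse_fastq(toy))
print(fastq_records)
```

For gzip-compressed files, the same function can be given the handle returned by `gzip.open(path, "rt")` from the Python standard library.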

SFF

SFF is a binary file format used to encode sequencing reads from the 454 platform.

http://en.wikipedia.org/wiki/Standard_Flowgram_Format

SAM/BAM

SAM and BAM are file formats used to encode short-read alignments. See the Alignment chapter for more information.

FASTG

FASTG is an emerging file format for genome assemblies that take ambiguities into account. FASTG is like FASTA, but the G stands for ‘graph’.

VCF

The Variant Call Format (VCF) is a specification used in bioinformatics for storing gene sequence variations. See [1] for more information.

Read lengths

As of Feb 2013, the read lengths of second generation sequencing platforms are shorter than those of conventional Sanger sequencing, creating challenges in read mapping and assembly.

- The most widely used Illumina platforms can produce read lengths of up to 250bp. In practice, ~100bp is what is accessible to most researchers worldwide.

- Ion Torrent: varies, typically peaking at 400bp

- SOLiD: 50-75bp

Paired-/Single-ends

- Single-end reads means that each fragment is sequenced from one direction only.

- In paired-end sequencing, a single fragment is sequenced from both the 5' and 3' ends, giving rise to a forward and a reverse read. The two reads can be separated by a certain number of bases (the inner insert size) or can overlap, in which case they can be merged into a single, longer contiguous sequence. Using paired-end reads can improve the accuracy of read mapping onto a reference genome. The typical fragment size (external insert size) is 200bp to 500bp.
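The relationship between fragment size, read length and inner insert size is simple arithmetic. The sketch below assumes both mates have the same length; the function name and the example numbers are chosen only for illustration.

```python
def inner_distance(fragment_size, read_length):
    """Bases between the two mates of a pair.

    A positive value is an unsequenced gap; a negative value means
    the two reads overlap by that many bases.
    """
    return fragment_size - 2 * read_length


gap = inner_distance(fragment_size=300, read_length=100)      # gap between mates
overlap = inner_distance(fragment_size=150, read_length=100)  # mates overlap
print(gap, overlap)
```

With 100bp reads, a 300bp fragment leaves 100 unsequenced bases between the mates, while a 150bp fragment makes the reads overlap by 50 bases.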

Mate-pairs

Mate-pair sequencing differs from paired-end sequencing in how the sequencing library is made. In mate-pair sequencing, 2-5kb fragments are selected and sequenced from both ends, thus giving information on how nucleotides far apart are linked together. Mate-pairs are better suited to studying genomic structural rearrangements and help de novo genome assembly. They also facilitate sensitive structural variant (SV) detection across a wider SV size-spectrum and in repetitive areas of the genome.

Colorspace

Colorspace is a 2-base encoding system commercialized by Life Technologies and used in SOLiD platforms. A technology overview is described here.

Quality scores

A quality score indicates the probability that a base call is incorrect. Quality scores are stored in the FASTQ format.

Various encoding schemes are available; the most common is the Phred quality score.
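A Phred score Q is related to the error probability P by Q = -10 log10(P), so Q20 corresponds to a 1 in 100 chance of a wrong call and Q30 to 1 in 1000. In FASTQ files each score is stored as a single ASCII character. The sketch below assumes the widely used offset of 33 (the "Phred+33" encoding); older files may use a different offset, so treat the default as an assumption to check against your data.

```python
import math


def phred_to_error_probability(q):
    """Probability that a base call with Phred score q is wrong."""
    return 10 ** (-q / 10)


def error_probability_to_phred(p):
    """Phred score for an error probability p (0 < p <= 1)."""
    return -10 * math.log10(p)


def decode_quality_string(quality, offset=33):
    """Convert a FASTQ quality string into a list of Phred scores.

    offset=33 is an assumption (Phred+33); some older files use 64.
    """
    return [ord(character) - offset for character in quality]


scores = decode_quality_string("IHGF")
print(scores)
print([phred_to_error_probability(q) for q in scores])
```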

Error profiles & Sequencing biases

Uses of NGS

DNA

To find mutations in tumor cells.

RNA

To reconstruct the transcriptome (genome-based or de novo) using reverse transcription so that researchers can count how many reads align onto annotated parts of the transcriptome. This is used to compare gene expression in samples that are dramatically different from each other and to build biochemical pathways of an organism.

ChIP

ChIP-sequencing, also known as ChIP-seq, is a method used to analyze protein interactions with DNA. ChIP-seq combines chromatin immunoprecipitation (ChIP) with massively parallel DNA sequencing to identify the binding sites of DNA-associated proteins. It can be used to map global binding sites precisely for any protein of interest. Previously, ChIP-on-chip was the most common technique utilized to study these protein–DNA relations.

Chromatin structure

General NGS Workflow Overview

References

Big Data

Data Deluge

The first problem you face is probably the large size of the NGS FASTQ files, the "data deluge" problem. You no longer only have to deal with microplate readings or digitized gel photos; the size of NGS data can be huge. For example, compressed FASTQ files from 60x human whole genome sequencing can still require 200GB. A small project with 10–20 whole genome sequencing (WGS) samples can generate ~4TB of raw data. Even these estimates do not include the disk space required for downstream analysis.
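The estimate above is a straightforward multiplication, which can be handy to script when planning a project. This is a rough sketch using the per-sample figure quoted above (about 200 gigabytes of compressed FASTQ per 60x human genome) and decimal units; the function name is hypothetical and real sizes will vary with read length, coverage and compression.

```python
GB_PER_SAMPLE = 200  # compressed FASTQ, one 60x human genome (figure from the text)


def raw_storage_tb(samples, gb_per_sample=GB_PER_SAMPLE):
    """Raw FASTQ storage in terabytes, using 1 TB = 1000 GB."""
    return samples * gb_per_sample / 1000


low = raw_storage_tb(10)
high = raw_storage_tb(20)
print(low, high)
```

Twenty samples reach the ~4TB mentioned above before any alignment files or intermediate results are written.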

Storing data

The following options are summarized from a post on BioStars[1]:

- Very high end: enterprise cluster and SAN.

- High end: Two mirrored servers in separate buildings or Cloud.

- Typical: External hard drives and/or NAS with RAID 5/6

Moving data

Moving data between collaborators is also non-trivial. For RNA-Seq samples, FTP may suffice, but for WGS data, shipping hard drives may be the only solution.

Externalizing compute requirements from the research group

It is difficult for a single lab to maintain sufficient computing facilities. A single lab will probably own some basic computing hardware; however, many tasks will have huge computational demands (e.g. memory for de novo genome assembly) that require them to be performed elsewhere. An institution / core facility may host a centralized cluster. Alternatively, one might consider doing the task on the cloud.

- NIH maintains a centralized computing cluster called Biowulf.

- Cloud computing has been suggested as a solution for bioinformatics workloads.[2][3] EBI has adopted a cloud-based platform called Helix Nebula.[4]

References

- Wo, H. (24 March 2011). "Question: Huge Ngs Data Storage And Transferring". Biostars. Biostar Genomics, LLC. Retrieved 28 April 2016.

- Akhlaghpour, H. (3 July 2012). "Genomic Analysis in the Cloud". YouTube. Google. Retrieved 28 April 2016.

- Schadt, E.E.; Linderman, M.D.; Sorenson, J.; Lee, L.; Nolan, G.P. (2010). "Computational solutions to large-scale data management and analysis". Nature Reviews Genetics. 11 (9): 647–57. doi:10.1038/nrg2857. PMC 3124937. PMID 20717155.

- Lueck, R. (16 January 2013). "Big data and HPC on-demand: Large-scale genome analysis on Helix Nebula – the Science Cloud" (PDF). Trust-IT Services. Retrieved 28 April 2016.

Bioinformatics from the outside

- For an in-depth introduction to UNIX, see the Guide to Unix or A Quick Introduction to Unix.

Unix command line: History

The first version of Unix was developed by Bell Labs (part of AT&T) in 1969, making it more than forty years old. Its roots go back to when computers were large and rare, time on them very expensive and shared between many users. Unix was developed so as to allow multiple users to work simultaneously. Unix actually grew out of a desire to play a game called Space Travel and the features that made it an operating system were incidental. Initially it only supported one user and the name Unix, originally UNICS, is a pun on MULTICS, a multi-user system available at the time.

While this might seem strange and unnecessary in a world where everyone has their own laptop, computing is again moving back to remote central services with many users. The compute power required for mapping next-generation sequencing data or de novo assembly is beyond what is available or desirable to have sitting on your lap. In many ways, the “cloud” (or whatever has replaced it by the time you read this) requires ways of working that have more in common with traditional Unix machines than the personal computing emphasised by Windows and Apple Macintosh.

US federal antitrust law prevented AT&T from commercialising Unix but interest in using it increased outside of Bell Labs and eventually they decided to give it away freely, including the source code, which allowed other institutions to modify it. Perhaps the most important of these institutions was the University of California, Berkeley. (A significant proportion of Mac OS X has its roots in the Berkeley Software Distribution (BSD), which distributed a set of tools to make Unix more useful and made changes that significantly increased performance.) The involvement of several universities in its development meant Unix was ideally placed when the internet was created and many of the fundamental technologies were developed and tested using Unix machines. Again, these improvements were given away freely. Some of the code was repurposed to provide networking for early versions of Windows and even today several utilities in Windows Vista incorporate Berkeley code.

As well as being a key part in the development of the early internet, a Unix machine was also the first web server, a NeXT cube. NeXT was an early attempt to make a Unix machine for desktop use. Extremely advanced for its time but also very expensive, it never really caught on outside of the finance industry. Apple eventually bought NeXT, its operating system becoming OS X, and this heritage can still be seen in its programming interfaces. Apple is now the largest manufacturer of Unix machines; every Apple computer, the iPhone, and most recent iPods have a Unix base underneath their facade.

By the early 90s Unix had become increasingly commercially important. This inevitably led to legal trouble: with so many people giving away improvements freely and having them integrated into the system, who actually owned it? The legal trouble cast uncertainty over the freely available Unix versions, creating an opening for another free operating system.

The vacuum was filled by Linux, a freely available computer operating system similar to Unix started by Linus Torvalds in 1991 as a hobby. More correctly, Linux is just the kernel, the central program from which all others are run. Many more tools in addition to this are required to make an operating system. These tools are provided by the GNU project.[1]

Importantly, Linux was written from scratch and did not contain any of the original Unix code and so was free of legal doubt. Coinciding with the penetration of the internet onto university campuses, and the availability of cheap but sufficiently powerful personal computers, Linux rapidly matured with over one hundred developers collaborating over the internet within two years. The real advances driving Linux were social rather than technological, disparate volunteers donating time on the understanding that, in return for giving their work away freely, anything based on their work is also given away freely and so they in turn benefit from improvements.

The idea that underpins this sharing and ensures that nobody can profit from anyone else's work without sharing is “copyleft”, described in a simple legal document called the GNU General Public License,[2] which turns the notion of copyright on its head. (It should be noted that the GNU project, and the philosophy behind it, predate Linux by almost a decade.) Today, Linux has become the dominant free Unix-like operating system with millions of users and support from many large companies.

Getting and installing Ubuntu

Here we describe the Ubuntu distribution (packaging) of Linux, which is one of the most widely used, but all the examples are fairly generic and should work with most Linux, Unix, and Mac OS X computers. There are many different guides on the web about how to install Ubuntu but we recommend installing it as a virtual machine on your current computer.

The Ubuntu Linux distribution is generally easy to use and it is updated (for free) every six months. The examples here use Ubuntu 11.10, named after its release date in October 2011 and also known as “Oneiric Ocelot”. The next version, 12.04 or “Precise Pangolin”, was released in April 2012 and is designated a Long Term Support (LTS) edition, meaning that it will receive fixes and maintenance upgrades for five years before being retired; it is the best option if you don't want to be regularly upgrading your system.

Acclimatisation

A significant effort has been undertaken to make Ubuntu easy to use, so even novice computer users should have little trouble using it. There are quite a few tutorials available for users new to Ubuntu. The official material is available[3] but a quick search on the web will locate much more. In addition, there is a lot of documentation installed on the machine itself. You can access this by moving the mouse towards Ubuntu Desktop at the top left of the screen and clicking on the help menu that appears. In general, the name of the program you are currently using is displayed at the top-left of the screen and moving the mouse to the top of the screen will reveal the program's menus in a similar fashion to how they are displayed on the Mac (although, confusingly, some programs display their menus within their own window rather like a Windows computer).

An alternative way to get help is to click on the circular symbol (a stylised picture of three people holding hands) at the top left of the screen and type help in the search box that appears. For want of a better name, we will refer to the people-holding-hands button as the Ubuntu button although the help text that appears describes it as “Dash home”.

Ubuntu comes free with many tools, including web browsers, file managers, word processors, etc. There are free equivalents available for most of the everyday software people use, and you can browse what is available by clicking on the Ubuntu Software Centre, whose icon at the left of the screen looks like a paper shopping bag full of goodies. The Ubuntu Software Centre is just a starting point and there are many other sources available, both of prepackaged software specifically for Ubuntu, and source code that will require compiling. Search the web for “Ubuntu software repositories” for more information on obtaining additional software.

While there are explicit key combinations for copying and pasting text, just like on Windows or Mac (control-c and control-v in Ubuntu), this convention is not respected by all programs. Unix has traditionally been more mouse centred, with the left mouse button used to highlight text and the middle button used to paste it. You may find yourself accidentally doing this occasionally if you are not used to using the middle mouse button. Starting applications from icons, opening folders, etc. requires only a single click, rather than the double click required on Windows, making the action of pressing buttons and selecting things from menus more consistent with each other. Accidentally double clicking will generally result in an action being done twice, not normally a bad thing but it does mean that impatient users can quickly find their desktop covered in windows.

Perhaps the most important difference you are likely to encounter on a daily basis is that the names of files and directories are case sensitive: README.txt, readme.txt and readme.TXT all refer to different files. This is different from both Windows and Mac OS X, where upper and lower-case characters are preserved in the name but the file can be referred to using any case. (Despite the Unix heritage of OS X, Apple chose this behaviour to maintain compatibility with earlier versions of the Mac operating system.)

Fetching the examples

There are many examples in this tutorial to be tried, enclosed in boxes like the one below, which explains the format of the examples. The example below shows how to automatically download and unpack the file ready for use.

Basics

The command line

While Ubuntu has all the graphical tools you might expect in a modern operating system, so new users rarely need to deal with its Unix foundations, we will be working with the command-line. An obvious question is, why is the command-line still the main way of interacting with Unix or, more relevantly, why we are making you use it? Part of the answer to the first question is that the origins of Unix predate the development of graphical interfaces and this is what all the tools and programs have evolved from. The reason the command-line remains popular is that it is an extremely efficient way to interact with the computer: once you want to do something complex enough that there isn't a handy button for it, graphical interfaces force you to go through many menus and manually perform a task that could have been automated. Alternatively, you must resort to some form of programming (Mac OS X Automator, Microsoft Office macros, etc) which is the functional equivalent of using the command line.

Unix is built around many little tools designed to work together. Each program does one task and returns its output in a form easily understood by other programs. These properties allow simple programs to be combined together to produce complex results, rather like building something out of Lego bricks. The foreword to the 1978 report in the Bell System Technical Journal[4] describes the Unix philosophy as:

"(i) Make each program do one thing well. To do a new job, build afresh rather than complicate old programs by adding new features.

(ii) Expect the output of every program to become the input to another, as yet unknown, program. Don't clutter output with extraneous information. Avoid stringently columnar or binary input formats. Don't insist on interactive input.

(iii) Design and build software, even operating systems, to be tried early, ideally within weeks. Don't hesitate to throw away the clumsy parts and rebuild them.

(iv) Use tools in preference to unskilled help to lighten a programming task, even if you have to detour to build the tools and expect to throw some of them out after you've finished using them."

The rest of this tutorial will be based on using the command-line through a “terminal”. This terminology dates back to the early days of Unix when there would be many “terminals”, basically a simple screen and keyboard, connected to a central computer. The terminal program can be found by clicking on the Ubuntu button and typing terminal in the search box, as shown in Illustration 1. You can also easily access the terminal using just the keyboard, by pressing control-alt-T. Once open, the text size can be changed using the View/Zoom menu options or the font changed entirely using the Edit/Profile Preferences menu option.

While we are using Linux during the workshop, you may not have access to a Linux machine later or may not wish to use Linux exclusively on your computer. You could install Linux as 'dual-boot' on your computer, or run it in a virtual machine (VM), a program on your computer that acts like another computer and can run other operating systems. Several VMs are available; VirtualBox http://www.virtualbox.org/ is free and regularly updated. In any case, knowledge of the command-line is fairly transferable between platforms. Mac OS X also has a command-line hidden away (/Applications/Utilities/Terminal) and, with a small number of eccentricities, everything that works on the Linux command-line should work for OS X. Windows has its own incompatible version of a command-line but Cygwin http://www.cygwin.com/ can be installed and provides an entire Unix-like environment within Windows.

At the beginning of the command-line is the command prompt, showing that the computer is ready to accept commands. The prompt is text of the form user@computer:directory$. Figure 2 has a user called tim in the directory ~ on a computer called coffee-grinder. Having all this information is handy when you are working with multiple remote computers at the same time. The prompt is configurable and may vary between computers; you may notice later that other prompts are slightly different. Some basic commands are shown in Table 1; try typing them at the command-line and press return after the command to tell the computer to run the command.

Files and directories

All files in Unix are arranged in a tree-like structure: directories are represented as branches leading from a single trunk (the “root”) and may, in turn, have other branches leading from them (directories inside directories) and individual files are the leaves of the tree. The tree structure is similar to that of every other common operating system and most file browsers can display the filesystem in a tree-like fashion, for example: part of the filesystem for an Ubuntu Linux computer is displayed in Figure 4.

Where Unix differs from other operating systems is that the filesystem is used much more for organising different types of files. The essential system programs are all in /bin and their shared code (libraries) is in /lib; similarly user programs are in /usr/bin, with libraries in /usr/lib and manual pages in /usr/share/man.

There are two different ways of specifying the location of a file or directory in the tree: the absolute path and the relative path from where we currently are in the filesystem (the current working directory). An absolute path is one that starts at the root and does not depend on the location of the current working directory. Starting with a / to signify the root, the absolute path describes all the directories (branches) we must follow to get to the file in question. Each directory name is separated by a /.

For example, /home/user/Music/TheKinks/SunnyAfternoon.mp3 refers to the file SunnyAfternoon.mp3 inside the directory TheKinks, which is inside the directory Music, which is inside the user's directory, which is inside the directory home, which is connected to the root. If you are familiar with Microsoft Windows, you might notice that the path separator is different. Unix-based systems use a forward-slash (/) rather than the backward-slash (\) used on Windows. You may have noticed that the paths of web pages are also separated by forward-slashes, revealing their Unix origins as a path to a file on a remote machine.
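The way an absolute path breaks down into the root and a chain of directories can be inspected programmatically. The sketch below uses `PurePosixPath` from Python's standard `pathlib` module, which manipulates Unix-style paths as text without touching the filesystem, so the music file does not need to exist.

```python
from pathlib import PurePosixPath

path = PurePosixPath("/home/user/Music/TheKinks/SunnyAfternoon.mp3")

print(path.is_absolute())  # starts at the root
print(path.parts)          # the root followed by each branch, then the leaf
print(path.name)           # the file itself
print(path.parent)         # the directory that contains it

# Without the leading slash the same text is a relative path.
relative = PurePosixPath("home/user/Music")
print(relative.is_absolute())
```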

For convenience, a few directories have special symbols that are synonyms for them and the most common of these are listed in Figure 5. Most of these have a special meaning when at the beginning of a path otherwise they are just a symbol. For example dir/~/ is the directory ~ inside the directory dir in the current directory, whereas ~/dir/ is the directory dir inside the home directory (usually /home/user on Linux, /Users/user on Mac OS X). In both cases the '/' symbols are separators rather than the root directory.

The current location, the working directory, can be displayed at the command-line using the pwd command. Rather than referring to a file by its absolute path, we can refer to it by using a path relative to where we are: a file in the current directory can be referred to by its name, a file in a directory inside our working directory can be referred to by directory/filename (and so on for files inside of directories inside of directories inside of our working directory, etc.). Note that these paths are very similar to how we describe absolute paths except that they do not start with /; absolute paths are relative paths relative to the root (alternatively we could read the initial / as “go to root” and consider them to be relative paths). As shown in Figure 5, the directory above the current directory can be referred to as .. so, if the working directory is /home/user, then the root directory can be referred to as ../.. (go up one directory, then go up another directory). The symbol .. can be freely mixed into paths: the directory examples below the current directory could have path examples/../examples/../examples (needless to say, simply using just examples is recommended).
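The rules for combining a working directory with a relative path, including the .. symbol, can be tried out without changing directory. This is a small sketch using Python's standard `posixpath` module; the helper name `resolve` is made up here, and the simplification is purely textual (it does not follow symbolic links the way the real filesystem would).

```python
import posixpath


def resolve(working_directory, path):
    """Combine a working directory with a path and simplify '..' parts.

    Purely textual: an absolute `path` replaces the working directory,
    and symbolic links are not taken into account.
    """
    return posixpath.normpath(posixpath.join(working_directory, path))


up_two = resolve("/home/user", "../..")
nested = resolve("/home/user", "directory/filename")
roundabout = resolve("/home/user", "examples/../examples/../examples")
absolute = resolve("/home/user", "/usr/bin")
print(up_two, nested, roundabout, absolute)
```

The third call shows why the roundabout path names the same directory as plain examples.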

Commands

Commands are just programs elsewhere on the computer and entering their name on the command-line runs them. Commands have a predictable format:

command -flags target

The command is the name of the program to run, the (optional) flags modify its behaviour and the target is what the command is to operate on, often the name of a file. Many commands require neither flags nor target but Unix tools are generally extremely configurable and even simple commands like date have many optional flags to change the format of their output. (Some utilities also have parodies; see ddate or sl for example.)

As mentioned in Files and directories, there are special directories to contain executable programs and programs within them can be run by typing their name at the command-line. The reason you can run the programs in these directories simply by typing their names is that the operating system knows to look in those directories for programs. In general you will not have permission to place files in these directories and experienced Unix users create their own, normally ~/bin/, to place programs they use frequently. Creating this directory does not make it special; you still have to tell the operating system to look for programs there as well.

The operating system has a variable, $PATH, which is a list of directories in which the computer looks for programs. To add a directory to that list, use the command "export PATH=~/bin:$PATH" where "~/bin" is the directory you want to add. This command is often added to the file ~/.bashrc, which is a list of commands to be run automatically every time a new terminal is opened.

If a program is not in one of the directories listed in $PATH, you cannot run it just by typing its name because the computer doesn't know where to find it. This is true even if the program is in the current directory. Such programs can still be run, but you have to include the path to where they can be found. If the program is in your current working directory, this can be as simple as typing ./program. If the program is elsewhere, just type the absolute or relative path to where it is. You can always use the command-line's autocompletion features (see “tab-completion” below) to reduce the amount of typing needed. To avoid typing paths to commonly-used programs, it is a good idea to add their directories to the PATH variable in ~/.bashrc.
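To make the lookup concrete, the sketch below imitates in Python what happens when a command name is typed: each directory in a PATH-style list is checked in order and the first executable file with that name wins. It is an illustration of the idea rather than the shell's actual implementation; `find_program`, `prepend_to_path` and the tool name are hypothetical, and a temporary directory stands in for ~/bin.

```python
import os
import tempfile


def find_program(name, path_value):
    """Return the first executable called `name` in a PATH-style list."""
    for directory in path_value.split(os.pathsep):
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


def prepend_to_path(directory, path_value):
    """Equivalent in spirit to: export PATH=directory:$PATH"""
    return directory + os.pathsep + path_value


search_path = os.pathsep.join(["/usr/bin", "/bin"])

with tempfile.TemporaryDirectory() as my_bin:  # stands in for ~/bin
    tool = os.path.join(my_bin, "hypothetical_tool_xyz")
    with open(tool, "w") as handle:
        handle.write("#!/bin/sh\necho hello\n")
    os.chmod(tool, 0o755)  # give it execute permission

    before = find_program("hypothetical_tool_xyz", search_path)
    after = find_program("hypothetical_tool_xyz",
                         prepend_to_path(my_bin, search_path))

print(before)  # not found: its directory was not on the list
print(after)   # found once the directory was prepended
```

Python's standard library offers `shutil.which`, which performs the same kind of search against the real $PATH.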

One thing you'll quickly discover is that the mouse does not move the cursor in the terminal. The terminal interface predates the popularity of mice by decades and alternative methods of efficiently moving around and editing have been developed. There are keyboard short-cuts defined for most common operations, and a few of these are listed in Figure 6. Probably the most useful shortcut is the tab key. It can be used to complete command names and paths in the filesystem (called 'tab-completion'). Pressing tab once will complete a path up to the first ambiguity encountered and pressing again gives a list of possible completions (you can type the next letter or so of the one you want and press tab again to attempt further auto-completion).

A record is kept of the commands you have entered, and the history command can be used to list them so you can refer back to what you did earlier. The history can also be searched: Control-r starts a search and the computer will match against your history as you type; typing enter accepts the current line, typing Control-r again goes to the next match and Control-g cancels the search. History can also be referred to by entry number, listed using the history command: entering !n on the command-line will repeat history entry n, entering !! will repeat the last command.

There are many commands, often quite terse, for manipulating files and a few of the more useful of these are shown in Table 4. Many of the commands for Unix have short names, often only two or three letters, so typing errors can easily have unintended and severe consequences! Be careful what you enter, because Unix rarely gives you a second chance to correct mistakes. Some Unix machines have the sl command to encourage accurate typing.

Figure 7 shows a few commands for manipulating files, with brief explanations.

On the Unix command line, some symbols have special meanings. A slash, '/', separates directory names in a path, an asterisk, '*', is a wildcard, and so on. However, there are many circumstances when it is preferable for symbols not to have a special meaning, the most common example being when the file name contains a space (a space is a special character in the sense that it is interpreted as a break between command-line options). The character in question can be “escaped” by prefixing it with a '\' to remove its special meaning so, for example: my file.txt is read as two separate names, my and file.txt, but my\ file.txt is a single file called 'my file.txt'.
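The effect of escaping a space can be seen by splitting a command line into words the way a Unix shell does. The sketch below uses Python's standard `shlex` module, which follows shell-like quoting rules; the file name is invented for illustration.

```python
import shlex

# Command, flag and target are separated by spaces.
plain = shlex.split("ls -l /home/user")

# An unescaped space splits the file name into two arguments...
unescaped = shlex.split("cat my file.txt")

# ...while a backslash keeps the space as part of one name.
escaped = shlex.split(r"cat my\ file.txt")

# shlex.quote produces a form that is safe to paste into a shell.
quoted = shlex.quote("my file.txt")

print(plain, unescaped, escaped, quoted)
```

Wrapping the name in quotes, as `shlex.quote` does, is an alternative to escaping each special character individually.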

Files beginning with a . character are hidden by default and will not appear in the output of ls or equivalent (or in the file browser when you're using a graphical user interface). Generally, hidden files are those used directly by the computer or programs, containing configuration information not intended for the average user to understand or use.

Reading and writing permission

All files and directories have a set of permissions associated with them, describing who is allowed to read, write or execute them. There are three basic permissions: read r, write w, and execute x. The meanings of read and write are fairly obvious, but execute has two meanings depending on context. For normal files, execute permission is used on files containing executable code (i.e. programs) to give users permission to run that program. For a directory, x permission allows a user to enter that directory and access the files it contains (listing their names additionally requires r permission). There are three categories of user: owner u (generally the user who created the file), group g (the group of users assigned to the file, usually a group the owner belongs to), and other o (everyone else).

The permissions for each file are described as a string of nine characters, three for each user category. The three positions assigned to each user category correspond to the three types of permission (r, w, and x, in that order). If that user category has a given permission, the appropriate letter will appear. If not, the letter will be replaced with a dash '-'. For example, if a user category has permission to read and execute a file, but not write it, their triplet will look like r-x. The permission string rwxr-x--- means that the owner has permission to read, write or execute, users in the same group have read and execute permission and other users have no permissions.
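The nine-character string can be decoded mechanically. The following is a minimal sketch in Python, not part of any Unix tool: the function name parse_permissions is hypothetical, and it only handles the plain r, w, x and - characters described above.

```python
import stat

PERMISSIONS = ("read", "write", "execute")
CATEGORIES = ("owner", "group", "other")


def parse_permissions(perm_string):
    """Split a nine-character string such as 'rwxr-x---' by user category."""
    if len(perm_string) != 9:
        raise ValueError("expected nine characters, e.g. 'rwxr-x---'")
    parsed = {}
    for index, category in enumerate(CATEGORIES):
        triplet = perm_string[3 * index:3 * index + 3]
        # A dash means the permission at that position is not granted.
        parsed[category] = [
            name for name, flag in zip(PERMISSIONS, triplet) if flag != "-"
        ]
    return parsed


example = parse_permissions("rwxr-x---")
print(example)

# stat.filemode renders numeric mode bits the way `ls -l` does; the
# leading character is the file type ('-' for a regular file).
rendered = stat.filemode(stat.S_IFREG | 0o750)
print(rendered)
```

The octal number 0o750 is simply another way of writing the same three triplets (7 = rwx, 5 = r-x, 0 = ---).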

The owner of a file can change its permissions. Some programs will do this automatically if they are being run by the file's owner, giving the impression that the permissions have been ignored. Running rm -f is the most common time a user will run into this behaviour: by default rm will prompt before removing write-protected files (i.e. files you don't have permission to write), but the -f (force) flag tells it not to bother asking and just remove the file.

Dealing with multiple files

Often, especially when running scripts or organising files, you will need to be dealing with multiple files at once. Rather than typing each file name out explicitly, we can give the computer a pattern instead of a filename. All filenames are checked against the pattern and the computer automatically generates a list of all the matching files to use when running the command. Patterns are created using symbols that have a special meaning. For example: * means match anything (or nothing), so a*b is a pattern that matches any filename beginning with a and ending with b including the file ab. Figure 8 contains a list of special symbols useful for constructing patterns.

As mentioned above, pattern matching occurs before a command is run and the pattern is replaced by the list of matches. The command never sees the pattern, just the results of the match.
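The expansion step can be imitated outside the shell. This sketch uses Python's standard fnmatch module as a stand-in for the shell's own matching (an assumption made for illustration; the shell has extra rules, for example for hidden files), and the file names are made up.

```python
import fnmatch

# Hypothetical directory listing.
filenames = ["ab", "acb", "alphab", "abc", "b", "Ab"]

# Every name is checked against the pattern, as the shell would do.
matches = [name for name in filenames if fnmatch.fnmatchcase(name, "a*b")]

# The command receives the expanded list, never the pattern itself.
command_line = ["ls"] + matches
print(command_line)
```

Note that ab matches because * may stand for nothing, while Ab does not because matching is case-sensitive.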

Running multiple programs

From early on in its development, Unix was designed to run multiple programs simultaneously on remote machines and support for this is integrated into the command-line. Jobs (scripts, programs, or other fairly self-contained things running from the command line) can be divided into two types, foreground jobs and background jobs, based on how they affect the terminal. A foreground job temporarily replaces the command-line and you cannot enter new commands until it has finished, whereas a background job runs independently and allows you to continue with other tasks. Only foreground jobs receive input from the keyboard, so interactive programs like PAUP* should be run as foreground (although you could set up a compute intensive analysis, background it and continue with other tasks while it is running. Later, when the calculations have finished, the program can be made foreground again so interaction can continue). Although background jobs leave your command-line mostly free to do other things, they do send their output to the terminal you launched them from, so you might see it popping up in the middle of another task, which can be confusing. If you are running multiple background jobs, their output will be interleaved based on when it was produced, with no indication of which program produced the output.

As hinted in Figure 9, there is a difference between a job and a process. A process is a single program running on the machine, and each process is uniquely numbered with a pid (process ID). You can list all the processes you are running, including the command-line itself (generally called bash, but on some Unix systems it may be zsh or tcsh) using ps (or ps -a if you want to see what all the other users of the machine are doing). The command-line itself is just a process (program) running on the computer, albeit one specially designed for starting, stopping and manipulating other processes. Processes are the fundamental method of keeping track of what is running on the computer. Jobs, on the other hand, are things entered on the command-line and may include several programs logically connected together by pipes (see In, out and pipes for details) to achieve a task. The command-line splits the job into several processes and runs them, possibly simultaneously. See the illustrative example in Figure 10.

In, out and pipes

Where possible, Unix commands behave rather like filters, or the mathematical concept of a function: they read from input, manipulate that input, and write the output. This might sound trivial, tautologous even, but it enables simple commands to be combined to produce complex results. Every command reads from stdin (short for standard in) and writes to stdout (short for standard out). By default stdin is whatever gets typed in the terminal from the keyboard, and stdout is connected to the current command-line, so results are displayed on the screen. stdout can easily be redirected to a file instead by using the greater-than operator, >. > filename redirects stdout to the file specified for later perusal. By chaining many simple commands together, complex transformations of the input can be achieved. The following is an advanced example, showing how a complex output can be achieved using a series of smaller steps. You may not yet have sufficient understanding of the shell to follow everything in this example but try to work through it and see what each step is doing. The manual pages for each command (see Getting help) might be useful.

Compression

The aim of compression is to make files smaller, which is useful for both saving disk space and making it quicker to send files over the internet. Some types of programs that send data over the internet have the ability to transparently compress files before sending and uncompress at the other end. Some web servers implement this but the most important examples for us are scp and sftp (two command-line programs used to transfer files over networks), which can each be given the -C option to request compression.

Simply put, compression programs look for frequently repeated patterns in the file and remove this redundancy in a manner that can be undone later. Text files tend to compress very well, with 100MB worth of Wikipedia being compressible into less than 16MB (see the Hutter Prize, http://prize.hutter1.net/), and, in particular, biological sequences tend to be very compressible since the size of the alphabet of nucleotides or amino acids is small.

The two most common tools for compressing files are gzip and bzip2, with their respective tools for uncompressing being gunzip and bunzip2. gzip is the de-facto standard; bzip2 tends to produce smaller files but takes longer to compress them. On the Windows platform, the Zip compression method (often known as WinZip, http://www.winzip.com/) is favoured and many Unix platforms provide zip and unzip tools to deal with these files. Non-Linux Unix platforms, like Mac OS X for example, have older tools called compress and uncompress that are rarely used any more. Support for compress'd files on Linux can be patchy and unreliable. For example, a machine one author has access to has a compress manual page but no actual tool installed.

A final method to be aware of, that is becoming more popular, is 7-zip (7za). 7-zip can produce smaller files than all the above methods, again at the expense of taking longer to compress. A list of file suffixes that can be used to identify what files are compressed using what method is provided in Figure 11.

Compression works better if files are combined and then compressed together, rather than compressing them individually, since this allows the compression program to spot repeated patterns between the files. On Unix, the process of packing several files into a single file (and unpacking them again) has historically been separate from the process of compression, in keeping with the philosophy of having little tools that do one thing well. The Unix tool for packing and unpacking files is tar, short for “tape archiver”; the odd name reflects a heritage going back to 1979, when writing files to magnetic tape was a common method of storage.

Below is an example of using tar to pack files into an archive and then extract them again:

    ls
    chimp.fasta human.fasta macaque.fasta orangutan.fasta
    # Pack into single file. The suffix is your responsibility. 'c' means create, and
    # 'f' means that the next argument is the filename to write to.
    tar -cf sequences.tar *.fasta
    # Note that the original files are untouched
    ls
    chimp.fasta human.fasta macaque.fasta orangutan.fasta sequences.tar
    # Delete all sequences
    rm *.fasta
    # 'x' means extract
    tar -xf sequences.tar
    ls
    chimp.fasta human.fasta macaque.fasta orangutan.fasta sequences.tar

Over time, the features of tar have increased to make it more convenient and modern versions are now capable of packing and compressing files in a single step, as in the example below.

    ls
    chimp.fasta human.fasta macaque.fasta orangutan.fasta sequences.tar
    # Pack and gzip sequences simultaneously ('z' tells tar to use gzip)
    tar -zcf sequences.tgz *.fasta
    # List the contents without extracting
    tar -ztf sequences.tgz
    chimp.fasta human.fasta macaque.fasta orangutan.fasta
    # More recent versions of tar can also bzip2 files
    tar -jcf sequences.tbz2 *.fasta
    tar -jtf sequences.tbz2
    chimp.fasta human.fasta macaque.fasta orangutan.fasta

Compression and decompression are actually done by the same program. Decompression program names like 'gunzip' are actually just convenient aliases that tell the computer to call the gzip program with the unzipping flags.
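Packing and compressing can also be scripted. The sketch below uses Python's standard tarfile module to mirror tar -zcf and tar -ztf; the FASTA contents are placeholders invented for the example, and everything happens in a temporary directory so no real files are touched.

```python
import tarfile
import tempfile
from pathlib import Path

species = ["chimp", "human", "macaque", "orangutan"]

with tempfile.TemporaryDirectory() as tmp:
    workdir = Path(tmp)
    # Create small placeholder FASTA files to pack.
    for name in species:
        (workdir / f"{name}.fasta").write_text(f">{name}\nACGTACGTACGT\n")

    archive = workdir / "sequences.tgz"
    # Mode "w:gz" packs and gzip-compresses in one step, like `tar -zcf`.
    with tarfile.open(archive, "w:gz") as tar:
        for fasta in sorted(workdir.glob("*.fasta")):
            tar.add(fasta, arcname=fasta.name)

    # Listing the members without extracting corresponds to `tar -ztf`.
    with tarfile.open(archive, "r:gz") as tar:
        members = tar.getnames()

print(members)
```

Replacing "gz" with "bz2" in both modes would give the bzip2 variant.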

Working on remote computers

Why use a remote computer? There are many reasons: First, central computing resources tend to be much larger, more reliable and more powerful than your laptop or PC – if you need to do a lot of work or use a lot of data then you may have no option but to use a bigger computer.

There is also a world of difference between server-quality hardware and the stuff on your desk. Uninterruptible power supplies (i.e. backup batteries for when the power goes out) are one example. Servers also tend to have redundant components and memory that can detect and correct errors. At the top end, servers can detect and isolate faulty parts, report the problem, and continue running. Often the first time users of a central server know that a fault occurred is when an engineer turns up with a replacement part.

If you have a job that will take a long time to run, for instance Bayesian phylogenetic methods, you may not want to commit to leaving your personal computer untouched for long enough to complete the analysis (and do you really trust your colleagues not to turn it off?), whereas central facilities are permanently on and have batteries to prevent small glitches in the power supply from affecting the computers. Lastly, and most importantly, central computers tend to have much more rigorous and tested policies for backing up data. Do you do regular backups? Are they kept in a separate physical location from the original? When was the last time you checked that the backup actually worked?

SSH (short for Secure SHell) is a method of connecting to other computers and giving access to a command-line on them; once we have a command-line we can interact with the remote computer just as we interact with the local one. SSH replaces an older method of connecting to remote computers called telnet, which sends everything, including your password, as normal undisguised text so anyone can read it. It is not a good idea to use telnet unless you know what you are doing and you have no other option. Similarly, avoid FTP (File Transfer Protocol) for transferring files if you have sftp or scp available.

As well as keeping communications between your computer and a remote computer secure, SSH also allows you to verify that the remote computer is the computer it claims to be (there is no point keeping traffic secure if you send it to the wrong place) and prevents someone sitting in the middle of the connection listening to each message then passing it on, pretending to each side to be the other. (This is known as a Man-in-the-Middle attack, http://en.wikipedia.org/wiki/Man-in-the-middle_attack. Both sides think they are communicating with the other but are actually communicating with an intermediary who copies all messages then forwards them on.) The method used to verify identity, without possibility of forgery even if someone else can copy and manipulate all messages, is very interesting and has many other uses. See http://en.wikipedia.org/wiki/Public-key_cryptography and http://en.wikipedia.org/wiki/Digital_signature for details. If verification fails, you will be warned with a message like that in Figure 13 and the computer will refuse to connect.

By far the majority of these warnings are caused by inept computer administration rather than malice (for instance, if someone has upgraded the other machine incorrectly so it appears to be a different computer, you will get this kind of error). If you are sure it is safe, the warning can be dealt with by deleting the appropriate line for the computer from the ~/.ssh/known_hosts file.

Graphical programs can also be run on remote machines, but expect pauses unless you have a very, very fast internet connection. The system that enables this is called the X Window System (or just X, or X11); if you are wondering where the X came from, X is the successor to the W Window System. You can use the -X flag when you run ssh to allow the remote computer to open programs in new windows on your local display, provided you have software on your local computer that understands the instructions being sent. Linux computers use such software by default for display and Mac OS X comes with software that can be used (and is started automatically by ssh in the following example). On Windows, the Cygwin software provides the required functionality. Below is an example of using ssh with the -X flag.

Transferring files

It is possible to transfer files between computers using SSH alone but this is not recommended since more friendly interfaces exist. Of course, there are many graphical file transfer programs available. Without recommending particular programs, Cyberduck (http://cyberduck.ch/) for Mac OS X and WinSCP (http://winscp.net/) for Windows appear to be useful options, but there are many more. Alternatively, under Mac and Unix, it is possible to mount directories on remote computers so they appear to be local; search for sshfs for details.

When transferring files, silent errors are extremely rare but can happen, so we would like to be able to verify that the file received is identical to the one sent. Short files could be checked by eye, but this can't be automated without transferring the file again (which might also get an error). A common technique to verify correct transfer is to calculate the md5 (Message Digest algorithm 5) of both files and compare these values. The md5 is a short string of characters that identifies a file, and two different files are extremely unlikely to share the same string: if a file changes, its md5 will (very probably) change and so we know that a change occurred. The chance of two non-identical random files having the same md5 is about 1 in 3.4e38 (the md5 is 128 bits long, and 2^128 is roughly 3.4e38). When checking large numbers of files, the chance that there are two files in the set with the same md5 increases rapidly but will still be small enough for realistic uses. The md5 only protects against accidental corruption: techniques for deliberately constructing two different files with the same md5 are known, so it should not be relied upon where tampering is a concern. More rarely, you may come across SHA sums (shasum on both Unix and Mac computers), which are used in the same way as md5s but have an even smaller chance that two files share the same string.
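As a minimal sketch of the checksum comparison, the following Python snippet computes digests with the standard hashlib module. The helper name file_digest and the three small files are invented for the example; on the command line the md5sum (Linux) or md5 (Mac) tools serve the same purpose.

```python
import hashlib
import os
import tempfile


def file_digest(path, algorithm="md5", chunk_size=1 << 20):
    """Return the hex digest of a file, reading in chunks to bound memory use."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


with tempfile.TemporaryDirectory() as tmp:
    # Hypothetical files: an original, a faithful copy and a damaged copy.
    contents = {
        "sent.fasta": b">seq1\nACGTACGT\n",
        "received.fasta": b">seq1\nACGTACGT\n",
        "damaged.fasta": b">seq1\nACGTACGA\n",
    }
    digests = {}
    for name, data in contents.items():
        path = os.path.join(tmp, name)
        with open(path, "wb") as handle:
            handle.write(data)
        digests[name] = file_digest(path)

transfer_ok = digests["sent.fasta"] == digests["received.fasta"]
damage_detected = digests["sent.fasta"] != digests["damaged.fasta"]
print(transfer_ok, damage_detected)
```

Passing algorithm="sha256" to file_digest gives a SHA sum instead, with no other change to the comparison.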

Getting help

General help with Ubuntu has already been covered in “Acclimatisation”; alternatively, just find someone to ask. As with everything else, the web is a rich source of good, bad, and down-right weird tutorials.

If you have little or no programming experience, Python (http://python.org/) is a good choice for learning how to do useful bioinformatics scripting, especially in conjunction with the Biopython module (http://biopython.org/).

Unix is generally very well documented, although the documentation is often aimed at more experienced users. The manual pages all tend to follow the same format, and it's a good idea to become familiar with it. The page will start with a description of what the command does and a summary of all its flags. Optional flags will be enclosed in square brackets. Next comes a full description of the command and detailed descriptions of what each flag does. Sometimes there is also a section containing examples of usage. Mac OS X is generally very consistent about man pages but Linux derivatives can be a mixed bag.

Variables and programming

So far, we have only used the command-line to run other programs and to chain them together to achieve more complex results. The command-line tools can be used like a programming language in their own right, and we can write little programs to automate common tasks; this is often referred to as scripting rather than programming, although the distinction is not really relevant.

Obviously learning to program is not something that can be taught in an hour or two, and even experienced programmers take several days to become productive in a new language, so this section can give little more than a taste of what is possible; however, it should be possible to show how you could save a lot of time with a little investment up front. If you are doing similar things to large number of files, many sequences for example, typing the same command over and over on the command line is time-consuming, tedious, and prone to error, especially as you get bored. Scripting can save you a lot of time and allow you to get on with something else while the computer takes on that task for you. Think about the last time you needed to rename 100 files, or change the format of thousands of gene alignments so they are compatible with your phylogeny program. Learning a little bit of scripting can speed up these tasks tremendously. As with everything, there are many tutorials available on the web and a search for bash scripting tutorial or bash scripting introduction will yield many examples of varying completeness and comprehensibility.

In order to provide you with a little bit of programming background, we've prepared a small general tutorial below:

The first thing to introduce is the variable. A variable is just a name for another piece of data; a useful analogy is that of a labelled box: every time we see the label, we replace it conceptually with the contents of the box. The ability to manipulate variables, changing the state of the computer, is fundamental to programming. Here we'll introduce two useful cases: shortening common directory paths and performing the same operations on many files. In bash scripting, variables are referenced with a dollar sign followed by the name of the variable: $NAME. There are some restrictions on the characters that can be part of a variable name, and variable names cannot start with a number. As a rule of thumb it's a good idea to only use upper- or lower-case letters in your variable names.

A variable can refer to the name of a file and we can write things at the command-line using the variable instead of the name explicitly: change the variable and we run exactly the same commands on a different file. One way to take advantage of this would be to set the variable to one of several files and use the history to repeat a set of commands. Of course, if the commands write their output to a file then that would have to be renamed each time, otherwise the output for each file would overwrite that of the previous one. Shell scripting provides an alternative: the computer can be told to set the variable to each of many file names in turn and the value of the variable can be edited automatically to provide the name of a unique output file.

A common Unix practice is to place frequently used sets of commands into a file, called a script, for reuse, thereby avoiding the errors that creep in when retyping them. Writing a script also means that complex operations with many steps can be tested before you commit to running them over many files, something that could potentially take days if we are dealing with large numbers of genes. Scripts can be written and modified in any common text editor but must be saved in text format; nano is a good basic editor that is fairly intuitive to use but there are many others more specifically designed with programmers in mind. Alternatively you could use gedit, a program more like Notepad on Windows (to access gedit, click the Ubuntu button and search for gedit; entering gedit & at the command-line will also work).

Line endings – compatibility problems

Even after the standard alphabet for computers was established (ASCII, the American Standard Code for Information Interchange) there was no agreement about how to indicate the end of a line. ASCII provides two possibilities: line-feed '\n' and carriage-return '\r', based on how old type-writers and tele-type terminals used to work: a carriage-return moves the carriage, the position at which the next character is printed, back to the beginning of the line, and a line-feed moves the paper one line down but doesn't change where the carriage is. On Unix a '\n' character is taken to mean “line-feed and carriage return” and this is used to separate lines of text. On Windows, lines are separated by the pair of characters '\r\n' (in that order) and old versions of Apple operating systems (prior to OS X) use '\r' to separate lines. The situation on Mac OS X is more complex since it must deal with both its Mac and Unix heritage; officially '\n' now separates lines in files but programs have to be able to deal with both conventions.

To further complicate things, some methods of transferring files between machines try to automatically convert the line endings for you. This is generally a mistake. Specifically, an old file transfer method called FTP (File Transfer Protocol) has two modes, text and binary, and text mode will attempt to translate line endings. Unix platforms default to binary and are generally safe. The only case where you need to be careful is transferring files from Windows using the command-line FTP application. If you transfer a binary file over FTP in text mode, the received file will be corrupted irretrievably. If in doubt, see Transferring files for how to verify that your file has transferred correctly.

If you've managed to read through to here, you're probably thinking: a) that's complicated, and b) why haven't I noticed this? The answer is that it used to cause problems in the past but programmers are aware of the issues nowadays and programs tend to do the right thing. Some programming languages like Perl even deal with these problems transparently so even programmers don't need to be aware of them any more.
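When a file with foreign line endings does cause trouble, the three conventions are easy to tell apart and to normalise. The sketch below is one possible approach in Python; the function names are hypothetical and the input is a made-up byte string mixing all three conventions.

```python
def count_line_endings(data: bytes) -> dict:
    """Count Windows (\\r\\n), old Mac (\\r alone) and Unix (\\n alone) endings."""
    windows = data.count(b"\r\n")
    return {
        "windows": windows,
        "old_mac": data.count(b"\r") - windows,
        "unix": data.count(b"\n") - windows,
    }


def to_unix_line_endings(data: bytes) -> bytes:
    """Convert every line ending to a single line-feed."""
    # Handle the two-character Windows pair first so that it does not
    # become two separate line breaks.
    return data.replace(b"\r\n", b"\n").replace(b"\r", b"\n")


mixed = b"first\r\nsecond\rthird\n"
counts = count_line_endings(mixed)
converted = to_unix_line_endings(mixed)
print(counts, converted)
```

Only apply such a conversion to text files: a binary file may legitimately contain these byte values.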

GUIs

Galaxy

Galaxy is an open source GUI toolset for a variety of NGS applications. It can be accessed through the Penn State University server[5] or an institution may set up their own server. Users can save and publish data histories for future use or for others to use. Its tutorials and graphic interface make it simple to learn and easy to use.

Users may also want to run their own Galaxy instance, for testing, tool development or production use. A preconfigured Docker container makes this straightforward.

The following demonstrates an example setup.

The user:

- downloads and installs Docker (https://www.docker.com)

- pulls the galaxy-stable image from Docker Hub (https://hub.docker.com/)

docker pull bgruening/galaxy-stable

- runs the docker container

docker run -itp 8080:80 -v /local/dir/:/export bgruening/galaxy-stable

- can now access the fully functional galaxy instance at http://localhost:8080

GeneTalk

5 steps to analyze VCF files in GeneTalk at www.gene-talk.de

- Upload VCF file

- Edit pedigree and phenotype information for segregation filtering

- Filter your VCF file by editing the following filtering options

- Functional filter (filter out variants that have effects on protein level)

- Linkage filter (filter out variants that are on specified chromosomes)

- Gene panel filter (filter variants by genes or gene panels, subscribe to publicly available gene panels or create your own)

- Frequency filter (show only variants with a genotype frequency lower than specified)

- Inheritance filter (filter out variants by presumed mode of inheritance)

- Annotation filter (show only variants that are listed in databases)

- View results and annotations

- Write your own annotations

BaseSpace

References

- ↑ "GNU Operating System". Free Software Foundation, Inc. 11 April 2016. Retrieved 28 April 2016.

- ↑ "What is Copyleft?". Free Software Foundation, Inc. 3 October 2015. Retrieved 28 April 2016.

- ↑ Ubuntu Documentation Team. "Official Ubuntu Documentation". Canonical Ltd. Retrieved 28 April 2016.

- ↑ McIlroy, M.D.; Pinson, E.N.; Tague, B.A. (1978). "Unix Time-Sharing System: Forward". The Bell System Technical Journal. 57 (6): 1899–1904.

- ↑ "Galaxy". usegalaxy.org. Galaxy Project. Retrieved 28 April 2016.

Pre-processing

FASTQ files – discussion of the various quality encodings

FASTQ files extend the sequence information in FASTA files by also including information about sequence quality. Each record in a FASTQ file typically consists of four lines:

- A line starting with @ and containing the sequence identifier

- The actual sequence

- A line starting with + followed by an optional sequence identifier

- A line with quality values encoded as ASCII characters, one character per base

As such, the 2nd and 4th lines must have the same length. One such entry is given below, showing one sequence beginning "ATGTCT":

    @HWI-ST999:102:D1N6AACXX:1:1101:1235:1936 1:N:0:
    ATGTCTCCTGGACCCCTCTGTGCCCAAGCTCCTCATGCATCCTCCTCAGCAACTTGTCCTGTAGCTGAGGCTCACTGACTACCAGCTGCAG
    +
    1:DAADDDF<B<AGF=FGIEHCCD9DG=1E9?D>CF@HHG??B<GEBGHCG;;CDB8==C@@>>GII@@5?A?@B>CEDCFCC:;?CCCAC
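The four-line layout can be read with a few lines of Python. This is a minimal sketch that assumes strictly four lines per record (no wrapped sequences); the function name read_fastq and the two toy records are made up for illustration, and established libraries such as Biopython provide more robust parsers.

```python
import io


def read_fastq(handle):
    """Yield (identifier, sequence, quality) from four-line FASTQ records."""
    while True:
        header = handle.readline().rstrip("\r\n")
        if not header:
            return  # end of file (or a blank line) ends the parse
        sequence = handle.readline().rstrip("\r\n")
        separator = handle.readline().rstrip("\r\n")
        quality = handle.readline().rstrip("\r\n")
        if not header.startswith("@") or not separator.startswith("+"):
            raise ValueError(f"malformed record near {header!r}")
        if len(sequence) != len(quality):
            raise ValueError(f"sequence/quality length mismatch in {header!r}")
        yield header[1:], sequence, quality


# Two toy records; note the quality lines that begin with '@' and '+'.
example = io.StringIO("@read1\nACGT\n+\n@III\n@read2\nGGCC\n+read2\n+5?I\n")
records = list(read_fastq(example))
print(records)
```

Reading by position within the record, rather than by searching for lines that start with @, is what keeps the quality strings from being mistaken for headers.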

Here a quality value ranging from -5 to 41 is added to an offset and the character with the resulting code is taken from the ASCII table, so the whole record can be represented as text. Although Illumina made multiple changes to the quality format and eventually returned to almost Sanger encoding, the most important difference is whether the offset is 33, as in Sanger and Illumina v1.8 and later, or 64, as in previous Illumina (and Solexa) formats. As you can see from the chart, if you find any of the following characters: !"#$%&'()*+,-./0123456789: your offset must be 33, whereas any of the following characters KLMNOPQRSTUVWXYZ[\]^_`abcdefgh point towards an offset of 64. The above example thus uses an offset of 33, as its first quality character is a 1. Also bear in mind that the @ and + signs are valid quality characters, so a line starting with @ or + could just be the beginning of a quality string.

See the quality chart below which is modified from the wikipedia article.

    SSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSS.....................................................
    ..........................XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX......................
    ...............................IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII......................
    .................................JJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJJ......................
    LLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLLL....................................................
    !"#$%&'()*+,-./0123456789:;<=>?@ABCDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrstuvwxyz{|}~
    |                         |    |        |                              |                     |
    33                        59   64       73                             104                   126
    0........................26...31.......40
                             -5....0........9.............................40
                                   0........9.............................40
                                      3.....9.............................40
    0........................26...31........41

    S - Sanger and Illumina 1.8+, Offset 33, Quality range (0, 40) (!,I)
    X - Solexa, Offset 64, Quality range (-5, 40) (;,h)
    I - Illumina 1.3+, Offset 64, Quality range (0, 40) (@,h)
    J - Illumina 1.5+, Offset 64, Quality range (3, 40) (B,h)
    L - Illumina 1.8+, Offset 33, Quality range (0, 41) (!,J)
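The rule of thumb for telling the two offsets apart can be written down directly. The following Python sketch is an illustration only: the function names are hypothetical, and it returns None when every character lies in the range shared by both encodings (';' to 'J'), in which case more reads are needed to decide.

```python
def guess_offset(quality_strings):
    """Guess the ASCII offset (33 or 64) from the characters observed."""
    codes = [ord(char) for quality in quality_strings for char in quality]
    if not codes:
        return None
    if min(codes) < ord(";"):
        return 33  # characters before ';' only occur with offset 33
    if max(codes) > ord("J"):
        return 64  # characters after 'J' only occur with offset 64
    return None  # ambiguous: all characters are valid in both encodings


def decode_quality(quality, offset):
    """Convert a quality string into a list of integer quality values."""
    return [ord(char) - offset for char in quality]


sanger_like = ["1:DAADDDF<B<AGF=FGIE"]
old_illumina_like = ["hhhhgffe"]

print(guess_offset(sanger_like), decode_quality("!5I", 33))
print(guess_offset(old_illumina_like), decode_quality("@Th", 64))
```

In practice one would feed in the quality lines of a few thousand reads rather than a single string, since a short high-quality read can easily be ambiguous.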

Presentation of the metrics used in QC

When you turn to quality control there are various metrics to consider.

Sequence Quality

The simplest is obviously the quality score introduced with the FASTQ files above, which already gives a good idea of base call quality. As the quality of reads often degrades over the course of a sequence, it is common practice to determine the average quality of the first, second, third, ..., nth base by averaging over all reads in a file. To give some idea of the spread, box plots showing quantiles are usually given as well. This gives us an idea of what kind of trimming and quality filtering the data requires.
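The per-position average is simple to compute. This Python sketch assumes an offset of 33 and uses two invented quality strings of different lengths; the function name is hypothetical and tools such as FastQC report considerably more (quantiles, warnings) than this.

```python
def mean_quality_per_position(quality_strings, offset=33):
    """Average the quality at each read position over all reads."""
    totals = []
    counts = []
    for quality in quality_strings:
        for position, char in enumerate(quality):
            if position == len(totals):
                # First read long enough to reach this position.
                totals.append(0)
                counts.append(0)
            totals[position] += ord(char) - offset
            counts[position] += 1
    return [total / count for total, count in zip(totals, counts)]


# 'I' encodes quality 40 and '5' encodes quality 20 at offset 33.
toy_qualities = ["II5", "I5"]
means = mean_quality_per_position(toy_qualities)
print(means)
```

Positions reached by only some of the reads are averaged over those reads alone, which is why the counts are tracked per position.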

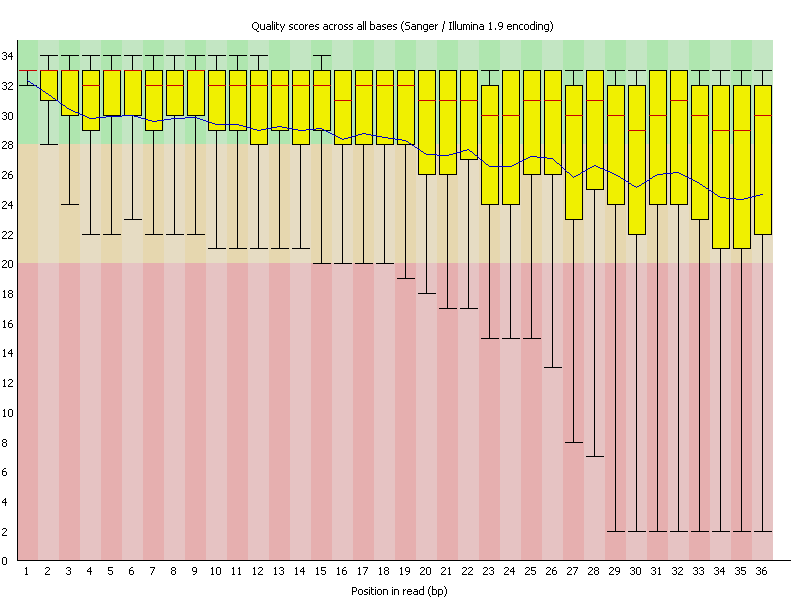

As an example here sequence data was investigated for quality using FastQC. As you can see the sequence reads are 36 bases long and the average sequence quality (depicted by the blue line) is steadily declining. In many new Illumina kits the sequence quality goes up a bit first before it steadily declines.

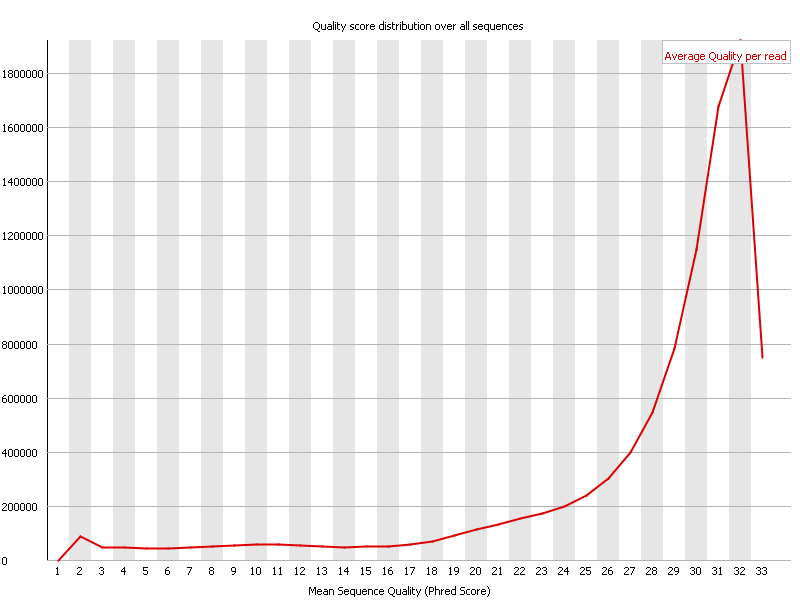

Instead of going over each base position, one can also average the quality of each read and plot these per-read averages.

In the above screenshot one can observe that most reads have an average quality of 32. This is generally considered very good; however, given that these reads are somewhat on the short side, it is probably at best an OK result.

Per Base Sequence Content

Another important metric is the base content at each position. Assuming the data is a random sample from the sequence space, the contribution of each base should be the same at every position, so one would expect to see straight horizontal lines. In reality the first few bases often show some erratic behaviour, which can be due to primers that are not completely random. In the example shown, however, the reads are completely off. As you can see, there is considerable bias in each base over the whole read. In fact this bias is so strong that you can read off the overrepresented bases of the read.

As an example if you look at the last few bases you can read them as CTTGAAA-end of sequence.
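The same reading-off can be done numerically. The sketch below, with hypothetical function names and four invented reads, tabulates the fraction of each base per position and then takes the most frequent base at each position.

```python
from collections import Counter


def base_content_per_position(sequences):
    """Return, for each read position, the fraction of each base seen there."""
    counters = []
    for sequence in sequences:
        for position, base in enumerate(sequence):
            if position == len(counters):
                counters.append(Counter())
            counters[position][base] += 1
    content = []
    for counter in counters:
        total = sum(counter.values())
        content.append({base: count / total for base, count in counter.items()})
    return content


def most_common_bases(content):
    """Join the most frequent base at each position into one string."""
    return "".join(max(fractions, key=fractions.get) for fractions in content)


toy_reads = ["ACGA", "ACTA", "ACGA", "TCGA"]
content = base_content_per_position(toy_reads)
dominant = most_common_bases(content)
print(content[0], dominant)
```

For an unbiased library every position would show fractions near the overall base composition, and the string of dominant bases would carry no meaning.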

Adapter sequence present or not?

If we now turn our attention to the overrepresented sequences in FastQC we can immediately figure out where this came from:

| Sequence | Count | Percentage | Possible Source |
|---|---|---|---|
| GATCGGAAGAGCTCGTATGCCGTCTTCTGCTTGAAA | 1870684 | 19.446980406066654 | Illumina Single End Adapter 1 (100% over 33bp) |
| GAAGAGCTCGTATGCCGTCTTCTGCTTGAAAAAAAA | 95290 | 0.9906017065918623 | Illumina Single End Adapter 1 (100% over 28bp) |

Intro to errors and quality scores/encoding

As mentioned above the base caller assigns a quality score which is then available for each base. This gives the estimated reliability for this base. Please note that depending on your sequencing platform the typical mistakes are different. Illumina's most prevalent form of mistake is a nucleotide exchange whereas 454, Ion Torrent/Proton and other similar platforms have major issues with homopolymers such as AAAAAA where the correct number of As can often not be determined exactly.
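The text does not spell out how a quality score relates to reliability. Under the standard Phred definition, a score Q corresponds to an estimated error probability p = 10^(-Q/10). A small Python sketch of that conversion (the function names are hypothetical):

```python
import math

def phred_to_error_probability(q):
    """Estimated probability that a base call with Phred score q is wrong."""
    return 10 ** (-q / 10)

def error_probability_to_phred(p):
    """Phred score corresponding to an error probability p (0 < p <= 1)."""
    return -10 * math.log10(p)

# Each step of 10 in the score reduces the error probability tenfold.
for q in (10, 20, 30, 40):
    print(q, phred_to_error_probability(q))
```

A score of 20 thus corresponds to roughly one expected error in 100 base calls, and a score of 30 to one in 1000.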

Preprocessing Steps

Sequence Quality Trimming

To cope with lower quality data it is common to remove low quality bases. Typically one would remove lower quality bases from, e.g., the 3' end using a sliding window approach, as the per-base quality gradually drops towards that end.
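A sliding window trimmer can be sketched as follows. This is a simplified illustration and not the exact algorithm of any particular tool: it scans from the 5' end, and cuts the read at the start of the first window whose mean quality falls below a threshold. The function name and default parameters are assumptions; Phred+33 encoding is assumed.

```python
def sliding_window_trim(seq, qual, window=4, min_mean=15, offset=33):
    """Cut the read at the first window whose mean quality is too low.

    Simplified sketch: everything from the start of the first failing
    window onwards is removed. Assumes Phred+33 encoded qualities.
    """
    scores = [ord(c) - offset for c in qual]
    if not scores:
        return seq, qual
    cut = len(seq)
    # Reads shorter than the window are evaluated as a single window.
    for start in range(max(len(scores) - window + 1, 1)):
        win = scores[start:start + window]
        if sum(win) / len(win) < min_mean:
            cut = start
            break
    return seq[:cut], qual[:cut]

# 'I' encodes Q40 and '#' encodes Q2: the quality collapses at the 3' end.
print(sliding_window_trim("ACGTACGTAC", "IIIIII####"))
```

Note that cutting at the start of the failing window can also discard a good base or two, which is a trade-off of this simple variant.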

Alternative clipping strategies (Adaptor clipping)

In addition to removing lower base quality data, one would also remove adapters, PCR primers and other artifacts. In practice one would combine the adapter clipping with quality trimming approaches.
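Adapter clipping can be illustrated with a minimal Python sketch. It uses exact string matching only, which is a deliberate simplification; real tools also tolerate mismatches. The function name and the `min_overlap` parameter are hypothetical.

```python
def clip_adapter(read, adapter, min_overlap=5):
    """Remove an adapter (or a partial adapter at the 3' end) from a read.

    Exact matching only; a sketch rather than a production method.
    """
    # Case 1: the full adapter occurs somewhere inside the read.
    idx = read.find(adapter)
    if idx != -1:
        return read[:idx]
    # Case 2: the read ends with a prefix of the adapter.
    longest = min(len(adapter), len(read)) - 1
    for overlap in range(longest, min_overlap - 1, -1):
        if read.endswith(adapter[:overlap]):
            return read[:-overlap]
    return read

adapter = "GATCGGAAGAGC"  # start of the adapter sequence shown in the table above
print(clip_adapter("ACGTACGT" + adapter + "TT", adapter))
print(clip_adapter("ACGTACGTGATCGGA", adapter))
```

Requiring a minimum overlap avoids clipping reads that merely happen to end in the first one or two adapter bases.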

K-mer filtering/correction strategies

There are different ways to correct for base errors using kmer approaches because some errors can not be simply clipped off and even a very good quality value does not mean that a read is really error free. This is an important step prior to an assembly but potentially less crucial for alignments.

One basic idea is based on kmers in the read string. The original idea, going back at least to 2001 (Pevzner 2001), first generates a spectrum of kmers. Kmers which occur at least a certain threshold number of times are considered trustworthy (called solid, or strong), whereas kmers below this threshold (weak) potentially arise from mistakes.

If a read is split into multiple kmers a single sequencing error will result in converting several overlapping kmers from strong to weak ones. An error correction step could now try to find the smallest number of changes required to make all kmers in the read strong.
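The effect of a single error on the kmers of a read can be seen in a small sketch. The code below builds a kmer spectrum with a plain in-memory counter and lists the positions of weak kmers in a read; the function names and the threshold are illustrative assumptions, and no correction step is attempted.

```python
from collections import Counter

def kmers(seq, k):
    """All overlapping kmers of a sequence, in order."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]

def kmer_spectrum(reads, k):
    """Count how often each kmer occurs over all reads."""
    spectrum = Counter()
    for read in reads:
        spectrum.update(kmers(read, k))
    return spectrum

def weak_kmer_positions(read, spectrum, k, threshold):
    """Start positions of kmers in the read that occur fewer than threshold times."""
    return [i for i, km in enumerate(kmers(read, k)) if spectrum[km] < threshold]

# Five correct copies of a sequence and one read with an error at index 4 (T -> A).
reads = ["ACGTTGCA"] * 5 + ["ACGTAGCA"]
spectrum = kmer_spectrum(reads, k=4)
print(weak_kmer_positions("ACGTAGCA", spectrum, k=4, threshold=2))
```

All four kmers that overlap the erroneous base are weak, while the error-free read contains none.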

Variants such as Quake also take the base quality into account to be better able to discriminate between low copy true kmers and high copy error kmers.

Digital Normalization and Partitioning

Especially for RNA sequencing, it is well known that normalizing RNA using molecular biology techniques (lab normalization) can help in providing better contigs and a better general representation. This is because lab normalization depletes common, or highly represented, sequences. Thus, when sampling from sequence space after lab normalization, it is less likely to find the previously very common sequences and more likely to find the previously underrepresented ones. Apart from the higher likelihood of finding underrepresented sequences, there is the additional advantage that it becomes less likely to find the same sequencing error in two or more independent reads due to sheer oversampling. The latter makes it less likely that assembly software would erroneously create multiple contigs out of one true mRNA due to these apparent, correlated SNPs. That said, lab normalization is not easy, and if it is outsourced it can be costly. Thus, one can instead use digital normalization. The basic idea is to downsample reads that consist mostly of highly abundant kmers. In addition to the benefits above, the number of reads to process becomes smaller, so it might be much more feasible (and faster) to assemble a transcriptome. One way to go about this is to use Titus Brown's tool set: http://ged.msu.edu/papers/2012-diginorm/
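The downsampling idea can be sketched as a single pass over the reads: a read is kept only if the median abundance of its kmers, counted over the reads kept so far, is still below a cutoff. The sketch below uses an exact in-memory counter for simplicity, which will not scale to real datasets; the function name and the default values of `k` and `cutoff` are illustrative assumptions.

```python
from collections import Counter
from statistics import median

def digital_normalize(reads, k=4, cutoff=3):
    """Keep a read only while the median count of its kmers is below cutoff.

    Toy sketch: exact kmer counts are held in memory and only the kmers
    of retained reads are counted.
    """
    counts = Counter()
    kept = []
    for read in reads:
        read_kmers = [read[i:i + k] for i in range(len(read) - k + 1)]
        if not read_kmers:
            continue  # read shorter than k
        if median(counts[km] for km in read_kmers) < cutoff:
            kept.append(read)
            counts.update(read_kmers)
    return kept

# Ten copies of an abundant transcript fragment and one rare read.
reads = ["ACGTACGA"] * 10 + ["TTGCATGC"]
print(digital_normalize(reads))
```

The abundant sequence is reduced to a few copies, while the rare read is retained.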

Paired end merging

A number of tools will take Illumina paired-end data and merge the reads if an overlap can be detected between them, potentially correcting errors by taking the higher quality basecall at discrepant positions. This may improve assembler performance by reducing the data complexity, and may also improve the resulting contigs by removing erroneous data and improving the assembly of repeats. Tools to accomplish this include COPE and FLASH.
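A much simplified sketch of paired-end merging is given below. It is not the algorithm used by COPE or FLASH: it simply tries the longest overlap first, accepts the first overlap with few enough mismatches, and keeps the base with the higher quality at discrepant positions. All names and thresholds are assumptions, and both reads are assumed to use the same quality encoding.

```python
COMPLEMENT = str.maketrans("ACGTN", "TGCAN")

def reverse_complement(seq):
    return seq.translate(COMPLEMENT)[::-1]

def merge_pair(seq1, qual1, seq2, qual2, min_overlap=5, max_mismatch_frac=0.1):
    """Merge a read pair if the end of read 1 overlaps the start of the
    reverse-complemented read 2. Returns (sequence, quality) or None."""
    s2 = reverse_complement(seq2)
    q2 = qual2[::-1]
    for overlap in range(min(len(seq1), len(s2)), min_overlap - 1, -1):
        tail, head = seq1[-overlap:], s2[:overlap]
        mismatches = sum(a != b for a, b in zip(tail, head))
        if mismatches > max_mismatch_frac * overlap:
            continue
        merged_seq = list(seq1[:-overlap])
        merged_qual = list(qual1[:-overlap])
        # In the overlap, keep the call with the higher quality character.
        for a, qa, b, qb in zip(tail, qual1[-overlap:], head, q2[:overlap]):
            if qa >= qb:
                merged_seq.append(a)
                merged_qual.append(qa)
            else:
                merged_seq.append(b)
                merged_qual.append(qb)
        merged_seq.extend(s2[overlap:])
        merged_qual.extend(q2[overlap:])
        return "".join(merged_seq), "".join(merged_qual)
    return None

fragment = "ACGTACGGATTCAGGCTA"
read1 = fragment[:12]
read2 = reverse_complement(fragment[6:])
print(merge_pair(read1, "I" * 12, read2, "I" * 12))
```

Real tools score candidate overlaps more carefully, since taking the first acceptable overlap can be misled by repeats.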

Removal of other undesirable sequences

Depending on the design of an experiment, there may be other sequences which are desirable to remove or mask from the reads prior to assembly. For example, if sequencing pools of BAC or cosmid DNA, it may be desirable to remove most if not all of the vector backbone. Similarly, E. coli sequences will contaminate BAC or cosmid DNA preparations and could be removed in advance. Removing these post-assembly is an option as well. The PhiX control viral DNA is a common contaminant in Illumina sequencing data. Fast search tools such as SMALT can be used to map reads against a reference genome in order to identify those which should be removed.

Exercise

QC workflow (Data from machine -> pre-trimming quality plots -> trimming -> post-trimming quality plots)

A typical workflow might thus be to first get the data from the machine and evaluate the typical quality plots as shown in the previous section. This gives an important insight into the read quality and might raise awareness of library preparation problems that have occurred. After these problems have been identified and noted down, one would try to remove several errors by using trimming tools such as Trimmomatic to remove low quality bases from the sequence end and (potentially more importantly) to also remove remaining adapters etc. from the reads. After having thus processed the reads, one would once again judge the quality to learn about remaining quality issues. As an example, even after removing known adapters from the sequences as in the above case, one might still see a per base sequence bias and would want to remove this bias or at least keep it in mind. We will discuss one exemplary workflow here.

The dataset

Download SRR074262 from the SRA (this is the example from above).

Fastq output analysis

Download FastQC (or analyze your data in RobiNA for similar plots). FastQC is relatively self explanatory. Open the FASTQ file you just downloaded. FastQC will run through your dataset, and you can then view the plots shown in the introduction by clicking on the individual categories on the left hand side.

Adapter removal only

We will use Trimmomatic to simply remove adapters.

java -jar trimmomatic-0.30.jar SE -phred33 SRR074262.fastq aclipped.fq.gz ILLUMINACLIP:TruSeq2-SE.fa:2:30:10 MINLEN:25

This tells Trimmomatic that the data is single-end (SE), that the quality encoding is phred 33 (modern Illumina), and that the results should be stored in the compressed file aclipped.fq.gz. It clips adapters using the TruSeq2-SE.fa adapter file provided with Trimmomatic, and MINLEN:25 drops reads that are shorter than 25 bases after clipping.

Trimmomatic report

Input Reads: 9619406 Surviving: 7443332 (77.38%) Dropped: 2176074 (22.62%) TrimmomaticSE: Completed successfully

FastQC automatically recognizes gz files, so having the file compressed is fine. Indeed, when we open the file aclipped.fq.gz in FastQC, the adapters have been removed.

| Sequence | Count | Percentage | Possible Source |
|---|---|---|---|
| GGGGAGGAGAGAGCCATTGTTGAGGCGGCCATTGAG | 46910 | 0.630228505190954 | No Hit |
| AGGGGAGGAGAGAGCCATTGTTGAGGCGGCCATTGA | 21029 | 0.28252132244000405 | No Hit |

Adapter Removal and quality clipping

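We will use Trimmomatic to remove adapters and use a sliding window approach to remove lower quality bases at the end. In the command below, LEADING:3 and TRAILING:3 remove bases below quality 3 from the start and end of each read, and SLIDINGWINDOW:4:15 scans the read with a 4-base window, cutting once the average quality within the window drops below 15.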

java -jar trimmomatic-0.30.jar SE -phred33 SRR074262.fastq aclippedtrim.fq.gz ILLUMINACLIP:TruSeq2-SE.fa:2:30:10 LEADING:3 TRAILING:3 SLIDINGWINDOW:4:15 MINLEN:25

Input Reads: 9619406 Surviving: 6513310 (67.71%) Dropped: 3106096 (32.29%) TrimmomaticSE: Completed successfully

Read correction

Links to useful tools for preprocessing

Links to resources used in this chapter

Links to other QC tools e.g. FASTX toolkit and spectral alignment for error correction

Alignment

This page in a nutshell: Given sequencing data (reads) and the reference sequence for the species, comparing the reads to the reference is an easy way to detect small variations in the sequenced sample, such as SNPs and short InDels.

Introduction

Alignment, also called mapping,[1] of reads is an essential step in re-sequencing. Having sequenced an organism of a species before, and having constructed a reference sequence, re-sequencing more organisms of the same species allows us to see the genetic differences to the reference sequence, and, by extension, to each other. Alignment of data from these re-sequenced organisms is a relatively simple method of detecting variation in samples. There are certain instances (such as new genes in the sequenced sample that are not found in the existing reference sequence) that cannot be detected by alignment alone; however, while other approaches, such as de novo assembly, are potentially more powerful, they are also much harder or, for some organisms, impossible to achieve with current sequencing methods.

Next-generation sequencing generally produces short reads or short read pairs, meaning short sequences of <~200 bases (as compared to long reads by Sanger sequencing, which cover ~1000 bases). To compare the DNA of the sequenced sample to its reference sequence, we need to find the corresponding part of that sequence for each read in our sequencing data. This is called aligning or mapping the reads against the reference sequence. Once this is done, we can look for variation (e.g. SNPs) within the sample. This poses a number of problems:

- The short reads do not come with position information, that is, we do not know what part of the genome they came from; we need to use the sequence of the read itself to find the corresponding region in the reference sequence.

- The reference sequence can be quite long (~3 billion bases for human), making it a daunting task to find a matching region.

- Since our reads are short, there may be several, equally likely places in the reference sequence from which they could have been read. This is especially true for repetitive regions.

- If we were only looking for perfect matches to the reference, we would never see any variation. Therefore, we need to allow some mismatches and small structural variation (InDels) in our reads.

- Any sequencing technology produces errors. Similar to the "real" variation, we need to tolerate a low level of sequencing errors in our reads, and separate them from the "real" variation later.

- We need to do that for each of the millions of reads in our sequencing data.

Short reads

Raw short reads often come in (or can be converted into) a file format called FASTQ.[2] It is a plain text format, containing the sequence and quality scores for every read, where each single read normally occupies four consecutive lines:

    @Read_id_1
    CTGATGTGCCGCCTCACTTCGGTGGT
    +
    @@@DDDDDH8<BAHG@BHGIHIII>(
    @Read_id_2
    TGATGTGCCGCCTCACTACGGTGGTG
    +
    FHHHHHJIJIJIJIIIJJIIJGIGII
    @Read_id_3
    ...

The four lines are:

- The name/ID of the read, preceded by a "@". For read pairs, there will be two entries with that name, either in the same or a second FASTQ file.

- The sequence of the read.

- A "+" sign. In very old FASTQ files, this is followed by the read name from the first line. Today, this line is present for backwards compatibility only.

- The quality scores of the bases from line 2. The scores are generated by the sequencing machine, and encoded as ASCII (33+score) characters. The line should have the same length as line 2, as there is one quality score per base.
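The four-line layout and the ASCII (33+score) encoding described above can be read with a short Python sketch. It assumes strictly four lines per record (no wrapped sequence lines); the function name `parse_fastq` is hypothetical.

```python
import io

def parse_fastq(handle):
    """Yield (name, sequence, scores) for each four-line FASTQ record.

    Assumes exactly four lines per record and Phred+33 encoded qualities.
    """
    while True:
        header = handle.readline().rstrip("\n")
        if not header:
            break
        seq = handle.readline().rstrip("\n")
        handle.readline()  # the "+" separator line is ignored
        qual = handle.readline().rstrip("\n")
        scores = [ord(c) - 33 for c in qual]
        yield header[1:], seq, scores

# First record from the example above.
example = io.StringIO(
    "@Read_id_1\n"
    "CTGATGTGCCGCCTCACTTCGGTGGT\n"
    "+\n"
    "@@@DDDDDH8<BAHG@BHGIHIII>(\n"
)
for name, seq, scores in parse_fastq(example):
    print(name, len(seq), scores)
```

For real files the same function can be passed an open file handle instead of the `io.StringIO` object.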

Alignment

For each of the short reads in the FASTQ file, a corresponding location in the reference sequence needs to be determined (or it needs to be established that no such location exists). This is achieved by comparing the sequence of the read to that of the reference sequence. A mapping algorithm will try to locate a (hopefully unique) location in the reference sequence that matches the read, while tolerating a certain amount of mismatch to allow subsequent variation detection. Reads aligned (mapped) to a reference sequence will look like this:

    GCTGATGTGCCGCCTCACTTCGGTGGTGAGGTG   Reference sequence
     CTGATGTGCCGCCTCACTTCGGTGGT         Short read 1
      TGATGTGCCGCCTCACTACGGTGGTG        Short read 2
       GATGTGCCGCCTCACTTCGGTGGTGA       Short read 3
    GCTGATGTGCCGCCTCACTACGGTG           Short read 4
    GCTGATGTGCCGCCTCACTACGGTG           Short read 5

You can see the reference sequence on the top row, and five short reads stacked below; this is called a pileup. While two of the reads are a perfect match to the reference, the three other reads each show a mismatch ("A" in the read, instead of "T" in the reference). Since there are multiple reads showing the mismatch, at the same position, with the same difference, one could conclude that it is an actual genetic difference (point mutation or SNP), rather than a sequencing error or mismapping.
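The reasoning about the pileup can be reproduced with a small Python sketch that counts non-reference bases per reference position. The 0-based start offsets of the reads are not given in the text; they are inferred here from where each read matches the reference, and the function name is hypothetical.

```python
from collections import Counter, defaultdict

reference = "GCTGATGTGCCGCCTCACTTCGGTGGTGAGGTG"
# (start offset in the reference, read sequence); offsets are inferred.
aligned_reads = [
    (1, "CTGATGTGCCGCCTCACTTCGGTGGT"),  # Short read 1
    (2, "TGATGTGCCGCCTCACTACGGTGGTG"),  # Short read 2
    (3, "GATGTGCCGCCTCACTTCGGTGGTGA"),  # Short read 3
    (0, "GCTGATGTGCCGCCTCACTACGGTG"),   # Short read 4
    (0, "GCTGATGTGCCGCCTCACTACGGTG"),   # Short read 5
]

def pileup_mismatches(reference, aligned_reads):
    """Map reference position -> counts of non-reference bases seen there."""
    mismatches = defaultdict(Counter)
    for start, read in aligned_reads:
        for i, base in enumerate(read):
            if base != reference[start + i]:
                mismatches[start + i][base] += 1
    return {pos: dict(counts) for pos, counts in mismatches.items()}

print(pileup_mismatches(reference, aligned_reads))
```

A single position supported by three reads with the same alternative base is the kind of signal a variant caller would consider a candidate SNP.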

Mapping algorithms

There are several alignment algorithms in existence; you can find an (incomplete) list further down in software packages. Some notes on mapping algorithms:

- The reference sequence, the short reads, or both, are often pre-processed into an indexed form for rapid searching. (See BWT)
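To illustrate why an index speeds up the search, the sketch below uses a plain hash table of kmers rather than a BWT-based index, which is a deliberate substitution for simplicity. Each kmer of a read votes for the reference position at which the read would have to start; the function names and the choice of `k` are illustrative assumptions.

```python
from collections import Counter, defaultdict

def build_kmer_index(reference, k):
    """Map every kmer of the reference to the positions where it occurs."""
    index = defaultdict(list)
    for i in range(len(reference) - k + 1):
        index[reference[i:i + k]].append(i)
    return index

def candidate_positions(read, index, k):
    """Rank candidate start positions of the read by number of kmer hits."""
    votes = Counter()
    for offset in range(len(read) - k + 1):
        for pos in index.get(read[offset:offset + k], []):
            votes[pos - offset] += 1
    return votes.most_common()

reference = "GCTGATGTGCCGCCTCACTTCGGTGGTGAGGTG"
index = build_kmer_index(reference, k=8)
# A read with one mismatch still collects hits from its error-free kmers.
print(candidate_positions("TGATGTGCCGCCTCACTACGGTGGTG", index, k=8))
```

The index is built once per reference, so each read only needs a handful of dictionary lookups instead of a scan of the whole sequence. The candidates would then be verified by a proper alignment that tolerates mismatches and InDels.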

Sources of errors

There are several potential sources for errors in an alignment, including (but not limited to):

- Polymerase Chain Reaction artifacts (PCR artifacts). Many NGS methods involve one or multiple PCR steps. PCR errors will show as mismatches in the alignment, and especially errors in early PCR rounds will show up in multiple reads, falsely suggesting genetic variation in the sample. A related error would be PCR duplicates, where the same read pair occurs multiple times, skewing coverage calculations in the alignment.

- Sequencing errors. The sequencing machine can make an erroneous call, either for physical reasons (e.g. oil on an Illumina slide), or due to properties of the sequenced DNA (e.g., homopolymers). As sequencing errors are often random, they can be filtered out as singleton reads during variant calling.