OpenMP/Printable version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/OpenMP

Overview

This book is about OpenMP, a language extension to C (and C++) that allows for easy parallel programming. To understand what OpenMP makes possible, it is first important to know what parallel programming is and what it isn't.

Parallel programming means writing software that operates on several compute devices at the same time. For the purpose of this book, a "compute device" is primarily a processor (CPU) or a processor core within a multicore CPU, which is the focus of OpenMP 3. In other settings, a compute device may be a full-blown computer within a cluster, or a different type of device such as a graphics processing unit (GPU). Intel has devised an extension of OpenMP for cluster computing, and OpenMP 4 adds support for executing code on non-CPU devices, but both are outside the scope of this book.

Parallel programming is mainly done to speed up computations that have to be fast. Ideally, if you have n compute devices (cores) to run a program, you'd want the program to be n times as fast as when it runs on a single device. This ideal is rarely reached in practice, because the compute devices need to communicate with each other, sending and waiting for messages such as "give me the result of your computation". Still, parallel programming can speed up many applications by a factor almost equal to n.

Parallel programming should be distinguished from concurrent programming, which means breaking down a program into processes that conceptually run at the same time. There is considerable overlap between parallel and concurrent programs: both involve decomposing a task into distinct subtasks that can be executed separately, and concurrent programs can benefit from having their tasks run on multiple compute devices in parallel. However, concurrent programming in general is a different art from parallel programming, mainly because concurrent tasks usually communicate with each other in different ways than parallel tasks do.

OpenMP adds support for parallel programming to C in a very clean way. Unlike with thread libraries, existing programs need few changes to run on multiple processors in parallel. In fact, the basic constructs of OpenMP are so non-intrusive that programs using them but compiled by a compiler that doesn't support OpenMP will still work (although sequentially, of course). To see what that means, consider the following C function:

#include <math.h>    // for sin
#include <stddef.h>  // for size_t

void broadcast_sin(double *a, size_t n)
{
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++) {
        a[i] = sin(a[i]);
    }
}

This function "broadcasts" the sine function over an array, storing its results back into that same array. When OpenMP is enabled in the compiler, it will in fact do so using multiple threads, but without OpenMP, it will still function and produce the exact same effect.

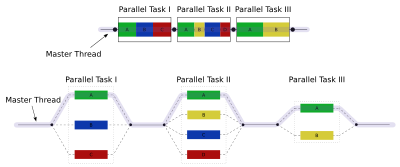

OpenMP programs work according to a so-called fork–join model, illustrated in the figure. The OpenMP runtime maintains a thread pool, and every time a parallel region is encountered, it distributes work over the threads in the pool (the fork). When all threads have finished, they wait for each other at the end of the region (the join), and sequential execution resumes.
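To see the fork–join model in action, the following sketch (fork_join_demo is a name made up for this illustration) opens a parallel region in which every thread reports its number, and then returns to sequential code. The #ifdef _OPENMP guards let it compile without OpenMP as well, in which case it simply runs with a single thread.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>  /* runtime functions; only available when OpenMP is enabled */
#endif

/* Forks a team of threads, lets each one report itself, joins them
   again and returns the size of the team that ran the region. */
int fork_join_demo(void)
{
    int team_size = 1;             /* sequential part: one thread */

    #pragma omp parallel           /* fork: a team of threads starts here */
    {
        int id = 0, nthreads = 1;  /* fallbacks for a non-OpenMP build */
#ifdef _OPENMP
        id = omp_get_thread_num();
        nthreads = omp_get_num_threads();
#endif
        printf("thread %d of %d is running\n", id, nthreads);

        #pragma omp single         /* exactly one thread records the team size */
        team_size = nthreads;
    }                              /* join: implicit barrier at the end of the region */

    printf("sequential again, the team had %d thread(s)\n", team_size);
    return team_size;
}
```

Called from a main function and run with, for example, OMP_NUM_THREADS=4, this should print one line per thread, in an order that may change from run to run, followed by the single sequential line.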

Setup

Setting up your compiler

To do any useful OpenMP programming, you will need a compiler that supports it.

- The GNU Compiler Collection (GCC) has supported OpenMP 3.1 since version 4.7. It comes with practically any Linux system; on Ubuntu, for instance, you can install it (and a set of related tools) with sudo apt-get install build-essential. To find out which version of GCC you have installed, run gcc --version on the command line.

- The Intel C Compiler supports OpenMP.

- The Clang compiler, shipped with Apple's Xcode, does not support OpenMP as of April 2014, but an experimental version by Intel does.

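- Microsoft Visual C++ supports OpenMP 2.0 through its /openmp switch; that version lacks features used later in this book, such as tasks, and support for newer versions varies by compiler release.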

- The LLVM-GCC compiler, shipped with older versions of Apple's Xcode, does not support OpenMP.

The OpenMP website has a more complete list of compilers with OpenMP support.
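A quick way to check that your compiler and flags actually enable OpenMP is to test the _OPENMP macro, which an OpenMP-enabled compiler defines as the year and month (yyyymm) of the specification it supports. The sketch below relies only on that macro; the function names are illustrative.

```c
#include <stdio.h>

/* Returns the value of _OPENMP (the yyyymm date of the supported
   OpenMP specification), or 0 if OpenMP was not enabled at compile time. */
long openmp_spec_date(void)
{
#ifdef _OPENMP
    return _OPENMP;
#else
    return 0;
#endif
}

/* Prints a human-readable summary of the check above. */
void report_openmp_support(void)
{
    long date = openmp_spec_date();
    if (date == 0)
        printf("OpenMP is not enabled (did you pass -fopenmp?)\n");
    else
        printf("OpenMP is enabled, _OPENMP = %ld\n", date);
}
```

Called from main and compiled with gcc -fopenmp, GCC 4.7 should report 201107 (July 2011, i.e. OpenMP 3.1); newer compilers report later dates. Compiled without the flag, the program reports that OpenMP is disabled.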

Apart from a compiler, we'll also be using the make tool in this book. You can do without it if you prefer a different build system.

Loops

A motivating example

Assume we need to parallelize the following sequential code.

void VectorAdd(int *a, int *b, int N)
{
    for (int i = 0; i < N; i++) {
        a[i] = a[i] + b[i];
    }
}

One way to parallelize it is to give each thread a contiguous subset of the iterations:

#include <omp.h> // for the OpenMP runtime functions

void VectorAddParallel(int *a, int *b, int N)
{
    omp_set_num_threads(24);

    #pragma omp parallel
    {
        int id, Nthrds, istart, iend;
        id = omp_get_thread_num();
        Nthrds = omp_get_num_threads();
        istart = id * N / Nthrds;
        iend = (id + 1) * N / Nthrds;
        if (id == Nthrds - 1)
            iend = N;
        for (int i = istart; i < iend; i++) {
            a[i] = a[i] + b[i];
        }
    }
}

The call omp_set_num_threads(24) in the above code requests 24 threads for the parallel regions that follow (here, the scope of #pragma omp parallel). However, there is no guarantee that we will get exactly as many threads as we ask for, due to resource limitations and environment constraints. Therefore, we call omp_get_num_threads() within the parallel region to find out the actual number of threads. Then we compute the first and last iterations for each thread: the thread whose number is id (as returned by omp_get_thread_num()) executes the iterations from istart up to, but not including, iend. For example, thread 0 handles iterations 0 through N/Nthrds - 1. Each thread gets a private instance of the loop index variable, and private instances of all variables declared within the parallel region.

We can simplify VectorAddParallel with OpenMP's for work-sharing construct, which may be combined with the parallel directive as #pragma omp parallel for:

void VectorAddParallelSimplified(int *a, int *b, int N)
{
    #pragma omp parallel for
    for (int i = 0; i < N; i++) {
        a[i] = a[i] + b[i];
    }
}
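The combined #pragma omp parallel for is shorthand for a parallel region that contains a for work-sharing construct. The sketch below (function names are illustrative) writes the two constructs separately, and also shows an "orphaned" #pragma omp for in a function of its own, whose iterations are divided among threads only when the function is called from inside a parallel region.

```c
/* Same effect as VectorAddParallelSimplified, with the parallel region
   and the for work-sharing construct written as separate directives. */
void vector_add_split(int *a, const int *b, int n)
{
    #pragma omp parallel          /* create the team of threads */
    {
        /* per-thread setup work could go here */
        #pragma omp for           /* divide the iterations among the team */
        for (int i = 0; i < n; i++) {
            a[i] = a[i] + b[i];
        }                         /* implicit barrier at the end of the for */
    }
}

/* An orphaned for: called outside any parallel region, the loop is
   executed entirely by the one thread that calls it. */
void vector_add_orphaned(int *a, const int *b, int n)
{
    #pragma omp for
    for (int i = 0; i < n; i++) {
        a[i] = a[i] + b[i];
    }
}
```

Writing the region and the loop separately is useful when each thread has to do some setup of its own before the shared loop starts.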

The #pragma omp for directive only divides loop iterations among the threads of an enclosing parallel region; outside of one, the loop is simply executed by a single thread. Likewise, it is safe to assume that code which calls OpenMP library functions but never uses #pragma omp parallel is not parallelized by OpenMP.
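The index arithmetic in VectorAddParallel can be tried out without any threads. The hypothetical helper block_range below computes the half-open range [istart, iend) exactly as that parallel region does; for N = 10 and 4 threads it yields iterations 0 to 1, 2 to 4, 5 to 6 and 7 to 9, so every iteration is assigned to exactly one thread.

```c
#include <stdio.h>

/* Thread `id` of `nthrds` handles iterations [*istart, *iend) of a loop
   of length n, using the same formulas as VectorAddParallel. */
void block_range(int id, int nthrds, int n, int *istart, int *iend)
{
    *istart = id * n / nthrds;
    *iend = (id + 1) * n / nthrds;   /* equals n when id == nthrds - 1 */
}

/* Prints the range of every thread for a given team size and loop length. */
void print_ranges(int nthrds, int n)
{
    for (int id = 0; id < nthrds; id++) {
        int s, e;
        block_range(id, nthrds, n, &s, &e);
        printf("thread %d: iterations %d to %d\n", id, s, e - 1);
    }
}
```

Because Nthrds * N / Nthrds is exactly N, the last thread's iend already equals N, so the special case in VectorAddParallel is redundant but harmless. For very large N, the product id * N can overflow an int; computing it in a wider type such as long long avoids that.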

Reductions

Summing floating point numbers

For our first parallel program, we turn to an age-old problem: summing an array of floating point numbers. The basic algorithm to solve this problem is so simple that it allows us to focus on OpenMP features rather than algorithmic details, but we'll see in a bit that the problem is actually less trivial than it appears at first.

Without further ado, here's a sequential algorithm to sum a list of floating point numbers:

#include <stddef.h> // for size_t

float sum(const float *a, size_t n)
{
    float total;
    size_t i;

    for (i = 0, total = 0.; i < n; i++) {
        total += a[i];
    }
    return total;
}

As far as algorithms go, this one is as simple as it gets. Put the definition above in a file isum.c (iterative sum).

- If you have experience dealing with floating point numbers, you might be tempted to make total a double instead of a float for added precision. Don't do that just yet, as we'll solve the precision issue in a different way in a bit.

To test our algorithm, we need a driver program, which we'll put in a file main.c:

#include <stdio.h>
#include <stdlib.h>

float sum(const float *, size_t);

#define N 1000000 // we'll sum this many numbers

int main(void)
{
    float *a = malloc(N * sizeof(float));
    if (a == NULL) {
        perror("malloc");
        return 1;
    }

    // fill the array a
    for (size_t i = 0; i < N; i++) {
        a[i] = .000001;
    }

    printf("%f\n", sum(a, N));
    free(a);
    return 0;
}

And finally we need some way to build this program. On Linux/Unix/OS X, the following Makefile should do the job. It assumes you're using GCC.

# C99 extensions are not necessary for OpenMP, but very convenient
CFLAGS = -fopenmp -Wall -std=c99
LDFLAGS = -fopenmp
OBJS = main.o isum.o

# when copy-pasting the following, be aware that the indent must be a tab, not spaces
sum: $(OBJS)
	$(CC) $(LDFLAGS) -o sum $(OBJS)

Now compile the program with make sum, run it and see how fast it is with a tool such as time. If it's too fast to measure, consider changing N to a larger number, or run sum in a loop instead of just once.
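Instead of an external tool like time, the program can also time itself. OpenMP provides omp_get_wtime(), which returns elapsed wall-clock time in seconds. The sketch below (the helper names now_seconds and time_sum are made up) averages several calls of a summation function, and falls back to the standard clock() when compiled without OpenMP.

```c
#include <stddef.h>
#include <time.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Seconds from OpenMP's wall-clock timer. Without OpenMP the program is
   single-threaded, so clock() (processor time) is a usable stand-in. */
static double now_seconds(void)
{
#ifdef _OPENMP
    return omp_get_wtime();
#else
    return (double)clock() / CLOCKS_PER_SEC;
#endif
}

/* Calls f(a, n) `reps` times (reps > 0) and returns the average number of
   seconds per call. The running total goes through `sink` so that the
   compiler cannot discard the calls as unused. */
double time_sum(float (*f)(const float *, size_t),
                const float *a, size_t n, int reps, float *sink)
{
    float acc = 0.0f;
    double start = now_seconds();
    for (int r = 0; r < reps; r++) {
        acc += f(a, n);
    }
    double elapsed = now_seconds() - start;
    *sink = acc;
    return elapsed / reps;
}
```

In main.c one could, for example, replace the printf call with float s; double t = time_sum(sum, a, N, 100, &s); and print t. Wall-clock time is the right measure for parallel code: clock() adds up the CPU time of all threads, which would hide any speedup.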

A parallel way of summing

We had to go through a bit of setup, but now we're ready to make a parallel sum algorithm for floating point numbers. Here it is:

#include <stddef.h> // for size_t

float sum(const float *a, size_t n)
{
    float total = 0.;

    #pragma omp parallel for reduction(+:total)
    for (size_t i = 0; i < n; i++) {
        total += a[i];
    }
    return total;
}

#pragma omp parallel for turns the loop into a parallel loop. If you have two cores, OpenMP will (probably) use two threads that each run half of the loop. The reduction(+:total) clause declares that we're reducing the input array by summing into the variable total: each thread gets a private copy of total, initialized to zero, and after the partial loops are done, the private copies are summed into the original variable.

Put this in isum.c and recompile. Now run the program. Do you get the same output as before?

- Exercise: run the program with various settings for the environment variable OMP_NUM_THREADS, which controls the size of the thread pool that OpenMP uses. Try 1, 2, 4 and 8. Do you see the same results for each setting? Now try an absurdly large number of threads, e.g. 16000. How does this affect performance?

- Exercise: the dot product of two vectors is the sum of products of their respective entries, $\sum_{i=1}^{n} a_i b_i$. Adapt the sum function to a dot function for computing dot products in parallel.
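To make the reduction clause less magical, here is a rough hand-written equivalent. It is a sketch only: sum_manual_reduction is a hypothetical name, and real implementations may combine the partial results differently. Each thread accumulates a private partial sum over its share of the iterations, and the partial sums are then added to the shared total under #pragma omp atomic.

```c
#include <stddef.h>

/* Roughly what reduction(+:total) arranges: private partial sums,
   combined into the shared result one thread at a time. */
float sum_manual_reduction(const float *a, size_t n)
{
    float total = 0.0f;               /* shared by all threads */

    #pragma omp parallel
    {
        float partial = 0.0f;         /* private: declared inside the region */

        #pragma omp for
        for (size_t i = 0; i < n; i++) {
            partial += a[i];
        }

        #pragma omp atomic            /* combine without a data race */
        total += partial;
    }
    return total;
}
```

Writing total += a[i] directly inside a plain #pragma omp parallel for, without the reduction clause, would be a data race. Note also that the order in which the partial sums are combined is unspecified, so with floating point numbers the result can depend on the number of threads.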

Tasks

If you followed along with the previous chapter and did the exercises, you'll have discovered that the sum function we developed is numerically quite unstable. (To be fair, we set up the data to show the instability.) The naive way of summing accumulates round-off error, and the error grows in proportion to the number of elements we sum. We can fix this by changing the summation algorithm. There are three candidates for a stable sum algorithm:

- Sort the numbers from small to large, then sum them as before. Problem: this changes the complexity of the problem from linear to $O(n \log n)$.

- Use Kahan's algorithm. While this algorithm takes linear time and is very stable, it is quite slow in practice and harder to parallelize than our third alternative, which is

- divide-and-conquer recursion. The round-off error for such an algorithm is $O(\log n)$, i.e. proportional to the logarithm of the number of elements.
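For comparison, a sequential sketch of Kahan's compensated summation, the second candidate above, might look like this (kahan_sum is an illustrative name, not part of the program we are building).

```c
#include <stddef.h>

/* Kahan (compensated) summation: `c` holds the low-order bits lost in
   the previous addition and feeds them back into the next one. */
float kahan_sum(const float *a, size_t n)
{
    float total = 0.0f;
    float c = 0.0f;                  /* running compensation */
    for (size_t i = 0; i < n; i++) {
        float y = a[i] - c;          /* correct the next term */
        float t = total + y;         /* low-order bits of y may be lost here */
        c = (t - total) - y;         /* recover what was lost (negated) */
        total = t;
    }
    return total;
}
```

The loop-carried compensation c is what makes this algorithm awkward to parallelize directly. Aggressive floating-point optimization flags such as GCC's -ffast-math may let the compiler simplify (t - total) - y to zero and remove the compensation, so the sketch assumes they are not used.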

The basic divide and conquer summation is very easy to express in C:

float sum(const float *a, size_t n)
{
    // base cases
    if (n == 0) {
        return 0;
    }
    else if (n == 1) {
        return *a;
    }

    // recursive case
    size_t half = n / 2;
    return sum(a, half) + sum(a + half, n - half);
}

If you use this definition of sum in the program we developed for the previous chapter, you'll see that it produces exactly the expected result. But this algorithm doesn't have a loop, so how do we make a parallel version using OpenMP?

We'll use the task construct in OpenMP, treating the problem as task-parallel instead of data-parallel. A first task-recursive version of sum looks like this:

float sum(const float *a, size_t n)
{
    // base cases
    if (n == 0) {
        return 0;
    }
    else if (n == 1) {
        return *a;
    }

    // recursive case
    size_t half = n / 2;
    float x, y;

    #pragma omp parallel
    #pragma omp single nowait
    {
        #pragma omp task shared(x)
        x = sum(a, half);
        #pragma omp task shared(y)
        y = sum(a + half, n - half);
        #pragma omp taskwait
        x += y;
    }
    return x;
}

We introduced two tasks, each of which sets a variable declared shared, so that the value it computes is visible to the code that created it. If a variable were not shared, the task would set its own private copy and then throw the result away. We then wait for the tasks to complete with #pragma omp taskwait and combine the recursive results.
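Whether a task writes to the creating code's variable or to its own copy depends on its data-sharing attributes. The sketch below (task_sharing_demo is a hypothetical name) gives one task shared(s) and another firstprivate(f): after the taskwait, s holds the value written by its task, while f is unchanged because its task only modified a private copy. In the first task-parallel sum, x and y are declared outside the parallel region and would be shared by default anyway; the clauses become essential when tasks are created in a function called from inside a parallel region, whose local variables default to firstprivate in tasks.

```c
/* Sets one variable from a shared task and another from a firstprivate
   task, then hands both values back to the caller. */
void task_sharing_demo(int *via_shared, int *via_firstprivate)
{
    int s = 0, f = 0;

    #pragma omp parallel
    #pragma omp single nowait
    {
        #pragma omp task shared(s)
        s = 42;                  /* writes the enclosing s */

        #pragma omp task firstprivate(f)
        f = 42;                  /* writes only the task's own copy of f */

        #pragma omp taskwait     /* both tasks have finished here */
    }

    *via_shared = s;             /* 42 */
    *via_firstprivate = f;       /* still 0 when compiled with OpenMP */
}
```

Compiled without OpenMP, the pragmas are ignored and both assignments run directly, so f ends up as 42 as well; this is a case where data-sharing clauses change what a program computes.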

You may be surprised by the #pragma omp parallel followed immediately by #pragma omp single nowait. The first pragma causes all threads in the pool to execute the following block of code. The single directive then lets only one of them (typically the first to reach it) execute the block, and the nowait clause removes the barrier at the end of the single construct; there is already a barrier at the end of the enclosing parallel region, to which the other threads rush. While they wait there, those threads can execute the tasks that the single thread creates.

Unfortunately, if you actually try to run the task-parallel sum, you'll find that it's still not very fast. The reason is that the tasks are much too fine-grained: near the bottom of the recursion tree, invocations are splitting two-element arrays into subtasks that process one element each. We can solve this problem by introducing, apart from the base and recursive cases, an "intermediate case" for the recursion which is recursive but does not set up parallel tasks: once the recursion reaches a prespecified cutoff, it no longer creates tasks for the OpenMP thread pool, but just does the recursive sum itself.

- Exercise: introduce the additional case in the recursion and measure how fast the program is. Don't peek ahead to the next program, because it contains the solution to this exercise.

Now we effectively have two recursions rolled into one: one with a parallel recursive case, and a serial one. We can disentangle the two to get better performance, by doing fewer checks at each level. We also move the parallel setup code into a driver function, so that a single parallel region is created for the whole computation instead of one per recursive call.

#include <stddef.h>

#define CUTOFF 100 // arbitrary

static float parallel_sum(const float *, size_t);
static float serial_sum(const float *, size_t);

float sum(const float *a, size_t n)
{
    float r;

    #pragma omp parallel
    #pragma omp single nowait
    r = parallel_sum(a, n);

    return r;
}

static float parallel_sum(const float *a, size_t n)
{
    // base case
    if (n <= CUTOFF) {
        return serial_sum(a, n);
    }

    // recursive case
    float x, y;
    size_t half = n / 2;

    #pragma omp task shared(x)
    x = parallel_sum(a, half);
    #pragma omp task shared(y)
    y = parallel_sum(a + half, n - half);
    #pragma omp taskwait
    x += y;

    return x;
}

static float serial_sum(const float *a, size_t n)
{
    // base cases
    if (n == 0) {
        return 0.;
    }
    else if (n == 1) {
        return a[0];
    }

    // recursive case
    size_t half = n / 2;
    return serial_sum(a, half) + serial_sum(a + half, n - half);
}

This technique works better when the code inside the parallel tasks spends more time computing and less time doing memory accesses, because those may need to be synchronized between processors.
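The driver in the final version of sum relies on a pattern worth isolating: a parallel region in which a single thread creates tasks while the rest of the team, waiting at the region's closing barrier, executes them. The sketch below (run_tasks is an illustrative name) creates ntasks trivial tasks that each increment a shared counter atomically; all of them are guaranteed to have finished once the parallel region ends.

```c
/* One thread (inside single) creates all tasks; the other threads of the
   team can pick them up at the barrier that ends the parallel region.
   Returns how many tasks ran. */
int run_tasks(int ntasks)
{
    int done = 0;                     /* shared counter */

    #pragma omp parallel
    #pragma omp single nowait
    {
        for (int t = 0; t < ntasks; t++) {
            #pragma omp task shared(done)
            {
                #pragma omp atomic
                done++;
            }
        }
    }   /* implicit barrier: every task has completed past this point */

    return done;
}
```

run_tasks(100) should return 100 with any number of threads, and also when compiled without OpenMP, where the tasks simply run one after another.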