Cochlear Implants

A cochlear implant (CI) is a surgically implanted electronic device that replaces the mechanical parts of the auditory system by directly stimulating the auditory nerve fibers through electrodes inside the cochlea. Candidates for cochlear implants are people with severe to profound sensorineural hearing loss in both ears and a functioning auditory nervous system. Cochlear implants are used by post-lingually deaf people to regain some comprehension of speech and other sounds, and by pre-lingually deaf children to enable them to acquire spoken language skills. (Hearing loss in newborns and infants is diagnosed using otoacoustic emissions and/or recordings of auditory evoked potentials.) A more recent development is the use of bilateral implants, which allow recipients basic sound localization.

Parts of the cochlear implant

The implant is surgically placed under the skin behind the ear. The basic parts of the device include:

External:

- a microphone, which picks up sound from the environment,

- a speech processor, which selectively filters sound to prioritize audible speech and sends the electrical sound signals through a thin cable to the transmitter,

- a transmitter, which is a coil held in position by a magnet placed behind the external ear, and which transmits the processed sound signals to the internal device by electromagnetic induction.

Internal:

- a receiver and stimulator secured in bone beneath the skin, which converts the signals into electric impulses and sends them through an internal cable to the electrodes,

- an array of up to 24 electrodes wound through the scala tympani of the cochlea, which deliver the impulses to the auditory nerve fibers, from where they are relayed to the brain through the auditory nerve system.

Signal processing for cochlear implants

In normal-hearing subjects, the primary information carrier for speech signals is the envelope, whereas for music it is the fine structure. This is also relevant for tonal languages, like Mandarin, where the meaning of words depends on their intonation. It was also found that interaural time delays coded in the fine structure, rather than those coded in the envelope, determine where a sound is heard from, although it is still the speech signal coded in the envelope that is perceived.

The speech processor in a cochlear implant transforms the microphone input signal into a parallel array of electrode signals destined for the cochlea. Algorithms for the optimal transfer function between these signals are still an active area of research. The first cochlear implants were single-channel devices. The raw sound was band-pass filtered to include only the frequency range of speech, then modulated onto a 16 kHz wave so that the electrical signal could couple to the nerves. This approach provided very basic hearing, but it was extremely limited because it could not take advantage of the frequency-location map of the cochlea.

The advent of multi-channel implants opened the door to try a number of different speech-processing strategies to facilitate hearing. These can be roughly divided into Waveform and Feature-Extraction strategies.

Waveform Strategies

These generally involve applying a non-linear gain to the sound (as an input audio signal with a ~30 dB dynamic range must be compressed into an electrical signal with just a ~5 dB dynamic range), and passing it through parallel filter banks. The first waveform strategy to be tried was the Compressed Analog approach. In this system, the raw audio is first passed through a gain-controlled amplifier (the gain control reduces the dynamic range of the signal). The signal is then passed through parallel band-pass filters, and the outputs of these filters stimulate electrodes at their appropriate locations.
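The dynamic-range figures quoted above imply a strong compression. As a rough illustration only, the following sketch squeezes a 30 dB input range into a 5 dB output range with a mapping that is linear in decibels. The function name, its parameters and the choice of mapping are assumptions made for this example, not the gain law of any actual device.

```python
import math

def compress_db(amplitude, in_floor=1.0, in_range_db=30.0, out_range_db=5.0):
    """Map an input amplitude to an output amplitude, linear in dB.

    Assumption: amplitudes are given relative to in_floor (the softest
    input of interest); the result is relative to the softest stimulus.
    """
    level_db = 20.0 * math.log10(amplitude / in_floor)
    level_db = min(max(level_db, 0.0), in_range_db)   # clip to the input range
    out_db = level_db * out_range_db / in_range_db    # squeeze 30 dB into 5 dB
    return 10.0 ** (out_db / 20.0)

loudest_in = 10.0 ** (30.0 / 20.0)   # about 31.6 times the floor
print(compress_db(1.0))              # softest input stays at the floor
print(compress_db(loudest_in))       # about 1.78, i.e. 5 dB above the floor
```

In this sketch an amplitude ratio of roughly 31.6:1 at the input becomes a ratio of roughly 1.78:1 at the output.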

A problem with the Compressed Analog approach was that there was a strong interaction effect between adjacent electrodes. If electrodes driven by two filters happened to stimulate at the same time, the superimposed stimulation could cause unwanted distortion in the responses of the neurons that were within range of both electrodes. The solution to this was the Continuous Interleaved Sampling approach, in which the electrodes driven by adjacent filters stimulate at slightly different times. This eliminates the interference between nearby electrodes, but the interleaving reduces the temporal resolution.
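The interleaving idea can be sketched as a simple pulse schedule. The example below is a minimal illustration, assuming that every stimulation cycle is divided into equal time slots, one per channel; the function name and the parameter values are hypothetical. In a real processor each pulse would also be scaled by the envelope of its channel at that instant, which is omitted here.

```python
def cis_schedule(n_channels, pulses_per_second, n_cycles):
    """Return (time_in_seconds, channel) pairs for interleaved pulses.

    Each stimulation cycle lasts 1 / pulses_per_second and is divided into
    n_channels equal slots, so no two channels are active at the same time.
    """
    cycle = 1.0 / pulses_per_second
    slot = cycle / n_channels
    schedule = []
    for k in range(n_cycles):
        for ch in range(n_channels):
            schedule.append((k * cycle + ch * slot, ch))
    return schedule

for t, ch in cis_schedule(n_channels=4, pulses_per_second=1000, n_cycles=2):
    print(f"t = {t * 1e6:7.1f} us  channel {ch}")
```

With four channels at 1000 pulses per second per channel, each channel gets a 250 microsecond slot, which shows how adding channels shortens the time available to each one.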

Feature-Extraction Strategies

These strategies focus less on transmitting filtered versions of the audio signal and more on extracting abstract features of the signal and transmitting those to the electrodes. The first feature-extraction strategies looked for the formants (frequencies with maximum energy) in speech. To do this, they applied wide-band filters (e.g. a 270 Hz low-pass for F0, the fundamental frequency, 300 Hz-1 kHz for F1, and 1 kHz-4 kHz for F2), then calculated the formant frequency from the zero-crossings of each filter output, and the formant amplitude from the envelope of each filter output. Only electrodes corresponding to these formant frequencies were activated. The main limitation of this approach was that formants primarily identify vowels, so consonant information, which primarily resides in higher frequencies, was poorly transmitted. The MPEAK system later improved on this design by incorporating high-frequency filters, which could better simulate unvoiced sounds (consonants) by stimulating high-frequency electrodes, and formant-frequency electrodes at random intervals.[1][2][3]
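The zero-crossing step can be illustrated with a short sketch. It assumes that the signal has already been band-pass filtered, so that a single component dominates; the function name and the test tone are hypothetical and stand in for the output of one of the filters.

```python
import math

def zero_crossing_frequency(samples, sample_rate):
    """Estimate the dominant frequency from the number of sign changes."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0)
    )
    duration = (len(samples) - 1) / sample_rate
    return crossings / (2.0 * duration)   # two crossings per period

sample_rate = 16000
# 0.1 s of a 500 Hz tone; the small phase offset avoids samples at exactly zero
tone = [math.sin(2 * math.pi * 500 * n / sample_rate + 0.1) for n in range(1600)]
print(zero_crossing_frequency(tone, sample_rate))   # close to 500 Hz
```

The estimate is only as good as the filtering before it: if two components of similar strength fall into the same band, the crossing count no longer corresponds to a single frequency.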

Current Developments

Currently, the leading strategy is the SPEAK system, which combines characteristics of Waveform and Feature-Extraction strategies. In this system, the signal passes through a parallel array of 20 band-pass filters. The envelope is extracted from each of these, the channels with the largest envelopes are selected (how many depends on the shape of the spectrum), and the rest are discarded. This is known as an "n-of-m" strategy. The amplitudes of the selected channels are then logarithmically compressed to map the wide dynamic range of sound onto the much narrower dynamic range of electrical stimulation.
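The selection step of an n-of-m strategy can be sketched in a few lines. This is a minimal illustration with a fixed n, whereas the text above notes that the number of selected channels depends on the shape of the spectrum; the function name and the envelope values are hypothetical.

```python
def select_n_of_m(envelopes, n):
    """Keep the n largest channel envelopes and set the others to zero."""
    ranked = sorted(range(len(envelopes)), key=lambda i: envelopes[i], reverse=True)
    keep = set(ranked[:n])
    return [e if i in keep else 0.0 for i, e in enumerate(envelopes)]

envelopes = [0.1, 0.8, 0.3, 0.05, 0.6, 0.2]   # hypothetical values, m = 6
print(select_n_of_m(envelopes, n=3))          # [0.0, 0.8, 0.3, 0.0, 0.6, 0.0]
```

Only the electrodes belonging to the surviving channels would be stimulated in that time frame.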

Multiple microphones

On its newest implants, the company Cochlear uses three microphones instead of one. The additional information is used for beam-forming, i.e. for emphasizing sound that arrives from straight ahead over sound from other directions. This can improve the signal-to-noise ratio when talking to other people by up to 15 dB, thereby significantly enhancing speech perception in noisy environments.
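The principle behind beam-forming can be illustrated with the simplest variant, delay-and-sum. The sketch below is not the manufacturer's algorithm: it assumes three microphones, known arrival delays in whole samples, a sine wave as a stand-in for speech and independent noise at each microphone. All names and values are hypothetical.

```python
import numpy as np

def delay_and_sum(mic_signals, delays):
    """Align each microphone by its delay (in samples) and average."""
    aligned = [np.roll(sig, -d) for sig, d in zip(mic_signals, delays)]
    return np.mean(aligned, axis=0)

def snr_db(signal, noise):
    """Signal-to-noise ratio in dB from the mean powers."""
    return 10.0 * np.log10(np.mean(signal ** 2) / np.mean(noise ** 2))

rng = np.random.default_rng(0)
n = 20000
target = np.sin(2 * np.pi * 0.01 * np.arange(n))   # stand-in for speech
delays = [0, 2, 4]                                 # assumed arrival delays
noises = [rng.normal(size=n) for _ in delays]      # independent noise per mic
mics = [np.roll(target, d) + nz for d, nz in zip(delays, noises)]

beam = delay_and_sum(mics, delays)
residual = beam - target                           # noise left after averaging
gain = snr_db(target, residual) - snr_db(target, noises[0])
print(round(gain, 1))                              # near 10*log10(3), about 4.8 dB
```

Under these assumptions the target adds coherently while the noise averages out, which gives a gain of about 10·log10(3) dB for three microphones. The larger figure quoted above refers to the manufacturer's own processing and is not reproduced by this sketch.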

Integration CI – Hearing Aid

Preservation of low-frequency hearing after cochlear implantation is possible with careful surgical technique and careful attention to electrode design. For patients with remaining low-frequency hearing, the company MED-EL offers a combination of a cochlear implant for the higher frequencies and a classical hearing aid for the lower frequencies. This system, called EAS for electric-acoustic stimulation, uses a lead of 18 mm, compared to 31.5 mm for the full CI. (The length of the cochlea is about 36 mm.) This results in a significant improvement in music perception, and in improved speech recognition for tonal languages.

Fine Structure

For high frequencies, the human auditory system uses only tonotopic coding for information. For low frequencies, however, temporal information is also used: the auditory nerve fires synchronously with the phase of the signal. In contrast, the original CIs only used the power spectrum of the incoming signal. In its new models, MED-EL incorporates the timing information for low frequencies, which it calls fine structure, in determining the timing of the stimulation pulses. This improves music perception, and speech perception for tonal languages like Mandarin.

Mathematically, the envelope and the fine structure of a signal can be elegantly obtained with the Hilbert Transform (see Figure). The corresponding Python code is available in [4].
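The following sketch shows one way to carry out this decomposition with NumPy. It is not the code from [4]: it builds the analytic signal directly in the frequency domain, and the function name and the test signal are assumptions made for this example.

```python
import numpy as np

def envelope_and_fine_structure(x):
    """Split a real signal into envelope and fine structure.

    The analytic signal is built in the frequency domain: negative
    frequencies are set to zero and positive frequencies are doubled.
    """
    n = len(x)
    spectrum = np.fft.fft(x)
    weights = np.zeros(n)
    weights[0] = 1.0                      # keep the DC component once
    if n % 2 == 0:
        weights[n // 2] = 1.0             # keep the Nyquist component once
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    analytic = np.fft.ifft(spectrum * weights)
    envelope = np.abs(analytic)
    fine_structure = np.cos(np.angle(analytic))
    return envelope, fine_structure

rate = 8000
t = np.arange(rate) / rate                       # one second
slow = 1.0 + 0.5 * np.sin(2 * np.pi * 5 * t)     # 5 Hz amplitude modulation
signal = slow * np.cos(2 * np.pi * 440 * t)      # 440 Hz carrier
envelope, fine = envelope_and_fine_structure(signal)
print(np.max(np.abs(envelope - slow)))           # very small
```

The product of the two outputs reconstructs the original signal, so the envelope carries the slow amplitude changes and the fine structure carries the rapid oscillation.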

Virtual Electrodes

The number of electrodes available is limited by the size of the electrode (and the resulting charge and current densities), and by the current spread along the perilymph. To increase the frequency specificity, one can stimulate two adjacent electrodes simultaneously. Subjects report perceiving this as a single tone at a frequency intermediate between those of the two electrodes.
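One way to picture such a virtual electrode is as a weighted split of the stimulation current between the two neighbours. The sketch below assumes a simple linear split controlled by a hypothetical parameter `alpha`; the text above does not specify how the current is divided, so this is an illustration rather than a description of any device.

```python
def steer_current(total_current, alpha):
    """Split a current between two adjacent electrodes.

    alpha = 0 puts everything on the first electrode, alpha = 1 on the
    second; values in between are meant to evoke an intermediate pitch.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie between 0 and 1")
    return (1.0 - alpha) * total_current, alpha * total_current

print(steer_current(200.0, 0.25))   # (150.0, 50.0), hypothetical units
```

Varying `alpha` in small steps would give several intermediate stimulation sites between each pair of physical electrodes.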

Simulation of a cochlear implant

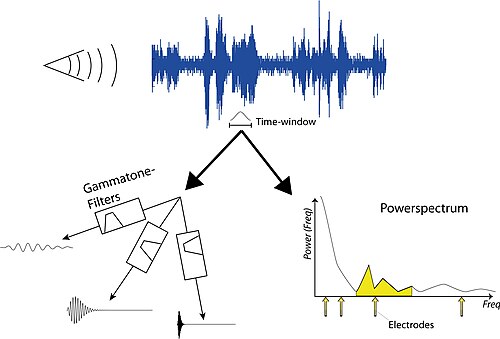

Sound processing in cochlear implants is still the subject of much research and one of the major product differentiators between manufacturers. However, the basic sound processing is rather simple and can be implemented to gain an impression of the quality of sound perceived by patients using a cochlear implant. The first step in the process is to sample some sound and analyze its frequency content. Then a time window is selected, during which we want to find the stimulation strengths of the CI electrodes. There are two ways to achieve this: i) through the use of linear filters (see Gammatone filters); or ii) through the calculation of the power spectrum (see Spectral Analysis).
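The power-spectrum route can be sketched as follows. The example computes the power spectrum of one time window and sums it into one band per electrode. The number of electrodes, the frequency limits and the logarithmic spacing of the band edges are assumptions for this illustration; real devices use their own frequency allocation.

```python
import numpy as np

def electrode_strengths(window, rate, n_electrodes=8, f_low=200.0, f_high=5000.0):
    """Sum the power spectrum of one time window into one band per electrode.

    Assumption: band edges are spaced logarithmically between f_low and f_high.
    """
    tapered = window * np.hanning(len(window))        # reduce spectral leakage
    power = np.abs(np.fft.rfft(tapered)) ** 2
    freqs = np.fft.rfftfreq(len(window), d=1.0 / rate)
    edges = np.geomspace(f_low, f_high, n_electrodes + 1)
    return np.array([
        power[(freqs >= lo) & (freqs < hi)].sum()
        for lo, hi in zip(edges[:-1], edges[1:])
    ])

rate = 16000
t = np.arange(512) / rate                  # one 32 ms window
window = np.sin(2 * np.pi * 1200 * t)      # 1.2 kHz test tone
strengths = electrode_strengths(window, rate)
print(int(np.argmax(strengths)))           # index of the most strongly driven band
```

Repeating this for successive windows gives a time course of stimulation strengths for each electrode, which can then be used to resynthesize a sound that approximates what a CI user might hear.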

Cochlear implants and Magnetic Resonance Imaging

With more than 150 000 implantations worldwide, cochlear implants (CIs) have become a standard method for treating severe to profound hearing loss. As the benefits of CIs have become more evident, payers have become more willing to support them, and because of newborn screening programs in most industrialized nations, many patients receive CIs in infancy and will likely keep them throughout their lives. Some of them will need diagnostic imaging during their lives, for which magnetic resonance imaging (MRI) may be required. For large segments of the population, including patients suffering from stroke, back pain or headache, MRI has become a standard diagnostic method. MRI uses pulses of magnetic fields to generate images. Most current MRI machines work with a 1.5 Tesla magnetic field, although devices ranging from 0.2 to 4.0 Tesla are common, and the radiofrequency power can peak as high as 6 kW in a 1.5 Tesla machine.

Cochlear implants have historically been thought to be incompatible with MRI at magnetic fields higher than 0.2 T. The external parts of the device always have to be removed. For the internal parts, the regulations differ between devices. Current US Food and Drug Administration (FDA) guidelines allow limited use of MRI after CI implantation. The Pulsar and Sonata (MED-EL Corp, Innsbruck, Austria) devices are approved for 0.2 T MRI with the magnet in place. The HiRes 90K (Advanced Bionics Corp, Sylmar, CA, USA) and the Nucleus Freedom (Cochlear Americas, Englewood, CO, USA) are approved for up to 1.5 T MRI after surgical removal of the internal magnet. Each removal and replacement of the magnet can be done through a small incision under local anesthesia, but the procedure is likely to weaken the pocket of the magnet and carries a risk of infection.

Cadaver studies have shown that there is a risk that the magnet may be displaced from the internal device in a 1.5 T MRI scanner. However, this risk could be eliminated when a compression dressing was applied. Nevertheless, the CI produces an artifact that could potentially reduce the diagnostic value of the scan. Relative to the size of the head, the artifact is larger in smaller patients, which might be particularly challenging for MRI scans of children. A study by Crane et al. (2010) found that the artifact around the area of the CI had a mean anterior-posterior dimension of 6.6 +/- 1.5 cm and a mean left-right dimension of 4.8 +/- 1.0 cm (mean +/- standard deviation).[5]

- [1] http://www.utdallas.edu/~loizou/cimplants/tutorial/tutorial.htm

- [2] www.ohsu.edu/nod/documents/week3/Rubenstein.pdf

- [3] www.acoustics.bseeber.de/implant/ieee_talk.pdf

- [4] T. Haslwanter (2012). "Hilbert Transformation [Python]". Private communication.

- [5] Crane BT, Gottschalk B, Kraut M, Aygun N, Niparko JK (2010). Magnetic resonance imaging at 1.5 T after cochlear implantation. Otol Neurotol 31:1215-1220.