Social Statistics, Chapter 2: Linear Regression Models

Linear Regression Models

Persons all over the world worry about crime, especially violent crime. Americans have more reason to worry than most. The United States is a particularly violent country. The homicide rate in the United States is roughly three times that in England, four times that in Australia, and five times that in Germany. Japan, a country of over 125 million persons, experiences fewer murders per year than Pennsylvania, with fewer than 12.5 million persons. Thankfully, American murder rates have fallen by almost 50% in the past 20 years, but they're still far too high.

Violent crime is, by definition, traumatic for victims and their families. A person who has been a victim of violent crime may never feel truly safe in public again. Violent crime may also be bad for society. Generalizing from the individual to the social level, if persons feel unsafe, they may stay home, avoid public places, and withdraw from society. This concern can be conceptualized into a formal theory: where crime rates are high, persons will feel less safe leaving their homes. A database that can be used to evaluate this theory has been assembled in Figure 2-1 using data available for download from the Australian Bureau of Statistics website. Australian data have been used here because Australia has just 8 states and territories (versus 50 for the United States), making it easier to label specific states on a scatter plot.

| STATE_TERR | CODE | VICTIM_PERS | UNSAFE_OUT | VICTIM_VIOL | STRESS | MOVED5YR | MED_INC |
|---|---|---|---|---|---|---|---|
| Australian Capital Territory | ACT | 2.8 | 18.6 | 9.9 | 62.1 | 39.8 | $712 |
| New South Wales | NSW | 2.8 | 17.4 | 9.3 | 57.0 | 39.4 | $565 |
| Northern Territory | NT | 5.7 | 30.0 | 18.2 | 63.8 | 61.3 | $670 |
| Queensland | QLD | 3.0 | 17.3 | 13.5 | 64.4 | 53.9 | $556 |
| South Australia | SA | 2.8 | 21.8 | 11.4 | 58.2 | 38.9 | $529 |
| Tasmania | TAS | 4.1 | 14.3 | 9.8 | 59.1 | 39.6 | $486 |
| Victoria | VIC | 3.3 | 16.8 | 9.7 | 57.5 | 38.8 | $564 |
| Western Australia | WA | 3.8 | 20.9 | 12.8 | 62.8 | 47.3 | $581 |

The cases in the Australian crime database are the eight states and territories of Australia. The columns include two metadata items (the state or territory name and postal code). Six variables are also included:

- VICTIM_PERS: The percent of persons who were the victims of personal crimes (murder, attempted murder, assault, robbery, and rape) in 2008

- UNSAFE_OUT: The percent of persons who report feeling unsafe walking alone at night after dark

- VICTIM_VIOL: The percent of persons who report having been the victim of physical or threatened violence in the past 12 months

- STRESS: The percent of persons who report having experienced at least one major life stressor in the past 12 months

- MOVED5YR: The percent of persons who have moved in the previous 5 years

- MED_INC: State median income

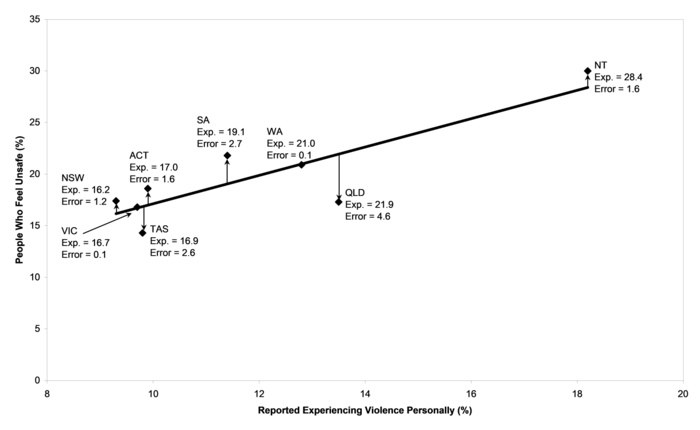

The theory that where crime rates are high, persons will feel less safe leaving their homes can be operationalized using these data into the specific hypothesis that the variables VICTIM_PERS and UNSAFE_OUT will be positively related across the 8 Australian states and territories. In this statistical model, VICTIM_PERS (the crime rate) is the independent variable and UNSAFE_OUT (persons' feelings of safety) is the dependent variable. The actual relationship between the two variables is plotted in Figure 2-2. Each point in the scatter plot has been labeled using its state postal code. This scatter plot does, in fact, show that the relationship is positive. This is consistent with the theory that where crime rates are high, persons will feel less safe leaving their homes.

As usual, Figure 2-2 includes a reference line that runs through the middle of all of the data points. Also as usual, there is a lot of error in the scatter plot. Fear of going out alone at night does rise with the crime rate, but not in every case. To help clarify the overall trend in fear of going out, Figure 2-2 also includes a new, additional piece of information: the amount of error that is associated with each observation (each state). Instead of thinking of a scatter plot as just a collection of points that trends up or down, it is possible to think of a scatter plot as a combination of trend (the line) and error (deviation from the line). This basic statistical model, a trend plus error, is the most widely used statistical model in the social sciences.

In Figure 2-2, three states fall almost exactly on the trend line: New South Wales, Queensland, and Western Australia. The persons in these three states have levels of fear about going out alone at night that are just what might be expected for states with their levels of crime. In other words, there is almost no error in the statistical model for fear in these states. Persons living in other states and territories have more fear (South Australia, Australian Capital Territory, Northern Territory) or less fear (Victoria, Tasmania) than might be expected based on their crime rates. Tasmania in particular has relatively high crime rates (the second highest in Australia) but very low levels of fear (the lowest in Australia). This means that there is a lot of error in the statistical model for Tasmania. While there is definitely an upward trend in the line shown in Figure 2-2, there is so much error in individual cases that we might question just how useful actual crime rates are for understanding persons' feelings of fear about going out at night.
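The trend-plus-error reading of Figure 2-2 can be reproduced from the Figure 2-1 data. The sketch below is only an illustration: it assumes the reference line is a least-squares line (the method described in Section 2.4), and the names `fit_line`, `DATA`, and `errors` are invented here rather than taken from the chapter. Values may differ slightly from the plotted figure because of rounding.

```python
# Figure 2-1 data: (postal code, VICTIM_PERS, UNSAFE_OUT)
DATA = [
    ("ACT", 2.8, 18.6),
    ("NSW", 2.8, 17.4),
    ("NT", 5.7, 30.0),
    ("QLD", 3.0, 17.3),
    ("SA", 2.8, 21.8),
    ("TAS", 4.1, 14.3),
    ("VIC", 3.3, 16.8),
    ("WA", 3.8, 20.9),
]


def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


xs = [row[1] for row in DATA]
ys = [row[2] for row in DATA]
intercept, slope = fit_line(xs, ys)

# Split each actual value into trend (value on the line) plus error
# (actual minus trend; negative means less fear than expected).
errors = {}
for code, x, y in DATA:
    trend = intercept + slope * x
    errors[code] = y - trend
    print(f"{code}: actual {y:.1f} = trend {trend:.1f} + error {errors[code]:+.1f}")
```

Under these assumptions the slope is positive (about 3.2), New South Wales lands within roughly 0.1 of the line, and Tasmania sits about 7.1 points below it, in line with the description above.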

This chapter introduces the linear regression model, which divides the relationship between a dependent variable and an independent variable into a trend plus error. First and foremost, the linear regression model is just a way of putting a line on a scatter plot (Section 2.1). There are many possible ways to draw a line through data, but in practice the linear regression model is the one way that is used in all of social statistics. Second, the line on a scatter plot actually represents a hypothesis about how the dependent variable is related to an independent variable (Section 2.2). Like any line, it has a slope and an intercept, but social scientists are mainly interested in evaluating hypotheses about the slope. Third, it's pretty obvious that a positive slope means a positive relationship between the two variables, while a negative slope means a negative relationship (Section 2.3). The steeper the slope, the more important the relationship between the two variables is likely to be. An optional section (Section 2.4) explains some of the mathematics behind how regression lines are actually drawn.

Finally, this chapter ends with an applied case study of the relationship between property crime and murder rates in the United States (Section 2.5). This case study illustrates how linear regression models are used to put lines on scatter plots, how hypotheses about variables are turned into hypotheses about the slopes of these lines, and the difference between positive and negative relationships. All of this chapter's key concepts are used in this case study. By the end of this chapter, you should have a basic understanding of how regression models can shed light on the relationships connecting independent and dependent variables in the social sciences.

2.1. Introducing the Linear Regression Model

When social scientists theorize about the social world, they don't usually theorize in straight-line terms. Most social theorists would never come up with a theory that said "persons' fear of walking alone at night will rise in exactly a straight line as the crime rate in their neighborhoods rises." Instead, theories about the social world are much more vague: "persons will feel less safe leaving their homes where crime rates are high." All the theories that were examined in Chapter 1 were also stated in vague language that said nothing about straight lines:

- Rich parents tend to have rich children

- Persons eat junk food because they can't afford to eat high-quality food

- Racial discrimination in America leads to lower incomes for non-whites

- Higher spending on education leads to better student performance on tests

When theories say nothing about the specific shape of the relationship between two variables, a simple scatter plot is—technically—the appropriate way to evaluate them. With one look at the scatter plot anyone can see whether the dependent variable tends to rise, fall, or stay the same across values of the independent variable. The real relationship between the two variables might be a line, a curve, or an even more complicated pattern, but that is unimportant. The theories say nothing about lines or curves. The theories just say that when the independent variable goes up, the dependent variable goes up as well.

However, there are problems with scatter plots. Sometimes it can be hard to tell whether they trend upwards or not. For example, many Americans believe that new immigrants to America have large numbers of children, overwhelming schools and costing the taxpayers a lot of money. Figure 2-3 plots the relationship between levels of international immigration (independent variable) and birth rates (dependent variable) for 3193 US counties. Does the birth rate rise with greater immigration? It's hard to say from just the scatter plot, without a line. It turns out that birth rates do rise with immigration rates, but only very slightly.

As Figure 2-3 illustrates, another problem with scatter plots is that they become difficult to read when there are a large number of cases in the database being analyzed. Scatter plots also become difficult to read when there is more than one independent variable, as there will be later in this book. The biggest problem with using scatter plots to evaluate theories, though, is that different persons might have different opinions about them. One person might see a rising trend while someone else thinks the trend is generally flat or declining. Without a reference line to give a firm answer, it may be impossible to reach agreement on whether the theory being evaluated is or is not correct. For these (and other) reasons, social scientists don't usually rely on scatter plots. Scatter plots are widely used in the social sciences, but they are used to get an overall impression of the data, not to evaluate theories.

Instead, social scientists evaluate theories using straight lines like the reference lines that were drawn on the scatter plots above and in Chapter 1. These lines are called regression lines, and are based on statistical models called linear regression models. Linear regression models are statistical models in which expected values of the dependent variable are thought to rise or fall in a straight line according to values of the independent variable. Linear regression models (or just "regression models") are statistical models, meaning that they are mathematical simplifications of the real world. Real variables may not rise or fall in a straight line, but in the linear regression model we simplify things to focus only on this one aspect of variables.
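In symbols, the straight-line simplification can be written as a trend plus an error. The notation is an assumption introduced here for illustration, since the chapter itself uses no symbols: \(y_i\) is the actual value of the dependent variable for case \(i\), \(x_i\) is that case's value of the independent variable, \(a\) is the intercept, \(b\) is the slope, and \(e_i\) is the error.

\[
y_i = \underbrace{a + b\,x_i}_{\text{trend (expected value)}} + \underbrace{e_i}_{\text{error}}
\]

In this form the sign of \(e_i\) shows whether a case falls above or below the line; the chapter usually reports only the size of the error.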

Of course, dependent variables don't really rise or fall in straight lines as regression models would suggest. Social scientists use straight lines because they are convenient, even though they may not always be theoretically appropriate. Other kinds of relationships between variables are possible, but there are many good reasons for using straight lines instead. Some of them are:

- A straight line is the simplest way two variables could be related, so it should be used unless there's a good reason to suspect a more complicated relationship

- Straight lines can be compared using just their slopes and intercepts (you don't need every piece of data, as with comparing scatter plots)

- Usually there's so much error in social science models that we can't tell the difference between a straight line relationship and other relationships anyway

The straight line of a linear regression model is drawn through the middle of the cloud of points in a scatter plot. It is drawn in such a way that each point along the line represents the most likely value of the dependent variable for any given value of the independent variable. This is the value that the dependent variable would be expected to have if there were no error in the model. Expected values are the values that a dependent variable would be expected to have based solely on values of the independent variable. Figure 2-4 depicts a linear regression model of persons' fear of walking alone at night. The dependent variable from Figure 2-2, the percent of persons who feel unsafe, is regressed on a new independent variable, the percent of persons who reported experiencing violence personally. There is less error in Figure 2-4 than we saw in Figure 2-2. Tasmania in particular now falls very close to the reference line of expected values.

The expected values for the percent of persons who feel unsafe walking at night have been noted right on the scatter plot. They are the values of the dependent variable that would have been expected based on the regression model. For example, in this model the expected percentage of persons who feel unsafe walking at night in Tasmania would be 16.9%. In other words, based on reported levels of violence experienced by persons in Tasmania, we would expect about 16.9% of Tasmanians to feel unsafe walking alone at night. According to our data, 14.3% of persons in Tasmania report feeling unsafe walking at night (see the variable UNSAFE_OUT in Figure 2-1 and read across the row for Tasmania). Since the regression model predicted 16.9% and the actual value was 14.3%, the error for Tasmania in Figure 2-4 was 2.6% (\(16.9 - 14.3 = 2.6\)).

Regression error is the degree to which an expected value of a dependent variable in a linear regression model differs from its actual value. Regression error is expressed as deviation from the trend of the straight line relationship connecting the independent variable and the dependent variable. In general, regression models that have very little regression error are preferred over regression models that have a lot of regression error. When there is very little regression error, the trend of the regression line will tend to be steeper and the relationship between the independent variable and the dependent variable will tend to be stronger.

There is a lot of regression error in the regression model depicted in Figure 2-4, but less regression error than was observed in Figure 2-2. In particular, the regression error for Tasmania in Figure 2-2 was 7.1%—much higher than in Figure 2-4. This suggests that persons' reports of experiencing violence personally are better predictors of persons' feelings of safety at night than are the actual crime rates in a state. Persons' experiences of safety and fear are very personal, not necessarily based on crime statistics for society as a whole. If policymakers want to make sure that persons feel safe enough to go out in public, they have to do more than just keep the crime rate down. They also have to reduce persons' personal experiences—and persons' perceptions of their personal experiences—of violence and crime. This may be much more difficult to do, but also much more rewarding for society. Policymakers should take a broad approach to making society less violent in general instead of just putting potential criminals in jail.
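The comparison between the two models can be checked against the Figure 2-1 data. The following is a rough sketch, not the author's procedure: it assumes both reference lines are least-squares lines (see Section 2.4), and the helper names `fit_line` and `summarize` are hypothetical. It reports Tasmania's expected value and error under each predictor, plus the sum of error sizes across all eight cases.

```python
# Figure 2-1 data: code -> (VICTIM_PERS, VICTIM_VIOL, UNSAFE_OUT)
STATES = {
    "ACT": (2.8, 9.9, 18.6),
    "NSW": (2.8, 9.3, 17.4),
    "NT": (5.7, 18.2, 30.0),
    "QLD": (3.0, 13.5, 17.3),
    "SA": (2.8, 11.4, 21.8),
    "TAS": (4.1, 9.8, 14.3),
    "VIC": (3.3, 9.7, 16.8),
    "WA": (3.8, 12.8, 20.9),
}


def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def summarize(predictor_index):
    """Regress UNSAFE_OUT on one predictor column.

    Returns (Tasmania expected value, Tasmania error size, total error size).
    """
    xs = [row[predictor_index] for row in STATES.values()]
    ys = [row[2] for row in STATES.values()]
    intercept, slope = fit_line(xs, ys)
    tas_expected = intercept + slope * STATES["TAS"][predictor_index]
    tas_error = abs(tas_expected - STATES["TAS"][2])
    total_error = sum(abs(y - (intercept + slope * x)) for x, y in zip(xs, ys))
    return tas_expected, tas_error, total_error


crime = summarize(0)     # crime rate as predictor (Figure 2-2)
violence = summarize(1)  # reported violence as predictor (Figure 2-4)

for label, (expected, error, total) in (("VICTIM_PERS", crime), ("VICTIM_VIOL", violence)):
    print(f"{label}: Tasmania expected {expected:.1f}, error {error:.1f}, total error {total:.1f}")
```

With these inputs Tasmania's error comes out near 7.1 for the crime-rate model and near 2.6 for the reported-violence model, and the total error across all cases is smaller for the second model, matching the comparison made in the text.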

2.2: The Slope of a Regression Line

In the social sciences, even good linear regression models like that depicted in Figure 2-4 tend to have a lot of error. A major goal of regression modeling is to find an independent variable that fits the dependent variable with more trend and less error. An example of a relationship that is almost all trend (with very little error) is depicted in Figure 2-5. The scatter plot in Figure 2-5 uses state birth rates as the independent variable and state death rates as the dependent variable. States with high birth rates tend to have young populations, and thus low death rates. Utah has been excluded because its very high birth rate (over 20 children for every 1000 persons every year) doesn't fit on the chart, but were Utah included its death rate would fall very close to the regression line. One state has an exceptionally high death rate (West Virginia) and one state has an exceptionally low death rate (Alaska).

Thinking about scatter plots in terms of trends and error, the trend in Figure 2-5 is clearly down. Death rates fall as birth rates rise, but by how much? The slope of the regression line gives the answer. Remember that the regression line runs through the expected values of the dependent variable. Slope is the change in the expected value of the dependent variable divided by the change in the value of the independent variable. In other words, it is the change in the regression line for every one point increase in the independent variable. In Figure 2-5, when the independent variable (birth rate) goes up by 1 point, the expected value of the dependent variable (death rate) goes down by 0.4 points. The slope of the regression line is thus −0.4 / 1, or −0.4. The slope is negative because the line trends down. If the line trended up, the slope would be positive.
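The slope calculation can be written compactly. The symbols are introduced here for illustration only: \(\Delta x\) is a change in the independent variable (birth rate) and \(\Delta \hat{y}\) is the matching change in the expected value of the dependent variable (death rate).

\[
\text{slope} = \frac{\Delta \hat{y}}{\Delta x} = \frac{-0.4}{1} = -0.4
\]

Because the line is straight, the same ratio applies to any change. A rise of 5 points in the birth rate, for example, corresponds to an expected change of \(-0.4 \times 5 = -2.0\) points in the death rate.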

An example of a regression line with a positive slope is depicted in Figure 2-6. This line reflects a simple theory of why persons relocate to new communities. Americans are very mobile (much more mobile than persons in most other countries) and frequently move from place to place within America. One theory is that persons go where the jobs are: persons move from places that have depressed economies to places that have vibrant economies. In Figure 2-6, this theory has been operationalized into the hypothesis that counties with higher incomes (independent variable) tend to attract the most migration (dependent variable). In other words, county income is positively related to migration. Figure 2-6 shows that this hypothesis is correct, at least for one state (South Dakota). The slope of the regression line in Figure 2-6 indicates that when county income goes up by $10,000, migration tends to go up by around 8%. Expressed per dollar of income, the slope is approximately \(8 / 10{,}000 = 0.0008\).

The positive slope of the regression line in Figure 2-6 doesn't mean that persons always move to counties that have the highest income levels. There is quite a lot of error around the regression line. Lincoln County especially seems to fall far outside the range of the data from the other counties. Lincoln County is South Dakota's richest and its third most populous. It has grown rapidly over the past ten years as formerly rural areas have been developed into suburbs of nearby Sioux Falls in Minnehaha County. Many other South Dakota counties have highly variable migration figures because they are so small that the opening or closing of one employer can have a big effect on migration. Of the 66 counties in South Dakota, 49 are home to fewer than 10,000 persons. So it's not surprising that the data for South Dakota show a high level of regression error.

If it's true that persons move from places that have depressed economies to places that have expanding economies, the relationship between median income and net migration should be positive in every state, not just South Dakota. One state that is very different from South Dakota in almost every way is Florida. Florida has only two counties with populations under 10,000 persons, and the state is on average much richer than South Dakota. More importantly, lots of persons move to Florida for reasons that have nothing to do with jobs, like climate and lifestyle. Since many persons move to Florida when they retire, the whole theory about jobs and migration may be irrelevant there. To find out, Figure 2-7 depicts a regression of net migration rates on median county income for 67 Florida counties.

As expected, the Florida counties have much more regression error than the South Dakota counties. They also have a smaller slope. In Florida, every $10,000 increase in median income is associated with a 5% increase in the net migration rate, for a slope of approximately \(5 / 10{,}000 = 0.0005\). This is just over half the slope for South Dakota. As in South Dakota, one county is growing much more rapidly than the rest of the state. Flagler County in Florida is growing for much the same reason as Lincoln County in South Dakota: it is a formerly rural county that is rapidly developing. Nonetheless, despite the fact that the relationship between income and migration is weaker in Florida than in South Dakota, the slope of the regression line is still positive. This adds more evidence in favor of the theory that persons move from places that have depressed economies to places that have vibrant economies.

2.3: Outliers and Robustness

Because there is so much error in the statistical models used by social scientists, it is not uncommon for different operationalizations of the same theory to give different results. We saw this in Chapter 1 when different operationalizations of junk food consumption gave different results for the relationship between state income and junk food consumption (Figure 1-2 versus Figure 1-3). Social scientists are much more impressed by a theory when the theory holds up under different operationalization choices, as in Figure 2-6 and Figure 2-7. Ideally, all statistical models that are designed to evaluate a theory would yield the same results, but in reality they do not. Statistical models can be particularly unstable when they have high levels of error. When there is a lot of error in the model, small changes in the data can lead to big changes in model results.

Robustness is the extent to which statistical models give similar results despite changes in operationalization. With regard to linear regression models, robustness means that the slope of the regression line doesn't change much when different data are used. In a robust regression model, the slope of the regression line shouldn't depend too much on what particular data are used or the inclusion or exclusion of any one case. Linear regression models tend to be most robust when:

- They are based on large numbers of cases

- There is relatively little regression error

- All the cases fall neatly in a symmetrical band around the regression line

Regression models based on small numbers of cases with lots of error and irregular distributions of cases can be very unstable (not robust at all). Such a model is depicted in Figure 2-8. Many persons feel unsafe in large cities because they believe that crime, and murder in particular, is very common in large cities. After all, in big cities like New York there are murders reported on the news almost every day. On the other hand, big cities by definition have lots of persons, so their actual murder rates (murders per 100,000 persons) might be relatively low. Using data on the 10 largest American cities, Figure 2-8 plots the relationship between city size and murder rates. The regression line trends downward with a slope of −0.7: as the population of a city goes up by 1 million persons, the murder rate goes down by 0.7 per 100,000. The model suggests that bigger cities are safer than smaller ones.

However, there are several reasons to question the robustness of the model depicted in Figure 2-8. Evaluating this model against the three conditions that are associated with robust models, it fails on every count. First, the model is based on a small number of cases. Second, there is an enormous amount of regression error. Third and perhaps most important, the cases do not fall neatly in a symmetrical band around the regression line. Of the ten cities depicted in Figure 2-8, eight are clustered on the far left side of the scatter plot, one (Los Angeles) is closer to the middle but still in the left half, and one (New York) is far on the extreme right side. New York is much larger than any other American city and falls well outside the cloud of points formed by the rest of the data. It stands alone, far away from all the other data points.

Outliers are data points in a statistical model that fall far away from most of the other data points. In Figure 2-8, New York is a clear outlier. Statistical results based on data that include outliers often are not robust. One outlier out of a hundred or a thousand points usually doesn't matter too much for a statistical model, but one outlier out of just ten points can have a big effect. Figure 2-9 plots exactly the same data as Figure 2-8, but without New York. The new regression line based on the data for the 9 remaining cities has a completely different slope from the original regression line. When New York was included, the slope was negative (−0.7), which indicated that larger cities were safer. With New York excluded, the slope is positive (0.8), indicating that larger cities are more dangerous. The relationship between city size and murder rates clearly is not robust.

It is tempting to argue that outliers are "bad" data points that should always be excluded, but once researchers start excluding points they don't like it can be hard to stop. For example, in Figure 2-9 after New York has been excluded there seems to be a new outlier, Philadelphia. All the other cities line up nicely along the trend line, with Philadelphia sitting all on its own in the upper left corner of the scatter plot. Excluding Philadelphia makes the slope of the regression line even stronger: it increases from 0.8 to 2.0. Then, with Philadelphia gone, Los Angeles appears to be an outlier. Excluding Los Angeles raises the slope even further, to 6.0. The danger here is obvious. If we conduct analyses only on the data points we like, we end up with a very skewed picture of the real relationships connecting variables out in the real world. Outliers should be investigated, but robustness is always an issue for interpretation, not something that can be proved by including or excluding specific cases.
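One way to investigate outliers without simply discarding them is to refit the line once per case, leaving that case out each time, and see how far the slope moves. The sketch below illustrates the idea with invented numbers; it does not use the city data behind Figures 2-8 and 2-9, which are not reproduced in this chapter, and the names `CASES` and `slope_of` are hypothetical.

```python
# Invented data for illustration only (NOT the cities in Figures 2-8 and 2-9):
# three clustered cases with a rising trend, plus one distant outlier.
CASES = {
    "A": (1.0, 8.0),
    "B": (2.0, 10.0),
    "C": (3.0, 12.0),
    "Big": (10.0, 5.0),
}


def slope_of(points):
    """Least-squares slope for a list of (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return sxy / sxx


full_slope = slope_of(list(CASES.values()))

# Refit the line once per case, leaving that case out each time.
slopes_without = {
    name: slope_of([pt for other, pt in CASES.items() if other != name])
    for name in CASES
}

print(f"all cases: slope = {full_slope:+.2f}")
for name, value in slopes_without.items():
    print(f"without {name}: slope = {value:+.2f}")
```

With these invented numbers the slope is about -0.52 when every case is included and +2.00 once the distant case is dropped, the same kind of sign flip the text describes for New York. A model whose slope barely moves in such a check would be considered more robust, but the check informs interpretation; it does not decide which cases belong in the data.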

2.4. Least Squared Error

- Optional/advanced

In linear regression models, the regression line represents the expected value of the dependent variable for any given value of the independent variable. It makes sense that the most likely place to find the expected value of the dependent variable would be right in the middle of the scatter plot connecting it to the independent variable. For example, in Figure 2-5 the most likely death rate for a state with a birth rate of 15 wouldn't be 16 or 0, but somewhere in the middle, like 8. The death rate indicated by the regression line seems like a pretty average death rate for a state falling in the middle of the range in its birth rate. This seems reasonable so far as it goes. Obviously the regression line has to go somewhere in the middle, but how do we decide exactly where to draw it? One idea might be to draw the regression line so as to minimize the amount of error in the scatter plot. If a scatter plot is a combination of trend and error, it makes sense to want as much trend and as little error as possible. A line through the very middle of a scatter plot must have less error than other lines, right? Bizarrely, the answer is no. This strange fact is illustrated in Figure 2-10, Figure 2-11, and Figure 2-12. These three figures show different lines on a very simple scatter plot. In this scatter plot, there are just four data points:

- X = 1, Y = 2

- X = 1, Y = 8

- X = 5, Y = 5

- X = 5, Y = 8

The actual regression line connecting the independent variable (X) to the dependent variable (Y) is graphed in Figure 2-10. This line passes right through the middle of all four points. Each point is 4 units away from the regression line, so the regression error for each point is 4. The total amount of error for the whole scatter plot is \(4 + 4 + 4 + 4 = 16\). No other line could be drawn on the scatter plot that would result in less error. So far so good.

The problem is that the regression line (A) isn't the only line that minimizes the amount of error in the scatter plot. Figure 2-11 depicts another line (B). This line doesn't quite run through the middle of the scatter plot. Instead, it's drawn closer to the two low points and farther away from the two high points. It's clearly not as good a line as the regression line, but it turns out to have the same amount of error. The error associated with line B also adds up to 16. It seems that both line A and line B minimize the amount of error in the scatter plot.

That's not all. Figure 2-12 depicts yet another line (C). Line C is an even worse line than line B. It's all the way at the top of the scatter plot, very close to the two high points and very far away from the two low points. It's not at all in the middle of the cloud of points. But the total error is the same: 16. In fact, any line that runs between the points (any line at all) will give the same error. Many different trends give the same error. This makes it impossible to choose any one line based just on its total error. Another method is necessary.

The method that is actually used to draw regression lines is to draw the line that has the least amount of squared error. Squared error is just that: the error squared, or multiplied by itself. So for example if the error is 4, the squared error is 16 (\(4 \times 4 = 16\)). For line A in Figure 2-10, the total squared error is \(4^2 + 4^2 + 4^2 + 4^2\) or 64. For line B in Figure 2-11 and line C in Figure 2-12, the same calculation gives a larger total, because their errors are split unevenly between the low points and the high points, and squaring penalizes the larger errors more heavily. The line with the least squared error is line A, the regression line that runs through the very middle of the scatter plot. All other lines have more error.
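The tie in total error and the way squaring breaks it can be checked with a few lines of arithmetic. This is a minimal sketch under stated assumptions: it starts from the errors described for line A (each point 4 units from the line, two above and two below) and then moves the line straight up by a hypothetical amount. The shifts of 2 and 3 units are invented for illustration and are not necessarily the lines B and C drawn in Figures 2-11 and 2-12.

```python
# Signed errors (actual minus line) for a line through the middle of four
# points, two of them 4 units above the line and two of them 4 units below.
MIDDLE_LINE_ERRORS = [4.0, -4.0, 4.0, -4.0]


def totals(shift):
    """Total absolute and total squared error after moving the line up by `shift`."""
    errors = [e - shift for e in MIDDLE_LINE_ERRORS]
    total_abs = sum(abs(e) for e in errors)
    total_sq = sum(e ** 2 for e in errors)
    return total_abs, total_sq


# Hypothetical shifts standing in for "other lines that run between the points".
results = {shift: totals(shift) for shift in (0.0, 2.0, 3.0)}
for shift, (total_abs, total_sq) in results.items():
    print(f"shift {shift}: total error {total_abs}, total squared error {total_sq}")
```

Every shift leaves the total error at 16, while the total squared error grows from 64 to 80 to 100 as the line moves away from the middle. Only parallel shifts are tried here; tilting the line while keeping it between the points would show the same pattern.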

It turns out that the line with the least squared error is always unique—there's only one line that minimizes the total amount of squared error—and always runs right through the center of the scatter plot. As an added bonus, computers can calculate regression lines using least squared error quickly and efficiently. The use of least squared error is so closely associated with linear regression models that they are often called "least squares regression models." All of the statistical models used in the rest of this book are based on the minimization of squared error. Least squared error is the mathematical principle that underlies almost all of social statistics.
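For a single independent variable, the line with the least squared error can be written in closed form, which is part of why it is so quick to compute. The notation below is introduced here and does not appear in the chapter: \(x_i\) and \(y_i\) are the observed values for case \(i\), \(\bar{x}\) and \(\bar{y}\) are their means, \(b\) is the slope, and \(a\) is the intercept.

\[
b = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sum_i (x_i - \bar{x})^2},
\qquad
a = \bar{y} - b\,\bar{x}
\]

These two values are the ones that make the total squared error \(\sum_i (y_i - a - b\,x_i)^2\) as small as possible. The second formula also shows why the line runs through the center of the scatter plot: it always passes through the point \((\bar{x}, \bar{y})\).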

2.5: Case Study: Property Crime and Murder Rates

Murder is a rare and horrific crime. It is a tragedy any time a human life is ended prematurely, but that tragedy is even worse when a person's death is intentional, not accidental. Sadly, some of the students using this textbook will know someone who was murdered. Luckily, most of us do not. But almost all of us know someone who has been the victim of a property crime like burglary or theft. Many of us have even been property crime victims ourselves. Property crimes are very common not just in the United States but around the world. In fact, levels of property crime in the US are not particularly high compared to other rich countries. This is odd, because the murder rate in the US is very high. It seems like all kinds of crime should rise and fall together. Do they?

One theory of crime might be that high rates of property crime lead to high rates of murder, as persons move from petty crime to serious crime throughout their criminal careers. Since international data on property crimes might not be equivalent from country to country, it makes sense to operationalize this theory using a hypothesis and data about US crime rates. A specific hypothesis linking property crime to murder would be the hypothesis that property crime rates are positively associated with murder rates for US cities with populations over 100,000 persons. This operationalization excludes small cities because it is possible that smaller cities might have no recorded crimes in any given year.

Data on crime rates of all kinds are available from the US Federal Bureau of Investigation (FBI). In Figure 2-13 these data are used to plot the relationship between property crime rates and murder rates for the 268 American cities with populations of over 100,000 persons. A linear regression model has been used to place a trend line on the scatter plot. The trend line represents the expected value of the murder rate for any given level of property crime. So for example in a city that has a property crime rate of 5,000 per 100,000 persons, the expected murder rate would be 10.2 murders per 100,000 persons. A few cities have murder rates that would be expected given their property crime rates, but there is an enormous amount of regression error. Murder rates are scattered widely and do not cluster closely around the regression line.

The slope of the regression line is positive, as expected. This tends to confirm the theory that high rates of property crime are associated with high rates of murder. Every increase of 1,000 in the property crime rate is associated, on average, with an increase of 2.7 in the murder rate. This is likely to be a robust result, since it is based on a large number of cases. On the other hand, there is a high level of error and the cases do not fall neatly in a symmetrical band around the regression line, so we might show some caution in interpreting our results. There is also one major outlier: New Orleans. The murder rate for New Orleans is far higher than that of any other US city, and New Orleans falls well outside the boundaries of the rest of the data. Excluding New Orleans, however, results in no change in the slope of the regression line, which remains 2.7 whether New Orleans is included or not.
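The two numbers reported for Figure 2-13 (an expected murder rate of 10.2 at a property crime rate of 5,000, and a slope of 2.7 per 1,000) are enough to sketch the expected value at other property crime rates. The function below is a rough illustration built only from those rounded figures; the constant and function names are hypothetical, and the underlying FBI data are not used.

```python
# Values stated in the case study (rounded in the text).
REFERENCE_PROPERTY_RATE = 5000.0  # property crimes per 100,000 persons
REFERENCE_MURDER_RATE = 10.2      # expected murders per 100,000 at that rate
SLOPE_PER_1000 = 2.7              # change in murder rate per 1,000 property crimes


def expected_murder_rate(property_rate):
    """Expected murders per 100,000 persons, using the point-slope form of the line."""
    change = (property_rate - REFERENCE_PROPERTY_RATE) / 1000.0
    return REFERENCE_MURDER_RATE + SLOPE_PER_1000 * change


for rate in (4000, 5000, 6000):
    print(f"property crime rate {rate}: expected murder rate {expected_murder_rate(rate):.1f}")
```

On these assumptions the expected murder rate moves from about 7.5 to 10.2 to 12.9 as the property crime rate goes from 4,000 to 6,000. These are expected values only; with so much regression error, the actual murder rate in any one city can be far from the line.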

Overall, the theory that high rates of property crime are associated with high rates of murder seems to be broadly valid for US cities as a whole, but the murder rate in any particular US city doesn't seem to correspond closely to the property crime rate. If they want to bring down their murder rates, it wouldn't hurt for US cities to try bringing down their property crime rates, but it likely wouldn't solve the problem. Cities with property crime rates in the range of 5,000–6,000 can have murder rates ranging anywhere from near zero to 30 or more. Policies to reduce murder rates should probably be targeted specifically at reducing violence in society, not broadly at reducing crime in general.

Chapter 2 Key Terms

- Expected values are the values that a dependent variable would be expected to have based solely on values of the independent variable.

- Linear regression models are statistical models in which expected values of the dependent variable are thought to rise or fall in a straight line according to values of the independent variable.

- Outliers are data points in a statistical model that are far away from most of the other data points.

- Regression error is the degree to which an expected value of a dependent variable in a linear regression model differs from its actual value.

- Robustness is the extent to which statistical models give similar results despite changes in operationalization.

- Slope is the change in the expected value of the dependent variable divided by the change in the value of the independent variable.