Switches, Routers, Bridges and LANs/Print version

Introduction


The need for a layered approach

Users have very high expectations of computer networks: we expect minimal delay and high reliability even when communicating across the globe. But the hardware that provides this communication sometimes fails, or needs to be upgraded. Nor is the internet a static system: new networks are connected every day, and some old networks stop working temporarily or permanently. The systems that are used for computer networking need to detect and work around errors, and do so automatically without waiting for human intervention. Errors can occur on any level, from tiny amounts of electrical interference or cosmic rays that might alter a single bit of information on a wire, to an entire trans-Atlantic network trunk cable being cut by a ship's anchor.

Writing a single protocol that can deal with all these eventualities is achievable in theory, but in practice the need to deal with all the possible sources of error would quickly make it unmanageably complex. In addition, there's a political problem with deploying one single monolithic protocol: everyone would have to use the same protocol, and everyone would have to adopt it at the same time.

The solution that developed is a common one in software engineering: the use of abstraction. Networking is implemented in terms of a series of layers, each of which only solves a small part of the whole problem of networked communications. However, each layer can rely on the services provided by the layers below it, so that the entire stack working together can solve a problem that no single layer solves. In addition, the layered model means that different parts of the network can solve the lower-layer problem in different ways, provided that they all implement the same interface that the higher-level layers rely on.

The OSI model

An early attempt to standardize the layers of networking was made in 1978 by the Open Systems Interconnection (OSI) project which was started by the International Organization for Standardization (ISO). The product of this group described 7 network layers and specified a suite of protocols to operate at each of these layers. Though the protocols specified didn't catch on and were superseded by TCP/IP, the concepts of the 7 layers stuck, and are still used to this day to describe networking protocols. There is no strict need for protocols to comply with the OSI 7-layer model, and indeed many protocols blur the boundaries or collapse the functions of several layers into one protocol, but the layer numbers are still useful for informal communication.

Layers in the OSI model

Layer 1: The Physical Layer

The lowest layer of the stack deals with the physical details of sending signals from one place to another. Typically this information is carried encoded in electrical signals or laser light, but in principle any means of communication could be used. For example, if you had a way of encoding binary data into sound transmitted from a speaker to a microphone (with a second speaker and microphone to send data back the other way) then you could use the rest of the standard protocols on top of this physical layer without having to change them.

The physical layer needn't provide completely reliable transmission: the upper layers are responsible for detecting errors and resending data if necessary. The physical layer merely provides some way of propagating data from one node to another.

Among other things, the specification of a physical layer protocol will need to specify the voltage of an electric signal or frequency and power of a laser, size and shape of connectors, modulation of the signal, and the way that multiple nodes share the same link.

Layer 2: The Data Link Layer

The data link layer is responsible for providing the means to transfer data from one node to the other, if possible detecting or correcting errors in the physical layer. Layer 2 introduces the concept of unique addresses that identify the nodes that are communicating. Unlike layer 3, data link layer addresses use a flat structure, i.e. the structure of the address doesn't yield any information about the relative location of nodes or the route that traffic should take between them.

The most familiar layer 2 protocol is Ethernet, although the Ethernet standard also specifies details of the physical layer.

Layer 3: The Network Layer

The network layer builds on the lower layers to support routing data across interconnected networks (rather than within a single network). Addressing at the network layer takes advantage of a hierarchical structure so that it's possible to summarise the route to thousands or millions of hosts as a single piece of information. Typically, nodes that are on the same network as each other will share a common prefix on their layer 3 address. Layer 3 communication doesn't contain any concept of a continuing connection: each packet of data sent between a pair of communicating hosts is treated separately, with no knowledge of the packets that went before it. Layer 3 protocols may be able to detect errors in a packet that have been introduced at the physical or data link layer, but do not guarantee that no packet will be lost.
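The shared-prefix idea can be illustrated with Python's standard `ipaddress` module. This is only a sketch: the addresses below are hypothetical values taken from the ranges reserved for documentation, and IPv4 is assumed as the layer 3 protocol.

```python
import ipaddress

# Hypothetical example addresses (documentation ranges), not from the text.
network = ipaddress.ip_network("192.0.2.0/24")
inside = ipaddress.ip_address("192.0.2.77")
outside = ipaddress.ip_address("198.51.100.7")

# Hosts on the same network share the network's prefix.
print(inside in network)
print(outside in network)

# Four adjacent /26 networks can be summarised as a single /24 route.
subnets = [ipaddress.ip_network(f"192.0.2.{start}/26")
           for start in (0, 64, 128, 192)]
summary = list(ipaddress.collapse_addresses(subnets))
print(summary, network.num_addresses)
```

A router outside this network only needs the one summarised entry to reach all 256 addresses, which is what makes hierarchical addressing scale.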

Layer 4: The Transport Layer

The transport layer allows communicating hosts to establish an ongoing connection between them. Layer 4 protocols may detect missing packets and compensate by retransmitting them, but not all protocols do so: most obviously, TCP does provide reliable transmission but UDP doesn't. Providing reliable transmission incurs an overhead, and some data is obsolete once it has been even slightly delayed (e.g. data for a live phone call or video conference) so there are cases where unreliable transmission is desirable.

Layer 5: The Session Layer

The OSI model allowed for a fifth layer that provides the mechanism for creating, maintaining and destroying a semi-permanent session between end-user applications. For example, it might make it possible to checkpoint and restore communication sessions, or bring several streams from different sources into sync. In practice, although there are protocols that provide features of this type, layer 5 is rarely referred to as a general concept.

Layer 6: The Presentation Layer

Layer 6 is the layer at which data structures that have meaning to the application are mapped into a stream of bytes, the details of which need not concern the lower layers. In theory, this relieves the application layer of having to worry about the differences between one computer platform and another, e.g. a computer that uses ASCII to encode its text files communicating with one that uses EBCDIC. In practice, protocols rarely bother to differentiate this layer from the highest layer (the application layer), treating the two combined as one layer.

Layer 7: The Application Layer

The application layer covers the protocols that describe application-specific details of communication. FTP, HTTP and SMTP are all application-layer protocols.

Switching at different layers

The distinction between the aspects of communication that are constrained by each protocol layer may at first seem unimportant or arbitrary. This is true in the case of the most trivial network, consisting of just two computers with a single cable connecting them. However, as the number of nodes on the network increases, it gets more and more useful to clearly distinguish the responsibilities of each layer.

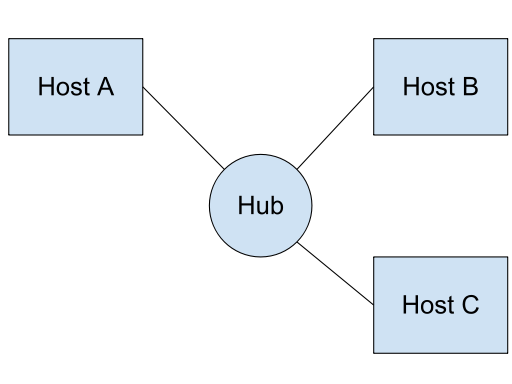

Two computers can share a single physical cable between them, but if we want to add a third computer to this micro-network, how do we connect it? Do we attempt to share the same physical cable by cutting it and splicing in a branch to the cable? This might work in theory, but would be inflexible in practice: apart from the time taken to cut and splice the cable, it would be very hard to add a node to the network without disrupting the network for the existing network users. A more maintainable solution is to plug each computer into a common hub. The hub will have network sockets on it that our network cables can plug into, and the circuitry within the hub will ensure that as soon as a cable is plugged in it will be able to send and receive signals with any other cables already connected.

The hub is a purely physical layer device. It doesn't know the meaning of any of the signals it transmits, nor does it make any decisions about which signals should go where or whether data is corrupt. Every signal on every cable is copied to all the other cables.

An alternative to using a physical-layer hub is to use a layer 2 switch to connect the hosts. Unlike a hub, a switch attempts to process the data it receives so as to understand something about the packets being transferred. A switch will only parse the layer 2 content of a packet, treating all the higher layer data as a blob of data that can be transferred without understanding it. The advantage of parsing the layer 2 wrapper is that this contains the source and destination addresses of the packet. If the switch knows which direction to send the packet (based on the destination address and its knowledge of the network) it can send the packet to only one link on the network, which saves bandwidth. If the switch doesn't know where to send the packet, it sends it to all interfaces other than the one on which it received the packet: this is called flooding.

Using switches in place of hubs saves bandwidth (by only sending packets on links that need to receive them), the only disadvantage being that switches are more complex devices and may cost more or require additional configuration. In practice, simple switches don't require any configuration and have long since become as cheap as hubs (or even cheaper, now that there is little demand for physical layer hubs).

This discussion has glossed over the detail of how exactly the switch knows where to send a particular packet. When a switch is first connected to the network, it doesn't know anything about the network. Without any knowledge, it has to flood every packet it receives onto every port, behaving in effectively the same way as a hub would. However, the switch can learn from each packet it sees, and this means it can make better decisions. For example, if a switch receives a packet with source address A and destination B on interface 2, it can conclude that source address A is reachable via interface 2. Next time it receives a packet with destination address A (whether or not it comes from address B) it can forward it straight to interface 2, saving bandwidth on the other links.

Inside every switch there is therefore a table mapping layer 2 addresses to port numbers. The size of this table is limited by the available memory and by the speed at which addresses can be looked up (packets must be looked up very quickly in order to be forwarded on without too much delay). The specialist hardware that enables this fast lookup is very expensive, so in practice if you want your switch to have more layer 2 address capacity you have to be prepared to pay extra for it. Home network switches might be able to store a few thousand addresses, while high-end data center switches can cope with tens or hundreds of thousands.

No matter how much you spend on your switch, it's never going to be able to cope with an entry in its lookup table for every one of the billions of hosts on the internet. The only sensible way to deal with this is to break down the network into sections, and store information about it a section at a time. This is the way that routing (which takes place at layer 3) differs from switching (taking place at layer 2).

Bridges


Bridge

“A device used to connect two separate Ethernet networks into one extended Ethernet. Bridges only forward packets between networks that are destined for the other network. Term used by Novell to denote a computer that accepts packets at the network layer and forwards them to another network.”

Why Use Bridges?

Bridges are important in networks that are divided into many parts that are geographically remote from one another. Something is required to join these parts so that they form a single network. Take a divided LAN as an example: without some means of joining the separate parts, an enterprise may be limited in its growth potential. The bridge is one of the tools for joining these LANs.

Second, a LAN (for example Ethernet) is limited in its transmission distance. Using bridges as repeaters removes this limit, so that a geographically extensive network can be built across a building or campus.

Third, the network administrator can use bridges to control the amount of traffic sent across expensive network media.

Fourth, the bridge is a plug-and-play device, so there is no need to configure it. If a machine is removed from the network, the network administrator does not need to update the bridge's configuration, because bridges are self-configuring. This also makes data transfer between the LANs easy to set up.

The MAC Bridge

Bridges are used to connect LANs, so they use the destination MAC address to decide how to forward traffic between LANs. Bridges push network-layer functions such as route discovery and forwarding down to the data link layer. A bridge has no conventional network layer.

Bridges cannot maintain the integrity of data transmission when errors occur. For example, if a frame is corrupted and is not transmitted properly, the bridge will not send any acknowledgement asking for that frame to be retransmitted. If the bridge becomes congested, frames may be discarded to relieve the congestion. On the other hand, bridges are easy to implement and need no configuration.

Types of Bridges

- Transparent Basic Bridge

- Source Routing Bridge

- Transparent Learning Bridge

- Transparent Spanning Tree Bridge

The Transparent Basic Bridge

The simplest type of bridge is the transparent basic bridge. It stores the traffic until it can transmit it to the next network. The amount of time the data is stored is very brief. Traffic is sent to all ports except the port from which the bridge received the data. No conversion of traffic is performed by the bridge. In this regard, the bridge is similar to a repeater.

Source Routing Bridge

In source routing, the route through the interconnected LANs is determined by the source (originator) of the traffic, hence the name source routing bridge. The routing information field (RIF) in the LAN frame header contains the route that the frame is to follow through the network.

The routing information must identify the intermediate nodes that are required to receive and send the frame. For this reason, source routing requires user traffic to follow the path determined by the routing information field.

Source routing frames differ from other bridge frames because the source routing information must be contained within the frame. The architecture of source routing bridges is otherwise similar to that of other bridges: both use a MAC relay entity at the LAN node, and interfaces are provided through the MAC relay entity and the LLC.

The figure shown above shows the functional architecture of a source routing bridge. Two primitives are invoked between the MAC entities and the LLC: the first is M_UNITDATA.request and the second is M_UNITDATA.indication.

The parameters of these primitives must provide the information needed to create the frame (frame control), the MAC addresses, and the routing information used to forward the traffic through the LAN. The frame check sequence value should be included if frame check sequence operations are to be performed. The primitives also contain a user priority parameter, a data parameter, and a service class parameter. The user priority and service class parameters are used only with token ring and not with other LANs such as Ethernet or token bus.

The Transparent Learning Bridge

A transparent learning bridge works out where stations are located from the source and destination addresses of the frames it sees. When a frame is received, the bridge checks its source address and its destination address. If the destination address is not found in the routing table, the frame is sent to every LAN except the one from which it came. The source address is stored in the routing table together with the port on which the frame arrived. If a later frame arrives with that address as its destination, it is forwarded to that port only.

Transparent bridges do not allow loops in the physical topology of the network; this is a restriction of the transparent learning bridge. The bridge is run by a bridge processor, which is responsible for routing traffic across its ports. The processor determines the output port for a destination MAC address by consulting a routing database. When a frame arrives, the processor looks up in the database the output port on which the frame should be relayed. If the destination address is not in the database, the processor broadcasts the frame onto all ports except the port on which the frame arrived. The bridge processor also stores the frame's source address, because this source address may be the destination address of a later incoming frame.

The bridge processes an incoming frame as shown above in the figure. The bridge checks the source and destination addresses of the incoming frame. In this case the source address is ‘B’ and the destination address is ‘A’. On consulting its routing table, the bridge finds that the destination address is not in the database, so it broadcasts the frame to all outgoing ports except the port on which it arrived. After forwarding the frame it checks whether it already knows the SA (source address). If the SA is present in the database, the bridge refreshes the entry's timer, which indicates that the address is still ‘timely and valid’. In this example the bridge does not know the SA ‘B’, so it records in the database that ‘B’ is an active station on the LAN and that, from the bridge's point of view, ‘B’ can be reached through port 1.

As shown in the figure, a frame arrives at the bridge on port 3 with DA (destination address) ‘B’ and SA (source address) ‘C’. The bridge consults its database to check whether the destination address is present, and finds that ‘B’ can be reached through port 1. It learned this from the previous operation, in which a frame arrived on port 1 with SA = ‘B’ and DA = ‘A’: the source address of that frame is the destination address of this one, so the bridge knows the exact destination port. This time the bridge does not broadcast the frame, because it knows the DA. It forwards the frame to port 1 and not to port 2. The bridge also stores in its routing table that the SA ‘C’ can be reached through port 3, which will be useful for later frames. The learning bridge assumes that a frame received on a port has already been properly delivered by the other bridges and LANs on that side, so it does not send the frame back out of the port on which it arrived. The learning bridge is based entirely on trust.

In some situations a bridge will not forward a frame to any port. This total filtering is shown in figure 5. A frame arrives at the bridge on port 1 with SA = ‘D’ and DA = ‘B’. The bridge consults its database and finds that DA = ‘B’ is reached through port 1. Because the frame arrived on that same port, the bridge does not forward it to port 2 or port 3, nor does it send it back out of port 1. The bridge also checks whether the SA is present in the database. SA = ‘D’ is not present, so the bridge adds an entry for it and attaches a timer to the entry.

Multicasting and broadcasting are permitted in a learning bridge. As shown in the figure, a frame arrives on port 1 with DA = ‘ALL’ (all 1s in the destination address field) and SA = ‘D’. The bridge does not add a new entry to its routing table, as ‘D’ is already present in the database. In this example the frame is sent to ports 2 and 3.

Figure 6 shows examples of how bridges forward and filter incoming frames. A frame transmitted from station ‘A’ to station ‘B’ is not forwarded by bridge 1, because the bridge assumes that the frame has already been delivered from ‘A’ to ‘B’ over their shared broadcast LAN. Traffic sent by station ‘A’ to station ‘C’ must be forwarded by bridge 1 and discarded by bridge 2. Traffic sent by station ‘A’ to station ‘D’ must be forwarded by both bridges.

The following flow chart shows the overall working logic of a learning bridge. When the bridge receives an incoming frame on port Z, it looks up the frame's destination MAC address in the database. If the DA is not present, the bridge broadcasts the frame to all outgoing ports except the arrival port. If the DA is present and its port is the port on which the frame arrived, the bridge discards the frame; if the DA is found on a different port, the bridge forwards the frame to that port. The bridge then checks whether the SA is present in the database. If it is, the bridge refreshes the entry's timer; otherwise it adds the SA to the database.
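The forwarding and learning logic described in this section can be sketched in a few lines of Python. This is an illustrative model, not a real bridge implementation: the class name `LearningBridge`, the `receive` method, the `BROADCAST` marker and the 300 second ageing limit are all assumptions made for the example.

```python
import time

BROADCAST = "ALL"  # stands in for the all-1s destination address


class LearningBridge:
    """Toy model of a transparent learning bridge (hypothetical API)."""

    def __init__(self, ports, max_age=300.0):
        self.ports = set(ports)
        self.max_age = max_age      # assumed ageing limit, in seconds
        self.table = {}             # address -> (port, time last seen)

    def receive(self, src, dst, in_port, now=None):
        """Return the list of ports the frame is sent out of."""
        now = time.monotonic() if now is None else now

        # Look up the destination, ignoring entries whose timer expired.
        entry = self.table.get(dst)
        if entry is not None and now - entry[1] > self.max_age:
            del self.table[dst]
            entry = None

        if dst == BROADCAST or entry is None:
            # Unknown or broadcast: flood to all ports except the arrival port.
            out_ports = sorted(self.ports - {in_port})
        elif entry[0] == in_port:
            # Destination is on the arrival side: filter (discard) the frame.
            out_ports = []
        else:
            out_ports = [entry[0]]

        # Learn the source address, or refresh its timer if already known.
        self.table[src] = (in_port, now)
        return out_ports


bridge = LearningBridge(ports=[1, 2, 3])
print(bridge.receive("B", "A", in_port=1, now=0))        # A unknown: flooded
print(bridge.receive("C", "B", in_port=3, now=1))        # B was learned on port 1
print(bridge.receive("D", "B", in_port=1, now=2))        # same side: filtered
print(bridge.receive("D", BROADCAST, in_port=1, now=3))  # broadcast: flooded
```

The four calls replay the frames from the worked examples in this section: the first is flooded, the second goes only to port 1, the third is filtered, and the broadcast is sent to ports 2 and 3.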

The Transparent Spanning Tree Bridge

The last type of bridge is the transparent spanning tree bridge. These bridges use a subset of the full topology to create loop-free operation. The following table shows the logic of the transparent spanning tree bridge. The bridge checks a received frame in the following manner. The destination address of the frame is looked up in the routing table in the database. The bridge needs more information here than a learning bridge does, so the state of each bridge port is also stored in the database. This is known as port state information, and it helps decide whether a port can be used for this destination address. A port can be in a blocking state, to meet the requirements of spanning tree operation, or in a forwarding state. If the port is in the forwarding state, the frame is routed across the port. A port can also have other states: it may be “disabled” for maintenance reasons, or it may be temporarily unavailable while the bridge's databases are being changed as a result of a change in the network.

Spanning Tree Protocol

Some sites use two or more bridges in parallel between a pair of LANs to increase the reliability of the network, as shown in figure 7. This arrangement introduces a looping problem in the network.

The figure above shows how a frame F with an unknown destination is handled. Neither bridge knows the destination address, so each of the two bridges broadcasts the frame. In this example that means copying it to LAN 2, producing copies F1 (from bridge 1) and F2 (from bridge 2). Bridge 1 then sees F2, a frame with an unknown destination address, and copies it to LAN 1, generating another frame F3, which is not shown in the figure. In the same way, bridge 2 copies F1 to LAN 1, generating F4, which is also not shown. Bridge 1 now forwards F4 and bridge 2 copies F3. This cycle goes on indefinitely, and this is the looping problem.

The solution to this looping problem is for the bridges to communicate with each other and overlay the actual topology with a spanning tree that reaches every LAN in the network. In the spanning tree, some potential connections between LANs are ignored in order to construct a loop-free topology. For example, in the figure shown below there are 10 bridges connecting 9 different LANs. This configuration can be abstracted into the graph shown in figure 9, in which each LAN is shown as a node and each bridge connecting two LANs is shown as an arc. The graph can be reduced to a spanning tree by dropping the arcs shown as dotted lines. There is then only a single path from each LAN to every other LAN. In this way the spanning tree solves the looping problem: there is exactly one path from each source to each destination, and loops are removed entirely.

To construct the spanning tree, the spanning tree algorithm proceeds as follows:

- First, select the root bridge. The root bridge is the bridge with the lowest bridge identifier, which is built from a configurable priority and the bridge's MAC address (a number provided by the manufacturer). All ports on the root bridge are designated ports. In our example in the figure the root bridge is ‘A’, and its ports on LAN 1 and LAN 2 are the designated ports.

- Then select a root port for each non-root bridge. The root port of a non-root bridge is the port with the lowest path cost to the root bridge. In our example the incoming port of bridge ‘B’ is on the lowest-cost path. The same logic applies to the other bridges.

- Select a designated port on each segment. The designated port is the port on that segment with the lowest path cost to the root bridge. In our example the outgoing port of bridge ‘B’ is a designated port, as it has the lowest cost to the root bridge. The same logic applies to the other bridges.

- Once the spanning tree algorithm has determined the lowest-cost spanning tree, it enables all root ports and designated ports and disables all other ports.

- The spanning tree algorithm continues to run during normal operation.
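The steps above can be sketched as a small centralised computation. Real bridges reach the same result in a distributed way by exchanging configuration messages; this sketch skips that exchange. It also makes simplifying assumptions that are not in the text: every LAN crossing costs 1, ties are broken by the lower bridge identifier, the topology is connected, and the function name `spanning_tree` and the data layout are hypothetical.

```python
from collections import deque


def spanning_tree(links):
    """links maps a bridge id to the set of LAN names it is attached to.

    Returns (root, forwarding, blocked), where the last two are sets of
    (bridge, lan) pairs, each pair standing for one bridge port.
    Assumes the topology is connected and every LAN crossing costs 1.
    """
    # Step 1: the root bridge is the one with the lowest identifier.
    root = min(links)

    # Root path cost of every bridge, found by breadth-first search.
    cost = {root: 0}
    queue = deque([root])
    while queue:
        bridge = queue.popleft()
        for other in links:
            if other not in cost and links[bridge] & links[other]:
                cost[other] = cost[bridge] + 1
                queue.append(other)

    # Step 3: on each LAN, the attached bridge closest to the root
    # provides the designated port (ties go to the lower bridge id).
    lans = set().union(*links.values())
    designated = {
        lan: min((b for b in links if lan in links[b]),
                 key=lambda b: (cost[b], b))
        for lan in lans
    }

    # Step 2: each non-root bridge picks as root port the LAN that
    # leads towards the root at the lowest cost.
    root_port = {
        b: min(links[b],
               key=lambda lan: (cost[designated[lan]], designated[lan], lan))
        for b in links if b != root
    }

    # Step 4: root ports and designated ports forward, the rest block.
    forwarding = {(b, lan) for lan, b in designated.items()}
    forwarding |= {(b, lan) for b, lan in root_port.items()}
    all_ports = {(b, lan) for b in links for lan in links[b]}
    return root, forwarding, all_ports - forwarding


# Two bridges in parallel between the same pair of LANs.
root, forwarding, blocked = spanning_tree({
    1: {"LAN1", "LAN2"},
    2: {"LAN1", "LAN2"},
})
print(root, sorted(blocked))
```

In the parallel-bridge case, bridge 1 becomes the root and one port of bridge 2 is blocked, which is enough to break the loop while leaving both LANs reachable.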

The advantages of bridging over routing are as follows:

- Transparent bridges are plug and play: they are self-learning and do not require any configuration. Routers, by contrast, require a network address to be defined for each interface, and these addresses must be unique.

- Bridging has less overhead for handling packets as compared to routing.

- Bridging is protocol independent while routing is protocol dependent.

- A bridge will forward all LAN protocols, while a router can only route packets of the network-layer protocols it supports.

Packet formats

The Configuration Message:

The following table shows the format of the configuration message, which is also called a bridge protocol data unit (BPDU). The following parameters are set to zero:

- The protocol identifier,

- Version identifier,

- Message type.

| Octets | Parameters |
|---|---|
| 2 | Protocol Identifier |
| 1 | Protocol Version |
| 1 | Type of BPDU |
| 1 | Flags |
| 8 | Root identifier |
| 4 | Cost to root |
| 8 | Bridge identifier |
| 2 | Port identifier |
| 2 | Message age |
| 2 | Max age |
| 2 | Hello time |
| 2 | Forward Delay |

The root identifier field contains the identity of the root bridge, including a 2-octet field that is used to decide the priority of the root bridge and the designated bridge. The root path cost field indicates the total cost from the transmitting bridge to the bridge listed in the root identifier field.

The flags field in the bridge message contains a topology change flag, which is used to inform non-root bridges that they should update the station entries in their cache. The field also carries a topology change acknowledgment bit. Once this bit is received, a bridge no longer has to inform its parent bridge that a topology change has occurred: the parent bridge will perform this task.

The bridge identifier and port identifier fields indicate the priority and ID of the bridge that is sending the configuration message, and the port it was sent from. The message age field gives a time in units of 1/256 of a second. The hello time field, also in units of 1/256 of a second, defines the interval between configuration messages sent by the root bridge. The forward delay field, again in units of 1/256 of a second, is the time for which a port should stay in an intermediate state, such as listening or learning, while moving from the blocking state to the forwarding state.
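The field layout in the table can be expressed with Python's standard `struct` module. This is a sketch under stated assumptions: fields are taken to be in network (big-endian) byte order, each 8-octet identifier is split into a 2-octet priority followed by a 6-octet MAC address, and the function name `parse_config_bpdu`, the dictionary keys and the sample values are all hypothetical.

```python
import struct

# One entry per row of the table: 2+1+1+1+8+4+8+2+2+2+2+2 = 35 octets.
CONFIG_BPDU = struct.Struct(">HBBB8sI8sHHHHH")


def split_identifier(identifier):
    """Split an 8-octet identifier into (priority, MAC address string)."""
    priority = int.from_bytes(identifier[:2], "big")
    mac = ":".join(f"{octet:02x}" for octet in identifier[2:])
    return priority, mac


def parse_config_bpdu(data):
    """Decode the first 35 octets of data as a configuration message."""
    (protocol_id, version, bpdu_type, flags, root_id, root_cost,
     bridge_id, port_id, message_age, max_age, hello_time,
     forward_delay) = CONFIG_BPDU.unpack(data[:CONFIG_BPDU.size])
    return {
        "protocol_id": protocol_id,
        "version": version,
        "type": bpdu_type,
        "flags": flags,
        "root": split_identifier(root_id),
        "root_path_cost": root_cost,
        "bridge": split_identifier(bridge_id),
        "port_id": port_id,
        # Timer fields are carried in units of 1/256 of a second.
        "message_age_s": message_age / 256,
        "max_age_s": max_age / 256,
        "hello_time_s": hello_time / 256,
        "forward_delay_s": forward_delay / 256,
    }


# Build a made-up message and decode it again.
root = (32768).to_bytes(2, "big") + bytes.fromhex("001122334455")
bridge = (32768).to_bytes(2, "big") + bytes.fromhex("00aabbccddee")
raw = CONFIG_BPDU.pack(0, 0, 0, 0, root, 4, bridge, 0x8001,
                       1 * 256, 20 * 256, 2 * 256, 15 * 256)
print(parse_config_bpdu(raw))
```

Dividing each timer field by 256 converts it to seconds, so a hello time field of 512 corresponds to a two second interval between configuration messages.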

Port states

Each port on a bridge has a state that controls how it handles the packets it receives. The port can forward frames as normal, or can discard the frames. In addition, a port may choose to learn MAC addresses from frames it discards (in addition to learning from frames it forwards). It's theoretically possible that a port could forward a packet without learning the MAC address from it, but this isn't used in practice. The possible combinations are given the following names:

- Discarding: no forwarding or learning takes place

- Learning: the port learns MAC addresses, but discards the frames

- Forwarding: the port both learns addresses and forwards frames
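The three states can be modelled as two independent flags, one for learning and one for forwarding. The following is a minimal sketch; the `PortState` class and its attribute names are made up for illustration.

```python
from enum import Enum


class PortState(Enum):
    """Each state is a (learns, forwards) pair of flags."""

    DISCARDING = (False, False)
    LEARNING = (True, False)
    FORWARDING = (True, True)

    def __init__(self, learns, forwards):
        self.learns = learns
        self.forwards = forwards


for state in PortState:
    print(state.name, "learns:", state.learns, "forwards:", state.forwards)
```

The fourth combination, forwarding without learning, is deliberately left out, matching the observation above that it is not used in practice.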

Remote bridges

Bridges can be used to connect two or more distant LANs. For example, a company may have departments at different locations, each with its own LAN. The whole network should be connected so that it acts like one large LAN. This can be achieved by placing a bridge on each LAN and connecting the bridges with lines (for example, lines provided by the telephone company). In figure 10, three LANs are connected using remote bridges. As the figure shows, a number of LANs can be joined using remote bridges.

Rapid Spanning Tree Protocol (RSTP)

In 2001 the IEEE introduced Rapid Spanning Tree Protocol (as IEEE 802.1w), an extension of the original STP that supports faster convergence after a topology change. The new protocol requires a new BPDU format, but supports falling back to the old format if a plain STP bridge is detected. This means that the original STP is no longer needed, and it was obsoleted in the 2004 release of the 802.1D standard.

Routers

Basics of Routing

What Is Routing?

Routing is the process of moving information across an internetwork from source to destination. At least one intermediate node must be encountered along the way. Routing and bridging look similar, but the primary difference between the two is that bridging occurs at Layer 2 (the data link layer) of the OSI reference model, whereas routing occurs at Layer 3 (the network layer). Another important difference is that layer 3 addresses are allocated hierarchically, so it is possible for a router to have a single rule allowing it to route to an entire address range of thousands or millions of addresses. This is an important advantage in dealing with the scale of the internet, where hosts are too numerous (and are added and removed too quickly) for any router to know about all hosts on the internet.

Routing of information at the network layer is performed by routers, which are the heart of the network layer. We look first at the architecture of a router and the processing of datagrams in routers, and then at routing algorithms.
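To illustrate how a single rule can cover a whole address range, here is a minimal sketch of a forwarding table lookup. It assumes IPv4 and the common longest-prefix-match rule, which this section does not itself describe; the function name `lookup`, the next-hop labels and the addresses are hypothetical.

```python
import ipaddress


def lookup(table, destination):
    """Return the next hop of the most specific matching rule, or None."""
    address = ipaddress.ip_address(destination)
    matches = [(network, hop) for network, hop in table if address in network]
    if not matches:
        return None
    # The rule with the longest prefix is the most specific one.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# Three rules cover every possible IPv4 destination.
table = [
    (ipaddress.ip_network("0.0.0.0/0"), "upstream"),   # default route
    (ipaddress.ip_network("10.0.0.0/8"), "core"),
    (ipaddress.ip_network("10.1.0.0/16"), "branch"),
]

print(lookup(table, "10.1.2.3"))
print(lookup(table, "10.9.9.9"))
print(lookup(table, "203.0.113.5"))
```

The `10.0.0.0/8` rule alone covers more than sixteen million addresses, whereas a layer 2 table would need one entry per host.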

The Architecture of a router

A router will include the following components:

Input ports

An input port performs several functions. The line termination unit implements the physical layer, and data link processing performs protocol decapsulation. The input port also performs the lookup and forwarding function, so that a packet forwarded into the switching fabric of the router emerges at the appropriate output port. Control packets, such as those carrying routing protocol information for RIP or OSPF, are forwarded to the routing processor. The input port also queues packets when the output line is busy.

Output ports

An output port forwards the packets coming from the switching fabric to the corresponding output line. It performs the physical and data link functions of the input port in reverse. The output port also queues packets arriving from the switching fabric.

Routing processor

The routing processor executes the routing protocols. It maintains the routing information and the forwarding table, and it also performs network management functions within the router.

Switching Fabric

The switching fabric moves packets from input ports to the appropriate output ports. Switching can be accomplished in a number of ways:

- Switching via memory: In the simplest routers, switching between input and output ports is done under the direct control of the CPU (the routing processor). When a packet arrives at an input port, the routing processor is notified by an interrupt. It copies the incoming packet from the input buffer into processor memory, extracts the destination address, looks it up in the forwarding table, and copies the packet to the output port’s buffer. In modern routers, the destination lookup and the storing (switching) of the packet into the appropriate memory location are performed by processors on the input line cards.

- Switching via bus: The input port transfers the packet directly to the output port over a shared bus, without intervention by the routing processor. Because the bus is shared, only one packet can cross it at a time; if the bus is busy, incoming packets have to wait in a queue. The bandwidth of the router is therefore limited by the bus, since every packet must cross it. Examples of bus switching include the Cisco 1900 and 3Com’s CoreBuilder 5000.

- Switching via interconnection network: To overcome the bandwidth limit of a shared bus, a crossbar switching network is used. In a crossbar, input and output ports are connected by horizontal and vertical buses, so N input ports and N output ports require 2N buses. To transfer a packet from an input port to the corresponding output port, the packet travels along the horizontal bus until it intersects the vertical bus that leads to the destination port. If the vertical bus is free, the packet is transferred. If it is busy, because another input line is transferring a packet to the same destination port, the packet is blocked and queued at its input port.

Processing the IP datagram

Packets arriving at an input port are stored in a queue to wait for processing. Processing begins with the IP header: the header checksum is verified to detect transmission errors. If there is no error, the destination IP address field is checked. If the packet is addressed to the local host, the data field is passed to the module for the protocol named in the header (UDP, TCP, ICMP and so on).

If the destination IP address is not that of the local host, the router looks the address up in its routing table, which gives the address of the next router to which the packet should be forwarded. The output operations are then performed on the outgoing packet: its TTL field is decreased by one and the header checksum is recalculated. The packet is then forwarded to the output port that leads towards the destination. If the output port is busy, the packet has to wait in the output queue.
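The checksum and TTL handling can be sketched in Python as below. This is a minimal illustration rather than a full forwarding path: it assumes a 20-byte IPv4 header without options, uses the one's-complement Internet checksum, and drops packets whose TTL would reach zero (a standard router behaviour that is assumed here, not stated above). The sample header bytes and function names are illustrative.

```python
import struct
from typing import Optional

# Sample 20-byte IPv4 header (no options) with a valid checksum, TTL = 64.
SAMPLE_HEADER = bytes.fromhex("4500007300004000" "4011b861c0a80001" "c0a800c7")


def internet_checksum(header: bytes) -> int:
    """One's-complement sum of the 16-bit words, then complemented."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def forward(header: bytes) -> Optional[bytes]:
    """Verify the header, decrement TTL and recompute the checksum.

    Returns the updated header, or None if the packet is dropped.
    """
    # A valid header, checksum field included, sums to zero after complement.
    if internet_checksum(header) != 0:
        return None
    ttl = header[8]
    if ttl <= 1:
        return None  # assumption: expired packets are simply dropped here
    updated = bytearray(header)
    updated[8] = ttl - 1
    updated[10:12] = b"\x00\x00"  # zero the checksum field before recomputing
    struct.pack_into("!H", updated, 10, internet_checksum(bytes(updated)))
    return bytes(updated)
```

Because the TTL changes at every hop, the checksum has to be recomputed at every router, which is why both steps appear together in the output operations.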

The packet scheduler at the output port chooses which queued packet to transmit next. The selection may be first-come-first-served (FCFS), by priority, or by weighted fair queuing (WFQ), which shares the outgoing link “fairly” among the different end-to-end connections that have packets queued for transmission. Packet scheduling plays a crucial role in providing quality of service. If the incoming datagram carries routing information, the packet is passed to the routing protocol, which modifies the routing table accordingly.

Routing Algorithms

We now turn to routing algorithms. First, note that two kinds of protocol are involved in transferring information from source to destination: routed protocols and routing protocols.

Routed vs Routing Protocol

Routed protocols, such as IP or IPX, carry user traffic between routers. A routed packet contains enough information to enable a router to direct the traffic. A routed protocol defines the fields of the packet and how those fields are used.

Routed protocols include:

- Internet Protocol

- Telnet

- Remote Procedure Call (RPC)

- SNMP

- SMTP

- Novell IPX

- Open Systems Interconnection (OSI) networking protocol

- DECnet

- AppleTalk

- Banyan VINES

- Xerox Network Systems (XNS)

Routing protocols allow routers to gather and share routing information in order to maintain and update their routing tables, which are in turn used to find the most efficient route to a destination.

Routing protocols include:

- Routing Information Protocol (RIP and RIP II)

- Open Shortest Path First (OSPF)

- Intermediate System to Intermediate System (IS-IS)

- Interior Gateway Routing Protocol (IGRP)

- Cisco's Enhanced Interior Gateway Routing Protocol (EIGRP)

- Border Gateway Protocol (BGP)

Design Goals of Routing Algorithms

Routing algorithms have one or more of the following design goals:

- Optimality: This is the capability of the routing protocol to find the best route. Routing metrics, such as the number of hops or the delay, are used to compare routes. Paths with fewer hops or lower delay should be preferred as the best route.

- Simplicity and low overhead: Routing algorithms are also designed to be as simple as possible. The routing algorithm must offer its functionality efficiently, with a minimum of software overhead. Efficiency is particularly important when the software implementing the routing algorithm must run on a computer with limited physical resources, or work with large volumes of routes.

- Robustness and stability: A routing protocol should perform correctly in the face of unusual or unforeseen circumstances, such as hardware failures, high load conditions, and incorrect implementations. This property of routing protocols is known as robustness. The best routing algorithms are often those that have withstood the test of time and that have proven stable under a variety of network conditions.

- Rapid convergence: Routing algorithms must converge rapidly. Convergence is the process by which all routers come to agree on optimal routes. When a network event causes a route to go down or become available, or the cost of a link to change, routers distribute routing update messages. These cause the other routers to recalculate their optimal routes, until eventually all routers in the network agree on them.

- Flexibility: Routing algorithms should also be flexible. They should quickly and accurately adapt to a variety of network circumstances.

Classification of routing algorithms

Routing algorithms are mainly of two types:

- Static routing: In static routing the route followed by a packet always remains the same, because the network administrator computes the routing table in advance. Static routing is used when routes change very slowly.

  - Advantages:

    - Predictability: Because the network administrator computes the routing table in advance, the path a packet takes between two destinations is always known precisely, and can be controlled exactly.

    - No overhead on routers or network links: The routers do not need to send periodic updates containing reachability information, so the overhead on routers and network links is low.

    - Simplicity: Configuration for small networks is easy.

  - Disadvantages:

    - Lack of scalability: Computing static routes for a small number of hosts and routers is easy, but for larger networks it becomes cumbersome and error-prone.

    - Manual reconfiguration: If a network segment moves or is added, you have to update the configuration of every router on the network to implement the change. If you miss one, then in the best case the segments attached to that router will be unable to reach the moved or added segment. In the worst case, you'll create a routing loop that affects many routers.

    - Inability to adapt to failures: If a link fails on a network using static routing, then even if an alternative link is available that could serve as a backup, the routers won't know to use it.

- Dynamic routing: Routers communicate with each other through a routing protocol and build their routing tables from what they learn. Each router then forwards packets to the next hop, which is nearer to the destination. With dynamic routing, routes can change more quickly: periodic updates are sent on the network, so that if a link cost changes, all the routers on the network learn of it and change their routing tables accordingly.

  - Advantages:

    - Scalability and adaptability: A dynamically routed network can grow more quickly and grow larger without becoming unmanageable. It is able to adapt to changes in the network topology brought about by this growth.

    - Adaptation to failures in a network: In dynamic routing, routers learn about the network topology by communicating with other routers. Each router announces its presence and the routes it has available to the other routers on the network. Because of this, if you add a new router, or add an additional segment to an existing router, the other routers will hear about the addition and adjust their routing tables accordingly. Likewise, when a link or router fails, the routes through it stop being announced and the other routers adjust to use an alternative.

  - Disadvantages:

    - Increase in complexity: A router has to send periodic updates about the topology, and has to decide exactly what information to send. When it learns about the network from other routers, it has to adapt correctly to the changes, which is difficult, and it must be prepared to remove old or unusable routes, all of which adds complexity.

    - Overhead on the lines and routers: Routers periodically send packets describing the topology of the network to other routers. These packets carry no user data, only the information the routers themselves need, so they are pure overhead on the lines and routers.

Classification of Dynamic Routing Protocols

Dynamic routing protocols can be classified in two ways. The first classification is based on where a protocol is intended to be used: within your own network (interior) or between your network and someone else's (exterior). The second classification concerns the kind of information the protocol carries and the way each router decides how to fill in its routing table: link-state versus distance vector.

Link State Routing

In a link-state protocol, a router provides information about the topology of the network in its immediate vicinity, rather than about the destinations it knows how to reach. This information consists of a list of the network segments, or links, to which it is attached, and the state of those links (functioning or not functioning). It is broadcast throughout the network, so every router can build its own picture of the current state of all the links in the network. As every router sees the same information, all of these pictures should be the same. From this picture, each router computes its best path to every destination and populates its routing table with the result. The computation uses the link-state algorithm known as Dijkstra’s algorithm.

The notation and its meaning are as follows:

- $N$ denotes the set of all nodes in the graph.

- $l(i, j)$ is the link cost from node $i$ to node $j$, both of which are in $N$. If the two nodes are not directly connected, then $l(i, j) = \infty$. The most general form of the algorithm doesn't require that $l(i, j) = l(j, i)$, but for simplicity we assume that they are equal.

- $s \in N$ is the node executing the algorithm to find the shortest path to all the other nodes.

- $M$ denotes the set of nodes incorporated so far by the algorithm to find the shortest path to all the other nodes in $N$.

- $C(n)$ denotes the cost of the path from the source node $s$ to the destination node $n$.

Definition of the Algorithm

In practice each router maintains two lists, known as Tentative and Confirmed. Each of these lists contains a set of entries of the form (Destination, Cost, NextHop).

The algorithm works as follows:

1. Initialize the Confirmed list with an entry for myself; this entry has a cost of 0.

2. For the node just added to the Confirmed list in the previous step, call it node Next, select its LSP (link-state packet).

3. For each neighbor (Neighbor) of Next, calculate the cost (Cost) to reach this Neighbor as the sum of the cost from myself to Next and from Next to Neighbor.

   - If Neighbor is currently on neither the Confirmed nor the Tentative list, then add (Neighbor, Cost, NextHop) to the Tentative list, where NextHop is the direction I go to reach Next.

   - If Neighbor is currently on the Tentative list, and the Cost is less than the currently listed cost for Neighbor, then replace the current entry with (Neighbor, Cost, NextHop), where NextHop is the direction I go to reach Next.

4. If the Tentative list is empty, stop. Otherwise, pick the entry from the Tentative list with the lowest cost, move it to the Confirmed list, and return to step 2.

[Algorithm from Computer Networks: A Systems Approach, Peterson and Davie.]

Now let's look at an example. Consider the network depicted below.

The steps for building the routing table for A are as follows:

| Step | Confirmed | Tentative | Comments |

|---|---|---|---|

| 1 | (A,0,-) | | Since A is the only new member of the Confirmed list, look at its LSP. |

| 2 | (A,0,-) | (B,9,B) (C,3,C) (D,8,D) | A’s LSP says we can reach B through B at cost 9, which is better than anything else on either list; similarly for C and D. |

| 3 | (A,0,-) (C,3,C) | (B,9,B) (D,8,D) | Put the lowest-cost member of Tentative (C) onto the Confirmed list. Next, examine the LSP of the newly confirmed member (C). |

| 4 | (A,0,-) (C,3,C) | (B,9,B) (D,8,D) (E,4,C) | The cost to reach E through C is 4, so add (E,4,C) to the Tentative list. |

| 5 | (A,0,-) (C,3,C) (E,4,C) | (B,6,C) (D,6,C) | Move the lowest-cost member of Tentative (E) onto the Confirmed list and examine its LSP. The cost to reach B through E is 6, so replace (B,9,B); the cost to reach D through E is 6, so replace (D,8,D). In both cases NextHop is C, the direction A goes to reach E. |

| 6 | (A,0,-) (C,3,C) (E,4,C) (B,6,C) | (D,6,C) | Move B onto the Confirmed list. The only node remaining is D; repeating the steps leaves its distance from A, through E, at 6. |

| 7 | (A,0,-) (C,3,C) (E,4,C) (B,6,C) (D,6,C) | | Move D onto the Confirmed list. The Tentative list is now empty, so we are done: the shortest paths to all destinations are known. |
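The Tentative/Confirmed procedure can be sketched in Python as follows. The topology in `LSPS` is an assumption inferred from the costs quoted in the table (A-B 9, A-C 3, A-D 8, C-E 1, E-B 2, E-D 2), since the network figure itself is not available; the function and variable names are illustrative. NextHop is recorded as the neighbor of the computing node through which the destination is reached, as the algorithm definition specifies.

```python
# Link-state database: each node's LSP lists its neighbors and link costs.
# Assumed topology, inferred from the costs in the worked example.
LSPS = {
    "A": {"B": 9, "C": 3, "D": 8},
    "B": {"A": 9, "E": 2},
    "C": {"A": 3, "E": 1},
    "D": {"A": 8, "E": 2},
    "E": {"B": 2, "C": 1, "D": 2},
}


def forward_search(lsps, myself):
    """Build {destination: (cost, next_hop)} using Tentative/Confirmed lists."""
    confirmed = {myself: (0, None)}  # step 1: myself at cost 0
    tentative = {}
    nxt = myself
    while True:
        base_cost, base_hop = confirmed[nxt]  # step 2: Next's LSP
        for neighbor, link_cost in lsps[nxt].items():  # step 3
            if neighbor in confirmed:
                continue
            cost = base_cost + link_cost
            # Direction I go to reach Next; my direct neighbors are their own hop.
            hop = neighbor if nxt == myself else base_hop
            if neighbor not in tentative or cost < tentative[neighbor][0]:
                tentative[neighbor] = (cost, hop)
        if not tentative:  # step 4: nothing left to confirm
            return confirmed
        # Lowest cost first; ties are broken by name to keep the run repeatable.
        nxt = min(tentative, key=lambda n: (tentative[n][0], n))
        confirmed[nxt] = tentative.pop(nxt)


table_for_a = forward_search(LSPS, "A")
```

Under the assumed topology, `table_for_a` ends with B, D and E all reached through C, at costs 6, 6 and 4 respectively.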

Distance vector routing

The distance vector algorithm is iterative, asynchronous, and distributed. Each node receives some information from one or more of its directly attached neighbors, performs a calculation, and may then distribute the result of that calculation to its neighbors; hence it is distributed. It is iterative because this process of exchanging information continues until no more information is exchanged between the neighbors. It is asynchronous because the nodes do not need to operate in lockstep with one another.

Let $d_x(y)$ be the cost of the least-cost path from node $x$ to node $y$. The least costs are related by the Bellman-Ford equation:

$$d_x(y) = \min_v \{ C(x,v) + d_v(y) \}$$

where $\min_v$ is taken over all of $x$’s neighbors. If we travel from $x$ to a neighbor $v$ and then take the shortest path from $v$ to $y$, the cost of the path is $C(x,v) + d_v(y)$. Since we must begin by traveling to some neighbor $v$, the least cost from $x$ to $y$ is the minimum of $C(x,v) + d_v(y)$ taken over all neighbors $v$.

In the distance vector algorithm each node $x$ maintains the following routing data:

- The cost of the link to each directly attached neighbor: for each neighbor $v$ it knows $C(x,v)$.

- Its own distance vector $D_x = [D_x(y) : y \in N]$, which is its routing table: $x$’s estimate of its cost to every destination $y$ in $N$.

- The distance vector of each of its neighbors, i.e. $D_v = [D_v(y) : y \in N]$ for each neighbor $v$.

In this distributed, asynchronous algorithm each node sends a copy of its distance vector to each of its neighbors from time to time. When a node $x$ receives a neighbor’s distance vector, it saves it and updates its own distance vector as:

$$D_x(y) = \min_v \{ C(x,v) + D_v(y) \} \quad \text{for each node } y \in N$$

If this update changes node $x$'s distance vector, $x$ sends the new vector to its neighbors, which perform the same actions in turn. The process continues until no node has any new information to send.

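The distance vector algorithm [from Kurose] is as follows. At each node $x$:

- Initialization: set $D_x(y) = C(x,y)$ for every destination $y$ in $N$ (infinity if $y$ is not a neighbor), and send the distance vector $D_x$ to each neighbor.

- Loop forever: wait until a link cost to some neighbor changes or a distance vector is received from some neighbor; recompute $D_x(y) = \min_v \{ C(x,v) + D_v(y) \}$ for each $y$ in $N$; if $D_x(y)$ changed for any destination $y$, send the new distance vector $D_x$ to all neighbors.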
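As a rough illustration of the per-node update, the Python sketch below runs the Bellman-Ford update on a small hypothetical three-node network (nodes `x`, `y`, `z` with made-up link costs). For simplicity it assumes synchronous rounds, in which every node uses the vectors its neighbors held at the end of the previous round; the real algorithm is asynchronous.

```python
import math

# Hypothetical topology: LINKS[x][v] is the link cost C(x, v).
LINKS = {
    "x": {"y": 2, "z": 7},
    "y": {"x": 2, "z": 1},
    "z": {"x": 7, "y": 1},
}


def run_distance_vector(links):
    """Iterate the distance vector update until no vector changes."""
    nodes = list(links)
    # Initialization: D_x(y) = C(x, y) for neighbors, infinity otherwise.
    vectors = {
        x: {y: 0 if x == y else links[x].get(y, math.inf) for y in nodes}
        for x in nodes
    }
    changed = True
    while changed:
        changed = False
        # Snapshot of what every node advertised in the previous round.
        received = {x: dict(vector) for x, vector in vectors.items()}
        for x in nodes:
            for y in nodes:
                if x == y:
                    continue
                # D_x(y) = min over neighbors v of C(x, v) + D_v(y)
                best = min(links[x][v] + received[v][y] for v in links[x])
                if best != vectors[x][y]:
                    vectors[x][y] = best
                    changed = True
    return vectors


final_vectors = run_distance_vector(LINKS)
```

In this network the direct link from `x` to `z` costs 7, but `x` learns from `y` that `z` is reachable at cost 1 from there, so its estimate for `z` settles at 2 + 1 = 3.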

Let's consider an example, with the network topology given below.

[Figure: example network topology]

The routing table for R8 is built up over three steps, after which the algorithm has converged.

[Figures: R8's routing table after step 1, after step 2 and after step 3]

For node R8 the routing table then contains:

| Destination | Next hop | Cost |

|---|---|---|

| R1 | R5 | 5 |

| R2 | R5 | 4 |

| R3 | R3 | 4 |

| R4 | R5 | 5 |

| R5 | R5 | 2 |

| R6 | R6 | 2 |

| R7 | R7 | 3 |

Count-to-infinity problem:

“In the network, bad news travels slowly.” Consider four routers R1, R2, R3 and R4 connected in a chain: R1 - R2 - R3 - R4.

Each router's routing information for reaching R4, written as (distance, next hop), is: R1: (3, R2); R2: (2, R3); R3: (1, R4). Suppose R4 fails. As there is no longer a direct path between R3 and R4, R3 sets its distance to infinity. But in the next data exchange R3 learns that R2 has a path to R4 of 2 hops, so it updates its entry from infinity to 2 + 1 = 3, i.e. (3, R2). In the second data exchange R2 learns that both R1 and R3 reach R4 with a distance of 3, so it updates its entry for R4 to 3 + 1 = 4, i.e. (4, R3). In the third data exchange R1 changes its entry to 4 + 1 = 5, i.e. (5, R2), and R3 likewise changes its entry to (5, R2). This process continues to increase the distances. It is summarized in the following table.

| Exchange | R1 | R2 | R3 |

|---|---|---|---|

| 0 | 3, R2 | 2, R3 | 1, R4 |

| 1 | 3, R2 | 2, R3 | 3, R2 |

| 2 | 3, R2 | 4, R3 | 3, R2 |

| 3 | 5, R2 | 4, R3 | 5, R2 |

| ... Count to infinity ... | | | |
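The exchanges in the table can be reproduced with a short Python simulation. It is a sketch under stated assumptions: exchanges are synchronous, each router uses the distances its neighbors advertised in the previous exchange, a router keeps its current next hop when costs tie, and 16 stands for infinity as in RIP. After the failure R3's only remaining neighbor is R2, so R2 is the next hop it records.

```python
INFINITY = 16  # assumed "unreachable" value, as in RIP

# Chain R1 - R2 - R3 - R4 after R4 has failed: R3 has lost its link to R4.
NEIGHBORS = {"R1": ["R2"], "R2": ["R1", "R3"], "R3": ["R2"]}


def exchange(routes):
    """One synchronous exchange; routes maps router -> (cost to R4, next hop)."""
    updated = {}
    for router, adjacent in NEIGHBORS.items():
        current_hop = routes[router][1]
        # Stable sort puts the current next hop first, so ties keep it.
        candidates = sorted(adjacent, key=lambda n: n != current_hop)
        best = min(candidates, key=lambda n: routes[n][0])
        updated[router] = (min(routes[best][0] + 1, INFINITY), best)
    return updated


# Row 0 of the table: the state just before R4 fails.
history = [{"R1": (3, "R2"), "R2": (2, "R3"), "R3": (1, "R4")}]
while any(cost < INFINITY for cost, _ in history[-1].values()):
    history.append(exchange(history[-1]))
```

Each entry of `history` corresponds to one row of the table. The loop only stops because the costs are capped at `INFINITY`; without a cap the routers would keep counting upwards.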

Solutions of count-to-infinity problem:

- Defining a maximum count: For example, RFC 1058 [2] defines the maximum count as 16. This means that if all else fails, counting to infinity stops at the 16th iteration.

- Split horizon: If node A has learned a route to node C through node B, then node A does not send its distance to C to node B during a routing update.

- Poisoned reverse: This is an additional technique used together with split horizon. Split horizon is modified so that instead of omitting the route, the node sends it but sets its cost to infinity. Split horizon with poisoned reverse prevents routing loops involving only two routers. For loops involving three or more routers, split horizon with poisoned reverse will not suffice.
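The effect of split horizon with poisoned reverse on a chain of routers R1 - R2 - R3 - R4 can be sketched in Python as follows. The model is a simplification: exchanges are synchronous, 16 stands for infinity, and R3 is assumed to have already marked R4 as unreachable when the simulation starts. All names are illustrative.

```python
INFINITY = 16  # assumed "unreachable" value, as in RIP

# Chain R1 - R2 - R3 after the link from R3 to R4 has gone down.
NEIGHBORS = {"R1": ["R2"], "R2": ["R1", "R3"], "R3": ["R2"]}


def advertised(routes, sender, receiver):
    """Cost to R4 that sender reports to receiver."""
    cost, hop = routes[sender]
    # Poisoned reverse: claim "unreachable" to the neighbor used as next hop.
    return INFINITY if hop == receiver else cost


def exchange(routes):
    """One synchronous exchange; routes maps router -> (cost to R4, next hop)."""
    updated = {}
    for router, adjacent in NEIGHBORS.items():
        best = min(adjacent, key=lambda n: advertised(routes, n, router))
        cost = min(advertised(routes, best, router) + 1, INFINITY)
        updated[router] = (cost, best if cost < INFINITY else None)
    return updated


# R3 has just noticed that its link to R4 is down.
routes = {"R1": (3, "R2"), "R2": (2, "R3"), "R3": (INFINITY, None)}
rounds = 0
while any(cost < INFINITY for cost, _ in routes.values()):
    routes = exchange(routes)
    rounds += 1
```

Because R2 reports R4 as unreachable to R3, and R1 does the same to R2, no router is tempted by a stale route that leads back through itself. In this model every router has marked R4 unreachable after two exchanges instead of counting up to the maximum.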

RIP

An Interior Gateway Protocol is an intra-AS routing protocol: it determines how routing is performed within an autonomous system. One such protocol is the Routing Information Protocol (RIP), which is a distance vector routing protocol. Machines participating in RIP are either active or passive. Active machines advertise routes; passive machines do not advertise, but listen to RIP messages and use them to update their routing tables. Routing updates are exchanged (broadcast) between neighbors approximately every 30 seconds. Such an update message is called a RIP response message or RIP advertisement. A RIP router applies hysteresis to what it learns from other routers: it does not replace an existing route with a new route of equal cost. This stops routes from oscillating between equal-cost alternatives, which improves performance and reliability.

OSPF

OSPF is a link-state protocol that uses flooding of link-state information and Dijkstra's least-cost path algorithm. Each router builds a complete topological map of the autonomous system from the flooded information and computes shortest paths by running Dijkstra’s algorithm locally. In OSPF, a router broadcasts routing information to all the other routers in the autonomous system. It broadcasts link-state information whenever a link cost changes, and it also broadcasts each link’s state periodically, at least once every 30 minutes. OSPF checks that links are operational by sending HELLO messages to attached neighbors. It also allows an OSPF router to obtain a neighboring router’s database of network-wide link state.

Some of the advantages of OSPF are as follows:

- The specification is available in the published literature. As it is an open standard, anyone can implement it without paying license fees, which encourages many vendors to support OSPF.

- OSPF performs load balancing. If there are multiple routes with the same cost, OSPF distributes traffic equally over all of them.

- OSPF allows a site to partition its networks and routers into subsets called areas. The topology of an area is hidden from other areas, and each area is self-contained. An area can change its internal topology independently, which permits growth and makes the networks at a site easier to manage.

- All exchanges between routers can be authenticated. OSPF allows a variety of authentication schemes, and different areas can use different schemes. Routers are authenticated because only trusted routers should propagate routing information.

- There is integrated support for unicast and multicast routing. Multicast OSPF (MOSPF) provides multicast routing as a simple extension to OSPF: it uses the existing OSPF link database and adds a new type of link-state advertisement to the existing OSPF link-state broadcast mechanism.

- OSPF supports hierarchy within a single autonomous system.

The hierarchical structure of an OSPF network is shown below:

[Diagram similar to the diagram from Kurose]

- Internal routers: These are within an area and only perform intra-AS routing.

- Area border routers: These belong to both an area and the backbone. An area border router:

  - is attached to multiple areas

  - runs a copy of SPF for each attached area

  - relays topological information about its attached areas to the backbone

- Backbone routers: These perform routing within the backbone but are not themselves area border routers.

- AS boundary routers: A boundary router performs inter-AS routing. It is through such a boundary router that other routers learn about paths to external networks.

BGP

An Exterior Gateway Protocol is any protocol that is used to pass routing information between two autonomous systems (AS), i.e. between networks that aren't under the control of a single common administrator. BGP is currently the de facto standard for exterior routing on the internet. The current version of BGP is v4, which has been in use since 1994, all earlier versions now being obsolete.

When two autonomous systems agree to exchange routing information, the two routers that exchange it using BGP are known as BGP peers. Because a router speaking BGP to a peer in another AS usually sits near the edge of its own AS, it is referred to as a border gateway or border router.

BGP is a path vector protocol. Like distance vector protocols (and unlike link-state protocols such as OSPF), it doesn't attempt to map the entire network. Instead, it maintains a database of the cost to access each subnet it knows about, and chooses the route that has the lowest cost. However, instead of storing a single cost, it keeps the entire path used to access each network (not with every single hop on the path, but with a list of ASes that the path passes through). This means that routing loops can be eliminated, which can be hard to ensure in simpler distance vector protocols like RIP, while still allowing the protocol to scale to the level of the entire internet.

A BGP router performs three types of activity in route advertisement:

- It receives and filters route advertisements from directly attached neighbors. Received routes with paths that contain the router's own AS number are rejected, to avoid creating routing loops.

- It selects the route. A BGP router may receive several route advertisements for the same destination, and by default chooses a single route from among them as the preferred route (ECMP extends this to allow traffic to be load-balanced across several paths with equal cost).

- It also sends route advertisements to its neighbors.
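The three activities can be sketched in Python as below. This is a deliberately simplified model: real BGP route selection weighs several attributes, whereas here the shortest AS path is assumed to be the only criterion. The AS numbers and the prefix come from ranges reserved for documentation, and the `Route` class, peer names and function names are all hypothetical.

```python
from dataclasses import dataclass

MY_AS = 64500  # hypothetical local AS number (documentation range)


@dataclass(frozen=True)
class Route:
    prefix: str
    as_path: tuple  # AS numbers the route has passed through, nearest first
    peer: str       # name of the neighbor that advertised it


def accept(route):
    """Filter: reject any route whose path already contains our own AS."""
    return MY_AS not in route.as_path


def select_best(routes):
    """Select one preferred route per prefix (shortest AS path, assumed)."""
    best = {}
    for route in filter(accept, routes):
        current = best.get(route.prefix)
        if current is None or len(route.as_path) < len(current.as_path):
            best[route.prefix] = route
    return best


def advertised_path(route):
    """AS path sent on to external neighbors: our AS number goes in front."""
    return (MY_AS,) + route.as_path


RECEIVED = [
    Route("203.0.113.0/24", (64504, 64500), "peer-c"),         # loops through us
    Route("203.0.113.0/24", (64502, 64503, 64510), "peer-b"),
    Route("203.0.113.0/24", (64501, 64510), "peer-a"),
]
preferred = select_best(RECEIVED)["203.0.113.0/24"]
```

The route from `peer-c` is discarded even though its path is short, because accepting it would send traffic back through the local AS.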

BGP was originally specified to advertise IPv4 routes only, but the multi-protocol extensions to version 4 of the protocol allow routes in other address families to be shared via BGP. In particular, BGP can be used to share IPv6 routes. BGP peers communicate over a TCP connection, which typically runs over IPv4 but can equally run over IPv6. In keeping with the layered networking model, BGP specifies the messages to be exchanged and leaves the details of how they are transferred to the layers below, so the address family of the routes being advertised is independent of the address family used for the session.

Internal and External BGP

BGP is the de facto exterior gateway protocol, so routers that connect networks to the internet have to speak it. But that isn't the end of the story. In the simplest case, each AS would have one border router that shared routes with the outside world, and all routing inside the AS would be done with an interior protocol (OSPF, RIP, etc.) However, larger networks will rarely have a single border router for the entire AS. As a result, routes that are received by one border router need to be propagated over to the other border router in order to be shared with the peers of that router.

One way to do this would be to insert the received routes into the existing interior protocol (OSPF, say), and use the interior protocol to propagate them to the other border router. The second border router could then redistribute them back into BGP and out onto the open internet. However, this has some drawbacks. Most seriously, the AS_PATH information from the route is lost (since OSPF and other protocols don't know how to share an AS_PATH) so the main method by which routing loops are eliminated is unable to operate. In addition, interior protocols are not designed to cope with the sheer volume of routes on the internet.

A more scalable alternative is to use internal BGP (iBGP) between the routers within the AS. This propagates routes between the routers in a similar way to external BGP (eBGP), except that a router does not add its own AS number to the AS_PATH when passing a route to an internal peer.

Route Reflection

One problem with using iBGP within an AS is that the BGP routers have to be connected in a full mesh. That is, every router has to be connected to every other router within the AS. The reason is that although routers using iBGP pass on to their peers routes that have been learned via eBGP, these routes only travel one hop within the AS: no route that is received via iBGP is passed on to another peer via iBGP. This is because the AS_PATH is not extended within the AS, so it can't be used to eliminate routing loops there.

A full mesh is undesirable because the number of peerings gets very large when many routers are in operation: $n(n-1)/2$ connections are necessary to make a mesh of $n$ routers. Each connection takes up CPU, memory and network bandwidth resources.

An alternative is to use route reflection. This allows one or more routers in the AS to readvertise iBGP routes, and avoids the possibility of routing loops by placing constraints on which routes can be readvertised.

To use route reflection, one or more routers are designated as route reflectors. Each route reflector divides its peerings into client peers and non-client peers. The route reflector will reflect routes between one group and the other, and between client peers. Non-client peers must be fully meshed.

Route Aggregation

BGP Functionality and Message Types

BGP peers perform three basic functions:

- Initial peer acquisition and authentication: The two peers establish a TCP connection and perform a message exchange that guarantees both sides have agreed to communicate.

- Exchange of reachability information: Each side sends positive or negative reachability information. A peer advertises a network as unreachable if one or more neighbors are no longer reachable and no backup route is available for the routes in question.

- Ongoing verification: The peers continually verify that they and the network connections between them are functioning correctly.

The BGP message types are:

| Message | Description |

|---|---|

| OPEN | As soon as two BGP peers establish a TCP connection, they each send an OPEN message to declare their autonomous system number and establish other operating parameters. An OPEN message contains a suggested length for the hold timer, which is the maximum number of seconds that may elapse between the receipt of two successive messages. On receiving an OPEN message, the receiver replies with KEEPALIVE. |

| UPDATE | Once the TCP connection is established and the OPEN messages have been sent and acknowledged, peers use UPDATE to advertise new destinations that are reachable or to withdraw previous advertisements. |

| NOTIFICATION | This message is used to inform a peer that an error has been detected or that the sender is about to close the BGP session. |

| KEEPALIVE | This is used to test network connectivity and to verify that both peers continue to function. BGP uses TCP for transport, and TCP does not include a mechanism to continually test whether a connection endpoint is reachable. Both sides send KEEPALIVE so that they know if the TCP connection fails. The KEEPALIVE message is as short as possible, so as not to waste bandwidth. |

The BGP state machine

BGP packet formats

The Message Header

Each BGP packet starts with a fixed-size header.

- Marker: This field is for backward compatibility. It is 16 bytes of all ones.

- Length: This is a 2-byte unsigned integer that specifies the total length of the message in bytes, including the header.

- Type: This is one byte that specifies the type of the message: Open, Update, Notification or Keepalive.
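The fixed header can be packed and unpacked with Python's `struct` module, as in the sketch below. The numeric type codes (1 to 4) and the fact that a KEEPALIVE consists of the header alone are taken from the BGP-4 specification (RFC 4271) rather than from the text above; the function names are illustrative.

```python
import struct

# Marker (16 bytes), Length (2 bytes), Type (1 byte), in network byte order.
HEADER = struct.Struct("!16sHB")
MARKER = b"\xff" * 16
# Type codes as assigned in RFC 4271.
MESSAGE_TYPES = {1: "OPEN", 2: "UPDATE", 3: "NOTIFICATION", 4: "KEEPALIVE"}


def parse_header(data: bytes):
    """Return (length, type name) from the start of a BGP message."""
    marker, length, type_code = HEADER.unpack_from(data)
    if marker != MARKER:
        raise ValueError("marker is not all ones")
    if type_code not in MESSAGE_TYPES:
        raise ValueError(f"unknown message type {type_code}")
    return length, MESSAGE_TYPES[type_code]


def build_keepalive() -> bytes:
    """A KEEPALIVE is just the header, so its length is the header size."""
    return HEADER.pack(MARKER, HEADER.size, 4)
```

For example, `parse_header(build_keepalive())` yields the header size together with the name `"KEEPALIVE"`.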

The OPEN message

[edit | edit source]The OPEN message is the first message that each router sends to the other on a newly established peering. A successful OPEN message is acknowledged by sending back a KEEPALIVE message.

The BGP identifier is included in the OPEN message. This is a 4-byte number that must uniquely identify the router on the network. By convention it is the IPv4 address of one of the interfaces on the router. In theory, a router may not have any IPv4 addresses if it is only being used for IPv6, but in practice this rarely happens, and it does not matter in any case, since any unique 4-byte value can be used.

The OPEN message can contain optional parameters. If it contains any parameters, then the Optional Parameter Length field will be set to a non-zero value to indicate the length. Each parameter in the parameters field is encoded as a group of three values: parameter type (1 byte), parameter length (1 byte) and a variable length (up to 255 bytes) field for the parameter.
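The type/length/value encoding described above can be walked with a simple loop. The following is an illustrative sketch only: the function name `parse_optional_parameters` is hypothetical, and the parameter type numbers in the example are arbitrary values chosen for demonstration.

```python
def parse_optional_parameters(field: bytes):
    """Split the optional parameters field into (type, value) pairs."""
    params = []
    offset = 0
    while offset < len(field):
        if offset + 2 > len(field):
            raise ValueError("truncated parameter header")
        param_type = field[offset]      # 1 byte: parameter type
        param_len = field[offset + 1]   # 1 byte: length of the value (0-255)
        value = field[offset + 2 : offset + 2 + param_len]
        if len(value) != param_len:
            raise ValueError("truncated parameter value")
        params.append((param_type, value))
        offset += 2 + param_len
    return params


# Two made-up parameters: type 2 with a 3-byte value, type 7 with no value.
example = bytes([2, 3, 0xAA, 0xBB, 0xCC, 7, 0])
print(parse_optional_parameters(example))
```

Because each length is a single byte, no parameter value can exceed 255 bytes, which matches the limit given above.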

The UPDATE message

An UPDATE message is sent from one peer to another to carry new information about the network: routes that are newly available and routes that are no longer available.

The NOTIFICATION message

The KEEPALIVE message

Since BGP doesn't rely on any details of the transport protocol that is used to carry packets between the peers, it can't make use of TCP to detect when a peer has become unavailable. Therefore the protocol requires that regular keepalive packets are sent between the peers. The hold timer is reset every time a packet is received, and the connection is closed if the timer runs out. UPDATE and NOTIFICATION packets also reset the timer, but if no other packet has been sent then the peer must send a KEEPALIVE packet. Keepalive packets are typically sent at intervals of one third of the hold time, in order to strike a balance between not flooding the network and ensuring that a single dropped packet doesn't cause the connection to be torn down.
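The hold timer logic can be sketched as follows. This is a simplified model rather than a real implementation: the class name `HoldTimer` is hypothetical, time is passed in explicitly as a number of seconds, and the 90-second hold time in the example is only an assumed value.

```python
class HoldTimer:
    """Tracks when the last message arrived from a peer."""

    def __init__(self, hold_time: float):
        self.hold_time = hold_time
        # Send keepalives at one third of the hold time, as described above.
        self.keepalive_interval = hold_time / 3
        self.last_received = 0.0

    def message_received(self, now: float) -> None:
        """Any received message resets the timer."""
        self.last_received = now

    def expired(self, now: float) -> bool:
        """True if more than hold_time seconds passed without a message."""
        return now - self.last_received > self.hold_time


timer = HoldTimer(hold_time=90)   # 90 s is an assumed example value
timer.message_received(now=30)
print(timer.keepalive_interval)   # 30.0
print(timer.expired(now=100))     # False: only 70 s since the last message
print(timer.expired(now=121))     # True: 91 s since the last message
```

With a keepalive interval of one third of the hold time, two consecutive keepalives can be lost before the timer expires.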

BGP path attributes

BGP is designed to be extensible, so the base protocol allows for an extensible list of attributes to be attached to a route. BGP doesn't require that every BGP router understand every attribute that is used, but attributes are divided into four categories according to how they should be handled:

- Well-known mandatory

- Every BGP router should recognise and process these attributes when received, and should advertise them to neighbors

- Well-known discretionary

- These attributes need not be advertised, but any BGP router should recognise them

- Optional transitive

- If the BGP router doesn't know what to do with this attribute, it will be passed on to its BGP neighbors

- Optional nontransitive

- If the BGP router doesn't know what to do with this attribute, it will be ignored.
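The handling rules above can be sketched as a small decision function. This assumes the attribute flag layout from RFC 4271, where the highest bit of the flags byte marks an attribute as optional and the next bit marks it as transitive; those bit values and the function name `handle_unrecognised` are not taken from the text above.

```python
OPTIONAL = 0x80    # assumed flag bit: set for optional attributes
TRANSITIVE = 0x40  # assumed flag bit: set for transitive attributes


def handle_unrecognised(flags: int) -> str:
    """Decide what to do with an attribute this router does not understand."""
    if not flags & OPTIONAL:
        # Well-known attributes must be recognised by every BGP router,
        # so failing to recognise one is treated as an error.
        return "error"
    if flags & TRANSITIVE:
        # Optional transitive: pass it on to BGP neighbours.
        return "forward"
    # Optional nontransitive: quietly drop it.
    return "ignore"


print(handle_unrecognised(0xC0))  # forward
print(handle_unrecognised(0x80))  # ignore
print(handle_unrecognised(0x40))  # error
```

The flags do not distinguish mandatory from discretionary well-known attributes; a router knows which attributes are mandatory from their type, not from the flag bits.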

Currently supported attributes include:

- ORIGIN

- Whether the route originated from an IGP, an EGP or elsewhere

- AS_PATH

- The list of ASes that the route has been through to reach the current router. Among other things, this enables the BGP router to reject routes that contain its own AS on the AS_PATH, since otherwise it could lead to a routing loop.

- NEXT_HOP

- The IP address of the router that should be used as the next hop for this route

- MULTI_EXIT_DISC

- An optional attribute that, if present, can be used by the router to choose between several different entry points to the same AS.

- LOCAL_PREF

- This attribute is only included on internal communication between peers within the same AS. It enables the BGP router to choose between external routes to the same subnet by using the route with the higher LOCAL_PREF value.

- ATOMIC_AGGREGATE

- This is used when the BGP router has aggregated several routes into one and omitted some ASes from the AS_PATH as a result.

- AGGREGATOR

- An optional attribute that can be added by a BGP router to routes where the router has performed route aggregation. The attribute specifies the AS number and IP address of the router that performed aggregation.

- COMMUNITY

- A community value is used to specify a common property that can be applied to a number of routes. Some community values are standardised, but other community numbers can be allocated by any group of BGP ASes that can agree on the standard meaning.

- ORIGINATOR_ID

- The originator ID is used within an AS where route reflection is used to prevent routing loops. The originator ID is a 32-bit value that is either the router that injected the route into BGP (as a manually configured BGP prefix, or via redistribution from another protocol) or the border router that received the route via eBGP.

- CLUSTER_LIST

- Like the originator ID, this is used to prevent loops when route reflection is being used. It records the list of clusters that the route has passed through, much like the AS_PATH records the list of ASes that a route has passed through.
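Two of the attributes above lend themselves to a short illustration: AS_PATH for loop rejection and LOCAL_PREF for choosing between routes. The sketch below is deliberately simplified. The `Route` class, the function names, the default LOCAL_PREF of 100 and the AS numbers and prefix are all assumptions chosen for the example, and a real BGP router applies many more tie-breaking rules than this.

```python
from dataclasses import dataclass


@dataclass
class Route:
    prefix: str
    as_path: list          # ASes the route has passed through
    local_pref: int = 100  # assumed default for illustration


def accept(route: Route, my_as: int) -> bool:
    """Reject any route whose AS_PATH already contains our own AS."""
    return my_as not in route.as_path


def prefer(routes: list) -> Route:
    """Pick the route with the highest LOCAL_PREF."""
    return max(routes, key=lambda r: r.local_pref)


my_as = 64500  # example AS number
candidates = [
    Route("192.0.2.0/24", [64501, 64510], local_pref=100),
    Route("192.0.2.0/24", [64502, 64510], local_pref=200),
    # This route has already passed through AS 64500, so it is a loop.
    Route("192.0.2.0/24", [64503, 64500, 64510], local_pref=300),
]

usable = [r for r in candidates if accept(r, my_as)]
best = prefer(usable)
print(best.as_path)  # [64502, 64510]
```

The looping route is discarded before LOCAL_PREF is compared, so its higher preference value never comes into play.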

Border Router Selection


On a perimeter network there are generally two border routers, to avoid a single point of failure. Interior routers and hosts on the perimeter network choose a border router to deliver their Internet traffic.

Central Question in Border Router Selection

The central question is how to establish reliable Internet connectivity as long as at least one border router is working and has a reliable connection. Reliability, complexity, and hardware requirements can be traded off against each other to meet the needs of the site.

Border Router Selection vs. Exit Selection

Exit selection is the process used by BGP to decide which exit from your AS will be used. Border router selection is the process your interior routers and hosts use to pick a border router. Border router selection happens first as a host or interior router must choose a border router. Then the chosen border router decides if the packet should exit through one of its connections or if it should instead be forwarded to another border router for delivery.

Border Router Selection with IBGP

If all interior routers and hosts on the perimeter network run IBGP with the border routers, then the border router selection problem can be neatly solved.

Figure: Selecting Border Router with IBGP

IBGP copies the BGP routing tables from each border router into the interior routers and hosts. Each interior router and host then always picks the best border router for each destination, as learned via IBGP. However, running BGP adds a lot of complexity to hosts, and the extra memory and CPU power that BGP requires may make interior routers substantially more expensive than they'd be if they didn't run BGP. Hence, most network designs run BGP only on the border routers and are therefore faced with the border router selection problem. The following sections discuss ways of selecting border routers without running BGP on hosts and interior routers.

Border Router Selection with a Static Route

The simplest way for a host or interior router to choose a border router is to use a static default route. However, static routing may lead to selection of the "wrong" border router.

Host Choosing "Wrong" Border Router

Consider a network in which a host has a static default route pointing at Border RouterB, and the host wants to deliver traffic to a customer of ISPA. Suppose ISPA is sending customer routes, so that your AS is aware that the destination is a customer of ISPA. In this case, Border RouterB will have learned ISPA's customer routes via IBGP from Border RouterA, so Border RouterB receives the traffic and immediately forwards it to Border RouterA via the perimeter network. The traffic has traversed the perimeter network twice, wasting bandwidth. But if Border RouterB fails then there's an even higher price to pay.

Figure: Host Unreachable from Internet when Border Router Fails

An interior router would likely share an IGP with the border routers, and your IGP should be configured to select a functioning border router with at least one good Internet connection. Your IGP would detect the failure of Border RouterB, so your interior router would use Border RouterA as its default route. The host, however, has a static default route pointing at the now-dead Border RouterB, so it has lost all Internet connectivity. This is another example of how static routes and reliable networks often don't mix.

Border Router Selection with HSRP

With the help of HSRP (Hot Standby Router Protocol), two or more routers can dynamically share a single IP address. Hosts that have static default routes pointing at this address will see a reliable exit path from your AS without having to listen to BGP or your IGP. HSRP isn't a routing protocol at all. It's simply a way for routers on the same multi-access network to present a "non-stop" IP address. HSRP has the benefit that it keeps host configuration simple: a static default route is all that's required. It also reacts to failures in a matter of seconds. Here are some examples of HSRP in action.

Figure: HSRP with Two Border Routers in Normal Operation

The site has a T3 for its primary Internet connection and a T1 on a different border router as a backup. The perimeter network interface of Border RouterA is configured to have address 10.0.0.253. The perimeter network interface of Border RouterB is configured to have address 10.0.0.254. Since Border RouterA has the primary Internet connection, HSRP on it is configured so that it normally also holds the shared virtual interface address (10.0.0.1) on its perimeter network interface. HSRP on Border RouterB is configured to monitor the health of Border RouterA. When both border routers are operating, Internet traffic from the host follows the static default route toward 10.0.0.1 to Border RouterA and exits on the T3. But suppose Border RouterA fails.

HSRP with Failed Primary Border Router

Within seconds of Border RouterA's failure, Border RouterB's perimeter network interface takes over the shared virtual interface address (10.0.0.1). The static default route in the host now points to Border RouterB with no work on the host's part. Its Internet traffic now exits on the T1 via Border RouterB. Now suppose that the T3 fails but Border RouterA continues to operate. We want Border RouterB to take over the shared virtual address even though Border RouterA is still functioning. This case is handled by configuring Border RouterA to "give up" the address whenever it loses carrier detect on the T3.

HSRP with Failed Primary Internet Connection

This behavior is implemented with a priority system. Border RouterA is configured to lower its priority whenever carrier detect is lost on the T3. Border RouterB seizes control of the shared virtual interface address whenever it notices that its priority is now the highest in the group of routers sharing the address. (Yes, more than two routers can share a single virtual interface address.)
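The priority mechanism can be modelled in a few lines. This is a toy simulation, not router configuration: the function name `active_router`, the priority values 110 and 100, and the decrement of 20 are assumptions chosen for illustration, and the model assumes that the router with the highest priority always takes over the address immediately.

```python
def active_router(priorities: dict) -> str:
    """Return the router that currently holds the shared virtual address."""
    # The router with the highest priority in the group owns the address.
    return max(priorities, key=priorities.get)


# Border RouterA has the primary (T3) connection, so it starts higher.
priorities = {"Border RouterA": 110, "Border RouterB": 100}
print(active_router(priorities))  # Border RouterA

# Carrier detect is lost on the T3: RouterA lowers its own priority.
priorities["Border RouterA"] -= 20
print(active_router(priorities))  # Border RouterB

# The T3 recovers: RouterA restores its priority and takes the address back.
priorities["Border RouterA"] += 20
print(active_router(priorities))  # Border RouterA
```

The same function works unchanged for a group of three or more routers sharing one virtual address.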

Limitations of HSRP

- HSRP won't help you if an interface fails to pass data but carrier detect doesn't drop. This type of failure can happen if the line between you and the central office is good but the DAX at the CO fails. BGP will eventually notice this kind of failure and reroute your traffic; it just won't happen with the speed of HSRP.

- HSRP won't help your hosts pick the "optimal" border router. Note that HSRP is available on all Cisco routers, but can have only a single IP address on the lower-end routers (e.g. 1600, 2500, 2600, and 3600 series routers as of this writing).

- HSRP can appear to interfere with outbound load sharing if you're not taking at least customer routes from one of your ISPs.

- HSRP alone isn't sufficient for reliable Internet connectivity. You'll still need to have BGP configured correctly at your border routers and at all your ISPs to retain connectivity in the face of line and/or router failure.

Border Router Selection with Hosts Listening to IGP

HSRP is usually the best way for hosts to select a border router because it recovers quickly from failures and keeps host configuration simple. If you can't use HSRP, the next best choice for selecting a border router is to have hosts that listen to an IGP. It's most common for hosts to be able to listen to RIP, but the slow convergence time of RIP (several minutes) makes it a poor IGP for those interested in reliability. OSPF makes a much better IGP, but is substantially more complicated than RIP.

Border Router Selection and Load Sharing