The Many Faces of TPACK/TPACK Survey

The Impact of Schmidt et al.’s (2009) TPACK Survey

by Mustafa Sat

After the TPACK framework was introduced (Mishra & Koehler, 2006), researchers recognized the need for a valid and reliable instrument (Mishra & Koehler, 2006; Schmidt et al., 2009) to examine the effects and practical applications of the TPACK construct on pre-service and in-service teachers’ levels of technology integration in teaching and learning (Koehler & Mishra, 2005). Schmidt and colleagues were pioneers among the researchers who noticed this issue, and they addressed the gap by developing the first TPACK survey that could be used to assess the TPACK of pre-service teachers. The survey opened a new door for researchers around the world, who adopted and adapted it in their own TPACK studies, and it contributed greatly to the emergence of further research and surveys. Because it was the first such survey and has been widely used around the world, the survey of Schmidt et al. (2009) is the subject of this chapter.

In their study, Schmidt et al. (2009) developed a TPACK measurement instrument to capture elementary and early childhood pre-service teachers’ self-assessment of their knowledge within the TPACK framework proposed by Mishra and Koehler (2006), which builds on Shulman’s (1986) concept of pedagogical content knowledge (PCK). The framework comprises seven knowledge domains: technology knowledge (TK), content knowledge (CK), pedagogical knowledge (PK), pedagogical content knowledge (PCK), technological content knowledge (TCK), technological pedagogical knowledge (TPK), and technological pedagogical content knowledge (TPACK).

Survey Construction Process

Effective survey construction requires a careful examination of prior research in the relevant field and a thorough, iterative content analysis (Oppenheim, 2001). The survey construction process of Schmidt et al. (2009) involved a critical review of a large body of relevant instruments in different subjects and a comprehensive, iterative content analysis of the items for each TPACK component. They followed the procedure described here. First, a comprehensive literature review allowed them to draw on existing instruments that assess technology use in educational settings. Using this information, they revised existing items and then generated new ones designed to measure the seven knowledge domains of pre-service teachers. To establish content validity, they submitted the 44 designed items to three well-known experts in the TPACK domains. Based on the experts’ ratings on a 10-point scale and their comments, recommendations, and suggestions, they revised the instrument, for example by removing and rewording items. The result was an instrument of 75 items, accompanied by items on demographic information and on faculty and PK-6 teacher models of TPACK. The instrument was administered to 124 pre-service teachers, and the responses were subjected to exploratory factor analysis. As a result, they eliminated 28 problematic items that reduced the reliability of the instrument: 1 TK item, 5 CK items, 3 PK items, 4 PCK items, 4 TCK items, 10 TPK items, and 1 TPACK item. High reliability was reported for each knowledge domain (ranging from .75 to .92), along with factor loadings ranging from .59 to .92 (Schmidt et al., 2009).
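The item counts reported above can be checked with a few lines of arithmetic. The following is only an illustrative sketch in Python; the variable and function names are invented for this example, and the numbers are those quoted in the paragraph above.

```python
# Items removed per TPACK domain after exploratory factor analysis,
# as quoted in the text (names here are hypothetical, for illustration only).
removed_items = {
    "TK": 1, "CK": 5, "PK": 3, "PCK": 4, "TCK": 4, "TPK": 10, "TPACK": 1,
}
initial_item_count = 75  # items administered to the 124 pre-service teachers


def remaining_items(initial, removed):
    """Return how many items are left after the reported removals."""
    return initial - sum(removed.values())


total_removed = sum(removed_items.values())
final_item_count = remaining_items(initial_item_count, removed_items)
print(f"removed: {total_removed}, remaining: {final_item_count}")
# prints "removed: 28, remaining: 47"
```

The per-domain removals add up to the 28 eliminated items, leaving 47 items, which matches the item count that several adapting studies report in Table 2.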

The TPACK survey described above was developed and refined through a structured process that applied well-established survey construction methods, such as iterative content review with experts, drawing on the literature, and exploratory factor analysis. Based on this process, it can be concluded that the survey of Schmidt et al. (2009) merits being regarded as a valid and reliable measure of pre-service teachers’ TPACK.

Data Collection Method

This study used Google Scholar to gather articles that employed the TPACK survey in any way. Google Scholar statistics showed that the article by Schmidt et al. (2009) had been cited 145 times. The citing studies were analysed rigorously to identify those that actually employed the TPACK survey. All publications that appeared to use the survey, including dissertations, were checked to determine whether they were published in peer-reviewed journals or only as conference papers, presentations, reports, or proceedings. Selection was based on two criteria: first, the publication had to report adopting the TPACK survey; second, it had to be a peer-reviewed article. These two criteria guided the researcher in selecting articles. The detailed analysis yielded 29 publications: 16 peer-reviewed articles, 2 dissertations, 10 proceedings papers, and 1 presentation. Several other peer-reviewed articles that cite Schmidt et al. (2009) could not be accessed because they required a subscription, so it could not be determined whether they had adopted the TPACK survey. This is one limitation of this study.

The selected articles were examined thoroughly, especially the instrument section, where authors describe the adaptations made to the original instrument. Each adaptation was identified and recorded in detail. The researcher also recorded the purpose of the study, the type of sample (pre-service or in-service), the sample size, the location of the study, and how the reliability and validity of the adapted instrument were established.

Results

The detailed analysis of the articles resulted in five themes: sample (field, size, and type), adaptation (removing items, adding items, rewording items, and adapting items to a specific discipline), validity and reliability (Cronbach’s alpha, exploratory factor analysis, confirmatory factor analysis, and structural equation modeling), study (type and purpose), and place (where the study was conducted). The following sections describe each of these themes for the analysed articles.

Sample

As shown in Table 1, sample sizes varied widely, ranging from 17 to 1296. Seven of the 16 studies (about 44%) used pre-service teachers (Koh et al., 2010; Agyei & Voogt, 2012; Alayyar et al., 2012; Chai et al., 2013; Hofer & Grandgenett, 2012; Chai et al., 2012; Semiz & Ince, 2012), and the same number (about 44%) used in-service teachers as participants (Chuang & Ho, 2011; Messina & Tabone, 2012; Chai et al., 2010; Agyei & Keengwe, 2012; Öztürk & Horzum, 2011; Handal et al., 2013; Tee & Lee, 2011). One study (6%) used both pre-service and in-service teachers (Lin et al., 2012).

The findings on the field, size, and type of sample show that the survey was used almost equally with pre-service and in-service teachers. Most of the studies used large samples, which supports more valid and reliable results. Teachers in secondary education appear to have been studied with the TPACK survey more often than teachers at the primary school, early childhood, and master’s levels.

| Author & Year | Field | Type of sample | Sample size |

|---|---|---|---|

| Koh, J.H.L., Chai, C.S., Tsai, C.C.(2010) | tongue languages, mathematics, physics, biology, music, and history | pre-service | 1185 |

| Chuang, H-H, & Ho, C-J. (2011) | Early childhood teachers | in-service | 335 |

| Messina, L., Tabone, S.(2012) | Lower secondary school teachers | in-service | 132 |

| Chai, C. S., Koh, J. H. L., & Tsai, C.-C. (2010) | Secondary postgraduate education teachers | in-service | 804 (437 for pre-test, 367 for post-test) |

| Agyei, D.D., Keengwe, J.(2012) | Mathematics teachers | in-service | 104 |

| Agyei, D.D., Voogt, J.(2012) | Mathematics teachers | pre-service | 125 |

| Alayyar, G.M., Fisser, P., Voogt, J.(2012) | Science teachers | pre-service | 78 |

| Chai, C.S., Ng, E.M.W., Li, W., Hong, H-Y., Koh, J. H. L. (2013) | Second and third year undergraduate students | pre-service | 550 |

| Öztürk, E., Horzum, M.B.(2011) | primary school teachers | in-service | 291 |

| Lin, T.C., Tsai, C.C., Chai, C.S., Lee, M.H. (2012) | Science teachers | pre-service and in-service | 222 |

| Handal, B., Campbell, C., Cavanagh, M., Petocz, P., & Kelly, N. (2013). | secondary mathematics teachers | in-service | 280 |

| Hofer, M., Grandgenett, N.(2012) | Secondary education teachers in master’s program | pre-service | 17 |

| Peeraer, J., Petegem, P.V.(2012) | Teacher educators | ..... | 1191 |

| Tee, M.Y., Lee, S.S.(2011) | Elementary, secondary, tertiary teachers in master course program | in-service | 24 |

| Chai, C.S., Koh, J.H.L., Ho, H.N.J., Tsai, C.C. (2012) | 47% primary school teachers | pre-service | 1296 (668 for pre-test, 628 for post-test) |

| Semiz, K., Ince, M.L.(2012) | physical education students | pre-service | 760 |

Table 1: Representation of authors, field, type of sample, and sample size for each article
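The tallies of sample types reported for Table 1 can be reproduced with a short script. This is only a sketch in Python: the dictionary keys are shorthand labels chosen for this example, the values are transcribed from the "Type of sample" column, and the label "unspecified" is an assumption used here for the one row whose sample type is not given.

```python
from collections import Counter

# Sample type per study, transcribed from Table 1.
# Keys are informal shorthand (first author, year), not citations.
sample_types = {
    "Koh 2010": "pre-service",
    "Chuang 2011": "in-service",
    "Messina 2012": "in-service",
    "Chai 2010": "in-service",
    "Agyei & Keengwe 2012": "in-service",
    "Agyei & Voogt 2012": "pre-service",
    "Alayyar 2012": "pre-service",
    "Chai 2013": "pre-service",
    "Ozturk 2011": "in-service",
    "Lin 2012": "both",
    "Handal 2013": "in-service",
    "Hofer 2012": "pre-service",
    "Peeraer 2012": "unspecified",  # sample type not given in Table 1
    "Tee 2011": "in-service",
    "Chai 2012": "pre-service",
    "Semiz 2012": "pre-service",
}

counts = Counter(sample_types.values())
total = len(sample_types)

# Print each sample type with its count and share of all studies.
for label, n in counts.most_common():
    print(f"{label}: {n} of {total} ({100 * n / total:.0f}%)")
```

Run against the 16 rows of Table 1, the count is seven pre-service studies, seven in-service studies, one study with both, and one with no sample type given.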

Adaptation

A variety of adaptations at superficial or marginal levels were made to the TPACK survey of Schmidt et al. (2009). The intensity of those adaptations varied with the objectives of each study. Specifically, each study adapted or modified either almost all components of the TPACK survey or just a few of them, so that the final version could be used within the chosen discipline and fit the purpose of the study.

The analysis of the articles indicated that every study adapted the original TPACK instrument of Schmidt et al. (2009) in some way, depending on the phenomenon on which the study focused. These changes are described and categorized by retained items, added items, removed items, items adapted to a subject, Likert scale used, and the final number of items in the adapted survey. As shown in Table 2, six studies reported retaining some items and subdomains of TPACK in their survey (Koh et al., 2010; Chai et al., 2010; Chai et al., 2013; Hofer & Grandgenett, 2012; Peeraer & Van Petegem, 2012; Tee & Lee, 2011). Only three studies added items to the original survey, and interestingly the same studies also removed some items (Chuang & Ho, 2011; Chai et al., 2013; Semiz & Ince, 2012). Three other studies also reported removing items that were unsuitable or flawed for their purposes (Koh et al., 2010; Chai et al., 2010; Lin et al., 2012). Most of the studies (n=10) provided information on how items were adapted for a specific teaching subject; however, this information is very superficial, and it is not possible to tell which items were adapted (e.g., Koh et al., 2010; Messina & Tabone, 2012). Although items in the original survey of Schmidt et al. (2009) were measured on a 5-point Likert scale, three studies changed the scale from 5 to 7 points. Further details of the changes made to the original instrument are given in Table 2.

Although a range of adaptations was reported, most of the studies did not specify which items in which component were adapted, adopted, removed, or reworded. For instance, some studies reported only that some items were adapted, without describing the type of adaptation. In some studies even the total number of items was not reported; as a result, the cell showing the final number of items in Table 2 is empty for six studies. This lack of detail means that the account of adaptations given here is incomplete. Nevertheless, the available information shows that the original 5-point Likert scale was increased to a 7-point scale with the intention of making results more reliable. In addition, the TPACK survey was translated into three languages: Chinese, Arabic, and Turkish. Interestingly, it was translated into Turkish twice, in two studies published in consecutive years. Most of the studies preferred adapting items to their specific teaching subject over removing or adding items, which may be attributed to how easily the TPACK survey can be applied to other disciplines.

| Author & Year | Retained items | Added items | Removed items | adapted items to specified teaching subject | Likert scale used | The final items |

|---|---|---|---|---|---|---|

| Koh, J.H.L., Chai, C.S., Tsai, C.C.(2010) | TK items, PK items, TPK items | 11 questions assessing teacher assessment of TPACK | Questions made more generic | 7-point | 29 | |

| Chuang, H-H, & Ho, C-J. (2011) | 7 items in CK, 3 items in TCK, 8 items in PCK, 3 items in TK, 6 items in TPK, 3 items in TPACK | (after pilot study)2 items from PK, 2 items from TK, 5 items from CK, 4 items from PCK, and 3 items from TPK | The survey was translated to Chinese | 5-point | 59 | |

| Messina, L., Tabone, S.(2012) | Survey was adapted based on different subjects | 5-point | 30 | |||

| Chai, C. S., Koh, J. H. L., & Tsai, C.-C. (2010) | TK, PK, and TPACK | TPK, TCK, and PCK | Questions assessed CK. | 7-point | 18 | |

| Agyei, D.D., Keengwe, J. (2012) | Survey items | 5-point | ||||

| Agyei, D.D., Voogt, J. (2012) | Survey items. The knowledge related to technology integration was focused (TK, TPK, TCK, and TPACK). | 5-point | ||||

| Alayyar, G.M., Fisser, P., Voogt, J. (2012) | The instrument was translated to Arabic language | 5-point | ||||

| Chai, C.S., Ng, E.M.W., Li, W., Hong, H-Y., Koh, J. H. L. (2013) | Just TPACK items were adopted | Adopted TPACK items were crafted and focused towards integrating ICT for meaningful learning; items were translated to Chinese | 36 | |||

| Öztürk, E., Horzum, M.B. (2011) | Items were translated to Turkish | 5-point | 47 | |||

| Lin, T.C., Tsai, C.C., Chai, C.S., Lee, M.H. (2012) | 11 items in Models of TPACK; 3 open-ended questions; 8 items in the TK, PK, PCK, TCK, and TPC subscales; items related to mathematics, social studies, and literacy teachers | 7-point | 27 | |||

| Handal, B., Campbell, C., Cavanagh, M., Petocz, P., & Kelly, N. (2013). | Some items were adapted | 30 | ||||

| Hofer, M., Grandgenett, N. (2012) | All components | 5-point | 47+11 | |||

| Peeraer, J., Petegem, P.V. (2012) | TPK items | 5-point | ||||

| Tee, M.Y., Lee, S.S.(2011) | All components | 5-point | ||||

| Chai, C.S., Koh, J.H.L., Ho, H.N.J., Tsai, C.C.(2012) | TK component related to web 2.0 technologies | Original TK ,PK, TPK | 34 | |||

| Semiz, K., Ince, M.L. (2012) | 2 open-ended questions | 11 items | Survey was translated to Turkish; remaining items were adapted |

Table 2: Representation of retained items, added items, removed items, adapted items or subject, Likert scale used, and the final number of items covered by adapted survey

Validity and Reliability

Different statistical methods and procedures are available for checking the validity and reliability of defined constructs and their items. The aim of a validity and reliability check is to determine the extent to which the defined constructs measure the phenomenon under investigation. Studies that adapted the survey of Schmidt et al. (2009) used a range of tests to report how reliable and valid the constructs and items were. The statistical methods and procedures used in these studies are described below.

The analysed studies addressed the validity and reliability of the adapted survey by means of Cronbach’s alpha, exploratory factor analysis, confirmatory factor analysis, structural equation modeling, and content review by experts. Most of the studies (n=11) computed Cronbach’s alpha to establish how reliable the adapted survey was (Koh et al., 2010; Chuang & Ho, 2011; Messina & Tabone, 2012; Chai et al., 2010; Alayyar et al., 2012; Chai et al., 2013; Öztürk & Horzum, 2011; Handal et al., 2013; Peeraer & Van Petegem, 2012; Chai et al., 2012; Semiz & Ince, 2012). A few studies (n=5) used exploratory factor analysis to show that items measuring the same phenomenon loaded on the same factor (Koh et al., 2010; Chuang & Ho, 2011; Chai et al., 2010; Öztürk & Horzum, 2011; Chai et al., 2012). In addition, four studies used confirmatory factor analysis to confirm that the items grouped under each factor were the correct and valid ones (Chai et al., 2013; Öztürk & Horzum, 2011; Chai et al., 2012; Semiz & Ince, 2012). Three studies used structural equation modeling to reveal the relations among different subdomains of TPACK or between a subdomain of TPACK and other constructs (Chai et al., 2013; Lin et al., 2012; Chai et al., 2012). Five of the 16 studies submitted the content of the adapted survey to several experts in the subject of the study to confirm that the adapted or original items measured exactly what they were intended to measure (Koh et al., 2010; Chuang & Ho, 2011; Alayyar et al., 2012; Öztürk & Horzum, 2011; Semiz & Ince, 2012).
Even though 3 studies were found not to use any statistical approach to demonstrate the validity and reliability of the adapted survey, they commonly cited the validity and reliability statistics of the original survey (Agyei & Keengwe, 2012; Agyei & Voogt, 2012; Hofer & Grandgenett, 2012; Tee & Lee, 2011).

As shown in Table 3, Cronbach’s alpha was the most common means of establishing the validity and reliability of the survey. Some of the studies that used exploratory factor analysis also used confirmatory factor analysis (Öztürk & Horzum, 2011; Chai et al., 2012). Structural equation modeling was the least frequently used method among the studies.

| Validity and Reliability | The number of studies (N) |

|---|---|

| Cronbach alpha | 11 |

| Exploratory factor analyses | 5 |

| Confirmatory factor analyses | 4 |

| Structural equation modeling | 3 |

| Content review of instrument by expert | 5 |

| Not used | 3 |

Table 3: Representation of validity and reliability.
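Since Cronbach’s alpha is the statistic reported most often in Table 3, a small worked example may help readers who are new to it. The sketch below uses the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. It is an illustration only: the function name and the response data are hypothetical and are not taken from any of the reviewed studies.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows, one row of item scores per respondent."""
    k = len(responses[0])  # number of items
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 5-point Likert responses (4 respondents, 3 items); not study data.
example = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
]
alpha = cronbach_alpha(example)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items of a subscale vary together; the reliabilities of .75 to .92 reported for the original survey would be computed per knowledge domain in this way.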

Study

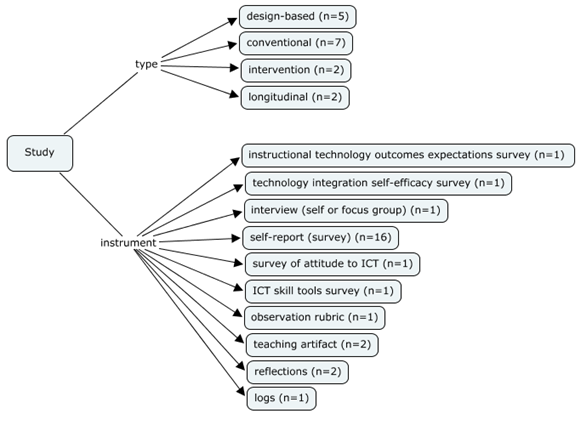

The fourth theme that emerged from the analysis of the articles, as portrayed in Figure 1, concerns the study itself: the study type (in which setting was the study conducted?), the purpose (which phenomenon did the study investigate in that setting?), and the instruments (which instruments were used to gather information about the TPACK constructs being investigated?). Regarding study type, almost half of the studies (n=7) measured students’ TPACK in courses delivered through conventional instruction (Koh et al., 2010; Chuang & Ho, 2011; Chai et al., 2013; Öztürk & Horzum, 2011; Lin et al., 2012; Handal et al., 2013; Semiz & Ince, 2012). Interestingly, all but one of these studies (n=6) used only the adapted self-report survey for data collection. The exception additionally used surveys of technology integration self-efficacy and instructional technology outcome expectations (Semiz & Ince, 2012). Five of the studies traced the development of students’ TPACK in design-based courses using the self-report survey (Chai et al., 2010; Tee & Lee, 2011; Chai et al., 2012), in some cases together with TPACK reflection questions, a survey of attitudes toward ICT, and an ICT skills survey (Alayyar et al., 2012) or interview data (Agyei & Voogt, 2012). Two studies were longitudinal; they combined the self-report survey with a TPACK observation rubric and teaching artifacts (Agyei & Keengwe, 2012) or with focus group interviews (Peeraer & Van Petegem, 2012). Finally, only two studies used an intervention to investigate teachers’ TPACK, with teaching artifacts and a TPACK observation form (Agyei & Keengwe, 2012) or the self-report survey (Messina & Tabone, 2012).

Figure 1: Representation of study types and instruments

The findings on the type and purpose of the examined studies and on the instruments they used indicate that students’ self-reported TPACK was measured mostly in design-based and conventional settings rather than in intervention and longitudinal studies. The self-report survey was the most commonly used instrument for collecting data on students’ TPACK. Because several studies were design-based, some studies also used students’ artifacts as a measurement tool.

Location of the Studies

The location of a study can have a considerable effect on its results, because students in different locations are likely to differ in characteristics, backgrounds, skills, learning ability, ICT knowledge, and so on. Given these differences among students in different countries, reporting where the studies that adapted the survey of Schmidt et al. (2009) were conducted may provide valuable information about the changes made to the survey.

The analysis of the articles produced a theme called “location” (where the study was conducted). The studies were carried out on different continents. Most were conducted in Asia, especially South-East Asia, as portrayed in Figure 2, which shows the countries in which the studies were carried out and the number of studies conducted in each.

Figure 2: Representation of the countries where the studies were conducted

Studies conducted in Singapore make up a large portion compared with the number conducted in other countries. In general, however, the studies that adapted the survey of Schmidt et al. (2009) were spread across the world.

Conclusion

Reported as a valid and reliable instrument by several authors (Young et al., 2012; Abbitt, 2011; Baran et al., 2011), the TPACK survey of Schmidt et al. (2009) was adapted or used directly in most studies to report students’ self-assessed TPACK under different conditions. These studies were conducted almost equally at the pre-service and in-service levels, and secondary education was the field in which most of them were carried out. With respect to sample size, studies in conventional settings tended to use large samples, whereas design-based and intervention studies used small ones. Different studies applied different adaptations to the survey; the kind of adaptation depended on the purpose of the study and the nature of the phenomenon being investigated. The most common adaptations were removing items, rewording items, adapting items to a specific teaching subject, adding items, and changing the Likert scale. However, although studies stated that the survey was adapted, detailed information on how and which items were adapted is scarce. This lack of information is why most of the cells in Table 2 are empty. As part of the adaptation, the survey was translated into three languages: Chinese, Arabic, and Turkish.

Conventional settings as the study type and the self-report survey as the instrument were most often used to measure pre-service and in-service teachers’ TPACK in the analysed studies, and a larger share of these studies were conducted in Singapore than in other countries. In addition to the use of Cronbach’s alpha in most of the studies, the original 5-point Likert scale was changed to a 7-point scale in some studies to obtain better reliability.

As a relatively new framework, technological pedagogical content knowledge is gaining popularity among researchers, and this popularity can be seen clearly in the growing number of publications. This study therefore makes an important contribution to the literature, because the instrument of Schmidt et al. (2009) was one of the first valid and reliable surveys developed to assess pre-service teachers’ TPACK after the framework was proposed by Mishra and Koehler (2006). Most subsequent studies drew on this instrument when developing new instruments to identify the self-reported TPACK of both pre-service and in-service teachers from different disciplines in differently designed teaching contexts. In other words, it has served as the artery that supplies blood to other developing TPACK surveys.

This study gives researchers, especially those who are new to TPACK and are looking for a valid and reliable TPACK survey to adopt, the opportunity to examine and analyse the adapted surveys from multiple perspectives. While contributing to a largely neglected area of research, it also offers researchers a guide for evaluating the effectiveness of adapted surveys that could be used in further studies. Researchers can also use the information provided here about each survey to decide whether to adopt a particular adapted version.

References

Abbitt, J. T. (2011). Measuring technological pedagogical content knowledge in preservice teacher education: A review of current methods and instruments. Journal of Research on Technology in Education, 43(4), 281-300.

Agyei, D. D., & Keengwe, J. (2012). Using technology pedagogical content knowledge development to enhance learning outcomes. Education and Information Technologies, 1-17.

Agyei, D. D., & Voogt, J. (2012). Developing technological pedagogical content knowledge in pre-service mathematics teachers through collaborative design. Australasian Journal of Educational Technology, 28(4), 547-564.

Alayyar, G. M., Fisser, P., & Voogt, J. (2012). Developing technological pedagogical content knowledge in pre-service science teachers: Support from blended learning. Australasian Journal of Educational Technology, 28(8), 1298-1316.

Baran, E., Chuang, H. H., & Thompson, A. (2011). TPACK: An emerging research and development tool for teacher educators. TOJET, 10(4).

Chai, C. S., Koh, J. H. L., & Tsai, C. C. (2010). Facilitating preservice teachers’ development of technological, pedagogical, and content knowledge (TPACK). Educational Technology & Society, 13(4), 63-73.

Chai, C. S., Koh, J. H. L., Ho, H. N. J., & Tsai, C. C. (2012). Examining preservice teachers' perceived knowledge of TPACK and cyberwellness through structural equation modeling. Australasian Journal of Educational Technology, 28(6), 1000-1019.

Chai, C. S., Ng, E. M. W., Li, W., Hong, H. Y., & Koh, J. H. L. (2013). Validating and modeling technological pedagogical content knowledge framework among Asian pre-service teachers. Australasian Journal of Educational Technology, 29(1).

Chuang, H. H., & Ho, C. J. (2011). An investigation of early childhood teachers’ technological pedagogical content knowledge (TPACK) in Taiwan. Journal of Kirsehir Education Faculty, 12(2), 99-117.

Handal, B., Campbell, C., Cavanagh, M., Petocz, P., & Kelly, N. (2013). Technological pedagogical content knowledge of secondary mathematics teachers. Contemporary Issues in Technology and Teacher Education, 13(1). Retrieved from http://www.citejournal.org/vol13/iss1/mathematics/article1.cfm

Koehler, M. J., & Mishra, P. (2005). Teachers learning technology by design. Journal of Computing in Teacher Education, 21(3), 94-102.

Koh, J. H. L., Chai, C. S., & Tsai, C. C. (2010). Examining the technological pedagogical content knowledge of Singapore pre‐service teachers with a large‐scale survey. Journal of Computer Assisted Learning, 26(6), 563-573.

Lin, T. C., Tsai, C. C., Chai, C. S., & Lee, M. H. (2012). Identifying Science Teachers’ Perceptions of Technological Pedagogical and Content Knowledge (TPACK). Journal of Science Education and Technology, 1-12.

Messina, L., & Tabone, S. (2012). Integrating technology into instructional practices focusing on teacher knowledge. Procedia - Social and Behavioral Sciences, 46, 1015-1027.

Mishra, P. & Koehler, M. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017-1054. http://www.tcrecord.org/Content.asp?ContentID=12516

Oppenheim, A. N. (2001). Questionnaire design, interviewing and attitude measurement.

Öztürk, E., & Horzum, M. B. (2011). Teknolojik Pedagojik İçerik Bilgisi Ölçeği’nin Türkçeye Uyarlaması. Ahi Evran Üniversitesi Eğitim Fakültesi Dergisi, 12(3), 255-278.

Peeraer, J., & Van Petegem, P. (2012). The limits of programmed professional development on integration of information and communication technology in education. Australasian Journal of Educational Technology, 28(6), 1039-1056.

Schmidt, D. A., Baran, E., Thompson, A. D., Mishra, P., Koehler, M. J., & Shin, T. S. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Journal of Research on Technology in Education, 42(2), 123-149.

Semiz, K., & Ince, M. L. (2012). Pre-service physical education teachers' technological pedagogical content knowledge, technology integration self-efficacy and instructional technology outcome expectations. Australasian Journal of Educational Technology, 28(7), 1248-1265.

Tee, M. Y., & Lee, S. S. (2011). From socialization to internalization: Cultivating technological pedagogical content knowledge through problem-based learning. Australasian Journal of Educational Technology, 27(1), 89-104.

Young, J. R., Young, J. L., & Shaker, Z. (2012). Technological Pedagogical Content Knowledge (TPACK) Literature Using Confidence Intervals. TechTrends, 56(5), 25-33.