Local Area Network design/Print version

| This is the print version of Local Area Network design You won't see this message or any elements not part of the book's content when you print or preview this page. |

The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Local_Area_Network_design

Introduction to Local Area Networks

Origins

[edit | edit source]LAN definition

[edit | edit source]The IEEE 802 working group defined the Local Area Network (LAN) as a communication system through a shared medium, which allows independent devices to communicate together within a limited area, using an high-speed and reliable communication channel.

- Keywords

- shared medium: everyone is attached to the same communication medium;

- independent devices: everyone is peer, that is it has the same privilege in being able to talk (no client-server interaction);

- limited area: everyone is located within the same local area (e.g. corporate, university campus) and is at most some kilometers far one from each other (no public soil crossing);

- high-speed: at that time LAN speeds were measured in Megabit per second (Mbps), while WAN speeds in bit per second;

- reliable: faults are little frequent → checks are less sophisticated to the benefit of performance.

LAN vs. WAN comparison

[edit | edit source]Protocols for Wide Area Networks (WAN) and for Local Area Networks evolved independently until the 80s because purposes were different. In the 90s the IP technology finally allowed to interconnect these two worlds.

- WAN

WANs were born in the 60s to connect remote terminals to the few existing mainframes:

- communication physical medium: point-to-point leased line over long distance;

- ownership of physical medium: the network administrator has to lease cables from government monopoly;

- usage pattern: smooth, that is bandwidth occupancy for long periods of time (e.g. terminal session);

- type of communication: always unicast, multiple communications at the same time;

- quality of physical medium: high fault frequency, low speeds, high presence of electromagnetic disturbances;

- costs: high, also in terms of operating costs (e.g. leasing fee for cables);

- intermediate communication system: required to manage large-scale communications (e.g. telephone switches) → switching devices can fault.

- LAN

LANs appeared at the end of the 70s to share resources (such as printers, disks) among small working groups (e.g. departments):

- communication physical medium: multi-point shared bus architecture over short distance;

- ownership of physical medium: the network administrator owns cables;

- usage pattern: bursty, that is short-term data peaks (e.g. document printing) followed by long pauses;

- type of communication: always broadcast, just one communication at the same time;

- quality of physical medium: greater reliability against failures, high speeds, lower exposure to external disturbances;

- costs: reasonable, concentrated mainly when setting up the network;

- intermediate communication system: not required → lower cost, higher speed, greater reliability, greater flexibility in adding and removing stations.

Communication medium sharing

[edit | edit source]Before the advent of hubs and bridges, the shared communication medium could be implemented in two ways:

- physical broadcast: broadcast-based technologies, such as the bus: the signal sent by a station propagates to all the other stations;

- logical broadcast: point-to-point technologies, such as the token ring: the signal sent by a station arrives at the following station, which duplicates it toward the station after that one, and so on.

- Issues

- privacy: everyone can hear what crosses the shared medium → an addressing system should be made (nowadays: MAC addresses);

- concurrency: just one communication at a time is possible:

- collisions: if two stations transmit simultaneously, the data sent by a station may overlap the data sent by the other one → a mechanism for collision detection and recovery should be made (nowadays: CSMA/CD protocol);

- channel monopolization: in the back-to-back transmission, a station may occupy the channel for a long period of time preventing other stations from talking → a sort of statistical multiplexing, that is simulating multiple communications at the same time by defining a maximum transmission unit called chunk and by alternating chunks from a station with the ones from another one (nowadays: Ethernet frames), should be made.

Data-link sub-layers

[edit | edit source]In LANs the data-link layer is split in two sub-layers:

- MAC: it arbitrates the access to the physical medium, and is specific for each physical-layer technology;

- LLC: it defines the interface toward the network layer, and is common in all physical-layer technologies.

MAC

[edit | edit source]Every network card is identified uniquely by a MAC address. MAC addresses have the following format:

| 24 | 48 |

| OUI | NIC ID |

where the fields are:

- Organization Unique Identifier (OUI) field (3 bytes): code assigned uniquely by IEEE to identify the network card manufacturer:

- first least-significant bit in the first byte:[1]

- Individual (value 0): the address is associated to a single station (unicast);

- Group (value 1): the address refers to multiple stations (multicast/broadcast);

- second least-significant bit in the first byte:[1]

- Universal (value 0): the address is assigned uniquely;

- Local (value 1): the address is customized by the user;

- first least-significant bit in the first byte:[1]

- NIC Identifier (NIC ID) field (3 bytes): code assigned uniquely by the manufacturer to identify the specific network card (also called 'Network Interface Controller' [NIC]).

The Media Access Control (MAC) header has the following format:

| 48 | 96 | 112 | 46 to 1500 bytes | 4 bytes |

| Destination Address | Source Address | Length | payload | FCS |

where the fields are:

- Destination Address field (6 bytes): it specifies the destination MAC address.

- This is put before the source MAC address because in this way the destination can process it earlier and discard the frame if it is not addressed to it;

- Source Address field (6 bytes): it specifies the source MAC address (always unicast);

- Length field (2 bytes): it specifies the payload length;

- Frame Control Sequence (FCS) field (4 bytes): it includes the CRC code for integrity control over the entire frame.

- If the CRC code check fails, the arrived frame was corrupted (e.g. because of a collision) and is discarded; higher-layer mechanisms (e.g. TCP) will be responsible for recovering the error by sending again the frame.

A network card when receiving a frame:

- if the destination MAC address matches with the one of the network card or is of broadcast type ('FF-FF-FF-FF-FF-FF'), it accepts it and sends it to higher layers;

- if the destination MAC address does not match with the one of the network card, it discards it.

A network card set in promiscuous mode accepts all frames → it is useful for network sniffing.

LLC

[edit | edit source]The Logical Link Control (LLC) header has the following format:

| 8 | 16 | 24 or 32 |

| DSAP | SSAP | CTRL |

where the fields are:

- DSAP field (1 byte, of which 2 bits reserved): it identifies the upper-layer protocol used by the destination;

- SSAP field (1 byte, of which 2 bits reserved): it identifies the upper-layer protocol used by the source;

- Control (CTRL) field (1 or 2 bytes): it derives from the HDLC control field, but is unused.

- Issues of DSAP and SSAP fields

- limited set of values: just 64 protocols can be coded;

- codes assigned by ISO: just protocol published by an internationally recognized standard organization are corresponding to codes, while protocols defined by other bodies or pushed by some vendors (e.g. IP) are excluded;

- code redundancy: there is no reason to have two fields to defines protocols, because the source and the destination always talk the same protocol (e.g. both IPv4 or both IPv6).

SNAP

[edit | edit source]The Subnetwork Access Protocol (SNAP) is a particular implementation of LLC for protocols which have not a standard code.

The LLC SNAP header has the following format:

| 8 | 16 | 24 | 48 | 64 |

| DSAP (0xAA) | SSAP (0xAA) | CTRL (3) | OUI | Protocol Type |

where the fields are:

- DSAP, SSAP, CTRL fields: LLC fields are fixed to indicate the presence of the SNAP header;

- Organization Unique Identifier (OUI) field (3 bytes): it identifies the organization which defined the protocol.

- If it is equal to 0, the value in the 'Protocol Type' field is corresponding to the one used in Ethernet DIX;

- Protocol Type field (2 bytes): it identifies the upper-layer protocol (e.g. 0x800 = IP, 0x806 = ARP).

Actually, the LLC SNAP header is not very used due to waste of bytes, to the benefit of the 'Ethertype' field in Ethernet DIX.

References

[edit | edit source]- ↑ a b According to the canonical order (network byte order), which is the native order in IEEE 802.3 (Ethernet) but not in IEEE 802.5 (token ring) (please see section Bit-reversed notation in article MAC address on the English Wikipedia).

Ethernet

Ethernet is nowadays the most used technology in wired LANs with shared bus architecture, because it is a simple and little expensive solution with respect to other LAN technologies such as token ring and token bus.

Ethernet frame format

[edit | edit source]Two versions of Ethernet exist, with different frame formats:

- DIX Ethernet II (1982): version developed by DEC, Intel and Xerox (after this the 'DIX' acronym);

- IEEE 802.3 standard (1983): version standardized by the IEEE 802 working group.

Since there are two versions of Ethernet, a considerable inhomogeneity in upper-layer protocol envelopments exists:

- older protocols (e.g. IP) and protocols farther from IEEE use the DIX Ethernet II enveloping;

- protocols standardized since the beginning by IEEE (e.g. STP) use the IEEE 802.3 enveloping.

DIX Ethernet II

[edit | edit source]The DIX Ethernet II packet[1] has the following format:

| 7 bytes | 1 bytes | 6 bytes | 6 bytes | 2 bytes | 46 to 1500 bytes | 4 bytes | 12 bytes |

| preamble | SFD | destination MAC address | source MAC address | EtherType | payload | FCS | IFG |

| DIX Ethernet II frame (64 to 1518 bytes) | |||||||

where the most significant fields are:

- preamble (7 bytes): bit sequence to recover synchronization between the transmitter clock and the receiver clock.

- Preamble can be shortened whenever the packet crosses a hub → it is not possible to connect more than 4 hubs in a cascade:

Local Area Network design/Repeaters and bridges#Interconnection at the physical layer;

Local Area Network design/Repeaters and bridges#Interconnection at the physical layer;

- Start of Frame Delimiter (SFD) field (1 byte): bit sequence identifying the beginning of the frame;

- EtherType field (2 bytes): it identifies the upper-layer protocol used in the payload (it is a number greater or equal to 1500);

- Inter-Frame Gap (IFG) field (12 bytes): pause, that is no signal, identifying the end of the frame.

IEEE 802.3

[edit | edit source]The IEEE 802.3 packet can have one of the two following formats:

| 7 bytes | 1 byte | 14 bytes | 3 bytes | 0 to 1497 bytes | 0 to 43 bytes | 4 bytes | 12 bytes |

| preamble | SFD | MAC header | LLC header | payload | padding | FCS | IFG |

| IEEE 802.3 frame (64 to 1518 bytes) | |||||||

| 7 bytes | 1 byte | 14 bytes | 8 bytes | 0 to 1492 bytes | 0 to 38 bytes | 4 bytes | 12 bytes |

| preamble | SFD | MAC header | LLC SNAP header | payload | padding | FCS | IFG |

| IEEE 802.3 frame (64 to 1518 bytes) | |||||||

- Remarks

- the DIX Ethernet II and IEEE 802.3 frames have the same minimum and maximum lengths, because IEEE had to specify a frame format compatible with the old version of Ethernet;

- a DIX Ethernet II frame and an IEEE 802.3 frame can be distinguished by looking at the value in the field following the source MAC address:

- if it is lower or equal to 1500 ('Length' field), the frame is IEEE 802.3;

- if it is greater or equal to 1536 ('EtherType' field), the frame is DIX Ethernet II;

- in the IEEE 802.3 frame the 'Length' field would make superfluous the 'Inter-Frame Gap' (IFG) field, but it is present to keep compatibility with the DIX Ethernet II frame;

- in the DIX Ethernet II frame the upper layer has to transmit at least 46 bytes, while in the IEEE 802.3 frame the frame can be stretched to the minimum size with some padding as needed;

- the LLC and LLC SNAP headers in the IEEE 802.3 frame waste a lot more bytes with respect to the 'EtherType' field in the DIX Ethernet II frame although they are aimed to the same functionality of specifying the upper-layer protocol, and this is why the IEEE 802.3 standard has not been widely adopted to the benefit of DIX Ethernet II.

Physical layer

[edit | edit source]10-Mbps Ethernet can work over the following transmission physical media:

- coaxial cable:

- 10Base5: thick cable (max 500 m);

- 10Base2: thin cable (max 185 m);

- twisted copper pair:

- 10BaseT: cable with 4 twisted pairs of which just 2 used (max 100 m):

- Unshielded (UTP): unshielded;

- Shielded (STP): shielded with single global shield;

- Foiled (FTP): shielded with single global shield + a shield per pair;

- 10BaseT: cable with 4 twisted pairs of which just 2 used (max 100 m):

- optical fiber (max 1-2 km)

Coaxial cable

[edit | edit source]At the beginning shared bus was physically made by a coaxial cable:

- vampire taps: every network card is connected to a thick coaxial cable through a vampire clamp, which allowed electrical propagation via physical contact (galvanic continuity) → uncomfortable connection;

- T-connectors: every network card is connected to a thin coaxial cable through a T-connector → connecting and disconnecting a host requires to unplug the whole network.

Twisted copper pair

[edit | edit source]With the introduction of the twisted copper pair, cabling (that is cable laying in buildings) acquired a greater flexibility: every host can be connected to a RJ45 wall socket through the specific RJ45 connector, and all sockets are in turn connected to a cabinet.

In addition, the RJ11 connector used by telephony can be connected to the RJ45 wall socket, too → in cabling RJ45 sockets can be placed in the whole building and then one can decide whenever whether an Ethernet card or a telephone should be connected, by switching between the data connection and the telephone connection in the cabinet.

Optical fiber

[edit | edit source]- Characteristics

- no sensitivity to electromagnetic interferences

- larger distances

- higher costs

- lower flexibility

CSMA/CD

[edit | edit source]A collision occurs when two or more nodes within the same collision domain transmit at the same time and their signals overlap. The Carrier Sense Multiple Access with Collision Detection (CSMA/CD) protocol specify how to recognize a collision (CD) and how to recover a collision (retransmission).

CSMA/CD is a simple and distributed random-access (that is non-deterministic) protocol: it does not contemplates intermediate devices or particular synchronization mechanisms, unlike token ring where the synchronization mechanism is the token itself → the CSMA/CD protocol is efficient in terms of throughput because there is no overhead for synchronization, in terms of delays and channel occupancy.

In full-duplex mode the CSMA/CD protocol does no longer need to be enabled.

Detecting collisions

[edit | edit source]Instead of transmitting the whole frame and just at the end checking for a collision, the node can use Collision Detection (CD): during the transmission sometimes it tries to understand whether a collision occurred ('listen while talking'), and if so it immediately stops the transmission, avoiding to waste the channel for a useless transmission.

In the real world, collision detection is performed in two different ways depending on the type of transmission medium:

- coaxial cable: there is a single channel for both transmission and reception → measuring the average DC on link is enough;

- twisted copper pair, optical fiber: there are two channels, one for transmission and another for reception:

- transmitting stations: they can realize that a collision occurred by detecting activity on the receiving channel during the transmission;

- non-transmitting stations: they can realize that a collision occurred only by detecting a wrong CRC code on the received frame.

- The jamming sequence is a powerful signal which is sent by who has noticed a collision to guarantee that the CRC code is invalid and to maximize probability that all the other nodes understand that a collision occurred.

Reducing the number of collisions

[edit | edit source]Carrier Sense (CS) allows to reduce the number of collisions: the node which wants to transmit listens to the channel before transmitting:

- if it senses the channel is free: the node transmits the frame;

- if it senses the channel is busy:

- 1-persistent CSMA: the node keeps checking whether the channel is free and transmit as soon as it becomes free;

- 0-persistent CSMA: the node tries again after a random time;

- -persistent CSMA: the node with probability waits a random time (0-persistent), with probability immediately checks again (1-persistent).

In a LAN in the worst case the channel occupancy is equal to 30-40% the available bandwidth → Ethernet implements 1-persistent CSMA/CD because it is aimed for averagely unloaded networks with low probability of collisions.

- CSMA limitations

However, with twisted copper pair or optical fiber CSMA is not able to avoid collisions altogether (otherwise CD would not be useful): if propagation times are considered, a far node can sense the channel as free, even if actually it is busy but transmission has not reached the far node yet:

The vulnerability interval is defined as the time interval where starting a transmission by the far node would create a collision (it is equal to the propagation delay on the channel), and this interval is as larger as distance increases → this protocol works well on small networks.

Recovering collisions

[edit | edit source]After a collision occurred, the frame has to be transmitted again. If the stations involved in the collision transmitted again immediately, another collision would occur → back-off algorithm inserts into the wait a randomness element exponential in retransmissions:

- 1st retransmission: the node waits a time chosen randomly between 0 and 1 slot times;

- 2nd retransmission: the node waits a time chosen randomly from 0 to 3 slot times;

- 3rd retransmission: the node waits a time chosen randomly from 0 to 7 slot times;

and so on, according to formula:

where:

- is the number of collisions occurred on the current frame;

- is the slot time, that is the time required to send an Ethernet frame of minimum size (64 bytes), equivalent to 51.2 µs.

At the end of every wait, the node listens again to the channel by CS.

Constraint between frame size and collision diameter

[edit | edit source]Since the channel access is contended, when one manages to get the network access it is better to transmit large packets. A minimum size for frames needs to be established: if the frame is too small and the collided transmission lasts too little time, it may happen that no stations notice the collision:

A constraint between the frame size and the collision diameter exists so that all collisions are recognized: collision detection works only if the round trip time , that is the outward and return time, is lower than the transmission time :

where is the transmission speed and is the propagation speed.

Increasing the transmission speed means increasing the frame minimum size, or for the same minimum size it means decreasing the maximum distance among nodes, but too large frames would increase the transmission error probability and would clog the network.

In Ethernet DIX the theoretical collision diameter can not exceed 5750 meters:[2]

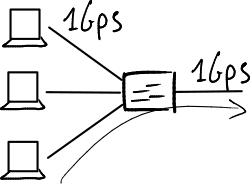

Without hubs the maximum network size is quite limited by maximum distances supported by transmission media (e.g. due to signal attenuation). Thanks to hubs the network size can be extended (although at most to 3 km due to non-idealities in devices): the hub, typically placed as the star center in a star topology, re-generates the signal (repeater) and internally simulates the shared bus allowing to connect multiple stations together through the twisted copper pair: ![]() Local Area Network design/Repeaters and bridges#Interconnection at the physical layer.

Local Area Network design/Repeaters and bridges#Interconnection at the physical layer.

References

[edit | edit source]- ↑ The standard names the Ethernet frame + the 'Preamble', 'SFD' e 'IFG' fields of the physical layer as 'Ethernet packet'.

- ↑ For frame length preamble and SFD, but not IFG, are considered.

Repeaters and bridges

Interconnection at the physical layer

[edit | edit source]

Repeater and hub[1] are network devices for interconnection at the physical layer, which just receive and propagate a sequence of bits. The interconnected physical-layer channels can also have different technologies (e.g. twisted pair to optical fiber), but all the upper layers must be equal (e.g. Ethernet-only, FDDI-only).

Repeater also performs the function of recovering the signal degradation: it synchronizes itself with the square wave signal and regenerates it so as to clean it. The preamble preceding the frame is used for synchronization, that is signal recognition, and so whenever the signal crosses a repeater a part of this preamble is 'eaten' → it is not possible to connect more than 4 repeaters in a cascade.

A collision domain is the set of nodes competing to access the same transmissive medium → the simultaneous transmission causes collision. Interconnecting two network segments creates a single collision domain: repeater is not able to recognize collisions which are propagated to all ports → this is a limit to the size of the physical domain.

Interconnection at the data-link layer

[edit | edit source]

Bridge and switch are network devices for interconnection at the data-link layer, which store (store-and-forward mode) and then regenerate the frame. Also the interconnected data-link-layer domains can have different technologies (e.g. Ethernet to FDDI).

- Maximum frame size issue

In practice it is often impossible to interconnect two different data-link-layer technologies, due to issues related to the maximum frame size: for example, in an Ethernet-based network having MTU = 1518 bytes interconnected with a token ring-based network having MTU = 4 KB, what happens if a frame larger than 1518 bytes comes from the token ring network? In addition fragmentation at the data-link layer does not exist.

Bridge decouples broadcast domain from collision domain:

- it 'splits' the collision domain: it implements the CSMA/CD protocol to detect collisions, avoiding propagating them to the other ports;

- it extends the broadcast domain: frames sent in broadcast are propagated to all ports.

Half-duplex and full-duplex modes

[edit | edit source]A point-to-point link at the data-link layer between two nodes (e.g. a host and a bridge) can be performed in two ways:

- half-duplex mode: the two nodes are connected through a single bidirectional wire → each node can not transmit and receive at the same time, because a collision would happen;

- full-duplex mode: the two nodes are connected through two separate unidirectional wires → each node can transmit and receive at the same time, thanks to the splitting of collision domains.

- Full-duplex mode advantages

- higher bandwidth: the throughput between the two nodes doubles;

- absence of collisions:

- the CSMA/CD protocol does no longer need to be enabled;

- the constraint on the minimum Ethernet frame size is no longer needed;

- the limit on the maximum diameter for the collision domain does no longer exist (the only distance limit is the physical one of the channel).

Transparent bridge

[edit | edit source]

Routing is performed in a transparent way: the bridge tries to learn the positions of the nodes connected to it filling a forwarding table called filtering database, whose entries have the following format:

where destination port is the port of the bridge, learnt by learning algorithms, which to make frames exit heading towards the associated destination MAC address.

- Learning algorithms

- frame forwarding: learning is based on destination MAC address: when a frame arrives whose destination is not still in the filtering database, the bridge sends the frame in broadcast on all ports but the input port (flooding) and it waits for the reply which the destination is very likely to send back and which the backward learning algorithm will act on;

- backward learning: learning is based on source MAC address: when a frame arrives at a certain port, the bridge checks if there is already the source associated to that port in the filtering database, and if needed it updates it.

Smart forwarding process increases the network aggregate bandwidth: frames are no longer propagated always in broadcast on all ports, but they are forwarded only on the port towards the destination, leaving other links free to transport other flows at the same time.

Mobility

[edit | edit source]Ageing time allows to keep the filtering database updated: it is set to 0 when the entry is created or updated by the backward learning algorithm, and it is increased over time until it exceeds the expiration time and the entry is removed. In this way the filtering database contains information about the only stations which are actually within the network, getting rid of information about old stations.

Data-link-layer networks natively support mobility: if the station is moved, remaining within the same LAN, so as to be reachable through another port, the bridge has to be 'notified' of the movement by sending any broadcast frame (e.g. ARP Request), so that the backward learning algorithm can fix the filtering database. Windows systems tend to be more 'loquacious' than UNIX systems.

Examples of stations which can move are:

- mobile phones;

- virtual machines in datacenters: during the day they can be spread over multiple web servers to distribute the workload, during the night they can be concentrated on the same web server because traffic is lower allowing to save power;

- stations connected to the network via two links, one primary used in normal conditions and one secondary fault-tolerant: when the primary link breaks, the host can restore the connectivity by sending a frame in broadcast through the secondary link.

Switches

[edit | edit source]

'Switch' is the commercial name given to bridges having advanced features to emphasize their higher performance:

- a switch is a multi-port bridge: a switch has a lot more ports, typically all in full-duplex mode, than a bridge;

- the smart forwarding process is no longer a software component but it is implemented into a hardware chip (the spanning tree algorithm keeps being implemented in software because more complex);

- lookup for the port associated to a given MAC address in the filtering database is faster thanks to the use of Content Addressable Memories (CAM), which however have a higher cost and a higher energy consumption;

- switches support cut-through forwarding technology faster than store-and-forward mode: a frame can be forwarded on the target port (unless it is already busy) immediately after receiving the destination MAC address.

Issues

[edit | edit source]Scalability

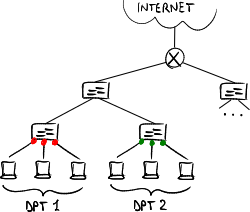

[edit | edit source]Bridges have scalability issues because they are not able to organize traffic, and therefore they are not suitable for complex networks (such as Wide Area Network):

- no filtering for broadcast traffic → over a wide network with a lot of hosts broadcast frames risk clogging the network;

- the spanning tree algorithm make completely unused some links which would create rings in topology disadvantaging load balancing:

Local Area Network design/Spanning Tree Protocol#Scalability.

Local Area Network design/Spanning Tree Protocol#Scalability.

Security

[edit | edit source]Some attacks to the filtering database are possible:

- MAC flooding attack

The attacking station generates frames with random source MAC addresses → the filtering database gets full of MAC addresses of inexistent stations, while the ones of the existent stations are thrown out → the bridge sends in flooding (almost) all the frames coming from the existent stations because it does not recognize the destination MAC addresses anymore → the network is slowed down and (almost) all the traffic within the network is received by the attacking station.[2]

- Packet storm

The attacking station generates frames with random destination MAC addresses → the bridge sends in flooding all the frames coming from the attacking station because it does not recognize the destination MAC addresses → the network is slowed down.

References

[edit | edit source]- ↑ The difference between repeaters and hubs lies in the number of ports: repeater has two ports, hub has more than two ports.

- ↑ Not all the traffic can be intercepted: when a frame comes from an existent station the bridge saves its source MAC address into its filtering database, so if immediately after a frame arrives heading towards that station, before the entry is cleared, it will not be sent in flooding.

Ethernet evolutions

With the success of Ethernet new issues arose:

- need for higher speed: DIX Ethernet II supported a transmission speed equal to 10 Mbit/s, while FDDI, used in backbone, supported a very higher speed (100 Mbit/s), but it would have been too expensive to wire buildings by optical fiber;

- need to interconnect multiple networks: networks of different technologies (e.g. Ethernet, FDDI, token ring), were difficult to interconnect because they had different MTUs → having the same technology everywhere would have solved this problem.

Fast Ethernet

[edit | edit source]Fast Ethernet, standardized as IEEE 802.3u (1995), raises the transmission speed to 100 Mbit/s and makes the maximum collision diameter 10 times shorter (~200-300 m) accordingly, keeping the same frame format and the same CSMA/CD algorithm.

Physical layer

[edit | edit source]Fast Ethernet physical layer is altogether different than 10-Mbit/s Ethernet physical layer: it partially derives from existing standards in the FDDI world, so much that Fast Ethernet and FDDI are compatible at the physical layer, and definitively abandons the coaxial cable:

- 100BASE-T4: twisted copper pair using 4 pairs;

- 100BASE-TX: twisted copper pair using 2 pairs;

- 100BASE-FX: optical fiber (only in backbone).

Adoption

[edit | edit source]When Fast Ethernet was introduced, its adoption rate was quite low because of:

- distance limit: network size was limited → Fast Ethernet was not appropriate for backbone;

- bottlenecks in backbone: the backbone made in 100-Mbps FDDI technology had the same speed as access networks in Fast Ethernet technology → it was unlikely to be able to drain all the traffic coming from access networks.

Fast Ethernet started to be adopted more widely with:

- the introduction of bridges: they break the collision domain overcoming the distance limit;

- the introduction of Gigabit Ethernet in backbone: it avoids bottlenecks in backbone.

Gigabit Ethernet

[edit | edit source]Gigabit Ethernet, standardized as IEEE 802.3z (1998), rises the transmission speed to 1 Gbit/s and introduces two features, 'Carrier Extension' and 'Frame Bursting', to keep the CSMA/CD protocol working.

Carrier Extension

[edit | edit source]Decuplicating the transmission speed would make the maximum collision diameter 10 more times shorter putting it down to a few tens of meters, too few for cabling → to keep the maximum collision diameter unchanged, the minimum frame size should be increased to 512 bytes[1].

Stretching the minimum frame however would cause an incompatibility issue: in the interconnection of a Fast Ethernet network and a Gigabit Ethernet network by a bridge, minimum-sized frames coming from the Fast Ethernet network could not enter the Gigabit Ethernet network → instead of stretching the frame the slot time, that is the minimum transmission time unit, was stretched: a Carrier Extension made up of padding dummy bits (up to 448 bytes) was appended to all the frames shorter than 512 bytes:

| 7 bytes | 1 byte | 64 to 1518 bytes | 0 to 448 bytes | 12 bytes |

| preamble | SFD | Ethernet II DIX/IEEE 802.3 frame | Carrier Extension | IFG |

| 512 to 1518 bytes | ||||

- Disadvantages

- Carrier Extension occupies the channel with useless bits.

For example with 64-byte-long frames useful throughput is very low: - in newer pure switched networks the full-duplex mode is enabled → CSMA/CD is disabled → Carrier Extension is useless.

Frame Bursting

[edit | edit source]The maximum frame size of 1518 bytes is obsolete by now: in 10-Mbit/s Ethernet the channel occupancy was equal to 1.2 ms, a reasonable time to guarantee the statistical multiplexing, while in Gigabit Ethernet the channel occupancy is equal to 12 µs → collisions are a lot less frequent → to reduce the header overhead in relation to useful data improving efficiency, the maximum frame size could be increased.

Stretching the maximum frame however would cause an incompatibility issue: in the interconnection of a Fast Ethernet network and a Gigabit Ethernet network by a bridge, maximum-sized frames coming from the Gigabit Ethernet network could not enter the Fast Ethernet networks → Frame Bursting consists in concatenating several standard-sized frames one after the other, without realising the channel:

| frame 1[2] + Carrier Extension |

FILL | frame 2[2] | FILL | ... | FILL | last frame[2] | IFG | |

| burst limit (8192 bytes) | ||||||||

- just the first frame is possibly extended by Carrier Extension, to make sure that the collision window is filled; in the next frames Carrier Extension is useless because, if a collision would occur, it would already be detected by the first frame;

- IFG between a frame and another is replaced by a 'Filling Extension' (FILL) to frame bytes and announce that another frame will follow;

- the transmitter station keeps a byte counter: when it arrives to byte number 8192, the frame currently in transmission must be the last one → up to 8191 bytes + 1 frame can be sent with Frame Bursting.

- Advantages

- the number of collision chances is reduced: once the first frame is transmitted without collisions, all the other stations detect that the channel is busy thanks to CSMA;

- the frames following the first one do not require Carrier Extension → useful throughput increases especially in case of small frames, thanks to saving for Carrier Extension.

- Disadvantages

- Frame Bursting does not address the primary goal of reducing the header overhead: it was opted to keep in every frame all headers (including preamble, SFD and IFG) to make the processing hardware simpler;

- typically a station using Frame Bursting has to send a lot of data → big frames do not require Carrier Extension → there is no saving for Carrier Extension;

- in newer pure switched networks the full-duplex mode is enabled → CSMA/CD is disabled → Frame Bursting has no advantages and therefore is useless.

Physical layer

[edit | edit source]Gigabit Ethernet can work over the following transmission physical media:

- twisted copper pair:

- Shielded (STP): the 1000BASE-CX standard uses 2 pairs (25 m);

- Unshielded (UTP): the 1000BASE-T standard uses 4 pairs (100 m);

- optical fiber: the 1000BASE-SX and 1000BASE-LX standards use 2 fibers, and can be:

- Multi-Mode Fiber (MMF): less valuable (275-550 m);

- Single-Mode Fiber (SMF): its maximum length is 5 km.

- GBIC

Gigabit Ethernet introduces for the first time gigabit interface converters (GBIC), which are a common solution for having the capability of updating the physical layer without having to update the rest of the equipment: the Gigabit Ethernet board has not the physical layer integrated on board, but it includes just the logical part (from the data-link layer upward), and the user can plug into the dedicated board slots the desired GBIC implementing the physical layer.

10 Gigabit Ethernet

[edit | edit source]10 Gigabit Ethernet, standardized as IEEE 802.3ae (2002), raises the transmission speed to 10 Gbps and finally abandons the half-duplex mode, removing all the issues deriving from CSMA/CD.

It is not still used in access networks, but it is mainly being used:

- in backbones: it works over optical fiber (26 m to 40 km) because the twisted copper pair is no longer enough because of signal attenuation limitations;

- in datacenters: besides optical fibers, also very short cables are used to connect servers to top-of-the-rack (TOR) switches:[3]

- Twinax: coaxial cables, first used because units for transmission over twisted copper pairs were consuming too much power;

- 10Gbase T: shielded twisted copper pairs, having a very high latency;

- in Metropolitan Area Networks (MAN) and Wide Area Networks (WAN): 10 Gigabit Ethernet can be transported over already existing MAN and WAN infrastructures, although at a transmission speed decreased to 9.6 Gb/s.

40 Gigabit Ethernet and 100 Gigabit Ethernet

[edit | edit source]40 Gigabit Ethernet and 100 Gigabit Ethernet, both standardized as IEEE 802.3ba (2010), raise the transmission speed respectively to 40 Gbit/s and 100 Gbit/s: for the first time the transmission speed evolution is no longer at 10×, but it was decided to define a standard at an intermediate speed due to still high costs for 100 Gigabit Ethernet. In addition, 40 Gigabit Ethernet can be transported over the already existing DWDM infrastructure.

These speeds are used only in backbone because they are not suitable yet not only for hosts, but also for servers, because they are very close to internal speeds in processing units (bus, memory, etc.) → the bottleneck is no longer the network.

References

[edit | edit source]- ↑ In theory the frame should be stretched 10 times more, then to 640 bytes, but the standard decides otherwise.

- ↑ a b c preamble + SFD + Ethernet II DIX/IEEE 802.3 frame

- ↑ Please refer to section FCoE.

Advanced features on Ethernet networks

Autonegotiation

[edit | edit source]Autonegotiation is a plug-and-play oriented feature: when a network card connects to a network, it sends impulses by a particular encoding to try to determine network characteristics:

- mode: half-duplex or full-duplex (over twisted pair);

- transmission speed: starting from the highest speed down to the lowest one (over twisted pair and optical fiber).

- Negotiation sequence

- 1 Gb/s full-duplex

- 1 Gb/s half-duplex

- 100 Mb/s full-duplex

- 100 Mb/s half-duplex

- 10 Mb/s full-duplex

- 10 Mb/s half-duplex

Issues

[edit | edit source]Autonegotiation is possible only if the station connects to another host or to a bridge: hubs in fact work at fixed speed, hence they can not negotiate anything. If during the procedure the other party does not respond, the negotiating station assumes it is connected to a hub → it automatically sets the mode to half-duplex.

If the user manually configures his own network card to work always in full-duplex mode disabling the autonegotiation feature, when he connects to a bridge the latter, not receiving reply from the other party, assumes to be connected to a hub and sets the half-duplex mode → the host considers sending and receiving at the same time over the channel as possible, while the bridge considers that as a collision on the shared channel → the bridge detects a lot of collisions which are false positives, and erroneously discards a lot of frames → every discarded frame is recovered by TCP error-recovery mechanisms, which however are very slow → the network access speed is very low. Very high values in collision counters on a specific bridge port are symptom of this issue.

Increasing the maximum frame size

[edit | edit source]The original Ethernet specification defines:

- maximum frame size: 1518 bytes;

- maximum payload size (MTU): 1500 bytes.

However in several cases it would be useful to have a frame larger than normal:

- additional headers:

#Baby Giant frames

#Baby Giant frames - bigger payload:

#Jumbo Frames

#Jumbo Frames - less CPU interrupts:

#TCP offloading

#TCP offloading

Baby Giant frames

[edit | edit source]Baby Giant frames are frames having sizes bigger than the maximum size of 1518 bytes defined by the Ethernet original specification, because of inserting new data-link-layer headers in order to transport additional information about the frame:

- frame VLAN tagging (IEEE 802.1Q) adds 4 bytes:

Local Area Network design/Virtual LANs#Frame tagging;

Local Area Network design/Virtual LANs#Frame tagging; - VLAN tag stacking (IEEE 802.1ad) adds 8 bytes:

Local Area Network design/Virtual LANs#Tag stacking;

Local Area Network design/Virtual LANs#Tag stacking; - MPLS adds 4 bytes per stacked label:

Computer network technologies and services/MPLS#MPLS header.

Computer network technologies and services/MPLS#MPLS header.

In Baby Giant frames the maximum payload size (e.g. IP packet) is unchanged → a router, when receiving a Baby Giant frame, in re-generating the data-link layer can envelop the payload into a normal Ethernet frame → interconnecting LANs having different supported frame maximum sizes is not a problem.

The IEEE 802.3as standard (2006) proposes to extend the maximum frame size to 2000 bytes, keeping the MTU size unchanged.

Jumbo Frames

[edit | edit source]Jumbo Frames are frames having sizes bigger than the maximum size of 1518 bytes defined by the Ethernet original specification:

- Mini Jumbos: frames having MTU size equal to 2500 bytes;

- Jumbos (or 'Giant' or 'Giant Frames'): frames having MTU size up to 9 KB;

because of enveloping bigger payloads in order to:

- transport storage data: typically elementary units of storage data are too big to be transported in a single Ethernet frame:

- the NFS protocol for NASes transports data blocks of about 8 KB;

- the FCoE protocol for SANs and the FCIP protocol for SAN interconnection transport Fibre Channel frames of about 2.5 KB;

- the iSCSI protocol for SANs transports SCSI commands of about 8 KB;

- reduce the header overhead in terms of:

- saving for bytes: it is not very significant, especially considering the high available bandwidth in today's networks;

- processing capability for TCP mechanisms (sequence numbers, timers...): every TCP packet triggers a CPU interrupt.

If a LAN using Jumbo Frames is connected to a LAN not using Jumbo Frames, all Jumbo Frames will be fragmented at the IP layer, but IP fragmentation is not convenient from the performance point of view → Jumbo Frames are used in independent networks within particular scopes.

TCP offloading

[edit | edit source]Network cards with the TCP offloading feature can automatically condense on-the-fly multiple TCP payloads into a single IP packet before turning it to the operating system (sequence numbers and other parameters are internally handled by the network card) → the operating system, instead of having to process multiple small packets by triggering a lot of interrupts, sees a single bigger IP packet and can make the CPU process it at once → this reduces overhead due to TCP mechanisms.

PoE

[edit | edit source]Bridges having the Power over Ethernet (PoE) feature are able to distribute electrical power (up to few tens of Watts) over Ethernet cables (only twisted copper pairs), to connect devices with moderate power needs (VoIP phones, wi-fi access points, surveillance cameras, etc.) avoiding additional cables for electrical power.

Non-PoE stations can be connected to PoE sockets.

Issues

[edit | edit source]- energy consumption: a PoE bridge consumes a lot more electrical power (e.g. 48 ports each one at 25 W consume 1.2 kW) and is more expensive than a traditional bridge;

- service continuity: a failure of the PoE bridge or a power blackout cause telephones, which instead are an important service in case of emergency, to stop working → uninterruptible power supplies (UPS) need to be set but they, instead of providing just traditional telephones with electrical power, have to provide the whole data infrastructure with electrical power.

Spanning Tree Protocol

The loop problem

[edit | edit source]

If the network has a logical ring in topology, some frames may start moving indefinitely in a chain multiplication around the loop:

- broadcast/multicast frames: they are always propagated on all ports, causing a broadcast storm;

- unicast frames sent to a station yet not included in the filtering database or inexistent: they are sent in flooding.

Moreover, bridges in the loop may have their filtering databases inconsistent, that is the entry in the filtering database related to the sender station changes its port every time a frame replication arrives through a different port, making the bridge believe that the frame has come from the station itself moving.

Spanning tree algorithm

[edit | edit source]| This page includes CC BY-SA contents from Wikipedia: Spanning Tree Protocol. |

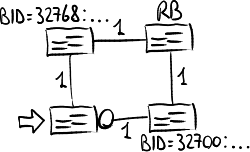

The spanning tree algorithm allows to remove logical rings from the network physical topology, by disabling links[1] to transform a mesh topology (graph) into a tree called spanning tree, whose root is one of the bridges called root bridge.

Each link is characterized by a cost based on the link speed: given a root bridge, multiple spanning trees can be built connecting all bridges one with each other, but the spanning tree algorithm chooses the spanning tree made up of the lowest cost edges.

- Parameters

- Bridge Identifier: it identifies the bridge uniquely and includes:

- bridge priority: it can be set freely (default value = 32768);

- bridge MAC address: it is chosen between the MAC address of his ports by a vendor-specific algorithm and can not be changed;

- Port Identifier: it identifies the bridge port and includes:

- port priority: it can be set freely (default value = 128);

- port number: in theory a bridge can not have more than 256 ports → in practice also the port priority field can be used if needed;

- Root Path Cost: it is equal to the sum of the costs of all links, selected by the spanning tree algorithm, traversed to reach the root bridge (the cost for traversing a bridge is null).

Criteria

[edit | edit source]The spanning tree can be determined by the following criteria.

Root bridge

[edit | edit source]A root bridge is the root for the spanning tree: all the frames going from one of its sub-trees to another one must cross the root bridge.[2]

The bridge with the smallest Bridge Identifier is selected as the root bridge: the root of the spanning tree will be therefore the bridge with the lowest priority, or if there is a tie the one with the lowest MAC address.

Root port

[edit | edit source]- Criteria for selecting a root port for the bridge the arrow points to.

-

Least-cost path.

-

Smallest remote Bridge ID.

-

Smallest remote Port ID.

-

Smallest local Port ID.

A root port is the port in charge of connecting to the root bridge: it sends frames toward the root bridge and receives frames from the root bridge.

- The cost of each possible path is determined from the bridge to the root. From these, the one with the smallest cost (a least-cost path) is picked. The port connecting to that path is then the root port for the bridge.

- When multiple paths from a bridge are least-cost paths toward the root, the bridge uses the neighbor bridge with the smallest Bridge Identifier to forward frames to the root. The root port is thus the one connecting to the bridge with the lowest Bridge Identifier.

- When two bridges are connected by multiple cables, multiple ports on a single bridge are candidates for root port. In this case, the path which passes through the port on the neighbor bridge that has the smallest Port Identifier is used.

- In a particular configuration with a hub where the remote Port Identifiers are equal, the path which passes through the port on the bridge itself that has the smallest Port Identifier is used.

Designated port

[edit | edit source]- Criteria for selecting a designated port for the link the arrow points to.

-

Least-cost path.

-

Smallest local Bridge ID.

-

Smallest local Port ID.

A designated port is the port in charge of serving the link: it sends frames to the leaves and receives frames from the leaves.

- The cost of each possible path is determined from each bridge connected to the link to the root. From these, the one with the smallest cost (a least-cost path) is picked. The port connected to the link of the bridge which leads to that path is then the designated port for the link.

- When multiple bridges on a link lead to a least-cost path to the root, the link uses the bridge with the smallest Bridge Identifier to forward frames to the root. The port connecting that bridge to the link is the designated port for the link.

- When a bridge is connected to a link with multiple cables, multiple ports on a single bridge are candidates for designated port. In this case, the path which passes through the port on the bridge itself that has the smallest Port Identifier is used.

Blocked port

[edit | edit source]A blocked port never sends frames on its link and discards all the received frames (except for BPDU configuration messages).

Any active port that is not a root port or a designated port is a blocked port.

BPDU messages

[edit | edit source]The above criteria describe one way of determining what spanning tree will be computed by the algorithm, but the rules as written require knowledge of the entire network. The bridges have to determine the root bridge and compute the port roles (root, designated, or blocked) with only the information that they have.

Since bridges can exchange information about Bridge Identifiers and Root Path Costs, Spanning Tree Protocol (STP), standardized as IEEE 802.1D (1990), defines messages called BPDUs.

BPDU format

[edit | edit source]BPDUs have the following format:

| 1 | 7 | 8 | 16 | 24 | 32 |

| Protocol ID (0) | Version (0) | BPDU Type (0) | |||

| TC | 000000 | TCA | Root Priority | ||

| Root MAC Address | |||||

| Root Path Cost | |||||

| Bridge Priority | |||||

| Bridge MAC Address | |||||

| Port Priority | Port Number | Message Age | |||

| Max Age | Hello Time | ||||

| Forward Delay | |||||

| 16 | 24 | 32 |

| Protocol ID (0) | Version (0) | BPDU Type (0x80) |

where the fields are:

- Protocol Identifier field (2 bytes): it specifies the IEEE 802.1D protocol (value 0);

- Version field (1 byte): it distinguishes Spanning Tree Protocol (value 0) from Rapid Spanning Tree Protocol (value 2);

- BPDU Type field (1 byte): it specifies the type of BPDU:

- Configuration BPDU (CBPDU) (value 0): used for spanning tree computation, that is to determine the root bridge and the port states:

#Ingress of a new bridge;

#Ingress of a new bridge; - Topology Change Notification BPDU (TCN BPDU) (value 0x80): used to announce changes in the network topology in order to update entries in filtering databases:

#Announcing topology changes;

#Announcing topology changes;

- Configuration BPDU (CBPDU) (value 0): used for spanning tree computation, that is to determine the root bridge and the port states:

- Topology Change (TC) flag (1 bit): set by the root bridge to inform all bridges that a change occurred in the network;

- Topology Change Acknowledgement (TCA) flag (1 bit): set by the root bridge to inform the bridge which detected the change that its Topology Change Notification BPDU has been received;

- Root Identifier field (8 bytes): it specifies the Bridge Identifier of the root bridge in the network:

- Root Priority field (2 bytes): it includes the priority of the root bridge;

- Root MAC Address field (6 bytes): it includes the MAC address of the root bridge;

- Bridge Identifier field (8 bytes): it specifies the Bridge Identifier of the bridge which is propagating the Configuration BPDU:

- Bridge Priority field (2 bytes): it includes the priority of the bridge;

- Bridge MAC Address field (6 bytes): it includes the MAC address of the bridge;

- Root Path Cost field (4 bytes): it includes the path cost to reach the root bridge, as seen by the bridge which is propagating the Configuration BPDU;

- Port Identifier field (2 bytes): it specifies the Port Identifier of the port which the bridge is propagating the Configuration BPDU on:

- Port Priority field (1 byte): it includes the port priority;

- Port Number field (1 byte): it includes the port number;

- Message Age field (2 bytes): value, initialized to 0, which whenever the Configuration BPDU crosses a bridge is increased by the transit time throughout the bridge;[3]

- Max Age field (2 bytes, default value = 20 s): if the Message Age reaches the Max Age value, the received Configuration BPDU is no longer valid;[3]

- Hello Time field (2 bytes, default value = 2 s): it specifies how often the root bridge generates the Configuration BPDU;[3]

- Forward Delay field (2 bytes, default value = 15 s): it specifies the waiting time before forcing a port transition to another state.[3]

BPDU generation and propagation

[edit | edit source]Only the root bridge can generate Configuration BPDUs: all the other bridges simply propagate received Configuration BPDUs on all their designated ports. Root ports are the ones that receive the best Configuration BPDUs, that is with the lowest Message Age value = lowest Root Path Cost. Blocked ports never send Configuration BPDUs but keep listening to incoming Configuration BPDUs.

Instead Topology Change Notification BPDUs can be generated by any non-root bridge, and they are always propagated on root ports.

When a bridge generates/propagates a BPDU frame, it uses the unique MAC address of the port itself as a source address, and the STP multicast address 01:80:C2:00:00:00 as a destination address:

| 6 bytes | 6 bytes | 2 bytes | 1 byte | 1 byte | 1 byte | 4 bytes | |

| 01:80:C2:00:00:00 (multicast) | source bridge address (unicast) | ... | 0x42 | 0x42 | 0x03 | BPDU | ... |

| destination MAC address | source MAC address | length | DSAP | SSAP | CTRL | payload | FCS |

Dynamic behavior

[edit | edit source]Port states

[edit | edit source]- Disabled

- A port switched off because no links are connected to the port.

- Blocking

- A port that would cause a loop if it were active. No frames are sent or received over a port in blocking state (Configuration BPDUs are still received in blocking state), but it may go into forwarding state if the other links in use fail and the spanning tree algorithm determines the port may transition to the forwarding state.

- Listening

- The bridge processes Configuration BPDUs and awaits possible new information that would cause the port to return to the blocking state. It does not populate the filtering database and it does not forward frames.

- Learning

- While the port does not yet forward frames, the bridge learns source addresses from frames received and adds them to the filtering database. It populates the filtering database, but does not forward frames.

- Forwarding

- A port receiving and sending data. STP still keeps monitoring incoming Configuration BPDUs, so the port may return to the blocking state to prevent a loop.

| port state | port role | receive frames? | receive and process CBPDUs? | generate or propagate CBPDUs? | update filtering database? | forward frames? | generate or propagate TCN BPDUs? |

|---|---|---|---|---|---|---|---|

| disabled | blocked | no | no | no | no | no | no |

| blocking | yes | yes | no | no | no | no | |

| listening | (on transitioning) | yes | yes | yes | no | no | no |

| learning | designated | yes | yes | yes | yes | no | no |

| root | yes | yes | no | yes | no | sì | |

| forwarding | designated | yes | yes | yes | yes | yes | no |

| root | yes | yes | no | yes | yes | yes |

Ingress of a new bridge

[edit | edit source]When a new bridge is connected to a data-link network, assuming it has a Bridge Identifier highest than the one of the current root bridge in the network:

- at first the bridge, without knowing anything about the rest of the network (yet), assumes to be the root bridge: it set all its ports as designated (listening state) and starts generating Configuration BPDUs on them, saying it is the root bridge;

- the other bridges receive Configuration BPDUs generated by the new bridge and compare the Bridge Identifier of the new bridge with the one of the current root bridge in the network, then they discard them;

- periodically the root bridge in the network generates Configuration BPDUs, which the other bridges receive from their root ports and propagate through their designated ports;

- when the new bridge receives a Configuration BPDU from the root bridge in the network, it learns it is not the root bridge because another bridge having a Bridge Identifier lower than its one exists, then it stops generating Configuration BPDUs and sets the port from which it received the Configuration BPDU from the root bridge as a root port;

- also the new bridge starts propagating Configuration BPDUs, this time related to the root bridge in the network, on all its other (designated) ports, while it keeps receiving Configuration BPDUs propagated by the other bridges;

- when a new bridge receives on a designed port a Configuration BPDU 'best', based on criteria for designated port selection, with respect to the Configuration BPDU it is propagating on that port, the latter stops propagating Configuration BPDUs and turns to blocked (blocking state);

- after a time Forward Delay long, ports still designated and the root port switch from the listening state to the learning one: the bridge starts populating its filtering database, to avoid the bridge immediately starts sending the frames in flooding overloading the network;

- after a time Forward Delay long, designated ports and the root port switch from the learning state to the forwarding one: the bridge can propagate also normal frames on those ports.

Changes in the network topology

[edit | edit source]Recomputing spanning tree

[edit | edit source]When a topology change occurs, STP is able to detect the topology change, thanks to the periodic generation of Configuration BPDUs by the root bridge, and to keep guaranteeing there are no rings in topology, by recomputing if needed the spanning tree, namely the root bridge and the port states.

Link fault

[edit | edit source]When a link (belonging to the current spanning tree) faults:

- Configuration BPDUs which the root bridge is generating can not reach the other network portion anymore: in particular, the designed port for the faulted link does not send Configuration BPDUs anymore;

- the last Configuration BPDU listened to by the blocked port beyond the link 'ages' within the bridge itself, that is its Message Age is increased over time;

- when the Message Age reaches the Max Age value, the last Configuration BPDU listened to expires and the bridge starts over again electing itself as the root bridge: it resets all its ports as designated, and starts generating Configuration BPDUs saying it is the root bridge;

- STP continues analogously to the case previously discussed related to the ingress of a new bridge:

- if a link not belonging to the spanning tree connecting the two network portions exists, the blocked port connected to that link at last will become root port in forwarding state, and the link will join the spanning tree;

- otherwise, if the two network portions can not be connected one with each other anymore, in every network portion a root bridge will be elected.

Insertion of a new link

[edit | edit source]When a new link is inserted, the ports which the new link is connected to become designated in listening state, and start propagating Configuration BPDUs generated by the root bridge in the network → new Configuration BPDUs arrive through the new link:

- if the link has a cost low enough, the bridge connected to the link starts receiving from a non-root port Configuration BPDUs having a Root Path Cost lower than the one from the Configuration BPDUs received from the root port → the root port is updated so that the root bridge can be reached through the best path (based on criteria for root port selection), as well as designated and blocked ports are possibly updated accordingly;

- if the link has a too high cost, Configuration BPDUs crossing it have a too high Root Path Cost → one of the two ports connected to the new link becomes blocked and the other one keeps being designated (based on criteria for designated port selection).

Announcing topology changes

[edit | edit source]When after a topology change STP alters the spanning tree by changing the port states, it does not change entries in filtering databases of bridges to reflect the changes → entries may be out of date: for example, the frames towards a certain destination may keep being sent on a port turned to blocked, until the entry related to that destination expires because its ageing time goes to 0 (in the worst case: 5 minutes!).

STP contemplates a mechanism to speed up the convergence of the network with respect to the filtering database when a topology change is detected:

- the bridge which detected the topology change generates a Topology Change Notification BPDU through its root port towards the root bridge to announce the topology change;[4]

- crossed bridges immediately forward the Topology Change Notification BPDU through their root ports;

- the root bridge generates back a Configuration BPDU with Topology Change and Topology Change Acknowledgement flags set to 1, which after being forwarded back by crossed bridges will be received by the bridge which detected the topology change;[5]

- the root bridge generates on all its designated ports a Configuration BPDU with the Topology Change flag set;

- every bridge, when receiving the Configuration BPDU:

- drops all the entries in its filtering database having ageing times lower than the Forward Delay;

- in turn propagates the Configuration BPDU on all its designated ports (keeping the Topology Change flag set);

- the network temporarily works in a sub-optimal condition because more frames are sent in flooding, until bridges populate again their filtering databases with new paths by learning algorithms.

Issues

[edit | edit source]Performance

[edit | edit source]The STP philosophy is 'deny always, allow only when sure': when a topology change occurs, frames are not forwarded until it is sure that the transient has dead out, that is there are no loops and the network is in a coherent status, also introducing long waiting times at the expense of convergence speed and capability of reaching some stations.

Assuming to follow the timers recommended by the standard, namely:

- the timing values recommended by the standard are adopted: Max Age = 20 s, Hello Time = 2 s, Forward Delay = 15 s;

- the transit time through every bridge by a BPDU does not exceed the TransitDelay = HelloTime ÷ 2 = 1 s;

more than 7 bridges in a cascade between two end-systems can not be connected so that a Configuration BPDU can cross the entire network twice within the Forward Delay: if an eighth bridge was put in a cascade, in fact, in the worst case the ports at the new bridge, self-elected as the root bridge, would turn from the listening state to the forwarding one[6] before the Configuration BPDU coming from the root bridge at the other end of the network can arrive in time at the new bridge:[7]

With the introduction of the learning state, after a link fault the network takes approximately 50 seconds to converge to a coherent state:

- 20 s (Max Age): required for the last Configuration BPDU listened to to expire and for the fault to be detected;

- 15 s (Forward Delay): required for the port transition from the listening state to the learning one;

- 15 s (Forward Delay): required for the port transition from the learning state to the forwarding one.

In addition, achieving a coherent state within the network does not result necessarily in ending the disservice experienced by the user: in fact the fault may reflect also at the application layer, very sensitive to connectivity losses beyond a certain threshold:

- database management systems may start long fault-recovery procedures;

- multimedia networking applications generating inelastic traffic (such as VoIP applications) suffer much from delay variations.

It would be possible to try customizing the values related to timing parameters to increase the convergence speed and extend the maximum bridge diameter, but this operation is not recommended:

- without paying attention one risks reducing network reactivity to topology changes and impairing network functionality;

- at first sight it appears enough to work just on the root bridge because those values are all propagated by the root bridge to the whole network, but indeed if the root bridge changes the new root bridge must advertise the same values → those parameters must actually be updated on all bridges.

Often STP is disabled on edge ports, that is the ports connected directly to the end hosts, to relieve disservices experienced by the user:

- due to port transition delays, a PC connecting to the network would initially be isolated for a time two Forward Delays long;

- connecting a PC represents a topology change → the cleanup of old entries triggered by the announcement of the topology change would considerably increase the number of frames sent in flooding in the network.

However exclusively hosts must be connected to edge ports, otherwise loops in the network could be created → some vendors do not allow this: for example, Cisco's proprietary mechanism PortFast achieves the same objective without disabling STP on edge ports, being able to make them turn immediately to the forwarding state and to detect possible loops on them (that is two edge ports connected one to each other through a direct wire).

Scalability

[edit | edit source]Given a root bridge, multiple spanning trees can be built connecting all bridges one with each other, but the spanning tree algorithm chooses the spanning tree made up of the lowest cost edges. In this way, paths are optimized only with respect to the root of the tree:

- disabled links are altogether unused, but someone still has to pay for keeping them active as secondary links for fault tolerance;

- load balancing is not possible to distribute traffic over multiple parallel links → links belonging to the selected spanning tree have to sustain also the load for the traffic which, if there was not STP, would take a shorter path by crossing disabled links:

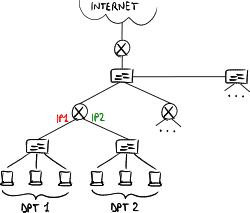

An IP network instead is able to organize traffic better: the spanning tree is not unique in the entire network, but every source can compute its own tree and send traffic to the shortest path guaranteeing a higher link load balancing.

Virtual LANs (VLAN) solve this problem by setting up multiple spanning trees.

Unidirectional links

[edit | edit source]STP assumes every link is bidirectional: if a link faults, frames can not be sent in either of two directions. Indeed fiber optical cables are unidirectional → to connect two nodes two optical fiber cables are needed, one for communication in one direction and another for communication in the opposite direction, and a fault on one of the two cables stops only traffic in one direction.

If one of the two unidirectional links faults, a loop may arise on the other link despite STP: Configuration BPDUs are propagated unidirectionally from the root to the leaves → if the direct propagation path breaks, the bridge at the other endpoint stops receiving from that link Configuration BPDUs from the root bridge, then moves the root port to another link and, assuming there is no one on that link, sets the port as designated creating a loop:

Unidirectional Link Detection (UDLD) is a proprietary protocol of Cisco's able to detect whether there are faults on a unidirectional link thanks to a sort of 'ping', and to disable the port ('error disabled' state) instead of electing it as designated.

Positioning the root bridge

[edit | edit source]The location of the root bridge has heavy impact on the network:

- traffic from one side to another one of the network has to cross the root bridge → performance, in terms of aggregate throughput and bandwidth of ports, of the bridge selected as the root bridge should be enough to sustain a high amount of traffic;

- a star topology, where the root bridge is the star center, is to be preferred → every link connect only one bridge to the root bridge:

- more equable link load balancing: the link should not sustain traffic coming from other bridges;

- higher fault tolerance: a fault of the link affects only connectivity for one bridge;

- servers and datacenters should be placed near the root bridge in order to reduce the latency of data communication;

→ the priority needs to be customized to a very low value for the bridge which has to be the root bridge, so as not to risk that another bridge is elected as the root bridge.

The location of the backup bridge, meant to come into play in case of fault of a primary link or of the primary root bridge:

- fault of a primary link: the optimal configuration is a redundant star topology made up of secondary links, where every bridge is connected to the backup bridge by a secondary link;

- fault of the primary root bridge: the priority of the backup root bridge needs to be customized to a value slightly higher than the priority of the primary root bridge too, so as to force that bridge to be elected as the root bridge in case of fault.

Security

[edit | edit source]STP has not built-in security mechanisms against attacks from outside.

- Electing the user's bridge as the root bridge

A user may connect to the network a bridge with a very low priority forcing it to become the new root bridge and changing the spanning tree of the network. Cisco's proprietary feature BPDU Guard allows edge ports to propagate only Configuration BPDUs coming from inside the network, rejecting the ones received from outside (the port goes into 'error disabled' state).

- Rate limit on broadcast storm

Almost all professional bridges have some form of broadcast storm control able to limit the amount of broadcast traffic on ports by dropping excess traffic beyond a certain threshold, but these traffic meters can not distinguish between frames in a broadcast storm and broadcast frames sent by stations → they risk filtering legitimate broadcast traffic, and a broadcast storm is more difficult to be detected.

- Connecting bridges without STP

A single bridge without STP or with STP disabled can start pumping broadcast traffic into the network so that a loop outside the control of STP is created: connecting a bridge port directly to another one of the same bridge, or connecting the user's bridge to two internal bridge of the network through two redundant links, are examples.

- Multiple STP domains

Sometimes two different STP domains, each one with its own spanning tree, should be connected to the same shared channel (e.g. two providers with different STP domains in the same datacenter). Cisco's proprietary feature BPDU Filter disables sending and receiving Configuration BPDUs on ports at domain peripheries, to keep the spanning trees separated.

References

[edit | edit source]- ↑ Really the spanning tree algorithm blocks ports, not links: {{subst:vedi|#Port states}}

- ↑ Please pay attention to the fact that the traffic moving within the same sub-tree does not cross the root bridge.

- ↑ a b c d Time fields are expressed in units of 256th seconds (about 4 ms).

- ↑ The bridge keeps generating the Topology Change Notification BPDU every Hello Time, until it receives the acknowledge.

- ↑ The root bridge keeps generating back the acknowledgement Configuration BPDU for Max Age + Forward Delay.

- ↑ When this constraint was established, the learning state had not been introduced yet and the ports turned directly from the listening state to the forwarding one.

- ↑ Exactly the minimum value for the Forward Delay would be equal to 14 s, but a tolerance 1 s long was contemplated.

Rapid Spanning Tree Protocol

Rapid Spanning Tree Protocol (RSTP), standardized as IEEE 802.1w (2001), is characterized by a greater convergence speed with respect to STP in terms of:

Port roles and states

[edit | edit source]RSTP defines new port roles and states:

- discarding state: the port does not forward frames and it discards the received ones (except for Configuration BPDUs), unifying disabled, blocking and listening states;

- alternate role: the port, in discarding state, is connected to the same link as a designated port of another bridge, representing a fast replacement for the root port;

- backup role: the port, in discarding state, is connected to the same link as a designed port of the same bridge, representing a fast replacement for the designated port;

- edge role: just hosts can be connected to the port, being aimed to reduce, with respect to the classical STP, disservices experienced by users in connecting their hosts to the network.

| port state | port role | receive frames? | receive and process CBPDUs? | generate and propagate CBPDUs? | update filtering database? | forward frames? |

|---|---|---|---|---|---|---|

| discarding | alternate | yes | yes | no | no | no |

| backup | yes | yes | no | no | no | |

| designated[1] | yes | yes | yes | no | no | |

| learning | designated | yes | yes | yes | yes | no |

| root | yes | yes | no | yes | no | |

| forwarding | designated | yes | yes | yes | yes | yes |

| root | yes | yes | no | yes | yes | |

| edge | yes | yes | no | yes | yes |

Configuration BPDU format

[edit | edit source]Configuration BPDU has the following format:

| 1 | 2 | 4 | 6 | 7 | 8 | 12 | 16 | 24 | 32 |

| Protocol ID (0) | Version (2) | BPDU Type (2) | |||||||

| TC | P | R | S | A | TCA | Root Priority | STP Instance | ||

| Root MAC Address | |||||||||

| Root Path Cost | |||||||||

| Bridge Priority | STP Instance | ||||||||

| Bridge MAC Address | |||||||||

| Port Priority | Port Number | Message Age | |||||||

| Max Age | Hello Time | ||||||||

| Forward Delay | |||||||||

where there are some changes with respect to BPDUs in the classical STP:

- Version field (1 byte): it identifies RSTP as version number 2 (in STP it was 0);

- BPDU Type field (1 byte): it identifies the Configuration BPDU always as type 2 (in STP it was 0), since Topology Change Notification BPDUs do no longer exist;[2]

- 6 new flags: they handle the proposal/agreement mechanism:

- Proposal (P) and Agreement (A) flags (1 bit each one): they specify whether the port role is being proposed by a bridge (P = 1) or has been accepted by the other bridge (A = 1);

- 2 flags in Role field (R) (2 bits): they encode the proposed or accepted port role (00 = unknown, 01 = alternate/backup, 10 = root, 11 = designated);

- 2 flags in State field (S) (2 bits): they specify whether the port which the role is being proposed or has been accepted for is in learning (10) or forwarding (01) state;

- Root Identifier and Bridge Identifier fields (8 bytes each one): RSTP includes technical specifications from IEEE 802.1t (2001) which change the format of Bridge Identifier:

- Bridge Priority field (4 bits, default value = 8);

- STP Instance field (12 bits, default value = 0): used in Virtual LANs to enable multiple protocol instances within the same physical network:

Local Area Network design/Virtual LANs#PVST;

Local Area Network design/Virtual LANs#PVST; - Bridge MAC Address field (6 bytes): unchanged from IEEE 802.1D-1998;

- Root Path Cost field (4 bytes): RSTP includes technical specifications from IEEE 802.1t (2001) which change the recommended values for Port Path Cost including new port speeds (up to 10 Tb/s);