RAC Attack - Oracle Cluster Database at Home/Print Book


Overview

RAC Attack is a free curriculum and platform for hands-on learning labs related to Oracle RAC (cluster database). We believe that the best way to learn about RAC is with a lot of hands-on experience. This curriculum has been used by individuals at home and by instructors in classes since 2008.

The original contributors were Jeremy Schneider, Dan Norris and Parto Jalili. The handbook was published at http://www.ardentperf.com for several years before its migration to this wikibook. All RAC Attack content was released under the CC-BY-SA license in May 2011 when this project was initiated.

To learn about upcoming RAC Attack events or to organize one yourself, visit the Events page. You can use the shortcut http://racattack.org/events to access this page at any time.

The goal of this workbook is to help students learn about Oracle RAC cluster databases through guided examples. Specifically, it covers 11gR2 RAC on VMware Server, with either ASM or a Shared Filesystem, running on Oracle Enterprise Linux 5. It can be used by organizers of events, by instructors in classes or by individuals at home.

RAC Attack goes into more depth than other tutorials currently available:

- Every keystroke and mouse click is carefully documented here.

- The process is covered from the very beginning to the very end - from the very first installation of VMware on your laptop to various experiments on your running cluster database... with everything in between.

- The labs in the main workbook have been tested thoroughly and repeatedly.

Prerequisites

Students should be able to navigate in Unix - for example, listing files with "ls".

Hardware Minimum Requirements

Most modern laptop and desktop computers should be powerful enough to run a two-node virtual RAC cluster. In a nutshell, these are the recommended minimums:

- Dual-core 2GHz 32-bit processor (it's been done with single-core)

- 4GB memory (it's been done with 3GB)

- Two physical hard disks - not partitions (it's been done with one)

- External HD for laptops (it's been done with certain USB flash memory sticks)

- 50 GB + 10.5 GB free space (it's been done with slightly less)

- Windows XP or Vista (Linux & Mac & Windows 7/8 are not directly covered in these instructions, but a supplementary PDF covering VirtualBox can be downloaded)

Making This Lab Successful

- Read about: Planning Your Time

- Focus on what you can learn.

- Choose as many specific learning goals as possible and take your time to investigate them.

- Be creative and experiment.

- Take risks and don't be afraid to break things.

- Take advantage of the classroom or event setting, if you're in one.

- You can "jumpstart" back to the beginning of a lab with one click.

- Record discoveries and questions to share with others.

- Help each other out. There are more participants than instructors!

- When possible, cut-and-paste steps directly into a PuTTY SSH terminal session.

Lab Tips

- All passwords are racattack

- Always choose "I moved this VM" when asked, unless instructions specifically say to choose "copied".

- If using your own laptop, start the VMs one after another. That is, wait until the first VM completes bootup - including clusterware and database - before starting the second.

- Classroom specific tips:

- Common login account for Workstation and VMware console: "admin"

- Common changes from the lab handbook:

- Use 5GB shared disks – not 3.25GB

- Use 900MB memory – not 760MB

- Jumpstarts could take longer than you think... read: Planning Your Time

- "RAC11g" directory is often at C:\RAC11g

- Handout is available with stretch goals for advanced participants

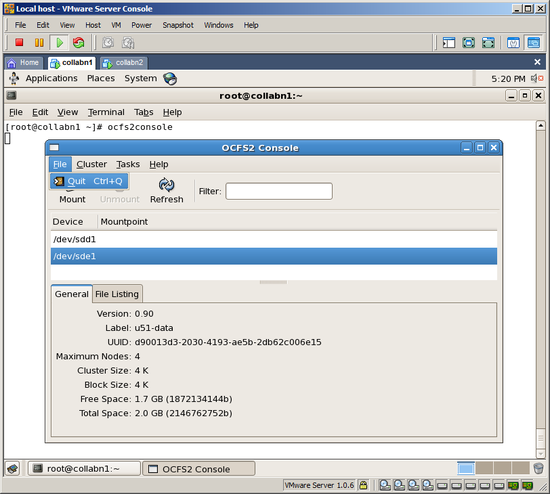

Storage Overview

| Device | ASM | Shared FS |
|---|---|---|
| /dev/sdb | DATA | /u51 (/u61) |
| /dev/sdc | BACKUP | /u52 |

Networking Overview

| Network | collabn1 | collabn2 |
|---|---|---|
| Interconnect | 172.16.100.51 | 172.16.100.52 |
| Administration | 192.168.78.51 | 192.168.78.52 |
| VIP | 192.168.78.61 | 192.168.78.62 |
| SCAN | 192.168.78.250 | |
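The plan above uses two address ranges: the interconnect addresses share 172.16.100.x, and the administration, VIP and SCAN addresses share 192.168.78.x, matching the VMnet1 and VMnet8 subnets set up later in Setup Virtual Networks. The shell sketch below checks that every planned address falls on the expected network, which can catch a typo if you adapt the plan. It assumes 255.255.255.0 (/24) netmasks, and the function names are hypothetical helpers, not part of RAC Attack.

```shell
#!/bin/sh
# Sketch: check that each planned address sits on the expected virtual
# network. Assumes /24 (255.255.255.0) netmasks, so two addresses are on
# the same network when their first three octets match.

INTERCONNECT_NET=172.16.100.0   # VMnet1 (host-only): interconnect
ADMIN_NET=192.168.78.0          # VMnet8 (NAT): administration, VIP, SCAN

# net24 IP: print the /24 network address (a.b.c.0) of an IPv4 address.
net24() {
  echo "$1" | awk -F. '{ printf "%s.%s.%s.0\n", $1, $2, $3 }'
}

# check_addr LABEL IP EXPECTED_NET: print a result line; return 1 on mismatch.
check_addr() {
  actual=$(net24 "$2")
  if [ "$actual" = "$3" ]; then
    echo "OK: $1 $2 is on $3"
  else
    echo "MISMATCH: $1 $2 is on $actual, expected $3"
    return 1
  fi
}

# check_plan: addresses copied from the Networking Overview table.
check_plan() {
  rc=0
  check_addr "collabn1 interconnect" 172.16.100.51  "$INTERCONNECT_NET" || rc=1
  check_addr "collabn2 interconnect" 172.16.100.52  "$INTERCONNECT_NET" || rc=1
  check_addr "collabn1 admin"        192.168.78.51  "$ADMIN_NET" || rc=1
  check_addr "collabn2 admin"        192.168.78.52  "$ADMIN_NET" || rc=1
  check_addr "collabn1 VIP"          192.168.78.61  "$ADMIN_NET" || rc=1
  check_addr "collabn2 VIP"          192.168.78.62  "$ADMIN_NET" || rc=1
  check_addr "SCAN"                  192.168.78.250 "$ADMIN_NET" || rc=1
  return $rc
}
```

After sourcing the script, run check_plan; it prints one line per address and returns non-zero if any address lands on the wrong network.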

Planning Your Time

For the most benefit, you must plan your time carefully. There will not be enough time to complete all of the labs - so choose the ones which most interest you.

If you are using your own computer at home or at an event, then you always need to complete the first lab (Hardware and Windows Preparation) before you can jumpstart to any following labs. If you are in a class, then the instructor has probably completed the first lab for you, and you can begin with a jumpstart.

Overview


Time

These times were gathered with a laptop just meeting the recommended minimum requirements. In addition to the wait times listed below, we suggest that you reserve about 40 minutes of work time to complete any given lab.

Downloads only apply to home users. If you are at an event or a class, then the organizers have already downloaded the software for you.

| Jumpstart | Size | Next Lab | Jumpstart Time | Wait Times in the Lab | Build Time |
|---|---|---|---|---|---|
| | | Hardware and Windows Preparation | | Download: VMware Server (500MB); Download: OEL (3GB) | |
| 0 | | Create VM | | 15 min: Linux Install; Download: Oracle Cluster & Database (4.5GB) | |
| 1 | 3 GB | Create Cluster | 4 min: jumpstart | 5 min: Copy VM; 3 min: startup collabn1; 3 min: startup collabn2 | 6 min |
| 2 | 13 GB | Grid Install (ASM or Shared FS) | 11 min: jumpstart; 3 min: startup nodes | 25 min: GI Install; 10 min: root.sh collabn1; 8 min: root.sh collabn2 | 17 min |
| 3 | 20 GB | RAC Install | 18 min: jumpstart; 4 min: startup collabn1; 4 min: startup collabn2 | 42 min: DB Install | 30 min |
| 4 | 27 GB | Create Database | 25 min: jumpstart; 4 min: startup collabn1; 4 min: startup collabn2 | 22 min: DB Create | 45 min |
| 5 | 28 GB | Rolling Patches, or Part II: Exploring RAC (w/o PSU) | 25 min: jumpstart; 6 min: startup collabn1; 6 min: startup collabn2 | 22 min: GI Patch collabn1; 20 min: DB Patch collabn1; 22 min: GI Patch collabn2; 20 min: DB Patch collabn2; 5 min: catbundle | 45 min |
| 6 | 37 GB | Part II: Exploring RAC (w/PSU) | 35 min: jumpstart; 6 min: startup collabn1; 6 min: startup collabn2 | | 60 min |

Hardware and Windows Preparation

Hardware and Windows Minimum Requirements

This handbook will walk you through the process of creating a two-node Oracle RAC cluster on your own laptop or desktop computer.

A detailed explanation of virtualization is beyond the scope of this lab, but here is a simple overview of what we are building:

Hardware Minimum Requirements

Most modern laptop and desktop computers should be powerful enough to run a two-node virtual RAC cluster. In a nutshell, these are the recommended minimums:

- Dual-core 2GHz 32-bit processor (it's been done with single-core)

- 4GB memory (it's been done with 3GB)

- Two physical hard disks - not partitions (it's been done with one)

- External HD for laptops (it's been done with certain USB flash memory sticks)

- 50 GB + 10.5 GB free space (it's been done with slightly less)

- A filesystem that supports files larger than 2GB, e.g. NTFS (it's been done without 2GB file support, on FAT32)

- Windows XP or Vista (Linux & Mac & Windows 7/8 are not directly covered in these instructions, but a supplementary PDF covering VirtualBox can be downloaded)

If your laptop or desktop does not meet these minimum requirements, then we do not recommend attempting the RAC Attack labs. Although it is possible to complete these labs with smaller configurations, there are many potential problems.

Although we recommend against trying, RAC Attack has been done with: single-core, 3GB memory, one physical hard drive, certain USB flash memory sticks, and less than 60GB of free space.

Windows Preparation

- Reboot Windows. After this clean boot-up, don't start any unneeded programs - for example email or instant messenger.

- If possible, disable virus scanning (so that your antivirus software[1] doesn't try to scan I/O on the virtual machine disks).

- Terminate any memory resident programs which are running, especially programs that help big applications "quick-start" (these often use up a lot of memory).

Hardware Verification

Processor

-

From the Start menu, choose or type RUN. In the dialog box that appears, type msinfo32.

-

Select System Summary in the left pane. Scroll down to Processor in the right pane. Verify that you have at least 2 cores and that the speed is at least 2000 MHz.

Memory

-

Scroll down to Memory in the right pane. Verify that Installed Physical Memory is at least 4GB. Also, verify that Available Memory is at least 1.4GB. You can terminate programs which run in the foreground and background to increase the Available Memory.

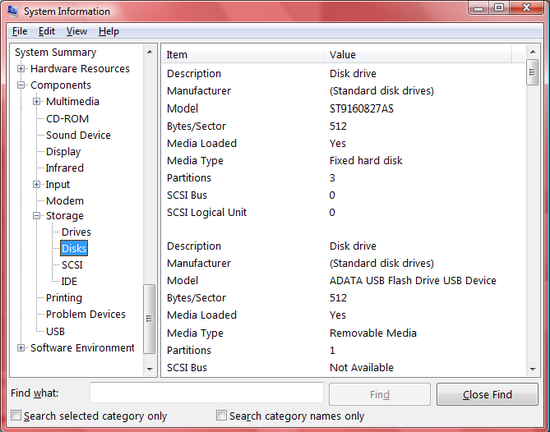

Hard Disks

-

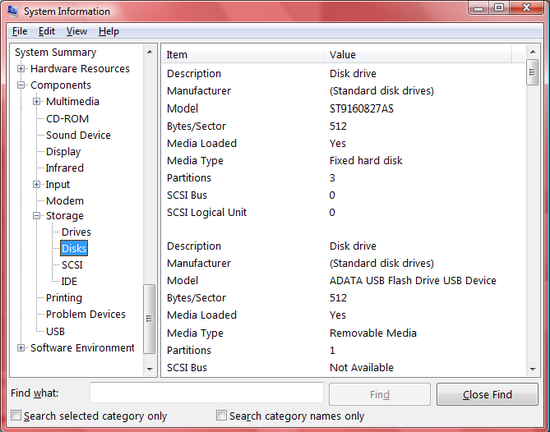

In the left pane, choose Components -> Storage -> Disks. Count the number of Disk Drive entries and verify that there are at least two.

Second Hard Disk

A single hard disk can max out as low as 45 MB/s. (This has been observed during RAC Attack testing.) Typical USB flash thumb drives perform very poorly and should not be used. Some USB flash thumb drives are marketed for performance; these typically reach a maximum of around 30 MB/s. In tests for RAC Attack, USB drives worked well for storing ISO images but somewhat poorly for storing virtual machine files.

For a detailed comparison of different connection types, refer to: http://www.pixelbeat.org/speeds.html

Free Space Requirements

RAC Attack is carefully designed to use three directories and spread out I/O for the best possible responsiveness during labs. You can choose how to spread the directories across your hard disks, and the best configuration may vary depending on your connection and storage type.

| Directory Name | Description | Free Space | Suggested Location |
|---|---|---|---|
| RAC11g | Operating System, Oracle RAC Software | 50 GB | Second Hard Disk (not flash) |
| RAC11g-shared | Oracle RAC Data | 7.5 GB | Windows Hard Disk* |
| RAC11g-iso | OEL Installation DVD (read-only) | 3 GB | Windows Hard Disk* |

*page file is usually on Windows Hard Disk

Note: do not create the RAC11g directory (with OS and Oracle Software) on a Flash Thumb Drive.

We worked hard to reduce the footprint of RAC Attack; however, with 11gR2 it's very difficult to reduce it beyond this.

Login Accounts

RAC Attack requires a local Windows user account with a password and with administrative privileges. You may log in using a network or password-free account only if that account has admin privileges and you also know the password for a local account which has admin privileges (the password must not be empty).

If your account is not local, or if your account does not have local admin privileges, then you can create an admin account by following the directions below.

Creating a Local Admin Account

-

From the Start menu, choose RUN. In the dialog box that appears, type cmd to launch a command prompt.

On Windows 7, find the "search programs" field at the bottom of the Start menu. Type cmd but don't press Enter. Right-click on "cmd" and then choose "Run as administrator".

After you have opened the command prompt as an admin user, run the following two commands:

net user admin racattack /add
net localgroup administrators admin /add

Login: admin

Password: racattack

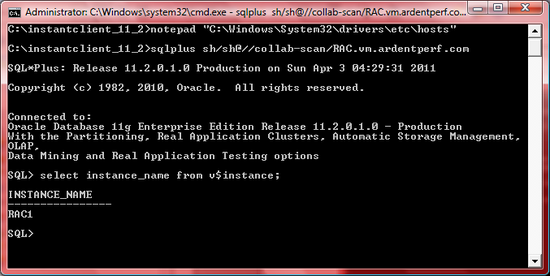

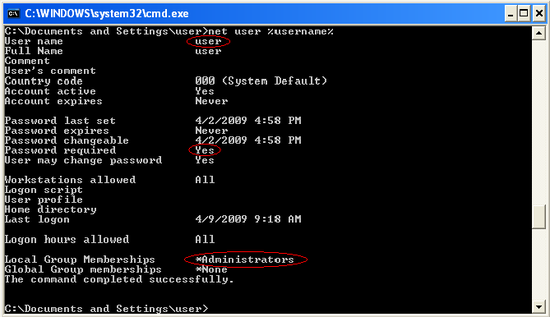

Verifying the Login Account

-

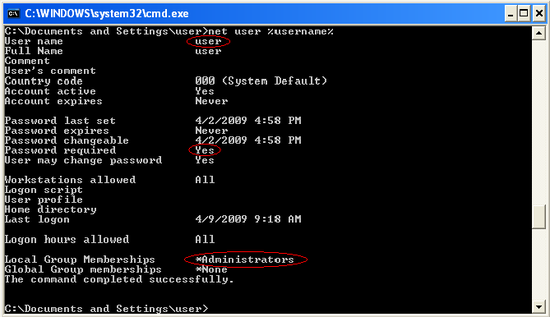

Type net user %username% (if you're using a network or password-free login account then replace %username% with the local password-ed admin account).

VERIFY the username, VERIFY that password required is yes, and VERIFY that local group memberships include Administrators.

Install VMware Server

-

These labs have been tested with version 2.0.1 of VMware Server. Go to the VMware Server website at http://www.vmware.com/go/getserver

-

Register for an account if you don't have one already.

- Write down your license number.

- If you are at home, then download VMware Server. If you are at a RAC Attack event then the instructor-provided Jumpstart Drive contains a copy of VMware Server, so that you don't need to download it. (However you still need a license number from the VMware website.)


-

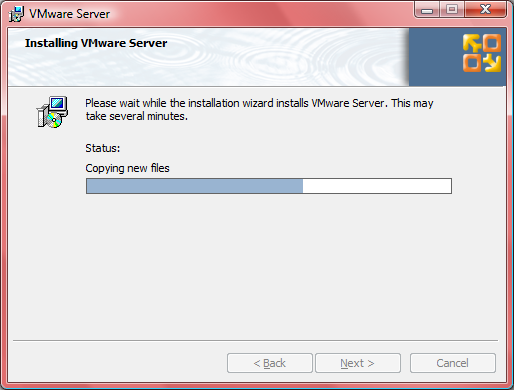

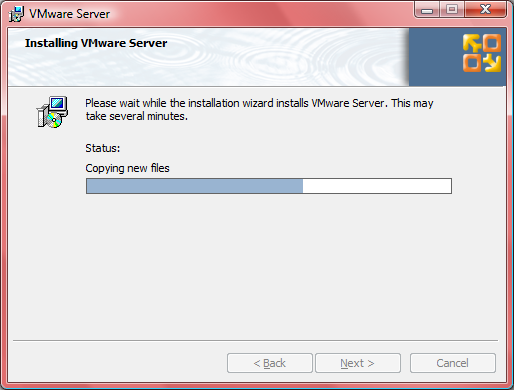

Run the VMware installer.

-

Accept the license agreement and all default options during the installation process.

-

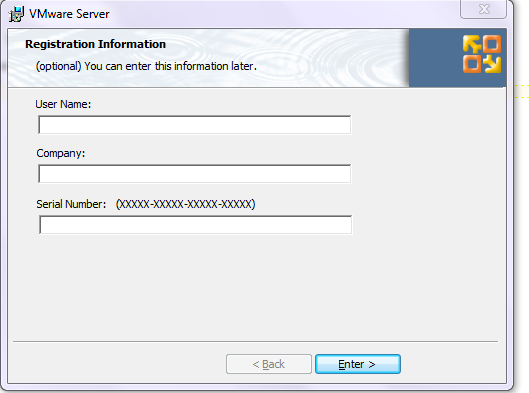

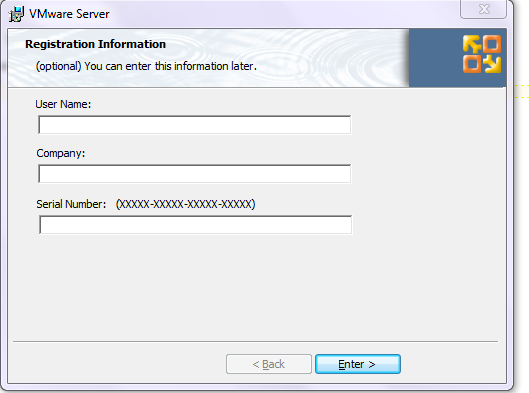

Enter your license information, which is visible at the VMware website on the same page where you downloaded the software.

- Reboot your computer if you are asked by the VMware installer.

Setup Virtual Networks

-

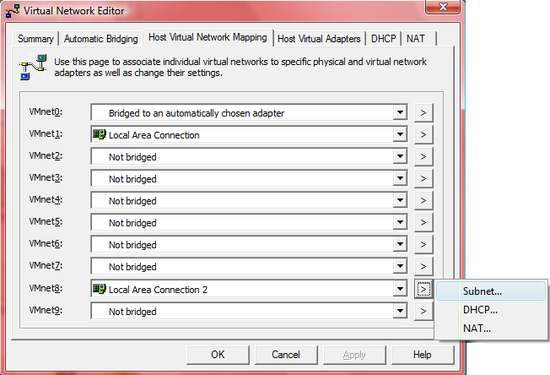

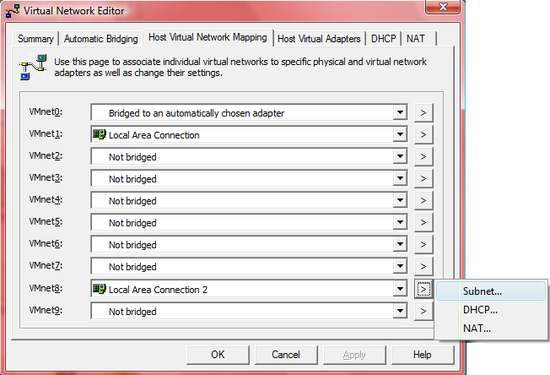

Choose Manage Virtual Networks from the Start menu. After the program starts, make sure that you see an "Apply" button at the bottom. If you do not see an "Apply" button, then close the program and restart it by right-clicking and choosing "Run as administrator" (this is normally required on Windows 7).

-

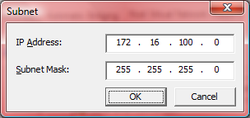

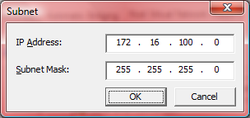

Click the Host Virtual Network Mapping Tab and then click the Right Arrow Button next to VMnet1. Choose Subnet from the submenu.

-

Set the IP address to 172.16.100.0 and click OK.

-

Click the Right Arrow Button next to VMnet8 and choose Subnet from the submenu.

-

Set the IP address to 192.168.78.0 and click OK.

- Click the APPLY button.

-

Return to the Summary tab and VALIDATE:

VMnet1 has subnet 172.16.100.0

VMnet8 has subnet 192.168.78.0

-

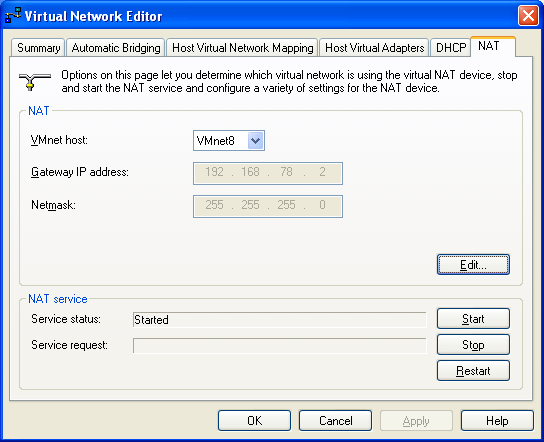

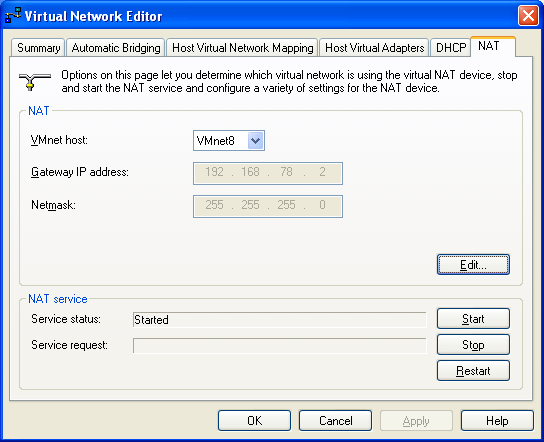

Go to the NAT tab and VALIDATE that the VMnet host is VMnet8 and the Gateway IP is 192.168.78.2.

Setup Virtual Storage

If you are at an event, then the event organizers might provide a special DEMO option - where you can run a pre-configured RAC cluster on your own laptop. In order to use this DEMO option, follow this lab but use the directories on the event-provided external hard drive.

- RAC Attack is carefully designed to use three directories and spread out I/O for the best possible responsiveness during labs. Create these three directories in the destinations that you chose in Hardware and Windows Minimum Requirements, taking the guidelines into consideration.
mkdir C:\RAC11g
mkdir D:\RAC11g-shared
mkdir D:\RAC11g-iso
In the RAC11g directory, make sure that the collabn1 and collabn2 subdirectories don't exist.
rmdir C:\RAC11g\collabn1
rmdir C:\RAC11g\collabn2

-

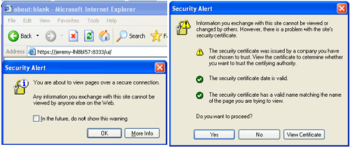

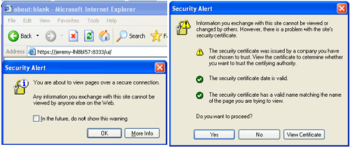

The VMware Server management interface is web-based, and some new web browsers are not compatible with it. There are two ways to open this management interface:

- Open a non-default web browser and go to the address https://localhost:8333/

  - If you are at an event, then the event organizers might have provided Firefox 2.0.0.20, which has been tested with RAC Attack. You can run this browser directly from the Jumpstart Drive without installing it on your PC. This version of Firefox can also be downloaded from the internet.

- Launch VMware Server Home Page from the Start menu. This will use your default web browser.

-

Depending on what web browser you use, you might receive security-related warnings. Proceed through all of these warnings and choose to view the web page.

The warning in Mozilla Firefox

The same alert in Internet Explorer 6

-

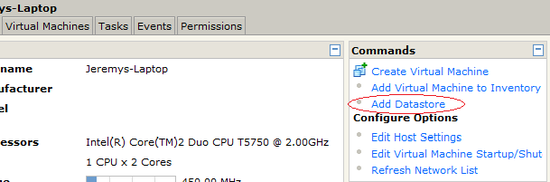

Log in to the VMware console with the local Windows admin account username and password.

-

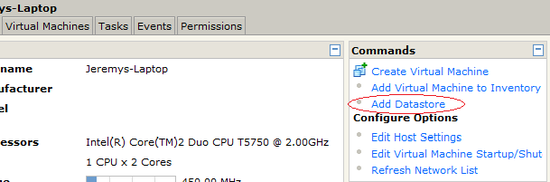

On the main screen (Summary tab), find the Commands box and choose Add Datastore.

-

Repeat this step for each of the three datastores. Set the datastore names to RAC11g, RAC11g-shared and RAC11g-iso. Choose Local Datastore and use the directory path which you previously chose and created.

-

VERIFY that the three new datastores exist in the Summary screen – named RAC11g and RAC11g-iso and RAC11g-shared. Also VERIFY that the two networks vmnet1 and vmnet8 are available as HostOnly and NAT respectively.

Download Oracle Enterprise Linux

If you are at an event, then the event organizers have already downloaded the software and copied it to the Jumpstart Drive.

- Skip this lab.

If you are at home, then follow these instructions to download Oracle Enterprise Linux.

- Continue below.

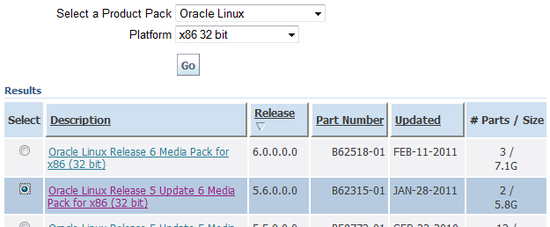

-

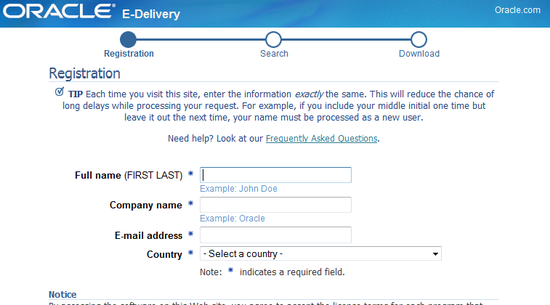

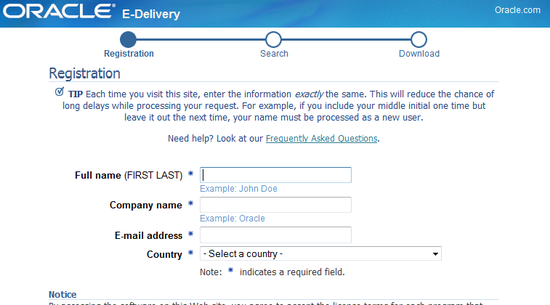

Visit http://edelivery.oracle.com/linux and choose Continue.

-

Enter your Name, Company, Email and Country and review/accept the license and export restrictions before clicking Continue. If you have visited Oracle EDelivery before then make sure to enter your information exactly the same.

If this is the first time you've downloaded software from Oracle, then you might have to wait a few days until you receive an email from Oracle granting you permission to continue.

-

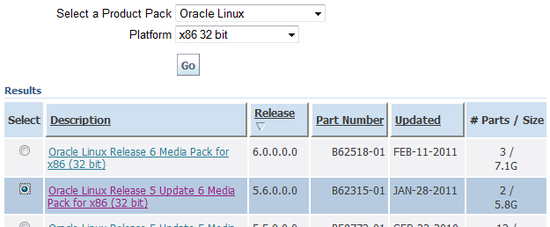

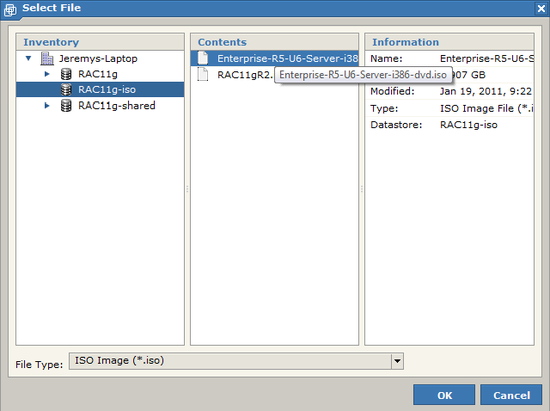

Search for Oracle Linux on the x86-32-bit platform. Choose Oracle Linux Release 5 Update 6.

-

Download the file for x86 (32 bit) – not the source.

- This zip file will contain a single file named Enterprise-R5-U6-Server-i386-dvd.iso – extract this file into the RAC11g-iso folder and then delete the original zip file.

Linux Install

Create VM

-

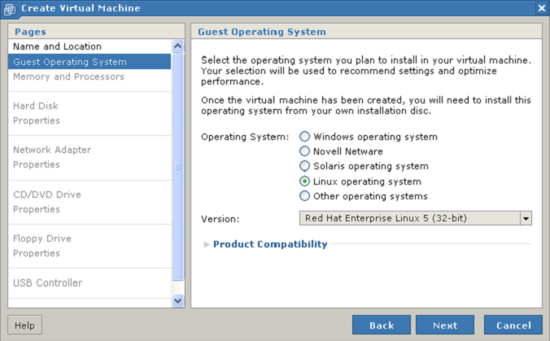

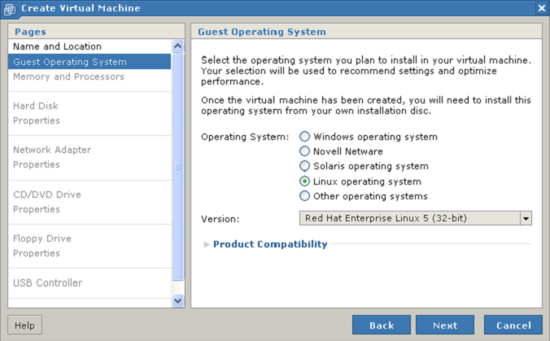

From the SUMMARY screen, choose Create Virtual Machine. Name the new machine collabn1 and select the RAC11g datastore.

-

Select Linux Operating System and choose Red Hat Enterprise Linux 5 (32-bit).

-

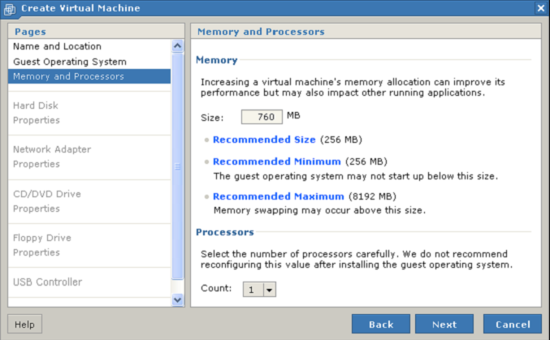

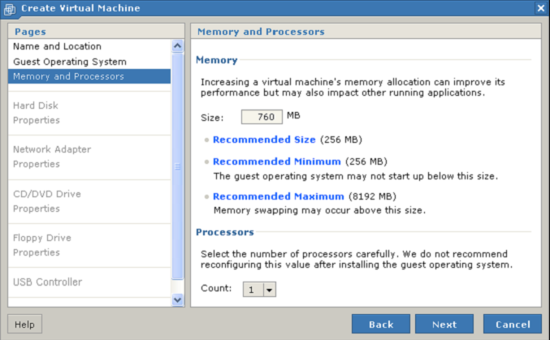

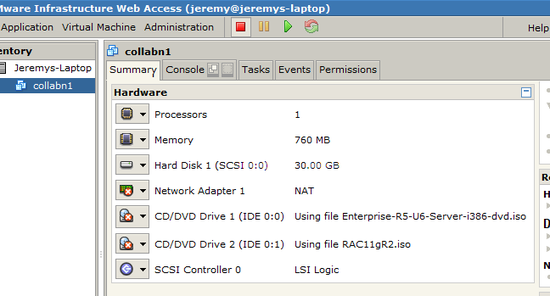

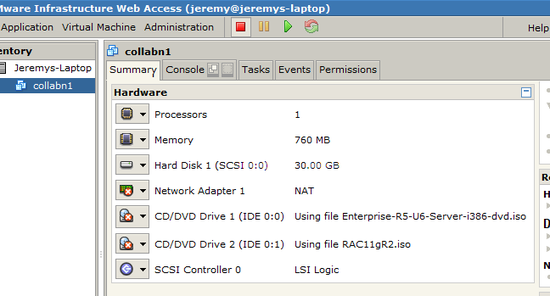

Allocate 760MB of memory for the virtual machine and choose 1 processor.

-

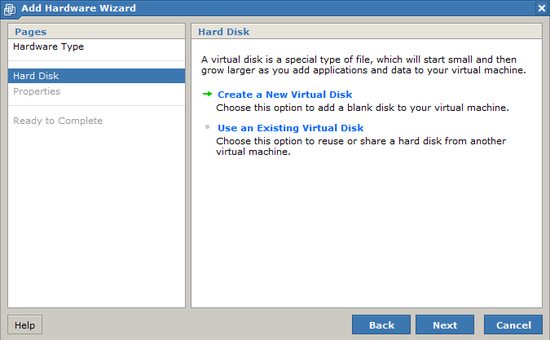

Choose to Create a New Virtual Disk.

-

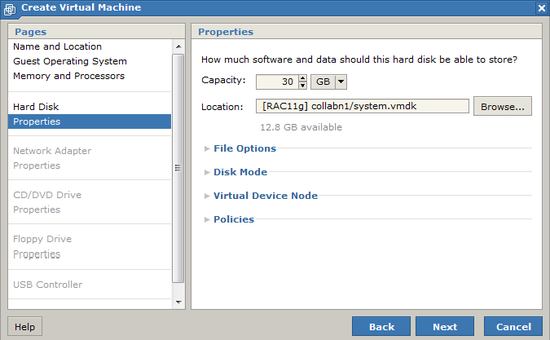

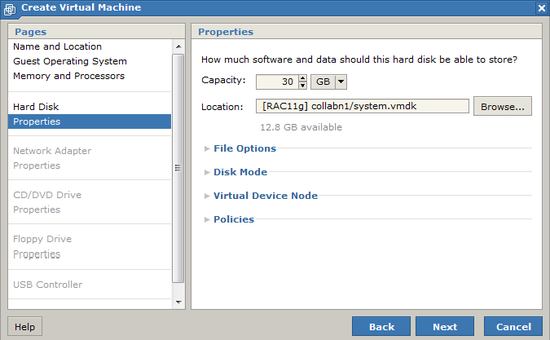

Set the disk size to 30G and name the file [RAC11g] collabn1/system.vmdk – leave all other options at their defaults and click Next.

-

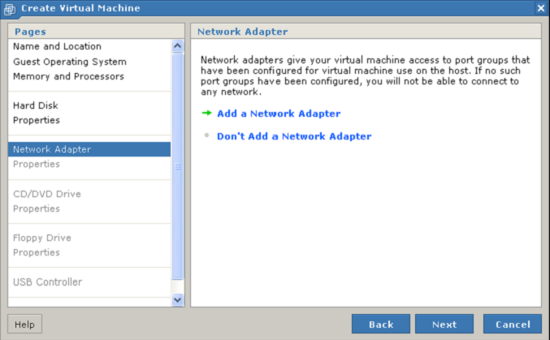

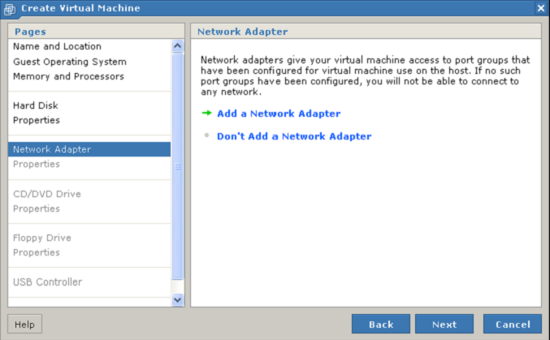

Choose to Add a Network Adapter.

-

Choose to create a NAT network connection.

-

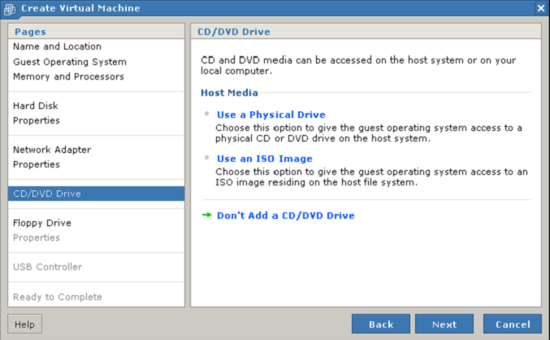

Choose Don't Add a CD/DVD Drive.

-

Choose Don't Add a Floppy Drive.

-

Choose Don't Add a USB Controller.

-

Review the configuration and click Finish. Do not power on the virtual machine yet.

Prep for OS Installation

VIRTUAL DVD CONFIGURATION:

| Virtual Device Node (Adapter/Device) | Path (Datastore/Directory/Filename) |
|---|---|
| IDE 0:0 | [RAC11g-iso] Enterprise-R5-U6-Server-i386-dvd.iso |
| IDE 0:1*** | [RAC11g-iso] RAC11gR2.iso*** |

***INSTRUCTOR-LED CLASS ONLY

-

In the Inventory tab at the left, select collabn1 (the virtual machine we just created).

-

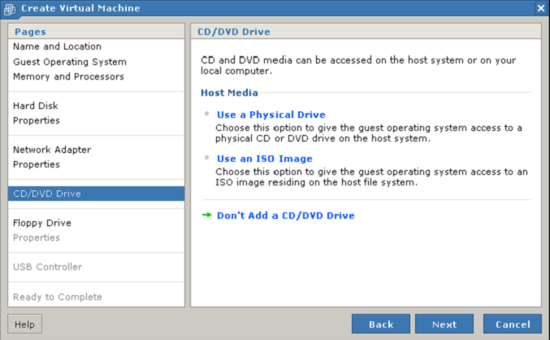

From the Commands box, click Add Hardware. In the window that appears, click CD/DVD Drive.

-

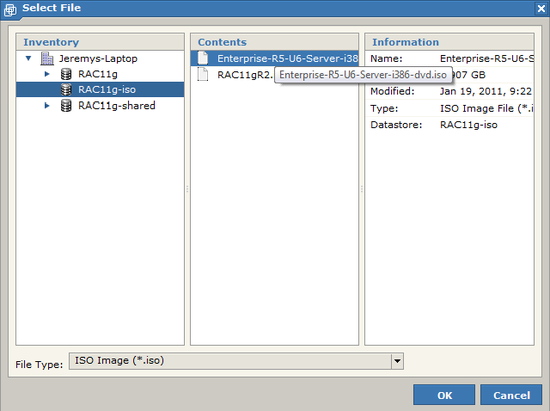

Choose to Use an ISO Image.

-

Click Browse and locate the file [RAC11g-iso] Enterprise-R5-U6-Server-i386-dvd.iso.

-

Open the section called Virtual Device Node and choose IDE 0:0. Then click Next.

Carefully follow this step because it's easy to miss.


-

Click Finish to add the device. Don't power on the virtual machine yet.

-

If you are in a class, then the instructor may have provided a second virtual DVD named RAC11gR2.iso to save some class time. It contains all additional software downloads.

- Repeat all previous steps from this lab to add the second DVD using RAC11gR2.iso image and choosing IDE 0:1.

- Continue below.

-

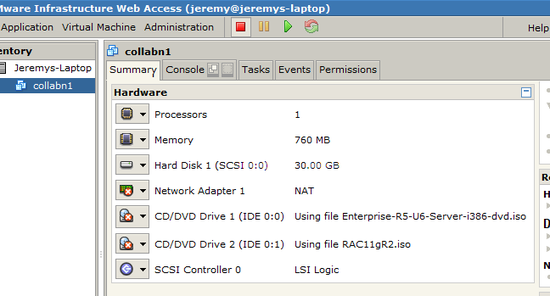

Scroll down to the Hardware box and confirm the Virtual Machine settings. They should match this picture (except that you should only see the second DVD if you are in a class and it was provided by the instructor):

OS Installation

-

Click the Console tab. You might see a message saying that the Remote Console Plug-in is not installed. If you see this message, then click Install plug-in and follow the directions before continuing. (Note: you may be asked to restart your computer during this process.)

-

When the plug-in is installed, you should see a large “play” button in the center of the console. Click on the play button to start the VM.

-

When you see the square boxes, click anywhere to open a console window.

-

A new window will now open - outside of your web browser. If you opened this window soon after starting the Virtual Machine, then you will see the boot screen of the Oracle Enterprise Linux installer.

At first, this new console window will ignore your keyboard and mouse. Click inside the new console window and it will begin accepting your keyboard and mouse.

Anytime your keyboard and mouse are stuck in the VMware Virtual Machine, you can press CTRL and ALT together to move them outside the VM.

If you still see the boot screen, then you may press Enter to continue, or just wait for it to continue automatically.

-

Choose to SKIP the media test.

-

Choose NEXT when the first installer screen comes up.

-

Accept the default English language and choose Next.

-

Choose US English keyboard layout and click Next.

-

Select YES to initialize the drive.

-

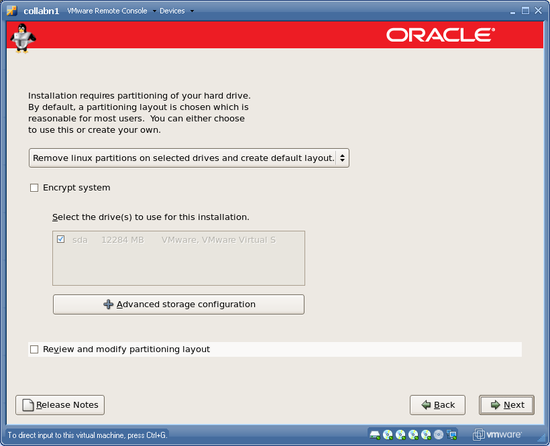

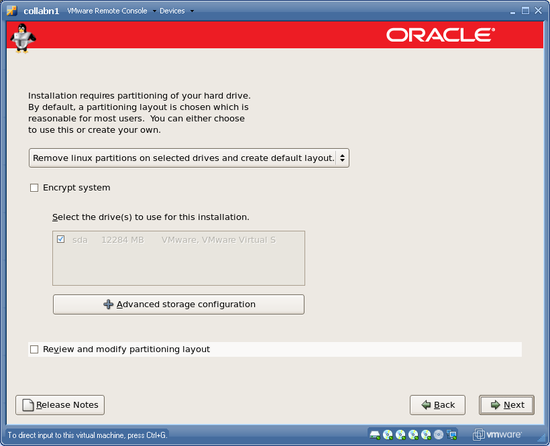

Accept the default layout (with no encryption) and choose NEXT.

-

Choose YES to remove all partitions.

-

Set the hostname to collabn1.vm.ardentperf.com and leave DHCP enabled before choosing NEXT.

-

Choose the timezone where you are located! Let the system clock run on UTC though.

-

Set the root password to racattack

-

Choose Customize Now – but don't choose any "additional tasks". Then click NEXT.

-

Select only these package groups, then click NEXT to continue:

| Category | Selections |
|---|---|
| Desktop Environments | Gnome Desktop Environment |
| Applications | Editors, Graphical Internet, Text-based Internet |
| Development | Development Libraries, Development Tools |
| Servers | Server Configuration Tools |
| Base System | Administration Tools, Base, System Tools, X Window System |

Do not choose Cluster Storage or Clustering.

-

Choose NEXT to start the installation.

-

Choose REBOOT when the installation is complete.

-

After the machine reboots, when you see the Welcome screen, choose FORWARD.

-

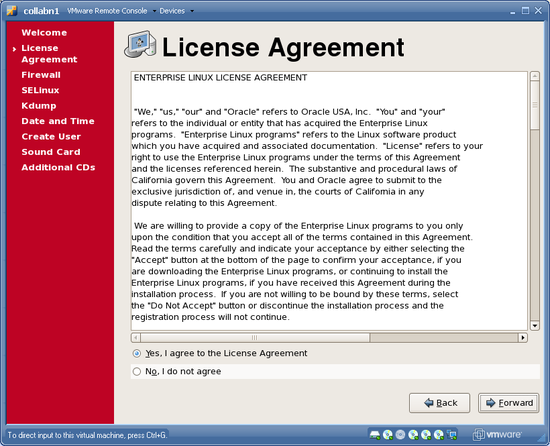

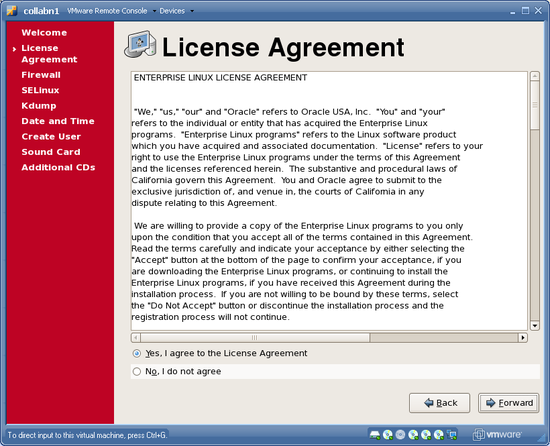

ACCEPT the license and choose FORWARD.

-

DISABLE the firewall and choose FORWARD. Confirm by clicking YES.

-

DISABLE SELinux before choosing FORWARD. Confirm with YES.

-

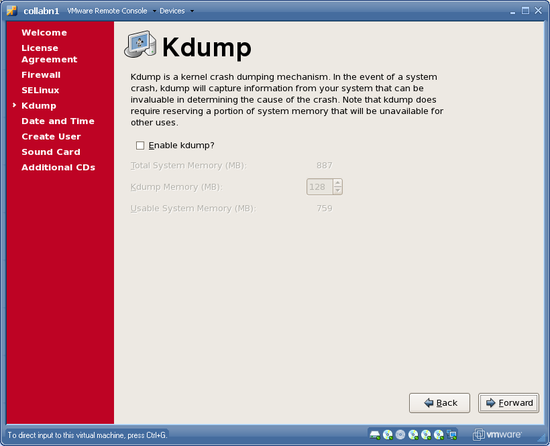

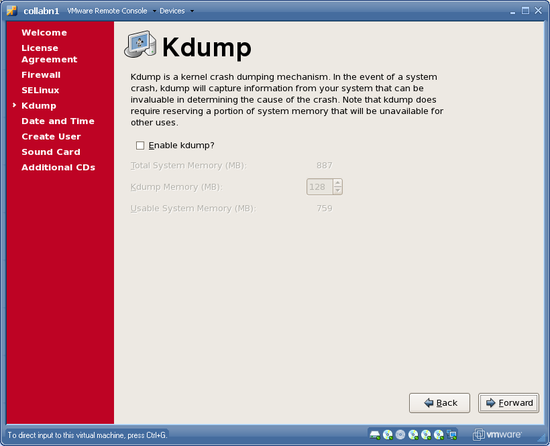

Leave Kdump disabled and choose FORWARD.

-

Leave the clock alone (with the wrong time) and click FORWARD.

-

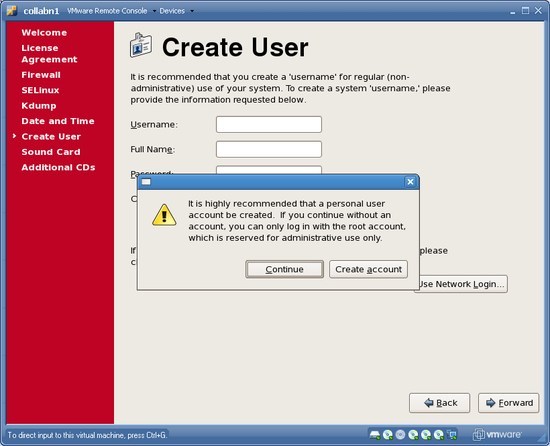

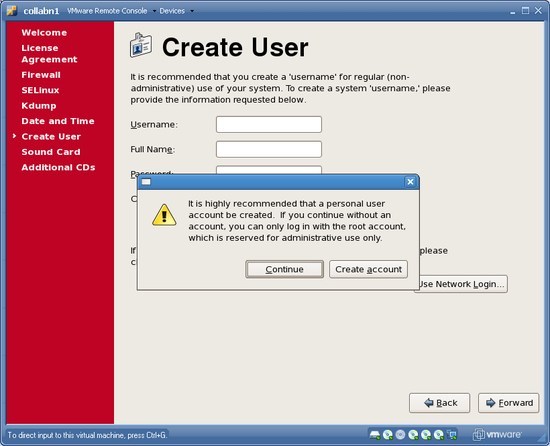

Don't create a user; click FORWARD. Choose CONTINUE to confirm.

-

Choose FORWARD to skip sound card config.

-

Choose FINISH to close the installer. Click OK to reboot.

-

After reboot you will see a login screen.

Wrap-up OS Installation

Tip: If you are familiar with the Unix command line, then we recommend connecting through SSH in addition to using the VMware console. You can then copy and paste many commands from this handbook! Until we configure networking, VMware will assign the address 192.168.78.128.

-

Login as the user root with password racattack.

-

GNOME is the graphical window environment installed by default in OEL. First, disable GNOME CD automount. Go to the menu System >> Preferences >> Removable Drives and Media.

-

Uncheck all of the options under Removable Storage and click Close.

-

Open a terminal window from the menu Applications >> Accessories >> Terminal.

-

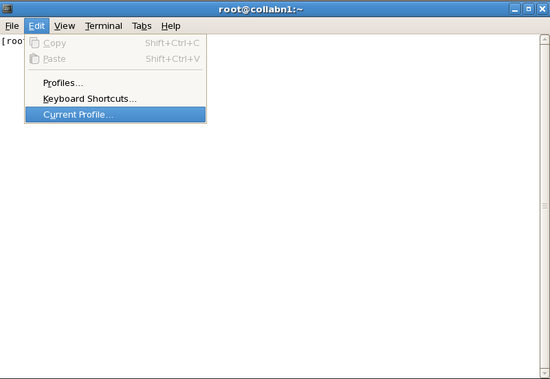

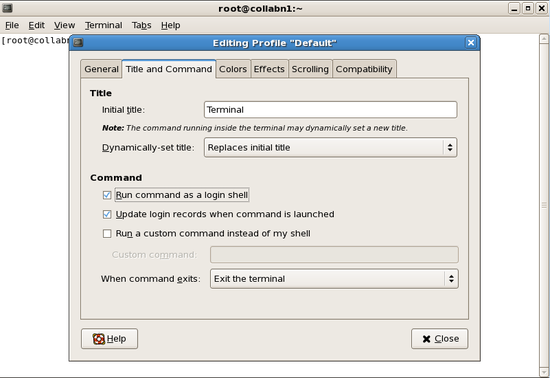

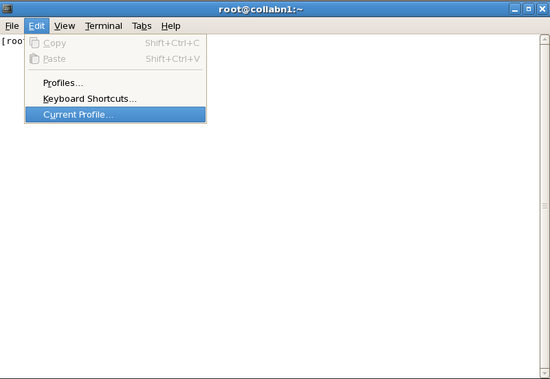

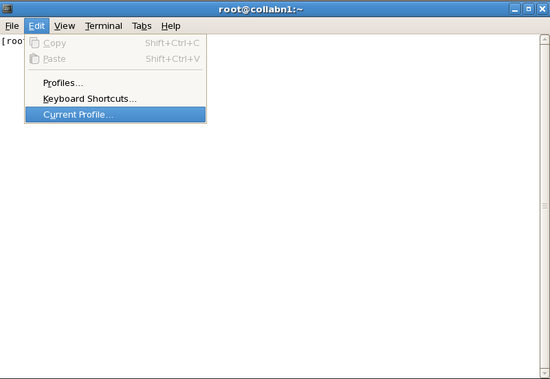

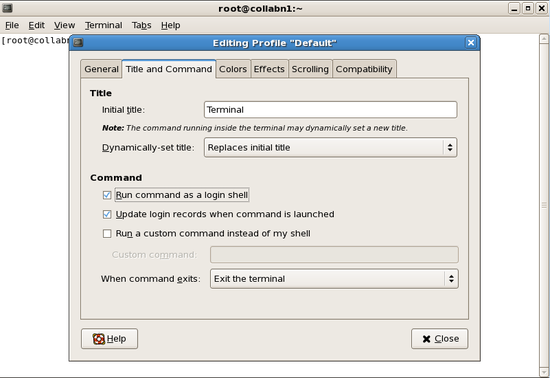

From the menus, open Edit >> Current Profile.

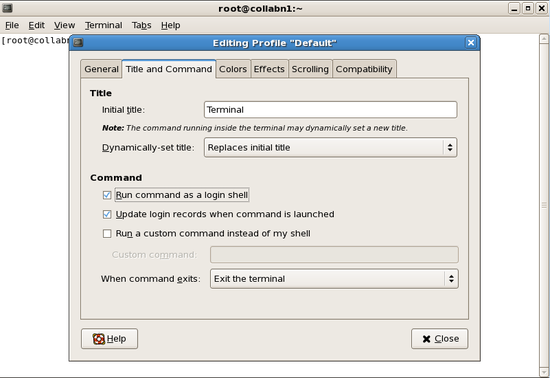

-

In the Title and Command tab, check the box for Run command as a login shell, then close the dialog.

-

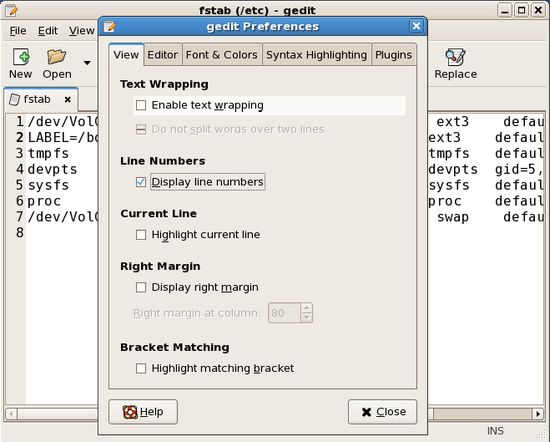

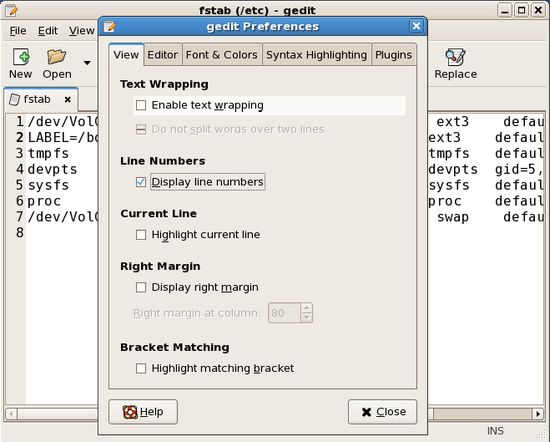

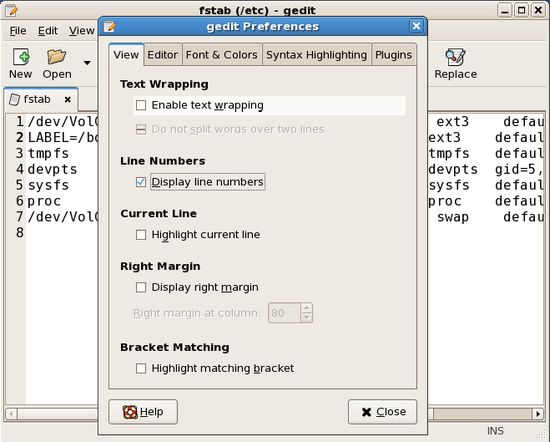

The editor "gedit" is a simple graphical editor – similar to notepad – and it can be used to edit files on Linux. If you are going to use gedit, then it is helpful if you open Edit > Preferences to disable text wrapping and enable line numbers.

-

In a terminal window as the root user, shut down and disable anacron, then run it manually with no delay.

[root@collabn1 ~]# service anacron stop

Stopping anacron: [ OK ]

[root@collabn1 ~]# chkconfig anacron off

[root@collabn1 ~]# chkconfig --list anacron

anacron 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@collabn1 ~]# anacron -n

It should not cause any problems for you, but be aware that several CPU and I/O intensive jobs will run in the background for about 10 minutes while you continue with this lab (e.g. updatedb and makewhatis). You might notice some slight system performance degradation. You can always use the program top to see what is currently running.


- In a terminal window as the root user, shut down and disable the automounter.
[root@collabn1 ~]# service autofs stop
Stopping automount: [ OK ]
[root@collabn1 ~]# umount /media/*
[root@collabn1 ~]# chkconfig autofs off
[root@collabn1 ~]# chkconfig --list autofs
autofs 0:off 1:off 2:off 3:off 4:off 5:off 6:off

- In a terminal window as the root user, shut down and disable the Apple Zeroconf service (avahi).
[root@collabn1 ~]# service avahi-daemon stop
Shutting down Avahi daemon: [ OK ]
[root@collabn1 ~]# chkconfig avahi-daemon off
[root@collabn1 ~]# chkconfig --list avahi-daemon
avahi-daemon 0:off 1:off 2:off 3:off 4:off 5:off 6:off
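The anacron, autofs and avahi steps all follow the same stop-then-disable pattern, so they can also be scripted. The sketch below is not part of the official lab: disable_services and run are hypothetical helper names, and setting DRY_RUN=1 makes it print the service and chkconfig commands so you can review them before running them as root.

```shell
#!/bin/sh
# Hypothetical helper: stop and disable each service named on the command
# line, using the same service/chkconfig commands as the manual steps.
# With DRY_RUN=1 the commands are printed instead of executed.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

disable_services() {
  for svc in "$@"; do
    run service "$svc" stop      # exit status is not checked (e.g. already stopped)
    run chkconfig "$svc" off
  done
}

# As root, for the three services in this lab:
#   DRY_RUN=1 disable_services anacron autofs avahi-daemon   # review first
#   disable_services anacron autofs avahi-daemon
#   umount /media/*        # part of the autofs step
#   anacron -n             # run the deferred anacron jobs now
#   chkconfig --list | grep -E '^(anacron|autofs|avahi-daemon)'
```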

-

If any of the small CD images in the status bar do not have a green dot, then click on the CD image and choose "Connect to [RAC11g] iso/... on Server". If a window opens showing the CD contents then make sure to close the window.

-

Create two CDROM directories named cdrom and cdrom5.

Make sure to use these names because many later steps in this handbook will reference them!
[root@collabn1 ~]# cd /mnt
[root@collabn1 mnt]# mkdir cdrom
[root@collabn1 mnt]# mkdir cdrom5

- Add entries to /etc/fstab for all CDs and then mount them. If you are in a class, then you will probably have two CDs. If you are not in a class, then you will probably have only one.
[root@collabn1 ~]# ls /dev/cdrom-*
/dev/cdrom-hda /dev/cdrom-hdb
[root@collabn1 ~]# gedit /etc/fstab
/dev/cdrom-hda /mnt/cdrom iso9660 defaults 0 0
/dev/cdrom-hdb /mnt/cdrom5 iso9660 defaults 0 0
[root@collabn1 mnt]# mount cdrom
[root@collabn1 mnt]# mount cdrom5
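Editing /etc/fstab in gedit works fine; if you prefer to script the edit, the sketch below appends an iso9660 entry only when the mount point is not already listed, so re-running it does not create duplicate lines. add_fstab_entry is a hypothetical helper, and the device names you pass in must match what ls /dev/cdrom-* shows on your VM.

```shell
#!/bin/sh
# Sketch: append a CD-ROM line to an fstab-style file unless some line
# already uses the same mount point (field 2), so repeated runs are safe.

# add_fstab_entry FILE DEVICE MOUNTPOINT
add_fstab_entry() {
  file=$1 dev=$2 mnt=$3
  if awk -v m="$mnt" '$2 == m { found = 1 } END { exit !found }' "$file"; then
    echo "exists: $mnt"
  else
    printf '%s %s iso9660 defaults 0 0\n' "$dev" "$mnt" >> "$file"
    echo "added: $mnt"
  fi
}

# As root, with the device names shown by "ls /dev/cdrom-*":
#   add_fstab_entry /etc/fstab /dev/cdrom-hda /mnt/cdrom
#   add_fstab_entry /etc/fstab /dev/cdrom-hdb /mnt/cdrom5
#   mount /mnt/cdrom; mount /mnt/cdrom5
```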

- Install the additional required RPMs.
cd /mnt
# From Enterprise Linux 5 CDROM 2
rpm -Uvh */*/compat-libstdc++-33*
rpm -Uvh */*/libaio-devel-0.*
rpm -Uvh */*/unixODBC-2.*
rpm -Uvh */*/unixODBC-devel-2.*
# From Enterprise Linux 5 CDROM 3
rpm -Uvh */*/sysstat-7.*
# Additional required packages which are already installed:
# From Enterprise Linux 5 CDROM 1
rpm -Uvh */*/binutils-2.*
rpm -Uvh */*/elfutils-libelf-0.*
rpm -Uvh */*/glibc-2.*i686*
rpm -Uvh */*/glibc-common-2.*
rpm -Uvh */*/libaio-0.*
rpm -Uvh */*/libgcc-4.*
rpm -Uvh */*/libstdc++-4.*
rpm -Uvh */*/make-3.*
# From Enterprise Linux 5 CDROM 2
rpm -Uvh */*/elfutils-libelf-devel-*
rpm -Uvh */*/glibc-headers*
rpm -Uvh */*/glibc-devel-2.*
rpm -Uvh */*/libgomp*
rpm -Uvh */*/gcc-4.*
rpm -Uvh */*/gcc-c++-4.*
rpm -Uvh */*/libstdc++-devel-4.*
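The rpm -Uvh commands above mix real errors with "already installed" messages, so a failed install is easy to miss. One way to double-check is to ask rpm -q about each package by name, since rpm -q exits non-zero when a package is not installed. The package names below are derived from the file globs in the step above (version suffixes dropped), and missing_pkgs is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: report required packages that rpm does not list as installed.
# Names come from the rpm -Uvh globs in the step above.

REQUIRED_PKGS="binutils compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel
gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers libaio libaio-devel
libgcc libgomp libstdc++ libstdc++-devel make sysstat unixODBC unixODBC-devel"

# missing_pkgs: print one missing package per line; return 1 if any are missing.
missing_pkgs() {
  rc=0
  for p in $REQUIRED_PKGS; do          # unquoted on purpose: split on whitespace
    if ! rpm -q "$p" >/dev/null 2>&1; then
      echo "$p"
      rc=1
    fi
  done
  return $rc
}

# Usage inside the VM:
#   missing_pkgs && echo "all required packages are installed"
```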

- Eject the cdrom.
[root@collabn1 mnt]# eject /mnt/cdrom

-

Return to the Summary tab in the VMware console. From the Status box, choose to Install VMware Tools. Click the Install button to begin.

-

Install the VMware client tools and run the configuration tool.

You must perform this step in the VMware Console; do not use PuTTY or any other terminal program.
[root@collabn1 mnt]# mount /mnt/cdrom
mount: block device /dev/cdrom-hda is write-protected, mounting read-only
[root@collabn1 mnt]# rpm -ivh /mnt/cdrom/VMwareTools-7.7.5-156745.i386.rpm
Preparing... ########################################### [100%]
1:VMwareTools ########################################### [100%]
The installation of VMware Tools 7.7.5 for Linux completed successfully.
You can decide to remove this software from your system at any time by invoking the following command: "rpm -e VMwareTools".
Before running VMware Tools for the first time, you need to configure it for your running kernel by invoking the following command: "/usr/bin/vmware-config-tools.pl".
Enjoy,
--the VMware team
[root@collabn1 cdrom]# vmware-config-tools.pl...

Choose NO to skip the VMware FileSystem Sync Driver (vmsync)

Choose display size [12] – 1024x768

Mounting HGFS shares will probably FAIL, but this is ok.

-

Run the network commands. (You can cut and paste the commands into the terminal.) Next, run vmware-toolbox and enable clock synchronization.

-

Log out of your session.

- Unmount the VMware Tools image:
umount /mnt/cdrom
Using the VMware Infrastructure web interface, mount the Oracle Enterprise Linux image from the [RAC11g-iso] datastore. Then mount it inside the VM:
mount /mnt/cdrom

Create RAC Attack DVD

If you are in a class or at an event, then the instructor may have provided a second virtual DVD named RAC11gR2.iso to save some class time. It contains all additional software downloads.

- Skip this lab and continue to the next one.

If you are at home, then follow these instructions to download all needed software and build the second DVD yourself.

- Continue below.

- While logged in as root, download the latest release of the RAC Attack supporting code (GPL).
# cd ~
# curl -kL github.com/ardentperf/racattack/tarball/master | tar xz

- You can view the master list of downloads here: https://github.com/ardentperf/racattack/blob/master/makeDVD/auto.sh If you have already downloaded any of these files, you may optionally copy them to the /tmp directory in your virtual machine. When you create the DVD, any remaining files will be automatically downloaded.

-

Create the DVD by running the automatic build script. You will be prompted for your Oracle SSO login and password.

If your account is not authorized for Oracle Support, then patch downloads will fail.
[root@collabn1 ~]# sh ardentperf-racattack-*/makeDVD/auto.sh /mnt/cdrom5
`oracle-profile' -> `/mnt/cdrom5/oracle-profile'
root/
root/fix_cssd/
root/fix_cssd/fix_cssd.sh
Oracle SSO Username: <your-username>
Oracle SSO Password: <your-password>
LOGGING IN TO ORACLE SSO
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
414 733 414 2977 0 0 1621 0 --:--:-- 0:00:01 --:--:-- 22383
DOWNLOADING: /tmp/oracleasmlib-2.0.4-1.el5.i386.rpm
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 13929 100 13929 0 0 23118 0 --:--:-- --:--:-- --:--:-- 2720k
`/tmp/oracleasmlib-2.0.4-1.el5.i386.rpm' -> `./oracleasmlib-2.0.4-1.el5.i386.rpm'
DOWNLOADING: /tmp/linux_11gR2_database_1of2.zip
( ... )
FINISHED BUILDING RAC ATTACK DVD

Prep for Oracle

- Edit /etc/sysctl.conf, add the following lines, and then load the new settings with sysctl -p.
[root@collabn1 ~]# gedit /etc/sysctl.conf
kernel.shmmni = 4096
# semaphores: semmsl, semmns, semopm, semmni
kernel.sem = 250 32000 100 128
net.ipv4.ip_local_port_range = 1024 65000
net.core.rmem_default=4194304
net.core.rmem_max=4194304
net.core.wmem_default=262144
net.core.wmem_max=262144
[root@collabn1 ~]# sysctl -p

- Edit /etc/security/limits.conf and add the following lines:
oracle soft nproc 2047
oracle hard nproc 16384
oracle soft nofile 1024
oracle hard nofile 65536

- Edit /etc/pam.d/login and insert the following lines BEFORE the "selinux open" line.
session required /lib/security/pam_limits.so
session required pam_limits.so

- Create the groups and the oracle user. Set the oracle user's password to racattack.
[root@collabn1 ~]# groupadd oinstall
[root@collabn1 ~]# groupadd dba
[root@collabn1 ~]# groupadd oper
[root@collabn1 ~]# groupadd asmdba
[root@collabn1 ~]# groupadd asmoper
[root@collabn1 ~]# groupadd asmadmin
[root@collabn1 ~]# useradd -u 500 -g oinstall -G dba,oper,asmdba,asmoper,asmadmin oracle
[root@collabn1 ~]# passwd oracle
Changing password for user oracle.
New UNIX password: racattack
BAD PASSWORD: it is based on a dictionary word
Retype new UNIX password: racattack
passwd: all authentication tokens updated successfully.

- Create directories.
[root@collabn1 ~]# mkdir -p /u01/grid/oracle/product/11.2.0/grid_1
[root@collabn1 ~]# mkdir -p /u01/app/oracle/product/11.2.0/db_1
[root@collabn1 ~]# chown -R oracle:oinstall /u01

- Log out of your session and log in as the oracle user.

- Disable GNOME CD automount for the oracle user. Go to the menu System >> Preferences >> Removable Drives and Media.

- Uncheck all of the options under Removable Storage and click Close.

- Open a terminal window.

- From the menus, open Edit >> Current Profile.

- In the Title and Command tab, check the box for Run command as a login shell, then close the dialog.

- If you are using gedit, open Edit >> Preferences to disable text wrapping and enable line numbers.

- Set up SSH equivalence for the oracle user.
[oracle@collabn1 ~]$ ssh localhost
The authenticity of host 'localhost (127.0.0.1)' can't be established.
RSA key fingerprint is 3f:65:e6:77:af:11:84:56:a4:b9:0f:8b:41:b4:2f:8a.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'localhost' (RSA) to the list of known hosts.
oracle@localhost's password: ^C
[oracle@collabn1 ~]$ ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/oracle/.ssh/id_rsa): ^M [default]
Enter passphrase (empty for no passphrase): ^M [no password]
Enter same passphrase again: ^M [no password]
Your identification has been saved in /home/oracle/.ssh/id_rsa.
Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.
The key fingerprint is:
0a:33:74:d4:7a:c0:c6:d0:56:33:10:4e:71:63:4c:e8 oracle@collabn1.vm.ardentperf.com
[oracle@collabn1 ~]$ cat /home/oracle/.ssh/id_rsa.pub >> /home/oracle/.ssh/authorized_keys

- Set up the oracle and root users' profiles.

[oracle@collabn1 ~]$ su - root

[root@collabn1 ~]# gedit /etc/oratab

grid:/u01/grid/oracle/product/11.2.0/grid_1:N

[root@collabn1 ~]# chown oracle:dba /etc/oratab

[root@collabn1 ~]# gedit /root/.bashrc

ORAENV_ASK=NO

ORACLE_SID=grid

. oraenv >/dev/null

unset ORAENV_ASK

[root@collabn1 ~]# su - oracle

[oracle@collabn1 ~]$ cd /mnt

[oracle@collabn1 mnt]$ cat */oracle-profile >>/home/oracle/.bash_profile

The source code for this file can be found at https://github.com/ardentperf/racattack/blob/master/makeDVD/oracle-profile

- Close and re-open your terminal sessions so that the new profiles take effect.

- Install the fix_cssd script.

In VMware test environments you usually have very little memory. The Oracle CSS processes can take up a *LOT* of it (over 50% in this lab) because they lock several hundred MB in physical memory. In VMware (for both ASM and RAC environments) this may be undesirable. This low-level hack makes that memory swappable at runtime.

NEVER, EVER, EVER EVEN IN YOUR WILDEST DREAMS THINK ABOUT TRYING THIS ON ANYTHING CLOSE TO A PRODUCTION SYSTEM.

The source code for this file can be found at https://github.com/ardentperf/racattack/blob/master/makeDVD/root/fix_cssd/fix_cssd.sh

[oracle@collabn1 ~]$ su - root
[root@collabn1 ~]# cd /
[root@collabn1 /]# tar xvf mnt/*/fix_cssd.tar
root/fix_cssd/fix_cssd.sh
[root@collabn1 /]# chmod 774 /root/fix_cssd/fix_cssd.sh
[root@collabn1 /]# gedit /etc/rc.d/rc.local
cd /root/fix_cssd
nohup nice -n -20 ./fix_cssd.sh 2>&1 &
[root@collabn1 /]# /etc/rc.d/rc.local
nohup: appending output to `nohup.out'
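The kernel and limits settings from the Prep for Oracle steps are easy to mistype. The following is a minimal read-only sanity check for them, assuming a POSIX shell. The function names sysctl_value, check_sysctl_file and check_limits_file are hypothetical and are not part of the RAC Attack scripts. The expected values are the ones this lab writes by hand. The CVU fixup script later raises some of them (for example net.core.wmem_max), so run this check before the fixup.

```sh
#!/bin/sh
# Hypothetical read-only checks (not part of the RAC Attack scripts) for the
# /etc/sysctl.conf and /etc/security/limits.conf edits in this lab.

# Print the value of the last "KEY = VALUE" line for key $2 in file $1, with
# tabs and repeated spaces squeezed to single spaces.
sysctl_value() {
    key_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots for sed
    sed -n "s/^[[:space:]]*$key_re[[:space:]]*=[[:space:]]*//p" "$1" |
        tail -n 1 | tr '\t' ' ' | tr -s ' ' | sed 's/ *$//'
}

# Return 0 if file $1 sets every kernel parameter to the value used in this
# lab; otherwise print one line per missing or different setting.
check_sysctl_file() {
    rc=0
    while IFS='=' read -r key want; do
        have=$(sysctl_value "$1" "$key")
        if [ "$have" != "$want" ]; then
            echo "sysctl: $key is '$have', expected '$want'"
            rc=1
        fi
    done <<'EOF'
kernel.shmmni=4096
kernel.sem=250 32000 100 128
net.ipv4.ip_local_port_range=1024 65000
net.core.rmem_default=4194304
net.core.rmem_max=4194304
net.core.wmem_default=262144
net.core.wmem_max=262144
EOF
    return "$rc"
}

# Return 0 if file $1 contains every oracle entry for limits.conf from this
# lab (compared after squeezing whitespace); otherwise print missing entries.
check_limits_file() {
    rc=0
    for entry in "oracle soft nproc 2047" "oracle hard nproc 16384" \
                 "oracle soft nofile 1024" "oracle hard nofile 65536"; do
        if ! tr '\t' ' ' < "$1" | tr -s ' ' | sed 's/^ //; s/ $//' |
                grep -xF "$entry" >/dev/null; then
            echo "limits: missing '$entry'"
            rc=1
        fi
    done
    return "$rc"
}
```

For example, `check_sysctl_file /etc/sysctl.conf && check_limits_file /etc/security/limits.conf` prints nothing and returns 0 when both files match the lab.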

Create Cluster

Create Interconnect

- If the machine is running, log out and shut it down. The machine must be powered off before you add hardware.

-

In the Inventory tab at the left, select collabn1 (the virtual machine we just created).

-

Scroll down to the Hardware box and CONFIRM that there is one Network Adapter of type NAT.

-

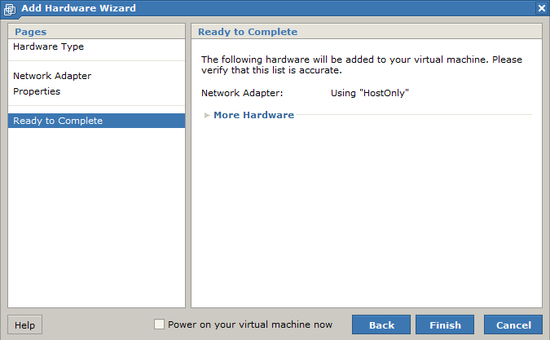

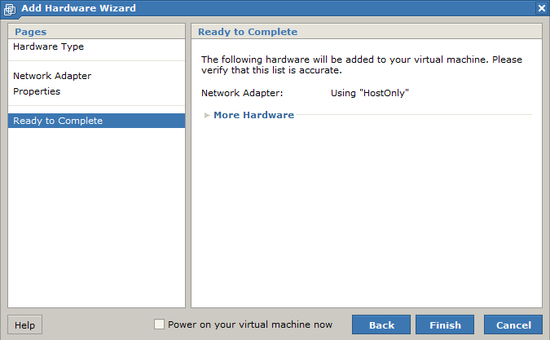

From the Commands box, click Add Hardware. In the window that appears, click Network Adapter.

-

Choose to create a HostOnly network connection. This will be used for the interconnect. Then click Next.

-

Click FINISH to create the network adapter.

Create Shared Disks

REPEAT STEPS 1-5 FOR BOTH OF THESE DEVICES:

| Virtual Device Node (Adapter/Device) | Size | Path (Datastore/Directory/Filename) |
|---|---|---|
| SCSI 1:0 | 3.25 GB | [RAC11g-shared] data.vmdk |
| SCSI 1:1 | 3.25 GB | [RAC11g-shared] backup.vmdk |
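The later Configure Disks lab expects both disks to show 3407872 blocks in /proc/partitions. That number follows from the Size column above: /proc/partitions counts 1 KiB blocks, and the lab's numbers imply that VMware's GB here means 1024^3 bytes, so 3.25 × 1024 × 1024 = 3407872. The sketch below shows that arithmetic and the corresponding check. It assumes a POSIX shell and awk; partition_blocks and check_shared_disks are hypothetical helper names.

```sh
#!/bin/sh
# 3.25 GB (1024^3 bytes per GB, as the lab's numbers imply) expressed in the
# 1 KiB blocks that /proc/partitions reports. 3.25 = 13/4, so shell integer
# arithmetic gives the exact value.
expected_blocks=$((13 * 1024 * 1024 / 4))   # 3407872

# Hypothetical helper: print the #blocks column for device $2 from a file in
# /proc/partitions format ("major minor #blocks name").
partition_blocks() {
    awk -v dev="$2" '$4 == dev { print $3 }' "$1"
}

# Return 0 if sdb and sdc both have the expected size. Reads $1, or
# /proc/partitions when no argument is given.
check_shared_disks() {
    pfile=${1:-/proc/partitions}
    for dev in sdb sdc; do
        blocks=$(partition_blocks "$pfile" "$dev")
        if [ "$blocks" != "$expected_blocks" ]; then
            echo "$dev: found '${blocks:-nothing}' blocks, expected $expected_blocks"
            return 1
        fi
    done
    echo "sdb and sdc both have $expected_blocks blocks"
}
```

After the disks are added and the VM is booted, running `check_shared_disks` as root on collabn1 performs the same comparison as the manual `cat /proc/partitions` step.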

-

In the Inventory tab at the left, select collabn1 (the virtual machine we just created).

-

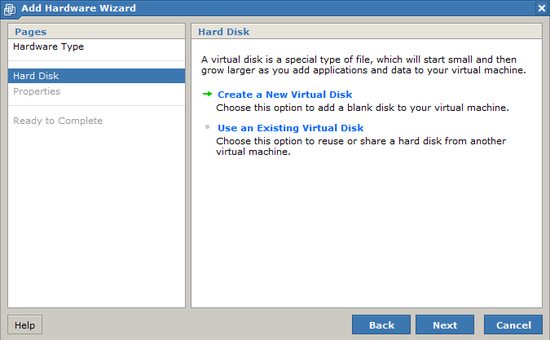

From the Commands box, click Add Hardware. In the window that appears, click Hard Disk.

-

Choose to Create a New Virtual Disk and click Next.

-

Enter a capacity of 3.25 GB and type the name “[RAC11g-shared] data.vmdk”.

- Choose File Options → Allocate all disk space now.

- Choose Disk Mode → Independent and Persistent.

- Choose Virtual Device Node → SCSI 1:0. Click Next to continue.

-

Click Finish to create the disk.

It may take a moment for the disk to appear in the VMware console. Wait until the new disk appears before you continue with the lab. The web browser may also display an error that requires you to reload the page and log in to VMware again.

- Repeat steps 1-5 for the second disk (listed in the table at the beginning of this lab).

-

CONFIRM that your list of hard disks and network devices matches this screenshot.

-

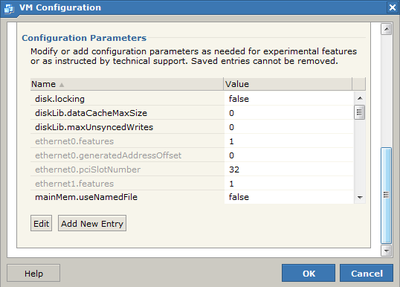

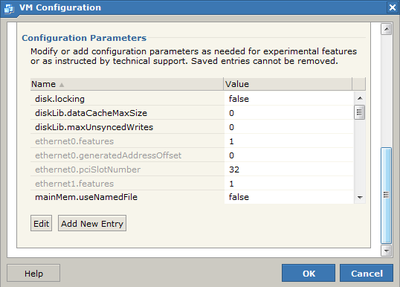

From the Commands box, click Configure VM.

-

Click the Advanced tab and scroll down to the Configuration Parameters. Use the Add New Entry button to add the entries listed here. Click OK to save the configuration changes.

Name Value disk.locking false diskLib.dataCacheMaxSize 0 diskLib.maxUnsyncedWrites 0 mainMem.useNamedFile false

I have found the following three websites among the most useful while creating custom VMware configurations. They show how powerful and versatile VMware is, even the free VMware Server product.

- http://sanbarrow.com/vmx/vmx-advanced.html
- http://vmfaq.com/?View=entry&EntryID=25
- http://sanbarrow.com/vmx/vmx-config-ini.html
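If you prefer to edit the VM configuration file directly instead of using the web console, you can write the same four parameters into the .vmx file. The sketch below assumes a POSIX shell and a powered-off VM. The helper names set_vmx_param and apply_rac_vmx_params are hypothetical. The quoted `name = "value"` form is the usual .vmx syntax.

```sh
#!/bin/sh
# Hypothetical helpers: write the four advanced configuration parameters from
# the "Configure VM" step straight into a .vmx file. Only edit the .vmx while
# the VM is powered off.

# Set NAME = "VALUE" in .vmx file $1, replacing any earlier line for NAME
# (the match is case-sensitive).
set_vmx_param() {
    vmx=$1 name=$2 value=$3
    name_re=$(printf '%s' "$name" | sed 's/\./\\./g')   # escape dots
    scratch=$(mktemp) || return 1
    grep -v "^[[:space:]]*$name_re[[:space:]]*=" "$vmx" > "$scratch" || :
    printf '%s = "%s"\n' "$name" "$value" >> "$scratch"
    cat "$scratch" > "$vmx"     # rewrite in place, keeping owner and mode
    rm -f "$scratch"
}

apply_rac_vmx_params() {
    set_vmx_param "$1" disk.locking false
    set_vmx_param "$1" diskLib.dataCacheMaxSize 0
    set_vmx_param "$1" diskLib.maxUnsyncedWrites 0
    set_vmx_param "$1" mainMem.useNamedFile false
}
```

For example, `apply_rac_vmx_params /path/to/RAC11g/collabn1/collabn1.vmx`, where the path is a placeholder for your own [RAC11g] location. Afterwards, check the Advanced tab in the console to confirm that the entries appear.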

Copy VM

[edit | edit source]-

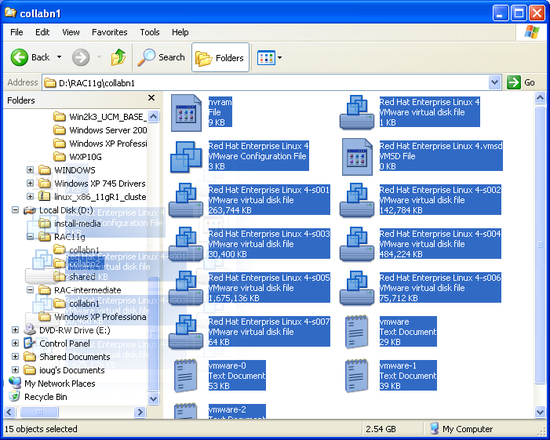

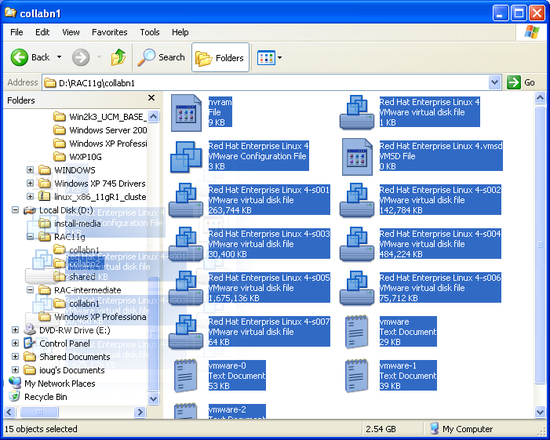

In Windows Explorer, browse to the folder [RAC11g] \collabn1. Copy all of the files to the folder [RAC11g] \collabn2.

- The location of [RAC11g] was determined in the first lab. In a class, the instructor may provide the location.

- If the collabn2 folder does not exist, then create it.

-

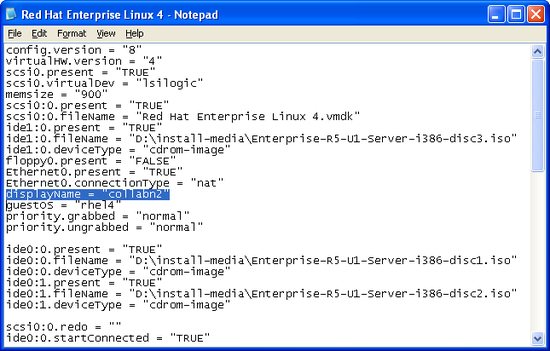

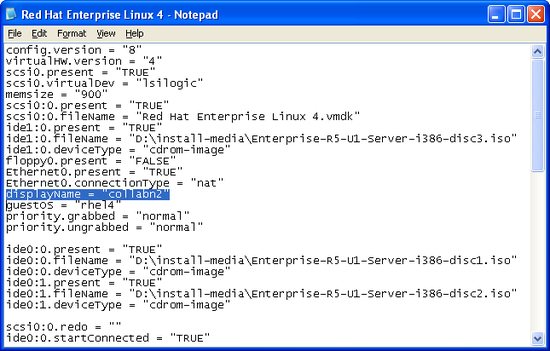

Browse to [RAC11g] \collabn2. Edit the file collabn1.vmx (the VMware Configuration File). You can use notepad or wordpad to edit the file.

-

Find the line displayName and change it to collabn2 (the new node name), then save and close the file.

displayName = "collabn2"
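If your host runs Linux instead of Windows, you can script the same displayName edit. The sketch below assumes a POSIX shell and sed, and set_display_name is a hypothetical helper name. The lab itself does this step in Notepad or WordPad.

```sh
#!/bin/sh
# Hypothetical helper for Linux hosts: set the displayName line of the copied
# .vmx file ($1) to a new VM name ($2), leaving every other line unchanged.
set_display_name() {
    vmx=$1 newname=$2
    sed "s/^displayName[[:space:]]*=.*/displayName = \"$newname\"/" "$vmx" > "$vmx.tmp" &&
        cat "$vmx.tmp" > "$vmx" && rm -f "$vmx.tmp"
}
```

For example, `set_display_name /path/to/RAC11g/collabn2/collabn1.vmx collabn2`, with the path adjusted to your own [RAC11g] location.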

Configure Disks

- In the Inventory tab at the left, select collabn1.

- Choose the Console tab. Click the play button to start the VM. When you see the square boxes, click anywhere to open a console window.

- Log in as the oracle user with the password racattack.

- Open a terminal window.

- Switch to the root user with the "su -" command. (The password is racattack.) CONFIRM that the two new disks exist and that they have the appropriate sizes. Your sdb and sdc lines should exactly match the two shown here.
[root@collabn1 ~]# cat /proc/partitions
major minor #blocks name
...
8 16 3407872 sdb
8 32 3407872 sdc

- Create a partition on each of the newly created disks with fdisk.
  - Run fdisk /dev/sdb. You should see the message "Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel".
  - Type "n" to create a new partition.
  - Type "p" for a primary partition.
  - Type partition number 1.
  - Press Enter twice to accept the default first and last cylinders.
  - Type "t" to set the partition type.
  - Enter partition type da (Non-FS data).
  - Type "w" to write the partition table to disk.

- Repeat the fdisk steps above for disk /dev/sdc.
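Instead of typing the fdisk keystrokes listed above, you can pipe them into fdisk. The following is a minimal sketch. It assumes the EL5 fdisk used in this lab, which selects partition 1 automatically after "t" when that is the only partition. fdisk_keystrokes is a hypothetical name. Other fdisk versions may ask different questions, so walk through fdisk interactively once before you rely on this.

```sh
#!/bin/sh
# Hypothetical helper: print the fdisk keystrokes from the steps above, one
# per line, so they can be piped into fdisk instead of typed by hand:
#   n      new partition
#   p      primary partition
#   1      partition number 1
#   (two empty lines) accept the default first and last cylinders
#   t      change the partition type (partition 1 is the only one)
#   da     Non-FS data
#   w      write the partition table and exit
fdisk_keystrokes() {
    printf '%s\n' n p 1 '' '' t da w
}
```

As root, `fdisk_keystrokes | fdisk /dev/sdb` and then `fdisk_keystrokes | fdisk /dev/sdc`. Afterwards, `fdisk -l /dev/sdb` should list sdb1 with Id da.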

Configure Node 1

After completing the previous lab, you should already be logged in as the oracle user on collabn1. You should also have a terminal open in which you have switched to the root user.

- As the root user, CONFIRM that the IP address of eth0 starts with 192.168.78 and that the IP address of eth1 starts with 172.16.100.
[root@collabn1 ~]# ifconfig|grep net
eth0 Link encap:Ethernet HWaddr 00:0C:29:07:F5:C0
inet addr:192.168.78.128 Bcast:192.168.78.255 Mask:255.255.255.0
inet6 addr: fe80::20c:29ff:fe07:f5c0/64 Scope:Link
eth1 Link encap:Ethernet HWaddr 00:0C:29:07:F5:CA
inet addr:172.16.100.128 Bcast:172.16.100.255 Mask:255.255.255.0
inet6 addr: fe80::20c:29ff:fe07:f5ca/64 Scope:Link
inet addr:127.0.0.1 Mask:255.0.0.0
inet6 addr: ::1/128 Scope:Host

- Update the IP addresses by directly editing the Linux network configuration files. Update the BOOTPROTO line and add the remaining lines.
[root@collabn1 ~]# cd /etc/sysconfig/network-scripts
[root@collabn1 network-scripts]# gedit ifcfg-eth0
BOOTPROTO=none
IPADDR=192.168.78.51
NETMASK=255.255.255.0
GATEWAY=192.168.78.2
[root@collabn1 network-scripts]# gedit ifcfg-eth1
BOOTPROTO=none
IPADDR=172.16.100.51
NETMASK=255.255.255.0

- Update the DNS search domain.
[root@collabn1 network-scripts]# gedit /etc/resolv.conf
search vm.ardentperf.com
nameserver 192.168.78.2

- As root, restart the network services by typing service network restart. Then confirm the new IP addresses with ifconfig. Also confirm the search domain by inspecting /etc/resolv.conf; if the file has reverted, edit it again. (When I wrote this lab, the change stuck after the second time I edited the file.)

You must perform this step in VMware; do not use PuTTY.

- Edit /etc/hosts. EDIT the line with 127.0.0.1 and then ADD all of the other lines below:
[root@collabn1 etc]# vi /etc/hosts
192.168.78.51 collabn1 collabn1.vm.ardentperf.com
192.168.78.61 collabn1-vip collabn1-vip.vm.ardentperf.com
172.16.100.51 collabn1-priv collabn1-priv.vm.ardentperf.com
192.168.78.52 collabn2 collabn2.vm.ardentperf.com
192.168.78.62 collabn2-vip collabn2-vip.vm.ardentperf.com
172.16.100.52 collabn2-priv collabn2-priv.vm.ardentperf.com
192.168.78.250 collab-scan collab-scan.vm.ardentperf.com
192.168.78.251 collab-gns collab-gns.vm.ardentperf.com
::1 localhost6.localdomain6 localhost6
127.0.0.1 localhost.localdomain localhost
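Both nodes use the same ifcfg layout and differ only in the last octet of each address. The following minimal sketch generates those lines from the lab's address plan. It assumes a POSIX shell, and ifcfg_lines and update_ifcfg are hypothetical helper names. update_ifcfg rewrites the file in place, so try it on a copy first.

```sh
#!/bin/sh
# Hypothetical helpers: generate the static-address lines from the ifcfg edits
# in this lab, using the lab's address plan for node N (1 or 2):
#   eth0 (public)  192.168.78.5N  netmask 255.255.255.0  gateway 192.168.78.2
#   eth1 (private) 172.16.100.5N  netmask 255.255.255.0
ifcfg_lines() {
    iface=$1 node=$2
    case $node in
        1|2) ;;
        *) echo "node must be 1 or 2" >&2; return 1 ;;
    esac
    case $iface in
        eth0) printf 'BOOTPROTO=none\nIPADDR=192.168.78.5%s\nNETMASK=255.255.255.0\nGATEWAY=192.168.78.2\n' "$node" ;;
        eth1) printf 'BOOTPROTO=none\nIPADDR=172.16.100.5%s\nNETMASK=255.255.255.0\n' "$node" ;;
        *) echo "unknown interface: $iface" >&2; return 1 ;;
    esac
}

# Rewrite ifcfg file $1 for interface $2 on node $3: keep unrelated lines
# (DEVICE, ONBOOT, ...), replace BOOTPROTO/IPADDR/NETMASK/GATEWAY, and on
# node 2 comment out HWADDR, because the copied VM has new MAC addresses.
update_ifcfg() {
    cfg=$1
    new=$(ifcfg_lines "$2" "$3") || return 1
    {
        grep -v -E '^(BOOTPROTO|IPADDR|NETMASK|GATEWAY)=' "$cfg" |
            if [ "$3" = 2 ]; then sed 's/^HWADDR=/#HWADDR=/'; else cat; fi
        printf '%s\n' "$new"
    } > "$cfg.new" && cat "$cfg.new" > "$cfg" && rm -f "$cfg.new"
}
```

For example, on collabn2 as root in /etc/sysconfig/network-scripts, run `update_ifcfg ifcfg-eth0 eth0 2` and `update_ifcfg ifcfg-eth1 eth1 2`. Then restart networking from the VMware console as described in the lab.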

Configure Node 2

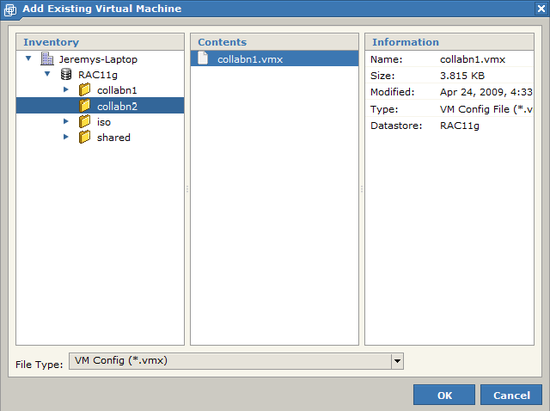

[edit | edit source]-

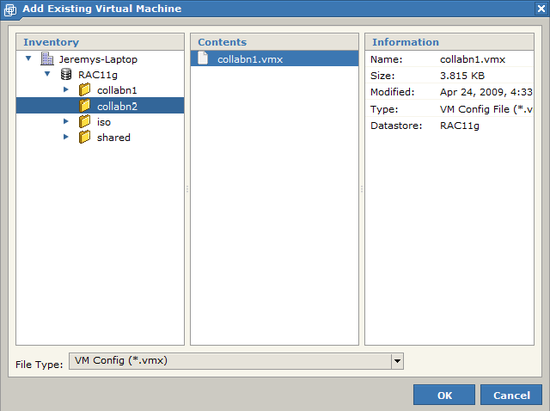

In the VMware console, go to the Virtual Machine menu and choose Add Virtual Machine to Inventory.

-

Browse to RAC11g/collabn2 and open collabn1.vmx. Make sure you browse to the right folder!

-

In the Inventory tab at the left, select the new VM – collabn2. Choose the Console tab and click the play button to start the VM.

-

The VMware console should ask you whether you copied or moved the VM. Answer that you copied the files and click OK.

-

When you see the square boxes, click anywhere to open a console window.

-

Login as the oracle user with the password racattack.

-

Open a terminal ( Applications >> Accessories >> Terminal ) and switch to the root user.

When you first open a console on collabn2, you will notice that the hostname still says collabn1. This is because we copied the machine; we will change the hostname shortly.

When you first open a console on collabn2, you will notice that the hostname still says collabn1. This is because we copied the machine; we will change the hostname shortly.

- CONFIRM that the IP address of eth1 starts with 172.16.100.
[root@collabn1 ~]# ifconfig|grep net
eth1 Link encap:Ethernet HWaddr 00:0C:29:C9:3E:C8
inet addr:172.16.100.129 Bcast:172.16.100.255 Mask:255.255.255.0
inet addr:127.0.0.1 Mask:255.0.0.0

- Update the IP addresses by directly editing the Linux network configuration files. Update the BOOTPROTO line and add the remaining lines. COMMENT OUT or DELETE the line that begins with HWADDR.
[root@collabn1 ~]# cd /etc/sysconfig/network-scripts
[root@collabn1 network-scripts]# gedit ifcfg-eth0
BOOTPROTO=none
#HWADDR=...
IPADDR=192.168.78.52
NETMASK=255.255.255.0
GATEWAY=192.168.78.2
[root@collabn1 network-scripts]# gedit ifcfg-eth1
BOOTPROTO=none
#HWADDR=...
IPADDR=172.16.100.52
NETMASK=255.255.255.0

- Update the DNS search domain.
[root@collabn1 network-scripts]# gedit /etc/resolv.conf
search vm.ardentperf.com
nameserver 192.168.78.2

- Update the HOSTNAME by editing the Linux system configuration files. Set it to collabn2.vm.ardentperf.com. Then change the active system hostname with the hostname command.
[root@collabn1 network-scripts]# gedit /etc/sysconfig/network
HOSTNAME=collabn2.vm.ardentperf.com
[root@collabn1 network-scripts]# hostname collabn2.vm.ardentperf.com

- As root, restart the network services by typing service network restart. Then confirm the new IP addresses with ifconfig. Confirm the search domain by inspecting /etc/resolv.conf; if the file has reverted, edit it again. (The change stuck after the second time I edited the file while walking through this lab.) Also confirm the new hostname with hostname.

You must perform this step in VMware; do not use PuTTY.

- Exit your terminal session and start a new one so that you can see the updated hostname in the prompt.

- Edit /etc/hosts. EDIT the line with 127.0.0.1 and then ADD all of the other lines below:
[root@collabn2 etc]# vi /etc/hosts
192.168.78.51 collabn1 collabn1.vm.ardentperf.com
192.168.78.61 collabn1-vip collabn1-vip.vm.ardentperf.com
172.16.100.51 collabn1-priv collabn1-priv.vm.ardentperf.com
192.168.78.52 collabn2 collabn2.vm.ardentperf.com
192.168.78.62 collabn2-vip collabn2-vip.vm.ardentperf.com
172.16.100.52 collabn2-priv collabn2-priv.vm.ardentperf.com
192.168.78.250 collab-scan collab-scan.vm.ardentperf.com
192.168.78.251 collab-gns collab-gns.vm.ardentperf.com
127.0.0.1 localhost.localdomain localhost
::1 localhost6.localdomain6 localhost6
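The /etc/hosts entries are identical on both nodes and follow a fixed pattern. The following minimal sketch rebuilds them from that pattern and checks a hosts file against them. It assumes a POSIX shell, and cluster_hosts and check_hosts_file are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helpers: build the /etc/hosts entries used on both nodes from
# the lab's address plan, and check that a hosts file contains all of them.
#   node N: public 192.168.78.5N, VIP 192.168.78.6N, private 172.16.100.5N
cluster_hosts() {
    domain=vm.ardentperf.com
    for n in 1 2; do
        printf '192.168.78.5%s collabn%s collabn%s.%s\n' "$n" "$n" "$n" "$domain"
        printf '192.168.78.6%s collabn%s-vip collabn%s-vip.%s\n' "$n" "$n" "$n" "$domain"
        printf '172.16.100.5%s collabn%s-priv collabn%s-priv.%s\n' "$n" "$n" "$n" "$domain"
    done
    printf '192.168.78.250 collab-scan collab-scan.%s\n' "$domain"
    printf '192.168.78.251 collab-gns collab-gns.%s\n' "$domain"
    printf '127.0.0.1 localhost.localdomain localhost\n'
    printf '::1 localhost6.localdomain6 localhost6\n'
}

# Return 0 if hosts file $1 has every entry (compared after squeezing
# whitespace, so tabs and aligned columns are fine); print missing ones.
check_hosts_file() {
    rc=0
    normalized=$(tr '\t' ' ' < "$1" | tr -s ' ' | sed 's/^ //; s/ $//')
    while IFS= read -r entry; do
        if ! printf '%s\n' "$normalized" | grep -xF "$entry" >/dev/null; then
            echo "missing: $entry"
            rc=1
        fi
    done <<EOF
$(cluster_hosts)
EOF
    return "$rc"
}
```

Running `check_hosts_file /etc/hosts` on each node prints any line that is still missing.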

SSH and CVU

- Log in to node collabn2. As the oracle user, make sure that you can ping both nodes on the interconnect and on the public network.
[oracle@collabn2 ~]$ ping -c 1 collabn1
PING collabn1 (192.168.78.51) 56(84) bytes of data.
64 bytes from collabn1 (192.168.78.51): icmp_seq=1 ttl=64 time=0.793 ms
[oracle@collabn2 ~]$ ping -c 1 collabn2
PING collabn2 (192.168.78.52) 56(84) bytes of data.
64 bytes from collabn2 (192.168.78.52): icmp_seq=1 ttl=64 time=0.024 ms
[oracle@collabn2 ~]$ ping -c 1 collabn1-priv
PING collabn1-priv (172.16.100.51) 56(84) bytes of data.
64 bytes from collabn1-priv (172.16.100.51): icmp_seq=1 ttl=128 time=0.901 ms
[oracle@collabn2 ~]$ ping -c 1 collabn2-priv
PING collabn2-priv (172.16.100.52) 56(84) bytes of data.
64 bytes from collabn2-priv (172.16.100.52): icmp_seq=1 ttl=64 time=0.058 ms

- Store the public keys for the hosts. You can do this with a tool called ssh-keyscan.
[oracle@collabn2 .ssh]$ ssh-keyscan -t rsa collabn1 collabn1-priv collabn2 collabn2-priv >> ~/.ssh/known_hosts
# collabn1 SSH-2.0-OpenSSH_4.3
# collabn1-priv SSH-2.0-OpenSSH_4.3
# collabn2 SSH-2.0-OpenSSH_4.3
# collabn2-priv SSH-2.0-OpenSSH_4.3

- Test SSH equivalence. There must be no password prompt; you should be logged in automatically.
[oracle@collabn2 .ssh]$ ssh collabn1
Warning: Permanently added the RSA host key for IP address '192.168.78.51' to the list of known hosts.
Last login: Tue Apr 15 16:59:36 2008 from collabn2
[oracle@collabn1 ~]$ exit
logout
Connection to collabn1 closed.

- Switch to node collabn1. As the oracle user, confirm that you can ping both servers. Then copy the known_hosts file from collabn2.
[oracle@collabn1 ~]$ ping -c 1 collabn1
PING collabn1 (192.168.78.51) 56(84) bytes of data.
64 bytes from collabn1 (192.168.78.51): icmp_seq=1 ttl=64 time=0.793 ms
[oracle@collabn1 ~]$ ping -c 1 collabn2
PING collabn2 (192.168.78.52) 56(84) bytes of data.
64 bytes from collabn2 (192.168.78.52): icmp_seq=1 ttl=64 time=0.024 ms
[oracle@collabn1 ~]$ ping -c 1 collabn1-priv
PING collabn1-priv (172.16.100.51) 56(84) bytes of data.
64 bytes from collabn1-priv (172.16.100.51): icmp_seq=1 ttl=128 time=0.901 ms
[oracle@collabn1 ~]$ ping -c 1 collabn2-priv
PING collabn2-priv (172.16.100.52) 56(84) bytes of data.
64 bytes from collabn2-priv (172.16.100.52): icmp_seq=1 ttl=64 time=0.058 ms
[oracle@collabn1 ~]$ scp collabn2:.ssh/known_hosts ~/.ssh/known_hosts
The authenticity of host 'collabn2 (192.168.78.52)' can't be established.
RSA key fingerprint is 3f:65:e6:77:af:11:84:56:a4:b9:0f:8b:41:b4:2f:8a.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'collabn2,192.168.78.52' (RSA) to the list of known hosts.
known_hosts 100% 3146 3.1KB/s 00:00

- Test SSH equivalence.
[oracle@collabn1 ~]$ ssh collabn2
Last login: Tue Apr 15 17:02:29 2008 from collabn2
[oracle@collabn2 ~]$ exit
logout
Connection to collabn2 closed.

- You also need to be able to log in to the local node; test this too.
[oracle@collabn1 ~]$ ssh collabn1
Last login: Tue Apr 15 17:03:39 2008 from collabn2
[oracle@collabn1 ~]$ exit
logout
Connection to collabn1 closed.

- Run CLUVFY to validate your hardware and OS installation! The shared disk test may fail; this is OK, so ignore that error.
collabn1:/home/oracle[RAC1]$ /mnt/cdrom*/grid/runcluvfy.sh stage -post hwos -n collabn1,collabn2
Performing post-checks for hardware and operating system setup
Checking node reachability...
Node reachability check passed from node "collabn1"
Checking user equivalence...
User equivalence check passed for user "oracle"
Checking node connectivity...
Checking hosts config file...
Verification of the hosts config file successful
Node connectivity passed for subnet "192.168.78.0" with node(s) collabn2,collabn1
TCP connectivity check passed for subnet "192.168.78.0"
Node connectivity passed for subnet "172.16.100.0" with node(s) collabn2,collabn1
TCP connectivity check passed for subnet "172.16.100.0"
Interfaces found on subnet "192.168.78.0" that are likely candidates for VIP are:
collabn2 eth0:192.168.78.52
collabn1 eth0:192.168.78.51
Interfaces found on subnet "172.16.100.0" that are likely candidates for a private interconnect are:
collabn2 eth1:172.16.100.52
collabn1 eth1:172.16.100.51
Node connectivity check passed
Check for multiple users with UID value 0 passed
Post-check for hardware and operating system setup was successful.
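You can repeat the interactive ssh tests above in one pass. The following is a minimal sketch that assumes OpenSSH's BatchMode and ConnectTimeout client options. check_equivalence and its SSH_CMD override are hypothetical names. With BatchMode enabled, ssh fails instead of prompting. A missing key or unknown host therefore shows up as FAILED rather than leaving the session waiting for input.

```sh
#!/bin/sh
# Hypothetical helper: confirm SSH user equivalence to each host given as an
# argument, without any prompts. BatchMode=yes makes ssh fail instead of
# asking for a password or passphrase; ConnectTimeout bounds the wait for an
# unreachable host. SSH_CMD can override the ssh command (e.g. with a stub).
check_equivalence() {
    ssh_cmd=${SSH_CMD:-ssh}
    failed=0
    for host in "$@"; do
        if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "$host" true </dev/null; then
            echo "OK:     $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}
```

As the oracle user on each node, run `check_equivalence collabn1 collabn1-priv collabn2 collabn2-priv`. Every line should read OK.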

Grid Install (ASM)

Setup ASMLIB

- Log in to collabn1 as oracle and open a terminal. Switch to the root user.

- Install ASMLib on both nodes and initialize the disks. The mount command may fail (mount: /dev/cdrom-hda already mounted or /mnt/cdrom busy) if the CD-ROM is already mounted from previous steps.

[root@collabn1 ~]# cd /mnt

[root@collabn1 mnt]# mount /mnt/cdrom

mount: block device /dev/cdrom-hda is write-protected, mounting read-only

[root@collabn1 mnt]# mount /mnt/cdrom5

mount: block device /dev/cdrom-hdb is write-protected, mounting read-only

# OracleASM

rpm -Uvh */*/oracleasm-support*

rpm -Uvh */*/oracleasm*el5-2* # (Note - that's lowercase EL5-2.)

# Missing from the install media; copied to RAC Attack DVD.

rpm -Uvh */oracleasmlib*

[root@collabn1 mnt]# /etc/init.d/oracleasm configure

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: oracle

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Fix permissions of Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

Initializing the Oracle ASMLib driver: [ OK ]

Scanning the system for Oracle ASMLib disks: [ OK ]

The disks are shared, so you only need to initialize them once.

[root@collabn1 ~]# /etc/init.d/oracleasm createdisk data /dev/sdb1
Marking disk "data" as an ASM disk: [ OK ]
[root@collabn1 ~]# /etc/init.d/oracleasm createdisk backup /dev/sdc1
Marking disk "backup" as an ASM disk: [ OK ]
[root@collabn1 ~]# /etc/init.d/oracleasm listdisks
BACKUP
DATA

Now repeat some of the steps on the second virtual machine:

[root@collabn1 ~]# ssh collabn2
[root@collabn2 ~]# cd /mnt
# OracleASM
rpm -Uvh */*/oracleasm-support*
rpm -Uvh */*/oracleasm*el5-2*
# Missing from the install media; copied to RAC Attack DVD.
rpm -Uvh */oracleasmlib*
[root@collabn2 ~]# /etc/init.d/oracleasm configure
Configuring the Oracle ASM library driver.
This will configure the on-boot properties of the Oracle ASM library
driver. The following questions will determine whether the driver is
loaded on boot and what permissions it will have. The current values
will be shown in brackets ('[]'). Hitting <ENTER> without typing an
answer will keep that current value. Ctrl-C will abort.
Default user to own the driver interface []: oracle
Default group to own the driver interface []: dba
Start Oracle ASM library driver on boot (y/n) [n]: y
Fix permissions of Oracle ASM disks on boot (y/n) [y]: y
Writing Oracle ASM library driver configuration: done
Initializing the Oracle ASMLib driver: [ OK ]
Scanning the system for Oracle ASMLib disks: [ OK ]
[root@collabn2 ~]# /etc/init.d/oracleasm listdisks
BACKUP
DATA

Cluster Verification Utility (ASM)

- Log in to collabn1 as the oracle user and open a terminal.

- Run CVU to validate that you're ready to install CRS. Use the new “fixup” feature of 11gR2 CVU to create a script that can fix missing parameters.
[oracle@collabn1 ~]$ /mnt/cdrom*/grid/runcluvfy.sh stage -pre crsinst -n collabn1,collabn2 -fixup -r 11gR2
Performing pre-checks for cluster services setup
Checking node reachability...
Node reachability check passed from node "collabn1"
Checking user equivalence...
User equivalence check passed for user "oracle"
Checking node connectivity...
Checking hosts config file...
Verification of the hosts config file successful
Node connectivity passed for subnet "192.168.78.0" with node(s) collabn2,collabn1
TCP connectivity check passed for subnet "192.168.78.0"
Node connectivity passed for subnet "172.16.100.0" with node(s) collabn2,collabn1
TCP connectivity check passed for subnet "172.16.100.0"
Interfaces found on subnet "192.168.78.0" that are likely candidates for VIP are:
collabn2 eth0:192.168.78.52
collabn1 eth0:192.168.78.51
Interfaces found on subnet "172.16.100.0" that are likely candidates for a private interconnect are:
collabn2 eth1:172.16.100.52
collabn1 eth1:172.16.100.51
Node connectivity check passed
Total memory check failed
Check failed on nodes: collabn2,collabn1
Available memory check passed
Swap space check failed
Free disk space check passed for "collabn2:/tmp"
Free disk space check passed for "collabn1:/tmp"
User existence check passed for "oracle"
Group existence check passed for "oinstall"
Group existence check passed for "dba"
Membership check for user "oracle" in group "oinstall" [as Primary] passed
Membership check for user "oracle" in group "dba" passed
Run level check passed
Hard limits check passed for "maximum open file descriptors"
Soft limits check passed for "maximum open file descriptors"
Hard limits check passed for "maximum user processes"
Soft limits check passed for "maximum user processes"
System architecture check passed
Kernel version check passed
Kernel parameter check passed for "semmsl"
Kernel parameter check passed for "semmns"
Kernel parameter check passed for "semopm"
Kernel parameter check passed for "semmni"
Kernel parameter check passed for "shmmax"
Kernel parameter check passed for "shmmni"
Kernel parameter check passed for "shmall"
Kernel parameter check failed for "file-max"
Check failed on nodes: collabn2,collabn1
Kernel parameter check passed for "ip_local_port_range"
Kernel parameter check passed for "rmem_default"
Kernel parameter check passed for "rmem_max"
Kernel parameter check passed for "wmem_default"
Kernel parameter check failed for "wmem_max"
Check failed on nodes: collabn2,collabn1
Kernel parameter check failed for "aio-max-nr"
Check failed on nodes: collabn2,collabn1
Package existence check passed for "make-3.81"
Package existence check passed for "binutils-2.17.50.0.6"
Package existence check passed for "gcc-4.1.2"
Package existence check passed for "gcc-c++-4.1.2"
Package existence check passed for "libgomp-4.1.2"
Package existence check passed for "libaio-0.3.106"
Package existence check passed for "glibc-2.5-24"
Package existence check passed for "compat-libstdc++-33-3.2.3"
Package existence check passed for "elfutils-libelf-0.125"
Package existence check passed for "elfutils-libelf-devel-0.125"
Package existence check passed for "glibc-common-2.5"
Package existence check passed for "glibc-devel-2.5"
Package existence check passed for "glibc-headers-2.5"
Package existence check passed for "libaio-devel-0.3.106"
Package existence check passed for "libgcc-4.1.2"
Package existence check passed for "libstdc++-4.1.2"
Package existence check passed for "libstdc++-devel-4.1.2"
Package existence check passed for "sysstat-7.0.2"
Package existence check passed for "unixODBC-2.2.11"
Package existence check passed for "unixODBC-devel-2.2.11"
Package existence check passed for "ksh-20060214"
Check for multiple users with UID value 0 passed
Current group ID check passed
Core file name pattern consistency check passed.
User "oracle" is not part of "root" group. Check passed
Default user file creation mask check passed
Starting Clock synchronization checks using Network Time Protocol(NTP)...
NTP Configuration file check started...
NTP Configuration file check passed
Checking daemon liveness...
Liveness check failed for "ntpd"
Check failed on nodes: collabn2,collabn1
PRVF-5415 : Check to see if NTP daemon is running failed
Clock synchronization check using Network Time Protocol(NTP) failed
Fixup information has been generated for following node(s):
collabn2,collabn1
Please run the following script on each node as "root" user to execute the fixups:
'/tmp/CVU_11.2.0.1.0_oracle/runfixup.sh'
Pre-check for cluster services setup was unsuccessful on all the nodes.

- Switch to the root user and run the fixup script on both nodes. The "oraenv: No such file or directory" messages are expected at this point: oraenv is copied to /usr/bin later, when root.sh runs during the grid installation.
collabn1:/home/oracle[RAC1]$ su -
Password:
-bash: oraenv: No such file or directory
[root@collabn1 ~]# /tmp/CVU_11.2.0.1.0_oracle/runfixup.sh
Response file being used is :/tmp/CVU_11.2.0.1.0_oracle/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.1.0_oracle/fixup.enable
Log file location: /tmp/CVU_11.2.0.1.0_oracle/orarun.log
Setting Kernel Parameters...
fs.file-max = 6815744
net.ipv4.ip_local_port_range = 9000 65500
net.core.wmem_max=262144
net.core.wmem_max = 1048576
fs.aio-max-nr = 1048576
[root@collabn1 ~]# ssh collabn2 /tmp/CVU_11.2.0.1.0_oracle/runfixup.sh
root@collabn2's password:
/root/.bashrc: line 16: oraenv: No such file or directory
Response file being used is :/tmp/CVU_11.2.0.1.0_oracle/fixup.response
Enable file being used is :/tmp/CVU_11.2.0.1.0_oracle/fixup.enable
Log file location: /tmp/CVU_11.2.0.1.0_oracle/orarun.log
Setting Kernel Parameters...
fs.file-max = 6815744
net.ipv4.ip_local_port_range = 9000 65500
net.core.wmem_max=262144
net.core.wmem_max = 1048576
fs.aio-max-nr = 1048576

- As the oracle user, run CVU again to make sure the fixup script worked. The NTP, memory and swap checks might fail, but this is acceptable for our VMware test cluster.
[root@collabn1 ~]# exit
logout
collabn1:/home/oracle[RAC1]$ /mnt/cdrom*/grid/runcluvfy.sh stage -pre crsinst -n collabn1,collabn2 -r 11gR2
...
Total memory check failed
...
Swap space check failed
...
Kernel parameter check passed for "file-max"
...
Kernel parameter check passed for "wmem_max"
Kernel parameter check passed for "aio-max-nr"
...
Liveness check failed for "ntpd"
...
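To confirm that a CVU run failed only on the checks this lab accepts, save the output and filter it. The following is a minimal sketch that assumes a POSIX shell and grep. check_cvu_log is a hypothetical name. Its list of accepted messages is copied from the 11.2.0.1 output shown above, so other CVU versions may word these messages differently.

```sh
#!/bin/sh
# Hypothetical helper: scan saved runcluvfy.sh output (file $1) and print any
# line containing "failed" that is not one of the failures this lab accepts
# on a VMware test cluster (total memory, swap space, NTP). Returns 1 if any
# unexpected failure remains.
check_cvu_log() {
    unexpected=$(grep 'failed' "$1" | grep -v -F \
        -e 'Total memory check failed' \
        -e 'Swap space check failed' \
        -e 'Liveness check failed for "ntpd"' \
        -e 'Check failed on nodes' \
        -e 'PRVF-5415' \
        -e 'Clock synchronization check using Network Time Protocol(NTP) failed' || :)
    if [ -n "$unexpected" ]; then
        printf '%s\n' "$unexpected"
        return 1
    fi
    echo "only the accepted memory, swap and NTP checks failed"
}
```

For example, run `/mnt/cdrom*/grid/runcluvfy.sh stage -pre crsinst -n collabn1,collabn2 -r 11gR2 | tee ~/cvu.log` and then `check_cvu_log ~/cvu.log`. Before the fixup script runs, the check lists the file-max, wmem_max and aio-max-nr failures. After the fixup, it should report only the accepted ones.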

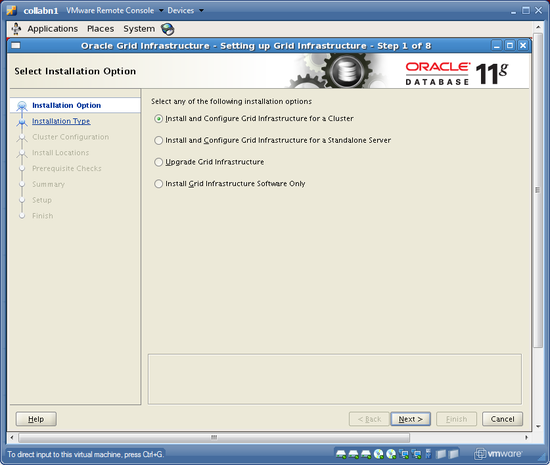

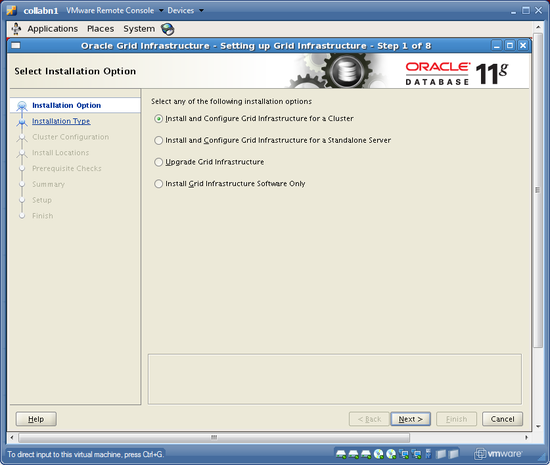

Install Grid Infrastructure (ASM)

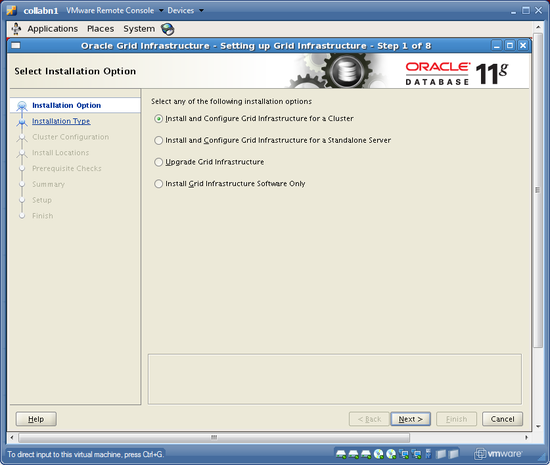

[edit | edit source]-

As the oracle user, launch the grid installer. At the first screen, choose Install and Configure Grid Infrastructure for a Cluster and click NEXT.

[oracle@collabn1 ~]$ /mnt/cdrom*/grid/runInstaller

-

Choose Advanced Installation and click NEXT.

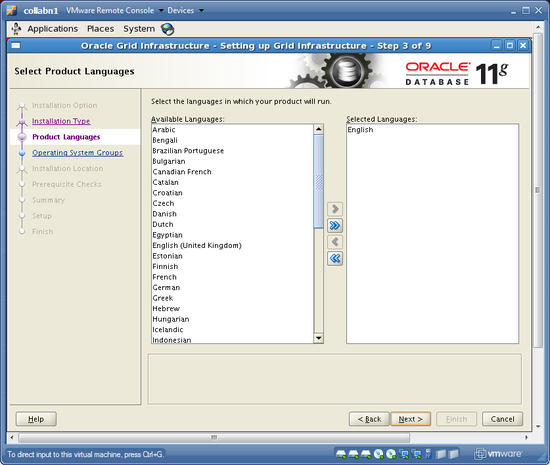

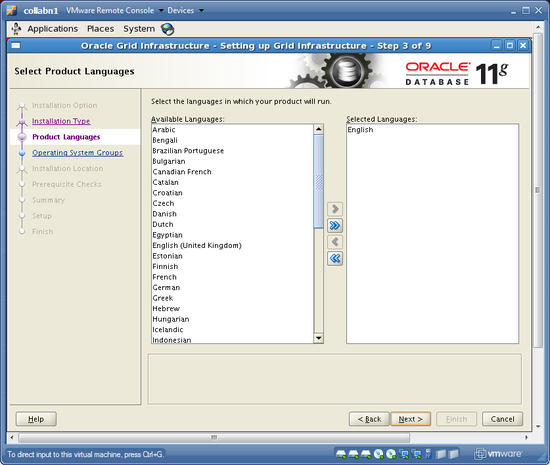

-

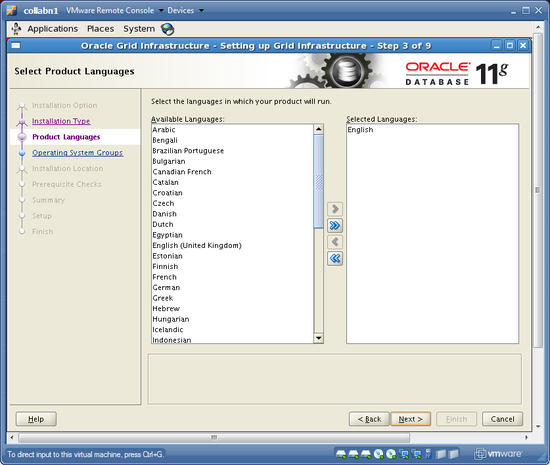

Accept the default language (English) and choose NEXT.

-

Name the cluster collab and make sure that the SCAN name is collab-scan with port 1521, then click NEXT.

-

Add node collabn2 with VIP collabn2-vip and choose NEXT to validate the cluster configuration.

-

Verify that eth0 on subnet 192.168.78.0 is PUBLIC and that eth1 on subnet 172.16.100.0 is PRIVATE, then click NEXT.

-

Choose to store the Clusterware Files in ASM and choose NEXT.

-

Create a diskgroup called DATA with External Redundancy using only the disk ORCL:DATA and click NEXT.

-

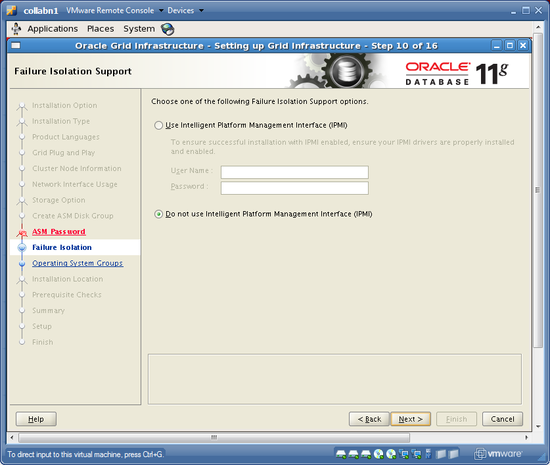

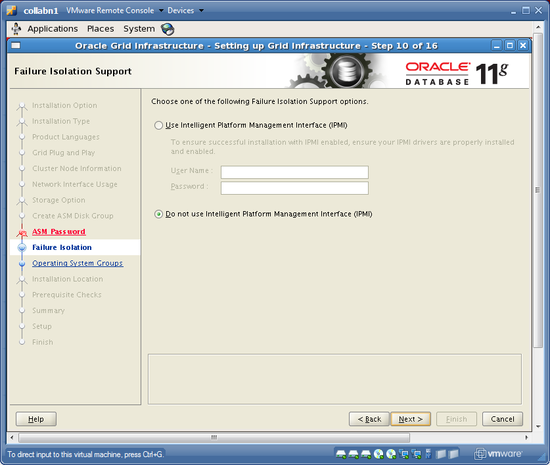

Choose to use the same passwords for all accounts and enter the password racattack, then click NEXT. (Ignore the message that Oracle doesn't like this password.)

-

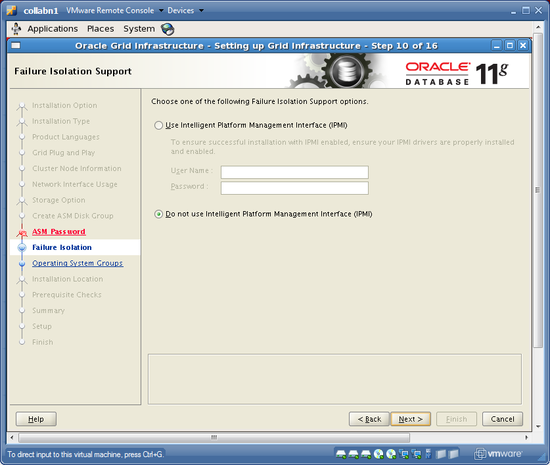

Do not use IPMI. Click NEXT.

-

Set the OSDBA group to asmdba, the OSOPER group to asmoper and the OSASM group to asmadmin. Then click NEXT.

-

Accept the ORACLE_BASE location of /u01/app/oracle and use the ORACLE_HOME location of /u01/grid/oracle/product/11.2.0/grid_1. Then click NEXT.

-

Accept the default inventory location of /u01/app/oraInventory and choose NEXT

-

The prerequisite checks will execute. A warning will be issued saying that three checks failed: physical memory, swap size and network time protocol. Click the CHECK BOX to Ignore All, then click NEXT.

-

SAVE a response file called grid.rsp in the oracle user home directory. Then click FINISH to install grid infrastructure.

-

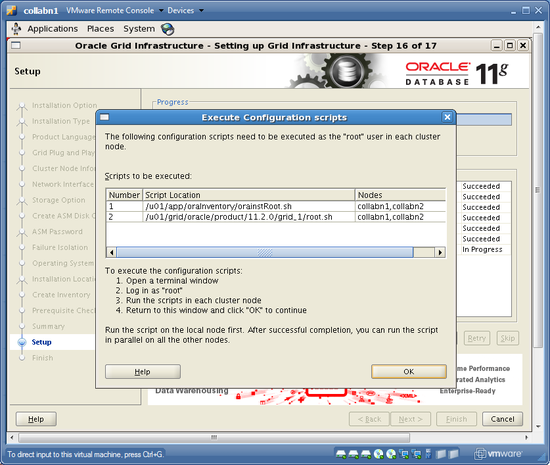

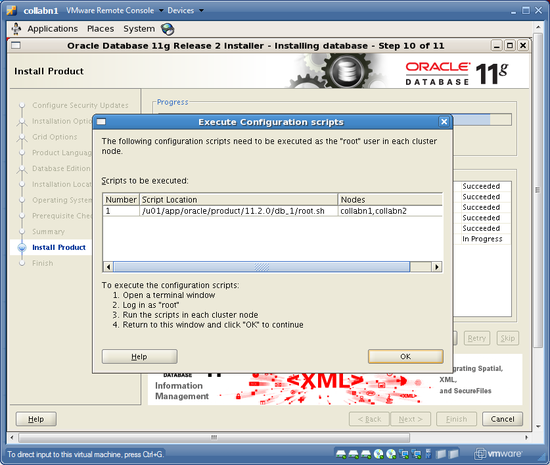

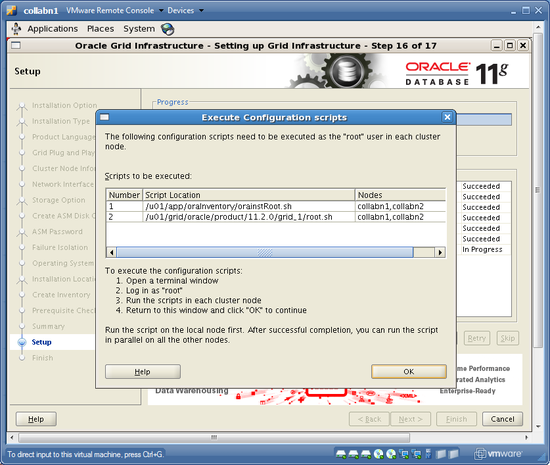

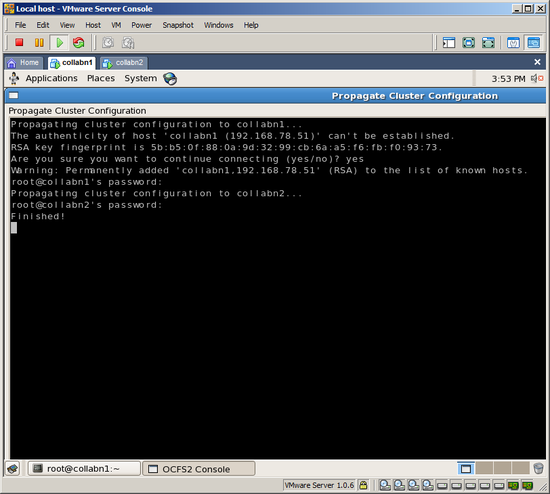

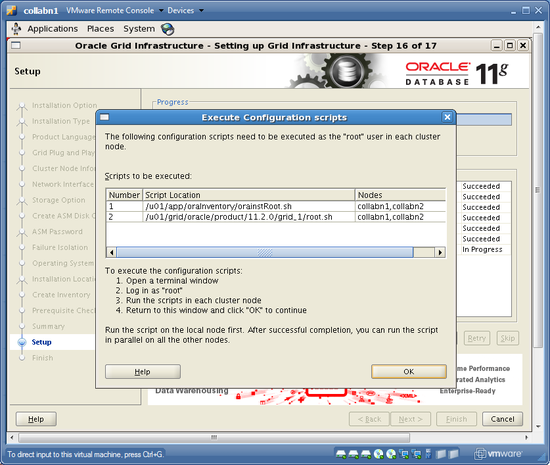

When prompted, open a terminal as the root user and run the two root.sh scripts. Make sure to run BOTH SCRIPTS on BOTH NODES!

[oracle@collabn1 ~]$ su -

Password: racattack

[root@collabn1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read, write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@collabn1 ~]# /u01/grid/oracle/product/11.2.0/grid_1/root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/grid/oracle/product/11.2.0/grid_1

Enter the full pathname of the local bin directory: [/usr/local/bin]: /usr/bin

Copying dbhome to /usr/bin ...

Copying oraenv to /usr/bin ...

Copying coraenv to /usr/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2011-03-30 16:49:13: Parsing the host name

2011-03-30 16:49:13: Checking for super user privileges

2011-03-30 16:49:13: User has super user privileges

Using configuration parameter file: /u01/grid/oracle/product/11.2.0/grid_1/crs/install/crsconfig_params

Creating trace directory

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding daemon to inittab

CRS-4123: Oracle High Availability Services has been started.

ohasd is starting

CRS-2672: Attempting to start 'ora.gipcd' on 'collabn1'

CRS-2672: Attempting to start 'ora.mdnsd' on 'collabn1'

CRS-2676: Start of 'ora.mdnsd' on 'collabn1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'collabn1'

CRS-2676: Start of 'ora.gpnpd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'collabn1'

CRS-2676: Start of 'ora.cssdmonitor' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'collabn1'

CRS-2672: Attempting to start 'ora.diskmon' on 'collabn1'

CRS-2676: Start of 'ora.diskmon' on 'collabn1' succeeded

CRS-2676: Start of 'ora.cssd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.ctssd' on 'collabn1'

CRS-2676: Start of 'ora.ctssd' on 'collabn1' succeeded

ASM created and started successfully.

DiskGroup DATA created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-2672: Attempting to start 'ora.crsd' on 'collabn1'

CRS-2676: Start of 'ora.crsd' on 'collabn1' succeeded

CRS-4256: Updating the profile

Successful addition of voting disk 6b7c5142d3214fe9bf4d4273f0ff11fd.

Successfully replaced voting disk group with +DATA.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 6b7c5142d3214fe9bf4d4273f0ff11fd (ORCL:DATA) [DATA]

Located 1 voting disk(s).

CRS-2673: Attempting to stop 'ora.crsd' on 'collabn1'

CRS-2677: Stop of 'ora.crsd' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'collabn1'

CRS-2677: Stop of 'ora.asm' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'collabn1'

CRS-2677: Stop of 'ora.ctssd' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'collabn1'

CRS-2677: Stop of 'ora.cssdmonitor' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'collabn1'

CRS-2677: Stop of 'ora.cssd' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'collabn1'

CRS-2677: Stop of 'ora.gpnpd' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'collabn1'

CRS-2677: Stop of 'ora.gipcd' on 'collabn1' succeeded

CRS-2673: Attempting to stop 'ora.mdnsd' on 'collabn1'

CRS-2677: Stop of 'ora.mdnsd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.mdnsd' on 'collabn1'

CRS-2676: Start of 'ora.mdnsd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'collabn1'

CRS-2676: Start of 'ora.gipcd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'collabn1'

CRS-2676: Start of 'ora.gpnpd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'collabn1'

CRS-2676: Start of 'ora.cssdmonitor' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'collabn1'

CRS-2672: Attempting to start 'ora.diskmon' on 'collabn1'

CRS-2676: Start of 'ora.diskmon' on 'collabn1' succeeded

CRS-2676: Start of 'ora.cssd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.ctssd' on 'collabn1'

CRS-2676: Start of 'ora.ctssd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'collabn1'

CRS-2676: Start of 'ora.asm' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'collabn1'

CRS-2676: Start of 'ora.crsd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.evmd' on 'collabn1'

CRS-2676: Start of 'ora.evmd' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'collabn1'

CRS-2676: Start of 'ora.asm' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.DATA.dg' on 'collabn1'

CRS-2676: Start of 'ora.DATA.dg' on 'collabn1' succeeded

CRS-2672: Attempting to start 'ora.registry.acfs' on 'collabn1'

CRS-2676: Start of 'ora.registry.acfs' on 'collabn1' succeeded

collabn1 2011/03/30 16:59:35 /u01/grid/oracle/product/11.2.0/grid_1/cdata/collabn1/backup_20110330_165935.olr

Preparing packages for installation...

cvuqdisk-1.0.7-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Updating inventory properties for clusterware

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 968 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.

Before you run any scripts on the second node, check the CPU utilization on the first node, where you just finished running the scripts. If %idle is 0, something is still running in the background and you should wait until %idle increases. You can monitor the CPU with any of these three commands:

- top

- vmstat 3

- iostat -x sda sdb sdc 3

- After running both scripts on both nodes, return to the installer window and click OK to continue running the configuration assistants.

- The Cluster Verification Utility will fail because NTP is not running. If you want to, check the error message at the very end of the logfile. Then click OK to close the message box and click NEXT to continue.

- You should now see the final screen! Click CLOSE to exit the installer.
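The root-script steps above follow a fixed order: both scripts on collabn1, wait for the CPU to settle, then both scripts on collabn2. The bash sketch below shows that sequence; it is not part of the original lab. It assumes you run it as root on collabn1 with ssh access as root to both nodes. The function names, the 20% idle threshold and the DRY_RUN switch are hypothetical choices, and %idle is read from the id column of vmstat.

```shell
#!/bin/bash
# Sketch: run orainstRoot.sh and root.sh on each node, one node at a time.
# Assumptions: run as root on collabn1; ssh as root works to both nodes;
# IDLE_THRESHOLD is arbitrary; DRY_RUN=1 only prints the commands.
NODES="collabn1 collabn2"
SCRIPTS="/u01/app/oraInventory/orainstRoot.sh /u01/grid/oracle/product/11.2.0/grid_1/root.sh"
IDLE_THRESHOLD=20

# Print the %idle ("id" column) of the last sample in vmstat output read from stdin.
last_idle() {
  awk '
    $1 == "r" && $2 == "b" { for (i = 1; i <= NF; i++) if ($i == "id") col = i; next }
    col && $1 ~ /^[0-9]+$/ { idle = $col }
    END { if (idle == "") exit 1; print idle }
  '
}

# Poll a node with "vmstat 3 2" (the last line is a fresh 3-second sample)
# until its %idle reaches the threshold.
wait_for_idle() {
  local node=$1 idle
  while :; do
    idle=$(ssh "root@$node" vmstat 3 2 | last_idle) || return 1
    echo "$node: ${idle}% idle"
    [ "$idle" -ge "$IDLE_THRESHOLD" ] && return 0
    sleep 10
  done
}

run_root_scripts() {
  local node script
  for node in $NODES; do
    for script in $SCRIPTS; do
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "ssh -t root@$node $script"
      else
        # -t gives root.sh a terminal so it can prompt for the local bin directory.
        ssh -t "root@$node" "$script" || return 1
      fi
    done
    # Let this node settle before moving on to the next one.
    [ "${DRY_RUN:-0}" = 1 ] || wait_for_idle "$node" || return 1
  done
}
```

Run DRY_RUN=1 run_root_scripts first to review the commands. Running the scripts by hand, as the lab does, is still the safer choice because you can watch every prompt and its output.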

[root@collabn1 ~]# vmstat 3 4

procs -----------memory---------- ---swap-- -----io---- --system-- -----cpu------

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 660156 11868 6472 263348 33 229 1505 2740 1194 2407 8 22 44 27 0

2 0 660156 11884 6472 263364 0 0 1 3 712 3635 2 4 90 4 0

1 0 660156 11884 6480 263368 0 0 0 34 701 3565 1 3 90 6 0

4 0 660156 11884 6480 263372 0 0 1 5 721 3642 2 8 88 2 0

[root@collabn1 ~]# ssh collabn2

root@collabn2's password: racattack

-bash: oraenv: No such file or directory

[root@collabn2 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read, write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@collabn2 ~]# /u01/grid/oracle/product/11.2.0/grid_1/root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/grid/oracle/product/11.2.0/grid_1

Enter the full pathname of the local bin directory: [/usr/local/bin]: /usr/bin

Copying dbhome to /usr/bin ...

Copying oraenv to /usr/bin ...

Copying coraenv to /usr/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2011-03-30 17:04:26: Parsing the host name

2011-03-30 17:04:26: Checking for super user privileges

2011-03-30 17:04:26: User has super user privileges

Using configuration parameter file: /u01/grid/oracle/product/11.2.0/grid_1/crs/install/crsconfig_params

Creating trace directory

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Adding daemon to inittab

CRS-4123: Oracle High Availability Services has been started.

ohasd is starting

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node collabn1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

CRS-2672: Attempting to start 'ora.mdnsd' on 'collabn2'

CRS-2676: Start of 'ora.mdnsd' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.gipcd' on 'collabn2'

CRS-2676: Start of 'ora.gipcd' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'collabn2'

CRS-2676: Start of 'ora.gpnpd' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'collabn2'

CRS-2676: Start of 'ora.cssdmonitor' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'collabn2'

CRS-2672: Attempting to start 'ora.diskmon' on 'collabn2'

CRS-2676: Start of 'ora.diskmon' on 'collabn2' succeeded

CRS-2676: Start of 'ora.cssd' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.ctssd' on 'collabn2'

CRS-2676: Start of 'ora.ctssd' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.drivers.acfs' on 'collabn2'

CRS-2676: Start of 'ora.drivers.acfs' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.asm' on 'collabn2'

CRS-2676: Start of 'ora.asm' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'collabn2'

CRS-2676: Start of 'ora.crsd' on 'collabn2' succeeded

CRS-2672: Attempting to start 'ora.evmd' on 'collabn2'

CRS-2676: Start of 'ora.evmd' on 'collabn2' succeeded

collabn2 2011/03/30 17:12:32 /u01/grid/oracle/product/11.2.0/grid_1/cdata/collabn2/backup_20110330_171232.olr

Preparing packages for installation...

cvuqdisk-1.0.7-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Updating inventory properties for clusterware

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 1205 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'UpdateNodeList' was successful.
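Once root.sh has finished on both nodes, you can confirm from the command line that the clusterware stack is up everywhere: crsctl check cluster -all reports the status of the CRS, CSS and EVM daemons on each node. The bash function below is a hypothetical helper, not part of the lab. It assumes the output groups status lines under a "<node>:" heading and that healthy lines end in "is online", so compare it against what your release actually prints.

```shell
#!/bin/bash
# Sketch: summarize "crsctl check cluster -all" output read from stdin.
# Assumptions: each node section starts with a "<node>:" line, status lines
# start with "CRS-nnnn:", and healthy status lines end in "is online".
summarize_cluster_check() {
  awk '
    /^[A-Za-z0-9._-]+:$/ { node = substr($0, 1, length($0) - 1); nodes++; next }
    /^CRS-[0-9]+:/ {
      if ($0 ~ /is online$/) ok[node]++
      else { bad[node]++; print "PROBLEM on " node ": " $0 }
    }
    END {
      for (n in ok) print n ": " ok[n] " service(s) online"
      if (nodes == 0) { print "no node sections found"; exit 1 }
      failed = 0
      for (n in bad) failed = 1
      exit failed
    }
  '
}
```

For example, as root on collabn1: /u01/grid/oracle/product/11.2.0/grid_1/bin/crsctl check cluster -all | summarize_cluster_check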

Increase CRS Fencing Timeout (ASM)

These steps are not required in either a test or a production environment. However, they might make your VMware test cluster a little more stable, and they provide a good learning opportunity about Grid Infrastructure.

- Grid Infrastructure must be running on only one node to change these settings. As the root user, shut down the clusterware on collabn2.

[oracle@collabn1 ~]$ ssh collabn2

Last login: Wed Mar 30 14:50:49 2011

Set environment by typing 'oenv' - default is instance RAC1.

collabn2:/home/oracle[RAC1]$ su -

Password: racattack

[root@collabn2 bin]# crsctl stop crs

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'collabn2'

CRS-2673: Attempting to stop 'ora.crsd' on 'collabn2'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'collabn2'

... ... ...

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'collabn2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

- Return to node collabn1. As the root user, increase misscount so that CRS waits 1.5 minutes (90 seconds) for a missed network heartbeat before it reboots a node. (VMware can drag a little on some laptops!)

[root@collabn1 ~]# crsctl get css misscount

30

[root@collabn1 ~]# crsctl set css misscount 90

Configuration parameter misscount is now set to 90.

- Increase disktimeout so that CRS waits 10 minutes (600 seconds) for voting disk I/O to complete before rebooting.

[root@collabn1 ~]# crsctl get css disktimeout

200

[root@collabn1 ~]# crsctl set css disktimeout 600

Configuration parameter disktimeout is now set to 600.

- Restart CRS on the other node.

[root@collabn1 bin]# ssh collabn2

[root@collabn2 bin]# crsctl start crs
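The get/set pairs above can be wrapped so that re-running them is harmless. This is a minimal bash sketch with a hypothetical function name, not part of the lab. It assumes crsctl from the grid home is on the PATH and that the current value is the last number crsctl get css prints (some releases may wrap the value in a CRS-nnnn message rather than printing it alone).

```shell
#!/bin/bash
# Sketch: set a CSS parameter only if it differs from the wanted value.
# Run as root on collabn1 while CRS is stopped on collabn2.
set_css_param() {
  local name=$1 wanted=$2 current
  # Take the last number printed by "crsctl get css <name>".
  current=$(crsctl get css "$name" | grep -Eo '[0-9]+' | tail -n 1)
  if [ -z "$current" ]; then
    echo "could not read $name" >&2
    return 1
  fi
  if [ "$current" = "$wanted" ]; then
    echo "$name is already $wanted"
    return 0
  fi
  echo "$name: $current -> $wanted"
  crsctl set css "$name" "$wanted"
}
```

For example: set_css_param misscount 90 && set_css_param disktimeout 600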

Setup ASM

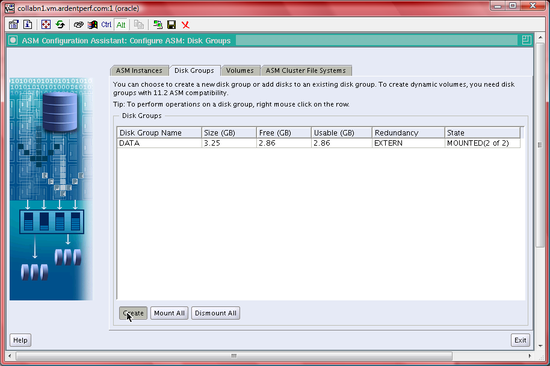

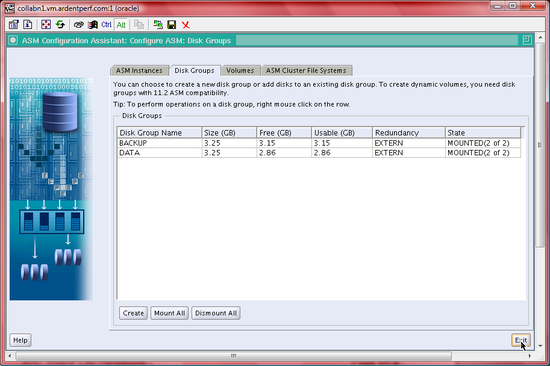

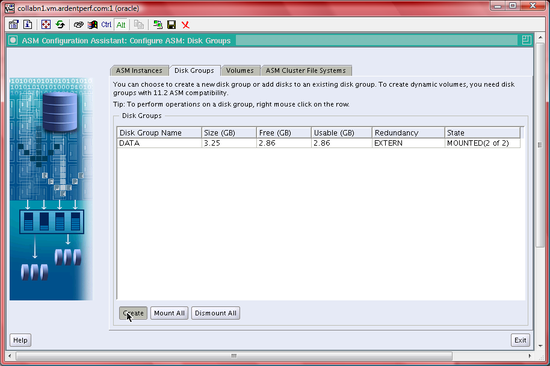

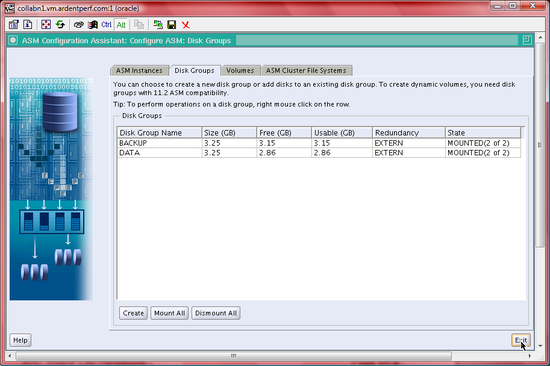

- As the oracle user, use the oenv macro (from Ardent Performance Computing) to set your environment for the SID +ASM1. Then type asmca to launch ASMCA.

collabn1:/home/oracle[RAC1]$ oenv

SIDs here are: grid +ASM1

ORACLE_SID = [RAC1] ? +ASM1

The Oracle base for ORACLE_HOME=/u01/grid/oracle/product/11.2.0/grid_1 is /u01/app/oracle

collabn1:/home/oracle[+ASM1]$ asmca

- Make sure you're on the Disk Groups tab, then right-click the DATA diskgroup and choose Edit Attributes.