Planet Earth/print version

Table of Contents

Front Matter

Section 1: EARTH’S SIZE, SHAPE, AND MOTION IN SPACE

- a. Science: How do we Know What We Know?

- b. Earth System Science: Gaia or Medea?

- c. Measuring the Size and Shape of Earth

- d. How to Navigate Across Earth using a Compass, Sextant, and Timepiece

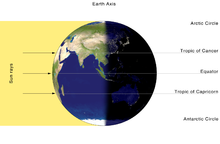

- e. Earth's Motion and Spin

- f. The Nature of Time: Solar, Lunar and Stellar Calendars

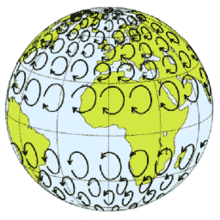

- g. Coriolis Effect: How Earth’s Spin Affects Motion Across its Surface

- h. Milankovitch cycles: Oscillations in Earth’s Spin and Rotation

- i. Time: The Invention of Seconds using Earth’s Motion

Section 2: EARTH’S ENERGY

- a. Energy and the Laws of Thermodynamics

- b. Solar Energy

- c. Electromagnetic Radiation and Black Body Radiators

- d. Daisy World and the Solar Energy Cycle

- e. Other Sources of Energy: Gravity, Tides, and the Geothermal Gradient

Section 3: EARTH’S MATTER

- a. Gas, Liquid, Solid (and other states of matter)

- b. Atoms: Electrons, Protons and Neutrons

- c. The Chart of the Nuclides

- d. Radiometric dating, using chemistry to tell time

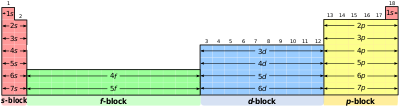

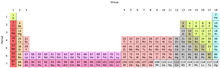

- e. The Periodic Table and Electron Orbitals

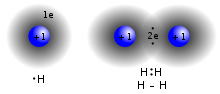

- f. Chemical Bonds (Ionic, Covalent, and other means to bring atoms together)

- g. Common Inorganic Chemical Molecules of Earth

- h. Mass spectrometers, X-Ray Diffraction, Chromatography and Other Methods to Determine Which Elements are in Things

Section 4: EARTH’S ATMOSPHERE

- a. The Air You Breathe

- b. Oxygen in the Atmosphere

- c. Carbon Dioxide in the Atmosphere

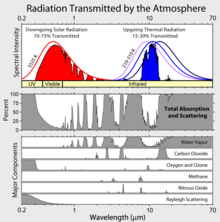

- d. Green House Gases

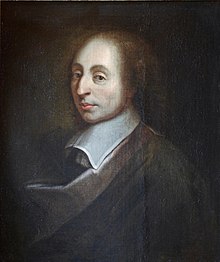

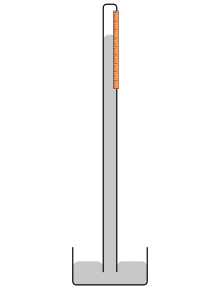

- e. Blaise Pascal and his Barometer

- f. Why are Mountain Tops Cold?

- g. What are Clouds?

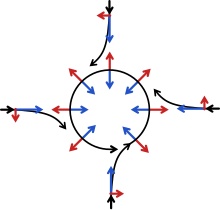

- h. What Makes Wind?

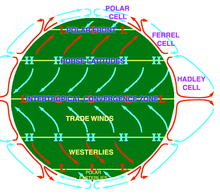

- i. Global Atmospheric Circulation

- j. Storm Tracking

- k. The Science of Weather Forecasting

- l. Earth’s Climate and How it Has Changed

Section 5: EARTH’S WATER

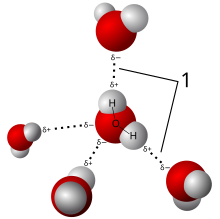

- a. H2O: A Miraculous Gas, Liquid and Solid on Earth

- b. Properties of Earth’s Water (Density, Salinity, Oxygen, and Carbonic Acid)

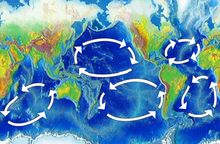

- c. Earth’s Oceans (Warehouses of Water)

- d. Surface Ocean Circulation

- e. Deep Ocean Circulation

- f. La Nina and El Nino, the sloshing of the Pacific Ocean

- g. Earth’s Rivers

- h. Earth’s Endangered Lakes and the Limits of Freshwater Sources

- i. Earth’s Ice: Glaciers, Ice Sheets, and Sea Ice

Section 6: EARTH’S SOLID INTERIOR

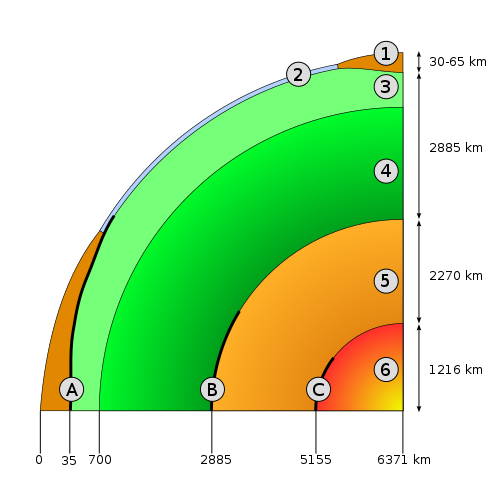

- a. Journey to the Center of the Earth: Earth’s Interior and Core

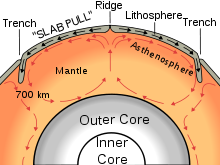

- b. Plate Tectonics: You are a Crazy Man, Alfred Wegener

- c. Earth’s Volcanoes: When Earth Goes Boom!

- d. You Can’t Fake an Earthquake: How to Read a Seismograph

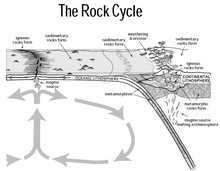

- e. The Rock Cycle and Rock Types (Igneous, Metamorphic and Sedimentary)

- f. Mineral Identification of Hand Samples

- g. Common Rock Identification

- h. Bowen’s Reaction Series

- i. Earth’s Surface Processes: Sedimentary Rocks and Depositional Environments

- j. Earth’s History Preserved in its Rocks: Stratigraphy and Geologic Time

Section 7: EARTH’S LIFE

- a. How Rare is Life in the Universe?

- b. What is Life?

- c. How did Life Originate?

- d. The Origin of Sex

- e. Darwin and the Struggle for Existence

- f. Heredity: Gregor Mendel’s Game of Cards

- g. Earth’s Biomes and Communities

- h. Soil: Living Dirt

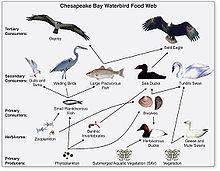

- i. Earth’s Ecology: Food Webs and Populations

Section 8: EARTH’S HUMANS AND FUTURE

- a. Ötzi’s World or What Sustainably Looks Like

- b. Rise of Human Consumerism and Population Growth

- c. Solutions for the Future

- d. How to Think Critically About Earth's Future

Section 1: EARTH’S SIZE, SHAPE, AND MOTION IN SPACE

1a. Science: How do we Know What We Know?

The Emergence of Scientific Thought

The term "science" comes from the Latin word for knowledge, scientia, although the modern definition of science appeared only during the last 200 years. Between 1347 and 1351, a deadly plague swept across the Eurasian continent, killing as much as 60% of the population by some estimates. The years that followed the great Black Death, as the plague came to be called, were a unique period of reconstruction that saw the emergence of the field of science for the first time. Science became the pursuit of knowledge and wisdom; it was synonymous with the more widely used term philosophy. It was born at a time when people realized the importance of practical reason and scholarship in curing diseases and ending famines, as well as the importance of rational and experimental thought. The plague resulted in a profound acknowledgement of the importance of knowledge and scholarship in holding a civilization together. An early scientist was indistinguishable from a scholar.

Two of the most well-known scholars of this time were Francesco “Petrarch” Petrarca and his good friend Giovanni Boccaccio; both were enthusiastic writers of Latin and early Italian, and they enjoyed a wide readership for their poetry, songs, travel writing, letters, and philosophy. Petrarch rediscovered the ancient writings of Greek and Roman figures and worked to popularize them in modern Latin, particularly the writings of the Roman statesman Cicero, who had lived more than a thousand years before him. This pursuit of knowledge was something new. Both Petrarch and Boccaccio proposed the kernel of a scientific ideal that has carried into the modern age: that the pursuit of knowledge and learning does not conflict with religious teachings, as the capacity for intellectual and creative freedom is in itself divine. Secular pursuit of knowledge based on truth complements religious doctrines, which are based on belief and faith. This idea manifested during the Age of Enlightenment and eventually in the American Revolution as an aspiration for a clear separation of church and state. This sense of freedom to pursue knowledge and art, unhindered by religious doctrine, led to the Italian Renaissance of the early 1400s.

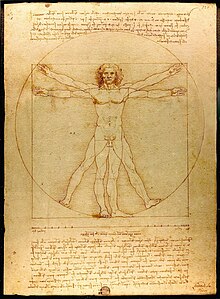

The Italian Renaissance was fueled as much by this new freedom to pursue knowledge as it was by the global economic shift that brought wealth and prosperity to northern Italy and later to northern Europe and England. This was a result of the fall of the Byzantine Empire and the rise of a new merchant class in the city-states of northern Italy, which took up the abandoned trade routes throughout the Mediterranean and beyond. The patronage of talented artists and scholars arose during this time as wealthy individuals financed not only artists but also the pursuit of science and technology. Universities, places of learning outside of monasteries and convents, came into fashion as wealthy leaders of the city-states of northern Italy sought talented artists and inventors to support within their own courts. Artists like Leonardo da Vinci, Raphael, and Michelangelo received commissions from wealthy patrons, including the church and city-states, to create realistic artworks from the keen observation of the natural world. Science grew out of art, as the direct observation of the natural world led to deeper insights into the creation of realistic paintings and sculptures. The importance of observation found in Renaissance art carried over into the importance of observation in modern science today. In other words, science should reflect reality through the ardent observation of the natural world.

The Birth of Science Communication

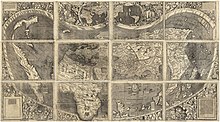

Science and the pursuit of knowledge during the Renaissance were enhanced to a greater extent by the invention of the printing press with movable type, allowing the widespread distribution of information in the form of printed books. While block and intaglio prints using ink on hand-carved wood or metal blocks predated this period, movable type allowed the written word to be printed and copied onto pages much more quickly. The Gutenberg Bible was printed around 1455 in an edition of roughly 180 copies, far faster than scribes could have copied them by hand. This cheap and efficient way to replicate the written word had a dramatic effect on society, as literacy among the population grew. It was much more affordable to own books and written works than at any previous time in history. With a little wealth, the common individual could pursue knowledge through the acquisition of books and literature. Of importance to science was the newfound ease with which one could disseminate information. The printing press led to the first information age and greatly influenced scientific thought during the middle Renaissance in the second half of the 1400s. Many of these early works were published in the mother tongue, the language spoken in the home, rather than the father tongue, the language of civic discourse found in the courts and the churches of the time, which was mostly Latin. These books spawned the early classic works of literature we have today in Italian, French, Spanish, English, and other languages spoken across Europe and the world.

One of the key figures of this time was Nicolaus Copernicus, who published his mathematical theory that the Earth orbited around the Sun in 1543. The printed book entitled De revolutionibus orbium coelestium (On the Revolutions of the Celestial Spheres), written in the scholarly father tongue of Latin, ushered in what historians call the Scientific Revolution. The book was influential because it was widely read by fellow astronomers across Europe. Each individual could verify the conclusions made by the book by carrying out the observations on their own. The Scientific Revolution was not so much what Nicolaus Copernicus discovered and reported (which will be discussed in depth later) but that the discovery and observations he made could be replicated by others interested in the same question. This single book led to one of the most important principles in modern science, that any idea or proposal must be verified through replication. What makes something scientific is that it can be replicated or verified by any individual interested in the topic of study. Science embodied at its core the reproducibility of observations made by individuals, and this principle ushered in the age of experimentation.

During this period, such verification of observations and experiments was a lengthy affair. Printing books was costly, and the distribution of that knowledge was very slow and often subject to censorship. This was also the time of the Reformation, first led by Martin Luther, who protested corruption within the Catholic Church, leading to the establishment of the Protestant Movement in the early 1500s. This schism of thought and belief, brought about primarily by the printing of new works of religious thought and discourse, gave rise to the Inquisition. The Inquisition was a reactionary system of courts established by the Catholic Church to convict individuals who showed signs of dissent from the established beliefs set forth by doctrine. Printed works that hinted at free thought and inquiry were destroyed and their authors imprisoned or executed. Science, which had flourished in the century before, suffered during the years of the Inquisition, but this period also brought about one of the most important episodes in the history of science, involving one of the most celebrated scientists of his day, Galileo Galilei.

The Difference between Legal and Scientific Systems of Inquiry

Galileo was a mathematician, physicist, and astronomer who taught at one of the oldest universities in Europe, the University of Padua. Galileo got into an argument with a fellow astronomer named Orazio Grassi, who taught at the Collegio Romano in Rome. Grassi had published a book in 1619 on the nature of three comets he had observed from Rome, entitled De tribus cometis anni MDCXVIII (On Three Comets in the Year 1618). The book angered Galileo, who argued that comets were an effect of the atmosphere and not real celestial bodies. Although Galileo had built an early telescope for observing the Moon, planets, and comets, he had not made observations of the three comets observed by Grassi. As a rebuttal, Galileo published his response in a book entitled The Assayer which, in a flourish, he dedicated to the Pope living in Rome. The dedication was meant as an appeal to authority, in which Galileo hoped that the Pope would take his side in the argument.

Galileo was following a legal protocol for science, where evidence is presented to a judge or jury, or the Pope in this case, who decides on a verdict based on the evidence presented. This appeal to authority was widely in use during the days of the Inquisition, and it is still practiced in law today. In The Assayer, Galileo presented the notion that mathematics is the language of science. In other words, the numbers don’t lie. Although Galileo was wrong about comets, the Pope sided with him, which emboldened Galileo to take on a topic he was interested in but that was considered highly controversial by the church: the idea, proposed by Copernicus, that Earth revolved around the Sun. Galileo wanted to prove it using his own mathematics.

Before his position at the university, Galileo had served as a math tutor for the son of Christine de Lorraine, the Grand Duchess of Tuscany. Christine was wealthy, highly educated, and more open to the idea of a heliocentric view of the Solar System. In a letter to her, Galileo proposed the rationale for undertaking a forbidden scientific inquiry, invoking the idea that science and religion were separate and that biblical writing was meant to be allegorical. Truth could be found in mathematics, even when it contradicted the religious teachings of the church.

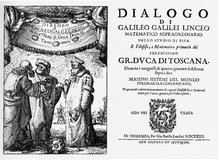

In 1632 Galileo published the book Dialogue Concerning the Two Chief World Systems in Italian and dedicated it to Christine’s grandson. The book was covertly written in an attempt to bypass the censors of the time, who could ban the work if they found that it was heretical to the teachings of the church. The book was written as a dialogue between three men (Simplicio, Salviati, and Sagredo), who over the course of four days debate and discuss the two world systems. Simplicio argues that the Sun revolves around the Earth, while Salviati argues that the Earth revolves around the Sun. The third man, Sagredo, is neutral, and he listens and responds to the two theories as an independent observer. While the book was initially allowed to be published, it raised alarm among members of the clergy, and charges of heresy were brought against Galileo after its publication. The book was banned, as were all the previous writings of Galileo. The Pope, who had previously supported Galileo, saw himself as the character Simplicio, the simpleton. Furthermore, Galileo’s letter to Christine was uncovered and brought forth during the trial. Galileo was found “vehemently suspect of heresy,” forced to recant, and placed under house arrest for the rest of his life. Galileo’s earlier appeal to authority appeared to be revoked as he faced these new charges. The result of Galileo’s ordeal was that fellow scientists felt that he had been wrongfully convicted and that authority, whether religious or governmental, was not the determiner of truth in scientific inquiry.

Galileo’s ordeal established the important principle that science is independent of authority in the determination of truth. Appealing to authority figures should not be a principle of scientific inquiry. Unlike the practice of law, science was not governed by judges or juries, who could be fallible and wrong, nor was it governed by popular public opinion or even voting.

This led to an existential crisis in scientific thought. How can one define truth, especially if you can’t appeal to authority figures in leadership positions to judge what is true?

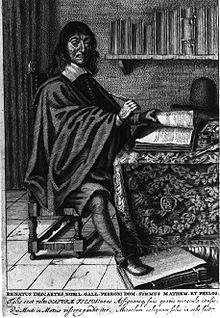

Scientific Deduction and How to Become a Scientific Expert

The first answer came from a contemporary of Galileo, René Descartes, a French philosopher who spent much of his life in the Dutch Republic. Descartes coined the motto cogito, ergo sum (I think, therefore I am), which is associated with his well-known preface entitled Discours de la Méthode (Discourse on the Method), first published in French in 1637 and later translated into Latin. The essay is a very personal exploration of how he himself determines what is true or not. René Descartes argued for the importance of two principles in seeking truth.

First was the idea that seeking truth requires much reading and taking and passing classes, but also exploring the world around you: traveling, learning new cultures, and meeting new people. He recommended joining the army and living not only in books and university classrooms but also in the real world, learning with everything that you do. Truth was based on common sense, but only after careful study and work. What Descartes advocated was that expertise in a subject came not only from learning and studying a subject over many years but also from practice in a real-world environment. A medical doctor who had never practiced nor read any books on the subject of medicine was a poorer doctor than one who had attended many years of classes, kept up to date on the newest discoveries in books and journals, and practiced for many years in a medical office. The expert doctor would be able to discern a medical condition much more readily than a novice. With expertise and learning, one could come closer to knowing the truth.

The second idea was that anyone could obtain this expertise if they worked hard enough. René Descartes states that he was an average student but that, through his experience and enthusiasm for learning, he was able over the years to become expert enough to discern truth from fiction; hence he could claim, I think, therefore I am.

What René Descartes advocated was that if you have to appeal to authority, seek experts within the field of study of your inquiry. These two principles of science are a reminder that in today’s age of mass communication for everyone (Twitter, Facebook, Instagram), novices unknowingly spread much falsehood. To combat these falsehoods, one must be educated and well informed through an exploration of written knowledge, educational institutions, and life experiences in the real world. If you lack these, then seek experts.

René Descartes’ philosophy had a profound effect on science, although even he would attribute this idea to le bon sens (common sense).

Descartes’ philosophy went further to answer the question: what if the experts are wrong? If two equally experienced experts disagree, how do we know who is right if there is no authority we can call upon to decide? How can one uncover truth through one’s own inquiry? Descartes’ answer was to use deduction. Deduction is where you form an idea and then test that idea with observation and experimentation. An idea is true until it is proven false.

The Idols of the Mind and Scientific Eliminative Induction

This idea was flipped on its head by a man so brilliant that rumors exist that he wrote William Shakespeare’s plays in his free time. Although no evidence exists to prove these rumors true, they illustrate how highly regarded he was, and still is today. The man’s name was Francis Bacon, and he advanced the method of scientific inquiry that today we call the Baconian approach.

Francis Bacon studied at Trinity College in Cambridge, England, and rose through the ranks to become Queen Elizabeth’s legal advisor, thus becoming the first Queen’s Counsel. This position led Francis Bacon to hear many court cases and take a very active role in interpreting the law on behalf of the Queen’s rule. Hence, he had to devise a way to determine truth on a nearly daily basis. In 1620 he published his most influential work, Novum Organum (New Instrument). It was a powerful book.

Francis Bacon contrasted his new method of science with that advocated by René Descartes by stating that even experts could be wrong and that most ideas were false rather than true. According to Bacon, falsehood among experts comes from four major sources, or in his words, idols of the mind.

First was the personal desire to be right, the common notion that you consider yourself smarter than anyone else; this he called idola tribus. It extended to the impression you might have that you are on the right track or have had some brilliant insight even if you are incorrect in your conclusion. People cling to their own ideas and value them over others, even if they are false. This could also come from a false idea that your mother, father, or grandparent told you was true, and that you hold onto more than others because it came from someone you respect.

The second source of falsehood among experts comes from idola specus. Bacon used the metaphor of a cave where you store all that you have learned, but we can use a more modern metaphor: watching YouTube videos or following groups on social media. If you consume only videos or follow writers with a certain worldview, you will become an expert on something that could be false. If you read only books claiming that the world is flat, then you will come to the false conclusion that the world is flat. Bacon realized that as you consume information about the world around you, you are susceptible to false belief due to the random nature of what you learn and where you learn those things from.

The third source of falsehood among experts comes from what he called idola fori. Bacon said that falsehood resulted from the misunderstanding of language and terms. He said that science, if it seeks truth, should clearly define the words that it uses; otherwise even experts will come to false conclusions through their misunderstandings of a topic. Science must be careful to avoid ill-defined jargon, and it should define all terms it uses clearly and explicitly. Words can lie and, when used well, can cloak falsehood as truth.

The final source of falsehood among experts results from the spectacle of idola theatri. Even if an idea makes a great story, it may not be true. Falsehood comes within the spectacle of trending ideas or widely held public opinions, which of course come and go based on fashion or popularity. Just because something is widely viewed or, in the modern sense, has gone viral on the Internet does not mean that it is true. Science and truth are not popularity contests, nor do they depend on how many people come to see something in theaters, how fancy the computer graphics are in the science documentary you watched last night, or how persuasively the TED Talk was delivered. Science and truth should be unswayed by public perception and spectacle. Journalism is often engulfed within the spectacle of idola theatri, reporting stories that invoke fear and anxiety to increase viewership and outrage, and often those stories are untrue.

These four idols of the mind led Bacon to the conclusion that knowing the truth was an impossibility, that in science we can get closer to the truth, but we can never truly know what we know. We all fail at achieving truth. Bacon warned that truth was an artificial construct formed by the limitations of our perceptions and that it is easily cloaked or hidden in falsehood, principally by the Idols of the Mind.

So if we can’t know absolute truth, how can we get closer to the truth? Bacon proposed something philosophers call eliminative induction. Start with observations and experiments, use that knowledge to look for patterns, and eliminate ideas that are not supported by those observations. This style of science, which starts with observations and experiments, resulted in a profound shift in scientific thinking.

Bacon viewed science as focused on the exploration and documentation of all natural phenomena: the detailed cataloguing of all things observable and all experiments undertaken, and the systematic analysis of multitudes of observations and experiments for threads of knowledge that lead to the truth. While previous scientists proposed theories and then sought out confirmation of those theories, Bacon proposed first making observations and then drawing theories which best fit the observations that had been made.
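The elimination step described above can be sketched in a few lines of Python. This is only a toy illustration, not Bacon’s own procedure: the observations (approximate densities of a few materials and whether each floats on water), the candidate ideas, and the function name `eliminate` are all assumptions chosen for the example.

```python
from typing import Callable, Dict, List, Tuple

# Illustrative observations (approximate values chosen for this example):
# (material, density in g/cm^3, does it float on water?)
Observation = Tuple[str, float, bool]

observations: List[Observation] = [
    ("cork", 0.24, True),
    ("oak wood", 0.75, True),
    ("ice", 0.92, True),
    ("granite", 2.7, False),
    ("iron", 7.87, False),
]

# Candidate ideas: each one predicts "floats?" from the density alone.
hypotheses: Dict[str, Callable[[float], bool]] = {
    "everything floats": lambda density: True,
    "nothing floats": lambda density: False,
    "only very light things (under 0.5) float": lambda density: density < 0.5,
    "less dense than water floats": lambda density: density < 1.0,
}


def eliminate(
    ideas: Dict[str, Callable[[float], bool]],
    records: List[Observation],
) -> Dict[str, Callable[[float], bool]]:
    """Keep only the ideas that no observation contradicts."""
    surviving = {}
    for name, predict in ideas.items():
        contradicted = any(
            predict(density) != floats for _, density, floats in records
        )
        if not contradicted:
            surviving[name] = predict
    return surviving


surviving = eliminate(hypotheses, observations)
for name in surviving:
    print("not yet eliminated:", name)
```

Note that the surviving idea is not proven true; it has simply not been eliminated by the observations collected so far, and a new observation could still rule it out.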

Francis Bacon realized that this method was powerful, and he proposed the idea that “with great knowledge comes great power.” He knew how North and South American empires, such as the Aztecs, had been crushed by the Spanish during the 1500s and how knowledge of ships, gunpowder, cannons, metallurgy, and warfare had resulted in the fall and collapse of whole civilizations in the Americas. The Dutch utilized the technology of muskets against North American tribes, focusing on the assassination of their leaders. They also undertook the wholesale manufacturing of wampum beads, which destroyed North American currencies and the native economies. Science was power because it provided technology that could be used to destroy nations and conquer people.

He foresaw the importance of exploration and scientific discovery if a nation was to remain important in a modern world. After Queen Elizabeth’s death in 1603, Francis Bacon encouraged her successor, King James, to colonize the Americas, envisioning the ideal of a utopian society in a new world. He called this utopian society Bensalem in his unfinished science fiction book New Atlantis. This society would be devoted to pure scientific inquiry, where researchers could experiment and document their observations in fine detail, and from those observations large-scale patterns and theories could emerge that would lead to new technologies.

Francis Bacon’s utopian ideals took hold within his native England, especially within the Parliament of England under the House of Lords, which viewed the authority of the King with less respect than at any time in its history. The English Civil War and the execution of King Charles I in 1649 tossed England into chaos, and many people fled to the American Colonies in Virginia during the rise of Oliver Cromwell’s dictatorship.

But with the reestablishment of a monarchy in 1660, the ideas laid out by Francis Bacon came to fruition with the founding of the Royal Society of London for Improving Natural Knowledge, or simply the Royal Society. It was the first truly modern scientific society and it still exists today.

Scientific Societies

A scientific society is dedicated to research and the sharing of discovery among its members. Such societies are considered an “invisible college,” since they are where experts in the fields of science come to learn from each other, demonstrate new discoveries, and publish the results of experiments that they have conducted. As one of the first scientific societies, the Royal Society in England welcomed not only experiments of grand importance but also insignificant, small-scale observations at its meetings. The Royal Society received support from its members as well as from the monarch, Charles II, who viewed the society as a useful source of new technologies, where new ideas would have important applications in both state warfare and commerce. Its members have included some of England’s most famous scientists, including Isaac Newton, Robert Hooke, Charles Babbage, and even the American colonist Benjamin Franklin. Membership was exclusive to upper-class men with English citizenship who could finance their own research and experimentation.

Most scientific societies today are open to membership of all citizens and genders, and they have had a profound influence on the sharing of scientific discoveries and knowledge among their members and the public. In the United States of America, the American Geophysical Union and the Geological Society of America rank as the largest scientific societies dedicated to the study of Earth science, but hundreds of other scientific societies exist in the fields of chemistry, physics, biology, and geology. Often these societies hold meetings, where members share new discoveries by giving presentations, and societies have their own journals, which publish research and are distributed to libraries and fellow members of the society. These journals are often published as proceedings, which can be read by those who cannot attend meetings in person.

The rise of scientific societies allowed the direct sharing of information and a powerful sense of community among the elite experts in various fields of study. It also put into place an important aspect of science today, the idea of peer review. Before the advent of scientific societies, all sorts of theories and ideas were published in books, and most of these ideas were fictitious, to the point that even courts of law favored verbal rather than written testimonies because they felt that the written word was much further from the truth than the spoken word. Today we face a similar multitude of false ideas and opinions expressed on the Internet. It is easy for anyone to post a web page or express a thought on a subject; you just need a computer and an internet connection.

Peer Review

To combat widely spreading fictitious knowledge, the publications of the scientific societies underwent a review system among their members. Before an idea or observation was placed into print in a society’s proceedings, it had to be approved by a committee of fellow members, typically three to five, who agreed that it had merit. This became what we call peer review. A paper or publication that underwent this process was given the stamp of approval of the top experts within that field. Many manuscripts submitted for peer review are never published, as one or more of the expert reviewers may find them lacking evidence and reject them. However, readers found peer-reviewed articles to be of much better quality than other printed works, and they realized that these works carried more authority than written works that did not go through the process.

Today peer-reviewed articles are an extremely important aspect of scholarly publication, and you can search exclusively among peer-reviewed articles by using many of the popular bibliographical databases or indexes, such as Google Scholar (scholar.google.com); GeoRef, published by the American Geosciences Institute and available through library subscription; and Web of Science, now published by Clarivate (formerly by Thomson Reuters) and also available only through library subscription. If you are not a member of a scientific society, individual articles are available for purchase online, and many are now accessible to nonmembers for free, depending on the scientific society and the publisher of its proceedings. Most major universities and colleges subscribe to these scholarly journals, although access may require a physical visit to a library.

While peer-reviewed publications carry more weight among experts than news articles and magazines published by the public press, they can be subject to abuse. Ideas that are revolutionary and push science and discovery beyond what current peers believe to be true are often rejected for publication because they may prove those peers wrong. Furthermore, ideas that conform to the peer reviewers’ current understanding are often approved for publication. As a consequence, peer review favors more conservatively held ideas. Peer review can be stacked in an author’s favor when their close friends are the reviewers, while a newcomer to a scientific society might have much more trouble getting new ideas published and accepted. The process can be long, with some articles taking several years to be reviewed, accepted, and published. Feuds can arise between members of a scientific society over controversial subjects or ideas. Max Planck, a well-known German physicist, lamented that “a new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die and a new generation grows up that is familiar with it.” In other words, science progresses one funeral at a time.

Another limitation of peer review is that the articles are often not read outside of the membership of the society. Most local public libraries do not subscribe to these specialized academic journals. Access to these scholarly articles is limited to students at large universities and colleges and to members of the scientific society. In the early centuries of their existence, scientific societies were seen as the exclusive realm of privileged, wealthy, high-ranking men, and the knowledge contained in these articles was locked away from the general public. Public opinion, especially in the late 1600s and early 1700s, viewed scientific societies as secretive and often associated them with alchemy, magic, and sorcery, because the public had little engagement with the experiments and observations made by their members.

The level of secrecy rapidly changed during the Age of Enlightenment in the late 1700s and early 1800s with the rise of widely read newspapers which reported to the public on scientific discoveries. The American, French, and Haitian Revolutions likely were brought about as much by a desire for freedom of thought and press as they were fueled by the opening of scientific knowledge and inquiry into the daily lives of the public. Most of the founders of the United States of America, particularly Thomas Jefferson, pursued scientific inquiry as an avocation or a profession and were directly influenced by the scientific philosophy of Francis Bacon.

Major Paradigm Shifts in Science

In 1788 the Linnean Society of London was formed, which became the first major society dedicated to the study of biology and life in all its forms. This society gets its name from the Swedish biologist Carl Linnaeus, who laid out an ambitious goal to name all species of life in a series of updated books first published in 1735. The Linnean Society was for members interested in discovering new forms of life around the world. The great explorations of the world during the Age of Enlightenment resulted in the rising status of the society, as new reports of strange animals and plants were studied and documented.

The great natural history museums were born during this time of discovery to hold physical samples of these forms of life for comparative study. The Muséum national d’Histoire naturelle in Paris was founded in 1793 following the French Revolution. It was the first natural history museum established to store the vast variety of life forms from the planet, and it housed scientists who specialized in the study of life. Similar natural history museums in Britain and America struggled to find financial backing until the mid-1800s, with the establishment of a permanent British Museum of Natural History (now known as the Natural History Museum of London) in the 1830s, as well as the American Museum of Natural History and the Smithsonian Institution following the American Civil War in the 1870s.

The vast search for new forms of life resulted in the discovery by Charles Darwin and Alfred Russel Wallace that, through a process of natural selection, life forms can evolve into new species. Charles Darwin published his famous book, On the Origin of Species by Means of Natural Selection, in 1859, and as with Copernicus before him, science was forever changed. Debate over the acceptance of this new paradigm resulted in a schism among scientists of the time, and Darwin’s supporters formed a new informal society dubbed the X Club, led by Thomas Huxley, who became known as Darwin’s Bulldog. Articles which supported Darwin’s theory were systematically rejected in the established scientific journals of the time, and the members of the X Club established the journal Nature, which is today considered one of the most prestigious scientific journals. New major scientific paradigm shifts often result in new scientific societies.

The Industrialization of Science

Public fascination with natural history and the study of Earth grew greatly during the late 1700s and early 1800s, with the first geological mapping of the countryside and the naming of layers of rock. The first suggestions of an ancient age and long history for the Earth were proposed with the discovery of dinosaurs and other extinct creatures by the mid-1800s.

The study of Earth led to the discovery of natural resources such as coal, petroleum, and valuable minerals, and advances in the use of fertilizers and agriculture, which led to the Industrial Revolution.

All of this was due to the eliminative induction advocated by Francis Bacon, but it was beginning to reach its limits. Charles Darwin wrote of the importance of his pure love of natural science based solely on observation and the collection of facts, coupled with a strong desire to understand or explain whatever is observed. He also had a willingness to give up any hypothesis, no matter how beloved it was to him. Darwin distrusted deductive reasoning, where an idea is examined by looking for its confirmation, and he strongly recommended that science remain based on blind observation of the natural world, but he realized that observation without a hypothesis, without a question, was foolish. For example, it would be foolish to measure the orientation of every blade of grass in a meadow just for the sake of observation. The act of making observations assumed that there was a mystery to be solved, but its solution should remain unverified until all possible observations are made.

Darwin was also opposed to vivisection, the cruel practice of making observations through experiments and dissections on live animals or people that would lead to suffering, pain, or death. There was a dark side to Francis Bacon’s unbridled observation when it came to experimenting on living people and animals without ethical oversight. Mary Shelley’s novel Frankenstein, published in 1818, was the first example of a common literary trope: the mad scientist and the unethical pursuit of knowledge through vivisection and the general cruelty of experimentation on people and animals. Yet these experiments advanced knowledge, particularly in medicine, and they remain an ethical issue that science grapples with even today.

Following the American Civil War and into World War I, governments became more involved in the pursuit of science than they had been at any prior time, with the founding of federal agencies for the study of science, including agencies for maintaining the safety of industrially produced food and medicine. The industrialization of the world left citizens dependent on the government for oversight of the safety of food that was purchased for the home rather than grown at home. New medicines, which could be addictive or poisonous, were tested by governmental scientists before they could be sold. Governments mapped their borders in greater detail with government-funded surveys and charted trade waters for the safe passage of ships. Science was integrated into warfare and the development of airplanes, tanks, and guns. Science was assimilated within the government, which funded its pursuits, as science became instrumental to the political ambitions of nations.

However, freedom of inquiry and the pursuit of science through observation were restricted with the rise of authoritarianism and national identity. Fascism arose in the 1930s through the dissemination of falsehoods which stoked hatred and fear among the populations of Europe and elsewhere. The rise of propaganda using the new media of radio and later television nearly destroyed the world of the 1940s, and the scientific pursuit of pure observation was not enough to question political propaganda.

The Modern Scientific Method

During the 1930s, Karl Popper, who watched the rise of Nazi fascism in his native Austria, set about codifying a new philosophy of science. He was particularly impressed by a famous experiment conducted on Albert Einstein’s theory of general relativity. In 1915 Albert Einstein proposed, using predictions on the orbits of planets in the Solar System, that large masses aren’t just attracted to each other but that matter and energy curve the very fabric of space. To test the idea of curved space, scientists planned to study the position of the stars in the sky during a solar eclipse. If Einstein’s theory was correct, the stars’ light would bend around the Sun, resulting in an apparent new position of the stars around the Sun, and if he was incorrect, the stars would remain in the same position. In 1919 Arthur Eddington led an expedition to the island of Príncipe, off the west coast of Africa, while a second team traveled to Sobral, Brazil, to observe a total solar eclipse. Using telescopes, they confirmed that the stars’ apparent positions did change during the solar eclipse, as general relativity predicted. Einstein was right! The experiment was in all the newspapers, and Albert Einstein went from an obscure physicist to someone synonymous with genius.

Influenced by this famous experiment, Karl Popper dedicated the rest of his life to the study of scientific methods as a philosopher. Popper codified what made Einstein’s theory and Eddington’s experiment “scientific”: the experiment carried the risk of proving Einstein’s idea wrong. Popper wrote that in general what makes something scientific is the ability to falsify an idea through experimentation. Science is not just the collection of observations, because if you view observations through the lens of a proposed idea you are likely to see confirmation and verification everywhere. Popper wrote “the criterion of the scientific status of a theory is its falsifiability, or refutability, or testability” in his 1963 book Conjectures and Refutations. And as Darwin wrote, a scientist must give up their theory if it is falsified through observations, and if a scientist tries to save it with ad hoc exceptions, it destroys the scientific merit of the theory.

Popper developed the modern scientific method that you find in most school textbooks: a formulaic recipe where you come up with a testable hypothesis, you carry out an experiment which either confirms the hypothesis or refutes it, and then you report your results. Scientific writing shifted during this time to a very structured format: introduce your hypothesis, describe your experimental methods, report your results, and discuss your conclusions. Popper also developed a hierarchy of scientific ideas, with the lowest being hypotheses, which are unverified testable ideas, above which sit theories, which are verified through many experiments, and finally principles, which have been verified to such an extent that no exception has ever been observed. This does not mean that principles are truth, but they are supported by all observations and have survived all attempts at falsification.

Popper drew a line in the sand to distinguish what he called science from pseudoscience. Science is falsifiable whereas pseudoscience is unfalsifiable. Once a hypothesis is proven false, it should be rejected, but this does not mean that the search for new evidence should be abandoned.

For example, a hypothesis might be “Bigfoot exists in the mountains of Utah.” The test might be “Has anyone ever captured a Bigfoot?” If the result is “No,” the conclusion is “Bigfoot does not exist.” This does not mean that we stop looking for Bigfoot, only that the hypothesis is unlikely to be supported. If someone continues to defend the idea that Bigfoot exists in the mountains of Utah despite the lack of evidence, the idea moves into the realm of pseudoscience, whereas “Bigfoot does not exist” moves into the realm of science. The statement “Bigfoot does not exist” carries the greater risk, because someone could find a Bigfoot and prove it wrong. But if you cling to the idea that Bigfoot exists without evidence, then it is not science but pseudoscience, because it is now unfalsifiable.

How Governments Can Awaken Scientific Discovery

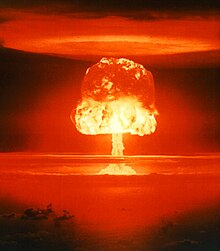

On August 6 and 9, 1945, the United States dropped atomic bombs on the cities of Hiroshima and Nagasaki in Japan, ending World War II. It sent a strong message that scientific progress was powerful. In July 1945, weeks before the dramatic end of the war, Vannevar Bush wrote to President Harry Truman that “scientific progress is one essential key to our security as a nation, to our better health, to more jobs, to a higher standard of living, and to our cultural progress.”

What Bush proposed was that funds should be set aside for pure scientific pursuit which would cultivate scientific research within the United States of America, and he drafted his famous report called Science, the Endless Frontier. From the recommendations in the report, five years later in 1950, the United States government created the National Science Foundation for the promotion of science. Unlike agency or military scientists, who were full-time employees, scientists supported by the National Science Foundation received grants for the pursuit of scientific questions. The grants allowed citizens to pursue scientific experiments, travel to collect observations, and carry out scientific investigations of their own.

The hope was that these grants would cultivate scientists, especially in academia, and that they could be called upon during times of crisis. Funding was determined by the scientific process of peer review rather than the legal process of appeal to authority. However, the National Science Foundation has struggled since its inception, as it has been railed against by politicians with a legal persuasion, who argue that only Congress or the President should decide which scientific questions are deserving of funding. Most government science funding still supports military applications and is allocated directly by politicians rather than by panels of independent scientists, as the budgets of most governments show.

How to Think Critically in a Media Saturated World

During the postwar years and up to the present time, false ideas have been perpetuated not only by those in authority but also by the meteoric rise of advertising, which is propaganda designed to sell things.

With the mass media of the late 1900s, and even today, the methods of scientific inquiry became more important in combating falsehood, not only among those who practiced science but also among the general public. Following modern scientific methods, skepticism became a vital tool not only in science but also in critical thinking and the general pursuit of knowledge. Skepticism assumes that everyone is lying to you, and people are especially prone to lie to you when selling you something. The common phrase “there’s a sucker born every minute” exalted the pursuit of tricking people for profit, and to protect yourself from scams and falsehood, you need to become skeptical.

To codify this in a modern scientific framework, Carl Sagan developed his “baloney detection kit”, outlined in his book The Demon-Haunted World: Science as a Candle in the Dark. A popular professor at Cornell University in New York, Sagan, best known for his television show Cosmos, had been diagnosed with cancer when he set out to write one of his last books. Sagan worried that, like a lit candle in the dark, science could be extinguished if not put into practice.

He was aghast to learn that the general public believed in witchcraft, magic stones, ghosts, astrology, crystal healing, holistic medicine, UFOs, Bigfoot and the Yeti, and sacred geometry, and that many opposed vaccination and inoculation against preventable diseases. He feared that with a breath of wind, scientific thought would be extinguished by the widespread belief in superstition. To prevent that, before his death in 1996 he left us with this “baloney detection kit”, a method of skeptical thinking to help evaluate ideas:

- Wherever possible, there must be independent confirmation of the “facts.”

- Encourage substantive debate on the evidence by knowledgeable proponents of all points of view.

- Arguments from authority carry little weight—“authorities” have made mistakes in the past. They will do so again in the future. Perhaps a better way to say it is that in science, there are no authorities; at most, there are experts.

- Spin more than one hypothesis. If there’s something to be explained, think of all the different ways in which it could be explained. Then think of tests by which you might systematically disprove each of the alternatives.

- Try not to get overly attached to a hypothesis just because it’s yours. It’s only a way station in the pursuit of knowledge. Ask yourself why you like the idea. Compare it fairly with the alternatives. See if you can find reasons for rejecting it. If you don’t, others will.

- Quantify. If whatever it is you are explaining has some measure, some numerical quantity attached to it, you’ll be much better able to discriminate among competing hypotheses. What is vague and qualitative is open to many explanations. Of course, there are truths to be sought in the many qualitative issues we are obliged to confront, but finding them is more challenging.

- If there’s a chain of argument, every link in the chain must work (including the premise) — not just most of them.

- Occam’s razor. This convenient rule-of-thumb urges us when faced with two hypotheses that explain the data equally well to choose the simpler.

- Always ask whether the hypothesis can be, at least in principle, falsified. Propositions that are untestable, unfalsifiable, are not worth much. You must be able to check assertions out. Inveterate skeptics must be given the chance to follow your reasoning, to duplicate your experiments, and see if they get the same result.

The baloney detection kit is a casual way to evaluate ideas through a skeptical lens. This kit borrows heavily from the scientific method but has enjoyed wider adoption outside of science as a method of critical thinking.

Carl Sagan never witnessed the incredible growth of mass communication through the development of the Internet at the turn of the twenty-first century. The ability to share information globally and almost instantaneously has become a powerful tool, both for the rise of science and for the spread of propaganda.

Accessing Scientific Information

The newest scientific revolution of the early 2000s concerns access to scientific information and the breaking of barriers to free inquiry. In the years leading up to the Internet, scientific societies relied on traditional publishers to print journal articles. Members of the societies would author new works as well as review other submissions for free and on a voluntary basis. The society or publisher would own the copyright to the scientific article, which was sold to libraries and institutions for a profit. Members would receive a copy as part of their membership fees. However, low readership and high printing costs made library subscriptions to these specialized publications expensive.

With the advent of the Internet in the 1990s, traditional publishers began scanning and archiving their vast libraries of copyrighted content onto the Internet, allowing access through paywalls. University libraries with an institutional subscription would allow students to connect through the library to access articles, while the archived articles remained locked behind paywalls for the general public.

Academic scientists were locked into the system because tenure and advancement within universities and colleges were dependent on their publication record. Traditional publications carried higher prestige, despite having low readership.

Publishers exerted a huge amount of control over who had access to scientific peer-reviewed articles, and students and aspiring scientists at universities were often locked out of these sources of information. There was a need to revise the traditional peer-review model.

Open Access and Science

One of the most important originators of a new model for the distribution of scientific knowledge was Aaron Swartz. In 2008 Swartz published a famous essay entitled Guerilla Open Access Manifesto, and he led a life as an activist fighting for free access to scientific information online. Swartz was fascinated with online collaborative publications, such as Wikipedia. Wikipedia is assembled from articles contributed by volunteers. This information is verified and modified by large groups of users on the platform who keep the website up to date. Wikipedia grew out of a large user community, much like scientific societies, but with an easy entry to contribute new information and edit pages. Wikipedia quickly became one of the most visited sites on the Internet for retrieving factual information. Swartz advocated for open access, arguing that all scientific knowledge should be accessible to anyone. He petitioned for Creative Commons licensing and strongly encouraged scientists to publish their knowledge online without copyrights or restrictions on sharing that information.

Open access had its adversaries in the form of law enforcement, politicians and governments with nationalist or protectionist tendencies, and private companies with large revenue streams from intellectual property. These adversaries of open access argued that scientific information could be used to make illicit drugs, build new types of weapons, hack computer networks, encrypt communications, and share state secrets and private intellectual property. But it was private companies with large holdings of intellectual property who worried the most about the open access movement, lobbying politicians to enact stronger laws to prohibit the sharing of copyrighted information online.

In 2010 Aaron Swartz used a computer located at MIT’s Open Campus to download scientific articles from the digital library JSTOR, using an MIT computer account. After JSTOR noticed a large surge of online requests from MIT, it contacted campus police. The campus police arrested Swartz, and he was charged with crimes carrying up to 35 years in prison and $1,000,000 in fines. Faced with the criminal charges, Swartz died by suicide in 2013.

The repercussions of Aaron Swartz’s ordeal pushed scientists to find alternative ways to distribute scientific information to the public, rather than relying on corporate for-profit publishers. The open access movement was ignited and radicalized, but details even today are still being worked out among various groups of scientists.

The Ten Principal Sources of Scientific Information

There are ten principal sources of scientific information you will encounter, and each one should be viewed with skepticism. These sources of information can be ranked on a scale of the reliability of the information they present. The original source of a claim can be difficult to determine, but by organizing the sources into these ten categories, you can distinguish the relative level of truthfulness of the information presented. All of them report some level of falsehood; however, the higher the ranking, the more likely the material contains fewer falsehoods and approaches more truthful statements.

- 1. Advertisements and Sponsored Content

- Any content that is intended to sell you something and made with the intention of making money. Examples include commercials on radio, television, printed pamphlets, paid posts on Facebook and Twitter, sponsored YouTube videos, web page advertisements, and spam email and phone calls. These sources are the least reliable sources of truthfulness.

- 2. Personal Blogs or Websites

- Any content written by a single person without any editorial control or any verification by another person. These include personal websites, YouTube videos, blog posts, Facebook, Twitter, Reddit posts and other online forums, and opinion pieces written by single individuals. These sources are not very good sources of truthfulness, but they can be insightful in specific instances.

- 3. News Sources

- Any content produced by a journalist with the intention of maintaining interest and viewership with an audience. Journalism is the production and distribution of reports on recent events, and while these reports are subjected to a higher standard of fact checking (by an editor or producer), they are limited by the need to maintain interest with an audience who will tune in or read the content. News stories tend to be shocking, scandalous, feature famous individuals, and address trending or controversial ideas. They are written by nonexperts who rely on the opinions of the experts whom they interview. Many news sources are politically orientated in what they report. Examples of news sources are cable news channels, local and national newspapers, online news websites, aggregated news feeds, and news reported on the radio or broadcast television. These tend to be truthful but often with strong biases on the subjects covered, factual mistakes and errors, and a fair amount of sensationalism.

- 4. Trade Magazines or Media

- Any content produced on a specialized topic by freelance writers who are familiar with the topic which they are writing about. Examples include magazines which cover a specialized topic, podcasts hosted by experts in the field, and edited volumes with chapters contributed by experts. These tend to have a higher level of truthfulness because the writing staff who create the content are more familiar with the specialized topics covered, with some editorial control over the content.

- 5. Books

- Books are lengthy written treatments of a topic, which require the writer to become familiar with a specific subject of interest. Books are incredible sources of information and are insightful to readers wishing to learn more about a topic. They also convey information that can be inspirational. Books are the result of long-term dedication on behalf of an author or team of authors, who are experts on the topic or become experts through the research that goes into writing a book. Books encourage further learning of a subject and have a greater depth of content than other sources of information. Books receive editorial oversight when they are published traditionally. One should be aware that authors can write with a specific agenda or point of view, which may express falsehoods.

- 6. Collaborative Publications or Encyclopedias

- These are sources of scientific information produced by teams of experts with the specific intention of presenting a consensus on a topic. Since the material presented must be subjected to debate, they tend to carry more authority, as they must satisfy skepticism from multiple contributors on the topic. Examples include Wikipedia, governmental agency reports, and reports by the National Academy of Sciences and the United Nations.

- 7. Preprints, Press Releases, and Meeting Abstracts

- Preprints

- Preprints are manuscripts submitted for peer review but made available online to solicit additional comments and suggestions from fellow scientists. In the study of the Earth, the most common preprint service is eartharxiv.org. Preprints are often picked up by journalists and reported as news stories. Preprints are a way for scientists to convey information to the public more quickly than going through full peer review, and they help the authors establish priority for a scientific discovery. They are a fairly recent phenomenon in science, first developed in 1991 with arxiv.org (pronounced archive), a moderated web service which hosts papers in the fields of science.

- Press Releases

- Press releases are written by staff writers at universities, colleges, and government agencies when an important research study is going to be published in a peer-reviewed paper. Journalists will often write a story based on the press release, because press releases are written for a general audience and avoid scientific words and technical details. Most press releases will link to the scientific peer-reviewed paper that has been published, so you should also read the referenced paper.

- Meeting Abstracts

- Meeting abstracts are short summaries of research that are presented at scientific conferences or meetings. These are often reported on by journalists who attend the meetings. Some meeting abstracts are invited, or peer reviewed, before scientists are allowed to present their research at the meeting; others are not. Abstracts represent ongoing research that is being presented for scientific evaluation. Not all preprints and meeting abstracts will make it through the peer-review process, and while many ideas are presented in these formats, not all will be published with a follow-up paper. In scientific meetings, scientists can present their research as a talk or as a poster. Recordings of the talks are sometimes posted on the Internet, while copies of the posters are sometimes uploaded as preprints. Meeting abstracts are often the work of graduate or advanced undergraduate students who are pursuing student research on a topic.

- 8. Sponsored Scholarly Peer-Review Articles with Open Access

- Sponsored scholarly peer-reviewed articles with open access are publications that are selected by an editor and peer-reviewed, but the authors pay to publish the article with the journal if it is accepted. Technically these are advertisements or sponsored content, since money is exchanged between the author or creator of the material and the journal, which makes the article accessible to the public on the journal’s website; however, their intention is not to sell a product. With the open access movement, many journals publish scholarly articles in this fashion, since the published articles are available to the public to read free of charge. However, there is abuse. Beall’s List was established to list predatory or fraudulent scholarly journals that actively solicit scientists and scholars but don’t offer quality peer review and hosting in return for the money paid for publication. Not all sponsored scholarly peer-reviewed articles with open access are problematic, and many are well respected, as many traditional publications offer options to allow public access to an article in exchange for money from the authors. The publication fees for these journals vary greatly between publishers, from a few hundred dollars up to the price of a new car. Large governments and well-funded laboratories tend to publish in the more expensive open access journals, which often offer press releases and help publicize their work on social media.

- 9. Traditional Scholarly Peer-Review Articles behind a Paywall

- Most scholarly scientific peer-reviewed articles are published in traditional journals. These journals earn income only from readers’ subscriptions, rather than from authors paying to publish their works. Authors and reviewers are not paid any money, and there is no exchange of money to publish in these journals. Manuscripts are reviewed by 3 to 5 expert reviewers who are contacted by the editor before any consideration of publication. Copyright is held by the journal, and individual articles can be purchased online. Many university and college libraries have institutional online and print subscriptions to specific journals, so you can borrow a physical copy of the journal from the library if you would like to read the publication. Older back issues are often available for free online when the copyright has expired.

- 10. Traditional Scholarly Peer-Review Articles with Open Access

- These journals are operated by volunteers. Authors submit works for consideration for peer review and are not required to pay any money to the journal if the article is accepted for publication. Editors and reviewers work on a voluntary basis, with web hosting services provided by endowments and donations. Articles are open access: they are available online for free downloading by the general public without any subscription to the journal. Copyright can be retained by the author or journal or distributed under a Creative Commons License. These journals are rarer, because they are operated by a volunteer staff of scientists.

Researchers studying a topic will often limit themselves to sources 5 through 10 as acceptable sources of information, while others may be more restrictive and consult only sources 8 through 10, or only fully peer-reviewed sources. Any source of information can present falsehoods and any source can touch upon truth, but the higher a source sits on this scale, the more verification it had to go through to get published.

Imagine that a loved one is diagnosed with cancer, and you want to learn more about the topic. Most people will consult sources 1 through 3, or 1 through 5, but if you want to learn what medical professionals are reading, sources above 5 are good ones to read, since they are more likely to have been verified by experts than lower-ranking sources of information. The higher the ranking, the more technical the writing and the more specific the information will be. Remember, it is important to consult many sources of information to verify that the information you consume is correct.

Why We Pursue Scientific Discovery

Hope Jahren wrote in her 2016 book Lab Girl, “science has taught me that everything is more complicated than we first assume, and that being able to derive happiness from discovery is a recipe for a beautiful life.” In a modern world where it appears that everything has been discovered and explored, and everything ever known has been written down by someone, it is refreshing to know that there are still scientific mysteries to discover.

For a budding scientist, learning about science can be incredibly daunting. The more you learn about a scientific topic, the more you become overwhelmed by its complexity. Furthermore, any new scientific contribution you make is often met with extreme criticism from scientific experts. Scientists are taught to be overly critical and skeptical of new ideas, and they rarely embrace new contributions easily, especially from someone new to the field. Too frequently young scientists are told by experts what to study and how to study it, but science is still a field of experimentation, observation, and exploration. Remember, science should be fun. The smallest scientific discovery often leads to the largest discoveries.

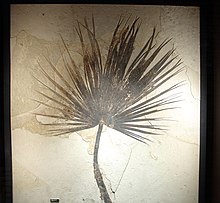

Hope Jahren discovered that hackberry trees have seeds made of calcium carbonate (aragonite). While this is an interesting fact on its own, it opened the door to a better understanding of past climate change, since oxygen isotopes in aragonite crystals can be used to determine the annual growing temperature of the trees that produced them. This discovery allowed scientists to determine the climate whenever hackberry trees dropped their seeds, even in rock layers millions of years old, establishing a long record of growing temperatures extending millions of years into the Earth’s past.

Such discoveries lead to the metaphor that scientists craft tiny keys that unlock giant rooms, and it is not until the door is unlocked that people, even fellow scientists, realize the implications of the years of research used to craft the tiny key. So, it is important to derive happiness from each and every scientific discovery you make, no matter how small or how insignificant it may appear. Science and actively seeking new knowledge and new experiences will be the most rewarding pursuit of your life.


1b. Earth System Science: Gaia or Medea?

Earth as a Puddle

“Imagine a puddle waking up one morning and thinking, ‘This is an interesting world I find myself in — an interesting hole I find myself in — fits me rather neatly, doesn’t it? In fact it fits me staggeringly well, must have been made to have me in it!’ This is such a powerful idea that as the sun rises in the sky and the air heats up and as, gradually, the puddle gets smaller and smaller, frantically hanging on to the notion that everything’s going to be alright, because this world was meant to have him in it, was built to have him in it; so the moment he disappears catches him rather by surprise. I think this may be something we need to be on the watch out for.”

In December 1968, astronaut William A. Anders, aboard Apollo 8, took a picture of the Earth rising above the Moon’s horizon. It captured how small Earth is when viewed from space, and the image had a profound effect on humanity. Our planet, our home, is a rather small, insignificant place when viewed from the great distance of outer space. We collectively live our lives from the perspective of Earth looking outward, but with this picture, taken from above the surface of the Moon looking back at us, we realized that our planet is really just a small place in the universe.

Earth system science was born during this time in history, in the 1960s, when the exploration of the Moon and other planets allowed us to turn the cameras back on Earth and study it from afar. Earth system science is the scientific study of Earth’s component parts and how these components (the solid rocks, liquid oceans, growing life-forms, and gaseous atmosphere) function, interact, and evolve, and how these interactions change over long timescales. The goal of Earth system science is to develop the ability to predict how and when those changes will occur from naturally occurring events, as well as in response to human activity. Using the metaphor of Douglas Adams’ sentient puddle above, we don’t want to be surprised if our little puddle starts to dry up!

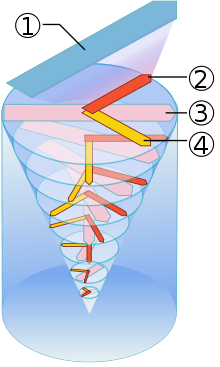

A system is a set of things working together as parts of a mechanism or an interconnecting network, and Earth system science is interested in how these mechanisms work in unison with each other. Scientists interested in these global questions simplify their study into global box models. Global box models are analogies that can be used to help visualize how matter and energy move and change across an entire planet from one place or state to another.

For example, the global hydrological cycle can be illustrated by a simple box model with three boxes, representing the ocean, the atmosphere, and lakes and rivers. Water evaporates from the ocean into the atmosphere, where it forms clouds. Clouds in the atmosphere rain or snow on the surface of the ocean and the land, filling rivers and lakes (and other sources of fresh water), which eventually drain into the ocean. Arrows between the boxes indicate the direction in which water moves between these categories. Flux is the rate at which matter moves from one box into another, and it can change depending on the amount of energy available. Because flux is a rate, it is expressed as an amount per unit of time. In the case of water, this could be the volume of water that falls as rain or snow per year.
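
The three-box water cycle described above can be sketched in a few lines of Python. This is only an illustration: the box contents and flux values are made-up units chosen so that the cycle balances, and the names `step`, `boxes`, and `fluxes` are hypothetical rather than part of any standard model.

```python
# Three-box sketch of the global water cycle.
# All stock and flux values are made-up illustrative units, not measurements.

def step(boxes, fluxes, dt):
    """Advance the model by dt years and return the new box contents."""
    new = dict(boxes)
    for (src, dst), rate in fluxes.items():
        moved = min(rate * dt, new[src])  # a box cannot give more than it holds
        new[src] -= moved
        new[dst] += moved
    return new

boxes = {"ocean": 1000.0, "atmosphere": 10.0, "lakes_rivers": 50.0}

# Flux = amount of water moved per year along each arrow of the model.
fluxes = {
    ("ocean", "atmosphere"): 5.0,         # evaporation
    ("atmosphere", "ocean"): 3.0,         # rain and snow over the ocean
    ("atmosphere", "lakes_rivers"): 2.0,  # rain and snow over land
    ("lakes_rivers", "ocean"): 2.0,       # rivers draining to the sea
}

state = boxes
for year in range(100):
    state = step(state, fluxes, dt=1.0)

print(state)                # contents of each box after 100 years
print(sum(state.values()))  # total water in the model
```

Because every unit of water that leaves one box arrives in another, the total stays the same from year to year. Changing a single flux, for example raising evaporation, makes some boxes grow at the expense of others.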

There are three types of systems that can be modeled: isolated systems, in which neither energy nor matter can enter the model from the outside; closed systems, in which energy, but not matter, can enter the model; and open systems, in which both energy and matter can enter the model from elsewhere.

Global Earth systems are regarded as closed systems since the amount of matter entering Earth from outer space is a tiny fraction of the total matter that makes up the Earth. In contrast, the amount of energy from outer space, in the form of sunlight, is large. Earth is largely open to energy entering the system and closed to matter. (Of course, there are rare exceptions to this when meteorites from outer space strike the Earth.)

In our global hydrological cycle, if our box model were isolated, allowing no energy and no matter to enter the system, there would be no incoming energy for the process of evaporation, and the flux between the ocean and atmosphere would decrease to zero. Isolated systems, with no exchange of energy or matter, will slow down over time and eventually stop functioning, even if they have an internal energy source. We will explore why this happens when we discuss energy. If the box model is open, the total amount of water can change: if ice-covered comets frequently hit the Earth from outer space, there would be a net increase in the total amount of water in the model, and if water were able to escape from the atmosphere into outer space, there would be a net decrease over time. So it is important to determine whether the model is truly closed or is open to both matter and energy.

In box models we also want to explore all possible places where water can be stored. For example, water on land might seep underground into spaces beneath Earth’s surface to form groundwater, so we might add a box to represent groundwater and its interaction with surface water. We might also want to distinguish water locked up in ice and snow by adding another box to represent frozen water resources. You can begin to see how a simple model can, over time, become more complex as we consider all the types of interactions and sources that may exist on the planet.

A reservoir is a term used to describe a box which represents a very large abundance of matter or energy relative to other boxes. For example, the world’s ocean is a reservoir of water because most of the water on the planet is found there. Whether a box counts as a reservoir is relative, and it can change if the amount of energy or matter in the box decreases in relation to other boxes. For example, if energy from the sun increased and the oceans boiled and dried away, the atmosphere would become the major reservoir of water for the planet, since more water would be held in the atmosphere than in the ocean. In a box model, a reservoir is called a sink when more matter is entering the reservoir than is leaving it, while a reservoir is called a source if more matter leaves the box than is entering it. Reservoirs are increasing in size when they are a sink and decreasing in size when they are a source.
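
The sink and source definitions reduce to comparing two numbers, the total flux into a box and the total flux out of it. A minimal sketch follows; the function name `classify_reservoir` and the label "balanced" for the in-between case are assumptions made for illustration.

```python
def classify_reservoir(inflow, outflow):
    """Label a reservoir from its total incoming and outgoing flux."""
    if inflow > outflow:
        return "sink"      # gaining matter, so the reservoir grows
    if inflow < outflow:
        return "source"    # losing matter, so the reservoir shrinks
    return "balanced"      # inflow equals outflow, so the size is steady

print(classify_reservoir(inflow=5.0, outflow=3.0))
print(classify_reservoir(inflow=2.0, outflow=4.0))
```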

Sequestration is a term used when a box becomes isolated and its flux to and from other boxes is very slow. Groundwater, which is water isolated from the ocean and atmosphere, can be considered an example of sequestration. Matter and energy that are sequestered have very long residence times. Residence time is the length of time that energy or matter resides in a box.

Residence times can be very short, such as a few hours when water evaporates from the ocean and then falls back into the ocean as rain; or very long, such as a few thousand years when water is locked up in ice sheets, or even millions of years when it is held underground. Matter that is sequestered is locked up for so long, up to millions of years, that it is effectively taken out of the system.
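
For a box whose contents are roughly steady, a common way to estimate residence time is to divide the amount stored in the box by the flux leaving it. The sketch below assumes that steady state. The volumes and fluxes are rough, rounded figures supplied only for illustration; they are not taken from the text, and `residence_time` is a hypothetical helper.

```python
def residence_time(amount, outflow_per_year):
    """Average time matter spends in a box: amount stored divided by flux out."""
    if outflow_per_year <= 0:
        raise ValueError("flux must be positive")
    return amount / outflow_per_year

# Rough, rounded values (assumed for illustration only):
# amounts in cubic kilometers, fluxes in cubic kilometers per year.
ocean_years = residence_time(1.3e9, 4.0e5)            # ocean water lost to evaporation
atmosphere_days = residence_time(1.3e4, 5.0e5) * 365  # atmospheric water lost as rain and snow

print(f"ocean: about {ocean_years:.0f} years")
print(f"atmosphere: about {atmosphere_days:.1f} days")
```

With these assumed numbers, an average water molecule stays in the ocean for thousands of years but in the atmosphere for only days. That contrast comes from the very different sizes of the two boxes, since the fluxes are similar.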

An example of sequestration is an earth system box model of salt (NaCl), or sodium chloride. Rocks weather in the rain, releasing sodium and chloride, which are transported to the ocean dissolved in water. The ocean is a reservoir of salt, since salt accumulates there through the continued weathering of the land. Edmund Halley (who predicted the return of the comet that was later named after him) proposed in 1715 that the amount of salt in the oceans is related to the age of the Earth. He suggested that salt has been increasing in the world’s ocean over time, and that the ocean will become saltier and saltier into the future. However, this idea was proved false when scientists determined that the world’s oceans have maintained a similar salt content throughout their history. There had to be a mechanism to remove salt from ocean water. The ocean loses salt through the evaporation of shallow seas and landlocked water. The salt left behind when the water in these regions evaporates is buried under sediments and becomes sequestered underground, where it will remain for millions of years. The flux of salt entering the ocean from weathering is similar to the flux of salt leaving the ocean through evaporation and burial. The salt cycle is therefore at an equilibrium, and the oceans maintain a fairly constant salinity. The sequestration of evaporated salt is an important mechanism that removes salt from the ocean. Scientists began to wonder whether Earth exhibits similar mechanisms that maintain an equilibrium through a process of feedbacks.
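
The salt budget can be written as a one-line balance. The symbols here are introduced only for illustration and do not come from Halley or the text: $M$ is the mass of salt in the ocean, $F_{\text{in}}$ is the flux of salt from weathering, and $F_{\text{out}}$ is the flux of salt removed by evaporation and burial.

```latex
\frac{dM}{dt} = F_{\text{in}} - F_{\text{out}}

% Halley's assumption: no removal, so salt only accumulates
F_{\text{out}} = 0 \;\Rightarrow\; M(t) = F_{\text{in}}\, t
\;\Rightarrow\; t = \frac{M}{F_{\text{in}}}

% Equilibrium: removal balances supply, so salinity stays constant
F_{\text{in}} = F_{\text{out}} \;\Rightarrow\; \frac{dM}{dt} = 0
```

Under Halley's assumption, dividing the salt in the ocean by the yearly supply would give an age for the ocean. Once removal is included, the same ratio $M / F_{\text{in}}$ is better read as the residence time of salt in the ocean, assuming the cycle is in equilibrium.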

Equilibrium is a state in which opposing feedbacks are balanced and conditions remain stable. To illustrate this, imagine a classroom which is climate controlled with a thermostat. When the temperature in the room is above 75 degrees Fahrenheit, the air conditioner turns on. When the temperature in the room is below 65 degrees Fahrenheit, the heater turns on. Most of the time the temperature within the classroom will be at equilibrium between 65 and 75 degrees Fahrenheit, as the heater and air conditioner are opposing forces that keep the room in a comfortable temperature range. Imagine now that the room becomes filled with students, whose body heat raises the temperature in the room. When the room reaches 75 degrees Fahrenheit, the air conditioner turns on, cooling the room. The air conditioner is a negative feedback. A negative feedback is an opposing force that reduces fluctuations in a system. In this example, the heat added by the students in the room is opposed by the cooling of the air conditioner turning on.

Imagine that a classmate plays a practical joke and switches the thermostat settings. Now, when the temperature in the room is above 75 degrees Fahrenheit, the heater turns on, and when the temperature in the room is below 65 degrees Fahrenheit, the air conditioner turns on. With this arrangement, when students enter the classroom and the temperature slowly reaches 75 degrees Fahrenheit, the heater turns on! The heater is a positive force acting in the same direction as the heat produced by the students entering the room. A positive feedback occurs when two forces join together in the same direction, which leads to instability of a system over time. The classroom will get hotter and hotter. Even if the students leave the room, the classroom will remain hot, since nothing will turn on the air conditioner. With the heater running, the room will likely never drop down to 65 degrees Fahrenheit. Positive feedbacks are sometimes referred to as vicious cycles. The tipping point in our example is 75 degrees Fahrenheit, the temperature at which the positive feedback (the heater) turns on, resulting in the instability of the system and a miserably hot classroom. Tipping points are to be avoided in systems with positive feedbacks.
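
The two thermostat scenarios can be compared with a small simulation. This is a sketch under stated assumptions: the function `simulate`, the starting temperature of 70 degrees, and the heating and cooling amounts per minute are all invented for illustration. Only the 65 and 75 degree thresholds come from the example above.

```python
def simulate(wiring, start_temp=70.0, student_heat=0.5, unit_power=1.0, minutes=60):
    """Return the room temperature (degrees Fahrenheit) at the end of each minute.

    wiring="normal":  air conditioner above 75, heater below 65 (negative feedback).
    wiring="swapped": heater above 75, air conditioner below 65 (positive feedback).
    """
    temp = start_temp
    history = []
    for _ in range(minutes):
        temp += student_heat  # body heat from the students warms the room
        if temp > 75.0:
            # normal wiring cools the room; swapped wiring heats it further
            temp += -unit_power if wiring == "normal" else unit_power
        elif temp < 65.0:
            # normal wiring heats the room; swapped wiring cools it further
            temp += unit_power if wiring == "normal" else -unit_power
        history.append(temp)
    return history

normal = simulate("normal")
swapped = simulate("swapped")

print("normal wiring, hottest minute:", max(normal))
print("swapped wiring, final temperature:", swapped[-1])
```

With normal wiring the temperature hovers near 75 degrees, because the air conditioner opposes the students' heat. With swapped wiring the temperature keeps climbing once it crosses 75 degrees, because the heater adds to the students' heat instead of opposing it.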

Gaia or Medea?